Statistical Mechanics for Molecular Dynamics Ensembles: From Theory to Biomedical Applications

Abstract

This article provides a comprehensive guide to the theory and application of statistical mechanics for generating and validating molecular dynamics ensembles, tailored for researchers and drug development professionals. It covers foundational principles connecting thermodynamics and statistical mechanics to MD, explores advanced methodological frameworks including enhanced sampling and machine-learning potentials, addresses critical troubleshooting for sampling and force field inaccuracies, and establishes robust validation protocols using experimental data. By synthesizing current best practices and emerging trends, this resource aims to empower scientists to generate more reliable, predictive molecular ensembles for understanding biomolecular function and accelerating therapeutic discovery.

Theoretical Foundations: Connecting Statistical Mechanics to Molecular Dynamics

The Potential Energy Surface (PES) is a foundational concept in computational chemistry and physics, providing a mathematical representation of the energy of a molecular system as a function of the positions of its constituent atoms. [1] [2] It forms the critical bridge between the quantum mechanical description of electronic structure and the classical modeling of nuclear motion in molecular dynamics (MD) simulations. Within the framework of statistical mechanics for molecular dynamics ensembles, the PES is the function that determines the forces governing the evolution of a system through its phase space. The energy landscape metaphor is apt: for a system with multiple degrees of freedom, the potential energy defines a topography of "hills," "valleys," and "passes," where minima correspond to stable molecular configurations, and saddle points represent transition states for chemical reactions. [1] [2] The accuracy of any MD simulation, and consequently the thermodynamic and kinetic properties derived from it, is inherently and completely dependent on the fidelity of the underlying PES.

Quantum Mechanical Foundations of the PES

The Born-Oppenheimer Approximation

The theoretical justification for separating nuclear motion from electronic motion, and thus for the very concept of a PES, is the Born-Oppenheimer approximation. Because nuclei are thousands of times more massive than electrons, they move on a much slower timescale. This allows one to solve the electronic Schrödinger equation for a fixed set of nuclear positions, \( \mathbf{R} \):

\[ \hat{H}_{\text{elec}} \psi_{\text{elec}} = E_{\text{elec}} \psi_{\text{elec}} \]

Here, \( \hat{H}_{\text{elec}} \) is the electronic Hamiltonian, \( \psi_{\text{elec}} \) is the electronic wavefunction, and \( E_{\text{elec}} \) is the electronic energy. The potential energy surface is then defined as the sum of this electronic energy and the nuclear repulsion energy, which is constant for a given configuration: \( E(\mathbf{R}) = E_{\text{elec}}(\mathbf{R}) + E_{\text{nuc-nuc}}(\mathbf{R}) \). This function \( E(\mathbf{R}) \) is the PES upon which the nuclei move. [1]
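Because the nuclei are held fixed, the nuclear repulsion term is a plain classical Coulomb sum, \( E_{\text{nuc-nuc}} = \sum_{i<j} Z_i Z_j / R_{ij} \) in atomic units. The sketch below evaluates only that term; the electronic energy would come from a quantum chemistry code and is not shown. The helper name `nuclear_repulsion` is our own, and H₂ at 1.4 bohr is used purely as an example geometry.

```python
import numpy as np

def nuclear_repulsion(charges, coords_bohr):
    """Return E_nuc-nuc = sum_{i<j} Z_i Z_j / R_ij in hartree (atomic units).

    charges: sequence of nuclear charges Z_i
    coords_bohr: (n_atoms, 3) nuclear positions in bohr
    """
    z = np.asarray(charges, dtype=float)
    r = np.asarray(coords_bohr, dtype=float)
    energy = 0.0
    for i in range(len(z)):
        for j in range(i + 1, len(z)):
            energy += z[i] * z[j] / np.linalg.norm(r[i] - r[j])
    return energy

# H2 with a bond length of 1.4 bohr, close to its equilibrium geometry.
e_nn = nuclear_repulsion([1, 1], [[0.0, 0.0, 0.0], [0.0, 0.0, 1.4]])
print(f"{e_nn:.6f} hartree")  # 1 / 1.4 = 0.714286
```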

Computational Methods for PES Calculation

Calculating the PES involves computing \( E(\mathbf{R}) \) for a vast number of nuclear configurations. The choice of quantum chemical method involves a trade-off between computational cost and accuracy.

- Ab Initio Methods: These methods, such as those used to create the modern PESs for N₂ + N₂ collisions, [3] attempt to solve the electronic Schrödinger equation from first principles, using no empirical data. They range from highly accurate but expensive methods like Coupled Cluster (e.g., CCSD(T)) to more approximate Density Functional Theory (DFT) methods. The NASA Ames and University of Minnesota N₄ PESs are examples of ab initio surfaces fitted to thousands of such quantum chemistry calculations. [3]

- Semi-Empirical Methods: These methods simplify the quantum mechanical equations and parameterize them against experimental data to reduce computational cost. A classic example is the London-Eyring-Polanyi-Sato (LEPS) potential, an empirical surface based on valence bond theory. [3] [2] While computationally efficient, the accuracy of semi-empirical surfaces can be limited, as seen in the differing topography (e.g., collinear vs. bent transition state) between the LEPS and ab initio surfaces for N₂ + N collisions. [3]

Table 1: Comparison of PES Calculation Methods

| Method Type | Theoretical Basis | Advantages | Limitations | Example Applications |
|---|---|---|---|---|
| Ab Initio | First-principles solution of the electronic Schrödinger equation. | High accuracy, no empirical parameters required. | Computationally expensive, limits system size. | N₂ + N₂ PES (NASA Ames, Univ. of Minnesota) [3] |
| Semi-Empirical | Simplified QM equations parameterized with experimental data. | Computationally efficient, allows larger systems. | Lower accuracy, transferability can be poor. | LEPS potential for H₃ and N₃ [3] [2] |

From the PES to Classical Molecular Dynamics

The Force Field Approximation

In classical MD, the explicit quantum mechanical PES is replaced by an analytical molecular mechanics force field. This is a critical step that makes simulating large biomolecules feasible. The force field is a mathematical approximation of the true PES, typically decomposed into terms for bonded and non-bonded interactions:

\[ E_{FF}(\mathbf{R}) = \sum_{\text{bonds}} K_r (r - r_{eq})^2 + \sum_{\text{angles}} K_\theta (\theta - \theta_{eq})^2 + \sum_{\text{dihedrals}} \frac{V_n}{2}\left[1 + \cos(n\phi - \gamma)\right] + \sum_{i<j} \left[ \frac{A_{ij}}{R_{ij}^{12}} - \frac{B_{ij}}{R_{ij}^{6}} + \frac{q_i q_j}{\epsilon R_{ij}} \right] \]

The parameters in these equations (e.g., \( K_r \), \( r_{eq} \), \( A_{ij} \), \( q_i \)) are often derived from high-level ab initio calculations on smaller model systems or fit to experimental data. The quality of an MD simulation is thus directly tied to the quality of this approximate PES (the force field). [4] [5]
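A minimal sketch of how the terms of such a force field can be evaluated, assuming reduced units and the same functional form as the equation above (harmonic terms without a factor of 1/2). The function names are hypothetical and do not correspond to any MD package; exclusion lists, 1-4 scaling, and cutoffs, which production force fields require, are deliberately left out.

```python
import numpy as np

def bond_energy(r, k_r, r_eq):
    """Harmonic bond term K_r (r - r_eq)^2 (no factor 1/2 in this convention)."""
    return k_r * (r - r_eq) ** 2

def angle_energy(theta, k_theta, theta_eq):
    """Harmonic angle term K_theta (theta - theta_eq)^2, angles in radians."""
    return k_theta * (theta - theta_eq) ** 2

def dihedral_energy(phi, v_n, n, gamma):
    """Periodic torsion term (V_n / 2) [1 + cos(n phi - gamma)]."""
    return 0.5 * v_n * (1.0 + np.cos(n * phi - gamma))

def nonbonded_energy(coords, a, b, q, epsilon=1.0):
    """Sum over pairs i < j of A_ij/R^12 - B_ij/R^6 + q_i q_j / (epsilon R).

    coords: (n, 3) positions; a, b: (n, n) pair-parameter matrices; q: (n,) charges.
    Exclusions of bonded neighbours and cutoffs are omitted in this sketch.
    """
    coords = np.asarray(coords, dtype=float)
    energy = 0.0
    for i in range(len(coords)):
        for j in range(i + 1, len(coords)):
            r = np.linalg.norm(coords[i] - coords[j])
            energy += a[i, j] / r**12 - b[i, j] / r**6 + q[i] * q[j] / (epsilon * r)
    return energy

# Two uncharged sites with A = 1, B = 2 sit at the Lennard-Jones minimum
# r = (2A/B)^(1/6) = 1, where the pair energy is -B^2 / (4A) = -1.
e_lj = nonbonded_energy([[0, 0, 0], [1, 0, 0]], np.ones((2, 2)), 2.0 * np.ones((2, 2)), np.zeros(2))
```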

The Statistical Mechanical Connection

MD simulations generate a trajectory of the system through phase space (the combined space of all atomic positions and momenta). According to the ergodic hypothesis of statistical mechanics, for a sufficiently long trajectory the time average of a property equals the ensemble average over a large number of copies of the system. For the canonical (NVT) ensemble, the probability density of finding the system in configuration \( \mathbf{R} \), with potential energy \( E(\mathbf{R}) \), is given by the Boltzmann distribution:

\[ P(\mathbf{R}) \propto \exp\left(-E(\mathbf{R}) / k_B T\right) \]

The PES, \( E(\mathbf{R}) \), is the key determinant of this probability and therefore of all thermodynamic properties derived from the ensemble, such as free energies, entropies, and equilibrium constants. [5] Free energy calculation methods, such as thermodynamic perturbation or umbrella sampling, are essentially sophisticated protocols for sampling the PES to compute these ensemble averages. [5]
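Free energy perturbation makes the link between Boltzmann sampling and free energies concrete: the Zwanzig relation \( \Delta F = -k_B T \ln \langle \exp(-\Delta U / k_B T) \rangle_0 \) averages over configurations drawn from the reference state. The sketch below is a minimal illustration under simplifying assumptions: two one-dimensional harmonic wells, exact Boltzmann samples standing in for an MD trajectory, and a hypothetical helper `fep_zwanzig`. For this toy case the exact answer, \( \Delta F = (k_B T / 2) \ln(k_1 / k_0) \), is known, so the estimate can be checked.

```python
import numpy as np

def fep_zwanzig(delta_u, kT):
    """Zwanzig free energy perturbation: dF = -kT ln < exp(-dU / kT) >_0.

    delta_u: samples of U_1(x) - U_0(x) on configurations drawn from state 0.
    A log-sum-exp style shift keeps the exponentials numerically stable.
    """
    x = -np.asarray(delta_u, dtype=float) / kT
    shift = x.max()
    return -kT * (shift + np.log(np.mean(np.exp(x - shift))))

# Toy check: U_i = k_i x^2 / 2 with k0 = 1, k1 = 2 and kT = 1 (reduced units).
rng = np.random.default_rng(42)
kT, k0, k1 = 1.0, 1.0, 2.0
x = rng.normal(0.0, np.sqrt(kT / k0), size=200_000)  # exact Boltzmann samples of state 0
delta_u = 0.5 * (k1 - k0) * x**2
df_est = fep_zwanzig(delta_u, kT)
df_exact = 0.5 * kT * np.log(k1 / k0)
```

In real systems the exponential average converges poorly when the two states overlap little, which is why practical protocols usually split a transformation into intermediate windows.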

Practical Protocols: Constructing and Validating a PES

The process of moving from a quantum mechanical description to a validated classical simulation involves several critical stages, as outlined in the two protocols below.

Protocol 1: Ab Initio PES Construction for Reaction Dynamics

This protocol is used for constructing highly accurate PESs for specific chemical reactions, often in gas phase.

- Configuration Sampling: Select a representative grid of molecular geometries (nuclear configurations, \( \mathbf{R} \)) spanning reactants, products, transition states, and relevant intermediate structures. For an atom-diatom reaction like H + H₂ in a collinear geometry, this involves varying the two bond lengths (a third coordinate, the bond angle, is needed for non-collinear geometries). [2] For N₂ + N₂, six internal coordinates are required. [3]

- Electronic Energy Calculation: For each geometry on the grid, perform a high-level ab initio quantum chemistry calculation (e.g., CCSD(T) with a large basis set) to compute the electronic energy, \( E_{\text{elec}}(\mathbf{R}) \). [3]

- Analytical Representation: Fit a smooth, analytical function (a process requiring non-linear fitting in multiple dimensions) to the computed energies. This function must allow for rapid evaluation of energy and forces for any arbitrary geometry during dynamics calculations. [3]

- Dynamics and Validation: Use the analytical PES in quasiclassical trajectory (QCT) calculations to compute reaction cross-sections and thermal rate coefficients. Validate the results by comparing them with experimental data from shock-tube or other kinetic experiments. [3]
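The first three steps can be illustrated on a one-dimensional cut. In this sketch, which is an assumption-laden toy and not the procedure used for the N₄ surfaces, a Morse curve with H₂-like parameters plus small noise stands in for the ab initio energies, and the analytical representation is obtained by separable non-linear least squares: the two non-linear parameters are scanned on a grid while the well depth, which enters linearly, is solved in closed form. All function names are hypothetical.

```python
import numpy as np

def morse(r, d_e, a, r_e):
    """Morse potential d_e (1 - exp(-a (r - r_e)))^2, zero at the minimum r_e."""
    return d_e * (1.0 - np.exp(-a * (r - r_e))) ** 2

def morse_force(r, d_e, a, r_e):
    """Analytical force -dV/dr, cheap to evaluate at any geometry during dynamics."""
    x = np.exp(-a * (r - r_e))
    return -2.0 * d_e * a * (1.0 - x) * x

def fit_morse(r, energies, a_grid, re_grid):
    """Separable least squares: grid search over (a, r_e); d_e is linear,
    so it is obtained in closed form at every grid point."""
    best = (np.inf, None, None, None)
    for a in a_grid:
        for r_e in re_grid:
            basis = morse(r, 1.0, a, r_e)
            d_e = basis @ energies / (basis @ basis)
            sse = np.sum((energies - d_e * basis) ** 2)
            if sse < best[0]:
                best = (sse, d_e, a, r_e)
    return best[1:]

# 1) Configuration sampling: a grid of bond lengths (bohr).
r_grid = np.linspace(0.8, 5.0, 60)
# 2) Energy calculation: illustrative stand-in data (hartree), not real ab initio energies.
rng = np.random.default_rng(0)
e_ref = morse(r_grid, 0.17, 1.0, 1.4) + rng.normal(0.0, 1e-4, r_grid.size)
# 3) Analytical representation: fit the three Morse parameters.
d_e, a, r_e = fit_morse(r_grid, e_ref, np.linspace(0.8, 1.2, 41), np.linspace(1.2, 1.6, 41))
```

A real multi-dimensional fit would use a more flexible functional form and a proper non-linear optimizer, but the structure is the same: sampled geometries, reference energies, and an analytical function that returns both energies and forces cheaply.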

Protocol 2: Force Field Refinement for Biomolecular Simulation

This protocol is common in studies of proteins and other soft-matter systems, where the focus is on conformational dynamics rather than bond breaking/forming.

- Initial System Setup: Obtain high-resolution structures of individual domains (e.g., from NMR or crystallography). Use modeling software like Modeller to add missing linkers and construct a full-length initial structure. [4]

- Coarse-Grained (CG) MD: Run initial MD simulations using a CG force field (e.g., Martini) to enhance conformational sampling. In the case of TIA-1, this involved applying an elastic network within folded domains but leaving inter-domain linkers fully flexible. [4]

- Comparison with Experiment: Calculate experimental observables from the simulation trajectory. For small-angle X-ray scattering (SAXS), this requires "backmapping" CG structures to all-atom resolution and then computing the theoretical scattering profile. [4]

- Force Field Refinement: Identify systematic errors in the force field. For example, the standard Martini force field was found to produce overly compact conformations for TIA-1. A key refinement was to strengthen the protein-water interaction parameter, which improved agreement with SAXS data. [4]

- Bayesian/Maximum Entropy (BME) Reweighting: As a final step, use the BME method to reweight the simulation ensemble to achieve full consistency with the experimental data, providing a robust description of the conformational landscape. [4]
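The reweighting step can be sketched as a maximum-entropy problem: find frame weights that stay as close as possible to the prior ensemble while matching experimental averages within their uncertainties. The code below minimizes a convex dual function of Lagrange multipliers by plain gradient descent, in the spirit of the BME method; it is a simplified illustration, not the reference BME implementation or its API, and `maxent_reweight`, the toy observable, and the parameter values are all hypothetical.

```python
import numpy as np

def maxent_reweight(calc, f_exp, sigma, theta, lr=1.0, n_steps=2000):
    """Hypothetical helper: BME-style maximum-entropy reweighting.

    Minimizes the convex dual
        Gamma(lam) = log sum_j w0_j exp(-calc_j . lam) + lam . f_exp
                     + (theta / 2) sum_k (lam_k sigma_k)^2
    by gradient descent from uniform prior weights w0.
    calc: (n_frames, n_obs) observables back-calculated for each frame
    f_exp, sigma: (n_obs,) experimental averages and their uncertainties
    theta: trust in the prior ensemble (smaller theta -> fit the data more closely)
    """
    calc = np.asarray(calc, dtype=float)
    lam = np.zeros(calc.shape[1])

    def weights(lam):
        logw = -calc @ lam              # uniform prior: constant log w0 dropped
        w = np.exp(logw - logw.max())   # shift for numerical stability
        return w / w.sum()

    for _ in range(n_steps):
        w = weights(lam)
        grad = f_exp - w @ calc + theta * sigma**2 * lam  # dGamma/dlam
        lam -= lr * grad
    return weights(lam), lam

# Toy ensemble: one observable per frame (e.g. a radius of gyration in nm) with
# a prior average of 2.0; the hypothetical "experiment" reports 2.2.
rg = np.linspace(1.5, 2.5, 101).reshape(-1, 1)
w, lam = maxent_reweight(rg, f_exp=np.array([2.2]), sigma=np.array([0.05]), theta=1.0)
n_eff = 1.0 / np.sum(w**2)  # Kish effective sample size of the reweighted ensemble
```

The parameter theta sets how strongly the prior ensemble is trusted relative to the data; a sharp drop in `n_eff` is a useful warning that the reweighting relies on only a few frames.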

Table 2: Key Reagents and Computational Tools for PES/MD Research

| Item / Software | Function / Role | Specific Application Example |
|---|---|---|
| Quantum Chemistry Software | Computes electronic energies for nuclear configurations. | Generating the ab initio data points for the N₄ PES. [3] |
| GROMACS | Molecular dynamics simulation package. | Running coarse-grained and all-atom MD simulations. [4] |
| PLUMED | Plugin for enhanced sampling and free energy calculations. | Calculating collective variables like radius of gyration and inter-domain distances. [4] |
| Martini Force Field | Coarse-grained potential for accelerated dynamics. | Simulating large, flexible proteins like TIA-1. [4] |
| Bayesian/Max. Entropy (BME) | Data integration and ensemble reweighting algorithm. | Refining MD ensembles to match SAXS/SANS data. [4] |
| Modeller | Homology and comparative protein structure modeling. | Adding missing residues and linkers to initial protein structures. [4] |

Case Studies in PES Application

High-Temperature Nitrogen Dissociation

This case highlights the direct PES-to-rate-coefficient pipeline for aerothermodynamics. Comparisons between different PESs for N₂ + N (NASA Ames ab initio vs. Laganà's empirical LEPS) and for N₂ + N₂ (NASA Ames vs. University of Minnesota, both ab initio) revealed significant topological differences. [3] For instance, the LEPS surface features a collinear transition state with a 36 kcal/mol barrier, while the ab initio surfaces show a bent transition state with a higher barrier (44-47 kcal/mol). [3] Despite these differences, the QCT-derived thermal dissociation rate coefficients in the 10,000-30,000 K range agreed closely across the different surfaces. However, under non-equilibrium conditions relevant to hypersonic shocks, quasi-steady-state (QSS) rate coefficients were significantly lower than the thermal ones, demonstrating the critical importance of the PES in modeling non-equilibrium phenomena. [3]
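A crude back-of-the-envelope calculation, which is our own illustration and not part of the cited study, suggests one reason why barrier differences of this size matter less at these temperatures: the ratio of Boltzmann factors \( \exp(-\Delta E_b / RT) \) for an 8 kcal/mol barrier difference (36 vs. 44 kcal/mol) moves toward 1 as T rises. It ignores prefactors, recrossing, and the full surface topology, so it says nothing about the non-equilibrium QSS behavior.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol K)

def boltzmann_ratio(barrier_a, barrier_b, temperature):
    """exp(-(E_b - E_a) / (R T)) for two barrier heights given in kcal/mol."""
    return math.exp(-(barrier_b - barrier_a) / (R_KCAL * temperature))

for t in (10_000, 30_000):
    # LEPS barrier (36 kcal/mol) vs. the lower end of the ab initio range (44 kcal/mol)
    print(t, round(boltzmann_ratio(36.0, 44.0, t), 3))
```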

Conformational Dynamics of Multi-Domain Proteins

The study of the three-domain protein TIA-1 exemplifies the force field refinement approach. The research combined CG-MD with SAXS and small-angle neutron scattering (SANS) data. The initial Martini force field produced overly compact conformations and poor agreement with the SAXS data, highlighting a force field limitation. [4] Strengthening the protein-water interactions markedly improved the agreement. Furthermore, reweighting the simulation ensemble against the SAXS data with the BME approach also improved the fit to the SANS data, which were not used in the reweighting, validating the refined conformational ensemble. [4] This demonstrates a synergistic loop in which MD provides an atomic-resolution model and experiments provide the constraints needed to refine the effective PES governing the dynamics.
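Compactness of this kind is commonly quantified with the radius of gyration, one of the collective variables listed for PLUMED in the table of computational tools above. A minimal NumPy sketch for a single frame, assuming coordinates as an (n, 3) array; the helper name is ours, and per-frame values from a trajectory could then be compared between force-field variants.

```python
import numpy as np

def radius_of_gyration(coords, masses=None):
    """Mass-weighted radius of gyration sqrt(sum m_i |r_i - r_com|^2 / sum m_i).

    coords: (n, 3) positions of one frame; masses: (n,) or None for equal masses.
    """
    coords = np.asarray(coords, dtype=float)
    m = np.ones(len(coords)) if masses is None else np.asarray(masses, dtype=float)
    com = m @ coords / m.sum()                      # centre of mass
    sq_dist = np.sum((coords - com) ** 2, axis=1)   # squared distance of each site to the COM
    return np.sqrt(m @ sq_dist / m.sum())
```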

The derivation of the Potential Energy Surface from quantum mechanics and its careful approximation for classical Molecular Dynamics is a cornerstone of modern computational molecular science. The PES is the single source of truth in a simulation, dictating the forces and hence the statistical mechanical ensemble. As the case studies show, the approach is versatile, enabling everything from the calculation of high-temperature reaction rates for atmospheric entry to the resolution of conformational ensembles of flexible proteins for drug development. Current challenges remain in the accurate and efficient representation of the PES, particularly for large, complex systems involving bond breaking, electronic degeneracies, and strong solvent interactions. Future progress will rely on continued advances in quantum chemical methods, machine learning approaches for representing PESs, and robust statistical mechanical frameworks for integrating simulation with experimental data.

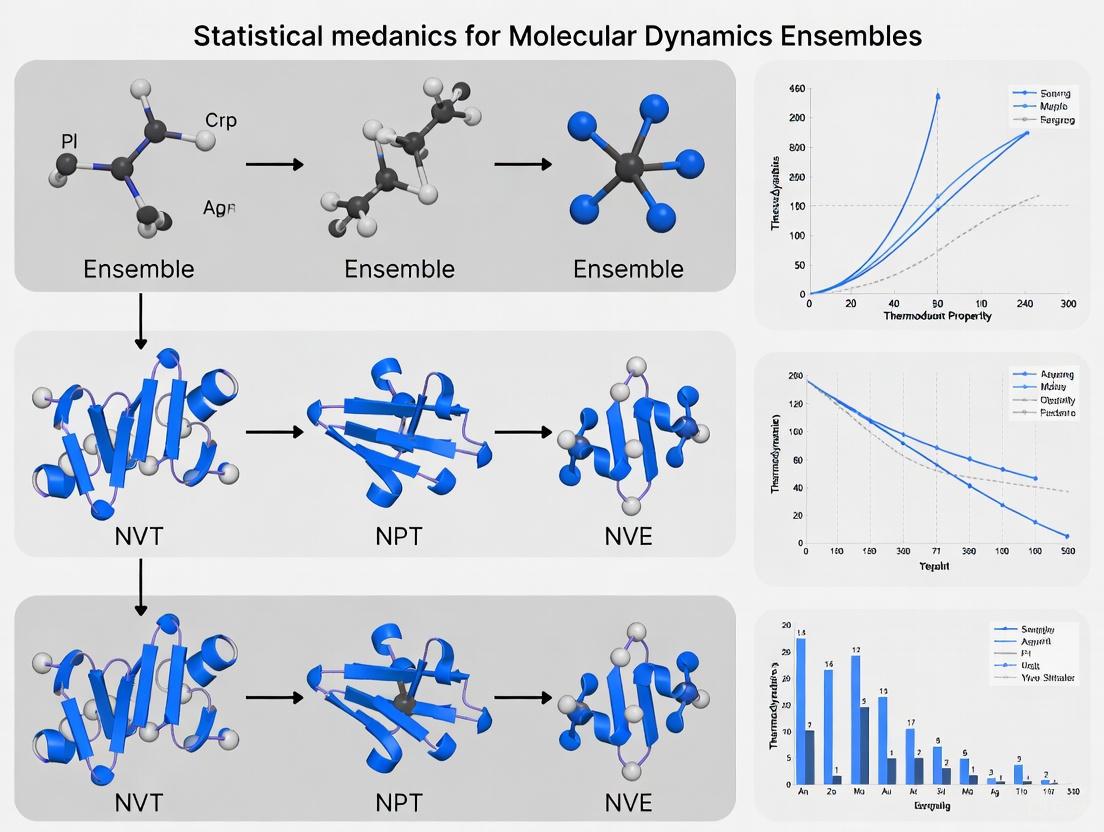

Core Statistical Ensembles (NVE, NVT, NPT) and Their Thermodynamic Connections

In the field of molecular dynamics (MD) simulations, statistical ensembles provide the fundamental theoretical framework that defines the thermodynamic conditions under which a system is studied. An ensemble represents a collection of all possible microstates of a system that are consistent with a set of macroscopic constraints, such as constant energy, temperature, or pressure. The concept originated from the pioneering work of Boltzmann and Gibbs in the 19th century, who established that macroscopic phenomena result from the statistical behavior of microscopic particles [6]. This theoretical foundation enables researchers to connect the microscopic world of atoms and molecules, governed by Newton's equations of motion, to macroscopic thermodynamic observables that can be measured experimentally [6].

The transition from theoretical statistical mechanics to practical molecular simulation represents a crucial bridge in computational science. While Boltzmann postulated that macroscopic observations result from time averages of microscopic events along particle trajectories, Gibbs further developed this concept by demonstrating that this time average could be replaced by an ensemble average: a collection of system states distributed according to a specific probability density [6]. The advent of computers in the 1940s enabled scientists to numerically solve the equations of statistical mechanics, leading to the development of MD as a powerful computational technique that applies Newton's laws of motion to model and analyze the behavior of atoms and molecules over time [6] [7].

Molecular Dynamics simulations have become indispensable across numerous scientific disciplines, including drug discovery, materials science, and nanotechnology, by providing atomic-level insights into molecular behavior that are difficult to obtain through experimental methods alone [7]. The core principle of MD involves calculating forces between particles using potential energy functions (force fields) that describe atomic interactions, including bond stretching, angle bending, van der Waals forces, and electrostatic interactions [7]. The choice of statistical ensemble directly determines which thermodynamic properties can be naturally extracted from simulations and influences the system's sampling of phase space, making ensemble selection a critical decision in any MD study [8].

Theoretical Foundation and Thermodynamic Connections

Statistical Mechanics Framework

The theoretical framework for understanding statistical ensembles begins with the fundamental laws governing nuclei and electrons in a system. For condensed matter systems at low energies—typical conditions for most MD simulations—we can often treat nuclei as classical particles interacting via an effective potential derived from the electronic ground state [6]. The connection between thermodynamics and statistical mechanics is established through the concept of ensembles, which provide a systematic procedure for calculating thermodynamic quantities that otherwise could only be obtained experimentally [6].

The core idea is that macroscopic thermodynamic properties emerge from averaging over microscopic states. In the language of statistical mechanics, each ensemble corresponds to a characteristic thermodynamic potential. The microcanonical ensemble (NVE) connects to the entropy, S = k_B ln Ω(N, V, E), or equivalently to the internal energy U(S, V, N); the canonical ensemble (NVT) connects to the Helmholtz free energy (F = U - TS); and the isothermal-isobaric ensemble (NPT) connects to the Gibbs free energy (G = U + PV - TS) [8]. This connection to free energies is particularly important because free energies, not energies alone, determine the equilibrium state of a system at finite temperature.
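For a system with a handful of discrete microstates, these canonical relations can be checked directly: \( F = -k_B T \ln Z \), \( U = \langle E \rangle \), and the Gibbs entropy \( S = -k_B \sum_i p_i \ln p_i \) satisfy \( F = U - TS \). The sketch below assumes reduced units with \( k_B = 1 \) and a two-level system; `canonical_thermo` is a hypothetical helper name.

```python
import numpy as np

def canonical_thermo(energies, kT):
    """Return (F, U, S) for discrete microstate energies in the canonical ensemble.

    F = -kT ln Z, U = <E>, S = -sum_i p_i ln p_i (entropy in units of k_B).
    """
    e = np.asarray(energies, dtype=float)
    e_min = e.min()
    boltz = np.exp(-(e - e_min) / kT)   # shifted Boltzmann factors avoid overflow
    z_shifted = boltz.sum()             # Z = exp(-e_min / kT) * z_shifted
    p = boltz / z_shifted
    free_energy = e_min - kT * np.log(z_shifted)
    internal_energy = p @ e
    nz = p > 0                          # skip states whose probability underflowed
    entropy = -np.sum(p[nz] * np.log(p[nz]))
    return free_energy, internal_energy, entropy

# Two-level system with energies 0 and 1 at kT = 0.5 (reduced units).
F, U, S = canonical_thermo([0.0, 1.0], kT=0.5)
```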

While in the thermodynamic limit (for an infinite system size) ensembles are theoretically equivalent away from phase transitions, this equivalence breaks down for the finite systems typically simulated in MD [8]. This fundamental limitation makes the development and understanding of different ensembles not merely academically interesting but essential for obtaining physically meaningful results from molecular simulations.

Equations of Motion and Thermodynamic Derivatives

The mathematical implementation of ensembles in MD involves modifying Newton's equations of motion to include thermostatting and barostatting mechanisms. In the microcanonical ensemble (NVE), the exact Newtonian dynamics conserves the total energy; numerical integrators conserve it only approximately. For other ensembles, extended-system (extended Lagrangian) formulations introduce additional degrees of freedom that act as heat baths or pressure reservoirs, while simpler schemes rescale or randomize particle velocities or rescale the simulation box.

The thermodynamic derivatives that connect microscopic simulations to macroscopic observables can be expressed through statistical mechanical relations. For instance, in the NVT ensemble, the constant-volume heat capacity can be calculated from energy fluctuations through the relation \( C_V = (\partial U / \partial T)_V = (\langle E^2 \rangle - \langle E \rangle^2) / (k_B T^2) \), where E is the instantaneous total energy, \( k_B \) is Boltzmann's constant, and the angle brackets denote ensemble averages. Similar fluctuation formulas exist for other thermodynamic properties, including the isothermal compressibility from volume fluctuations in the NPT ensemble, \( \kappa_T = (\langle V^2 \rangle - \langle V \rangle^2) / (k_B T \langle V \rangle) \), and the thermal expansion coefficient from cross-correlations between enthalpy and volume fluctuations.
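The fluctuation formulas translate directly into code. This sketch assumes reduced units (\( k_B = 1 \)) and, in place of an MD trajectory, uses independent samples of the total energy of ten classical one-dimensional harmonic oscillators, whose canonical distribution is a Gamma distribution with exact \( C_V = 10\,k_B \). The helper names are hypothetical.

```python
import numpy as np

def heat_capacity_nvt(energies, kT, k_b=1.0):
    """C_V = (<E^2> - <E>^2) / (k_B T^2) from canonical energy samples.

    With kT = k_B T, the denominator k_B T^2 equals kT^2 / k_B.
    """
    e = np.asarray(energies, dtype=float)
    return e.var() * k_b / kT**2

def isothermal_compressibility_npt(volumes, kT):
    """kappa_T = (<V^2> - <V>^2) / (k_B T <V>) from isothermal-isobaric volume samples."""
    v = np.asarray(volumes, dtype=float)
    return v.var() / (kT * v.mean())

rng = np.random.default_rng(1)
n_osc, kT = 10, 2.0
# Total energy of n_osc independent classical 1D oscillators: Gamma(shape=n_osc, scale=kT).
e_samples = rng.gamma(shape=n_osc, scale=kT, size=200_000)
cv = heat_capacity_nvt(e_samples, kT)
```

Successive MD frames are correlated, so in practice the uncertainty of such fluctuation estimates should be assessed with block averaging or a similar method.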

Table 1: Fundamental Thermodynamic Connections of Core Ensembles

| Ensemble | Fixed Parameters | Control Variables | Connected Thermodynamic Potential | Natural Experimental Analog |
|---|---|---|---|---|
| NVE (Microcanonical) | Number of particles (N), Volume (V), Energy (E) | None (isolated system) | Entropy, S = k_B ln Ω (equivalently, Internal Energy U) | Adiabatic processes, gas-phase reactions |
| NVT (Canonical) | Number of particles (N), Volume (V), Temperature (T) | Temperature via thermostats | Helmholtz Free Energy (F = U - TS) | Systems in thermal contact with reservoir |
| NPT (Isothermal-Isobaric) | Number of particles (N), Pressure (P), Temperature (T) | Temperature via thermostats, Pressure via barostats | Gibbs Free Energy (G = U + PV - TS) | Most bench experiments, biological systems |

The Core Ensembles: Definitions, Implementations, and Applications

Microcanonical Ensemble (NVE)

The NVE ensemble, also known as the microcanonical ensemble, describes isolated systems where the number of particles (N), the system volume (V), and the total energy (E) remain constant. This ensemble represents the most fundamental approach to molecular dynamics, as it directly implements Newton's equations of motion without additional control mechanisms [7]. In the NVE ensemble, the system evolves conservatively according to the Hamiltonian H(p,q) = E, where p and q represent the momenta and coordinates of all particles [6].

From an implementation perspective, NVE simulations use numerical integrators such as the Verlet algorithm or related schemes (Velocity Verlet, Leapfrog) to propagate particle positions and velocities forward in time with discrete time steps typically on the order of femtoseconds (10⁻¹⁵ s) [7]. Because these integrators are time-reversible and symplectic, the total energy fluctuates around its initial value instead of drifting systematically, which makes them well suited to NVE simulations. In practice, however, an overly large time step, force truncation at cutoffs, constraint tolerances, and round-off errors can still cause energy drift over long simulations, so the total energy should be monitored.
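A minimal velocity Verlet sketch for a one-dimensional harmonic oscillator in reduced units (\( k = m = 1 \)); it is a toy integrator with hypothetical names, not an MD engine. For a smooth potential like this, the total energy oscillates within a small bounded range with no systematic drift, which can be checked by comparing early and late averages.

```python
import numpy as np

def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Integrate Newton's equations with velocity Verlet; return position and velocity arrays."""
    xs, vs = [x], [v]
    a = force(x) / mass
    for _ in range(n_steps):
        x = x + v * dt + 0.5 * a * dt**2    # full-step position update
        a_new = force(x) / mass             # forces at the new position
        v = v + 0.5 * (a + a_new) * dt      # velocity update with averaged acceleration
        a = a_new
        xs.append(x)
        vs.append(v)
    return np.array(xs), np.array(vs)

k, m, dt = 1.0, 1.0, 0.05
xs, vs = velocity_verlet(1.0, 0.0, lambda x: -k * x, m, dt, n_steps=20_000)
energy = 0.5 * m * vs**2 + 0.5 * k * xs**2
rel_dev = np.max(np.abs(energy - energy[0])) / energy[0]
drift = energy[-1000:].mean() - energy[:1000].mean()
```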

The NVE ensemble is particularly suited for studying gas-phase reactions, isolated molecular systems, and fundamental investigations where energy conservation is paramount [8]. It also serves as the foundation for many advanced simulation techniques, where production runs may be conducted in NVE after equilibration in other ensembles. For instance, when simulating infrared spectra of liquids, researchers often equilibrate the system in the NVT ensemble at the desired temperature before switching to NVE for production dynamics, as thermostats can decorrelate velocities in ways that destroy spectral information calculated from correlation functions [8].

A significant limitation of the NVE ensemble emerges when simulating barrier crossing events. If the barrier height exceeds the total energy of the system, the rate of crossing will be zero in NVE, whereas in NVT with the same average energy, thermal fluctuations can occasionally push the system over the barrier, leading to non-zero rates [8]. This example highlights how ensemble choice can qualitatively alter simulation outcomes, particularly for rare events or processes with significant energy barriers.

Canonical Ensemble (NVT)

The NVT ensemble, or canonical ensemble, maintains constant number of particles (N), volume (V), and temperature (T), making it suitable for systems in thermal contact with a heat reservoir [7]. Temperature control is achieved through mathematical constructs known as thermostats, which modify the equations of motion to maintain the kinetic energy of the system (and thus its temperature) around a target value.

Several thermostat algorithms are commonly used in MD simulations. The Berendsen thermostat rescales velocities to drive the temperature toward the desired value, providing strong coupling and efficient equilibration but not generating a true canonical distribution [9]. The Nosé-Hoover thermostat and its extension, Nosé-Hoover chains (NHC), introduce additional dynamical variables that act as heat baths and produce canonical distributions [9]; chaining the thermostats also remedies the non-ergodic behavior that a single Nosé-Hoover thermostat can show for small or stiff systems. According to the ReaxFF documentation, NHC-NVT typically uses parameters including Nc = 5, Nys = 5, and Nchains = 10, with the tdamp control parameter (\( \tau_t \)) determining the first thermostat mass as \( Q = N_{\text{free}} k_B T_{\text{set}} \tau_t^2 \) [9]. The documentation notes that relaxation with a Nosé-Hoover thermostat usually takes longer than with the Berendsen thermostat using the same \( \tau \) parameter [9].
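The Berendsen scheme multiplies all velocities each step by \( \lambda = \sqrt{1 + (\Delta t / \tau_T)(T_0 / T - 1)} \), the standard weak-coupling form (not taken from the ReaxFF documentation). The sketch below evaluates that factor together with the NHC mass formula quoted above. Units are left to the caller, and both function names are hypothetical.

```python
import math

def berendsen_lambda(t_current, t_target, dt, tau_t):
    """Weak-coupling velocity scaling factor sqrt(1 + (dt / tau_t) * (T0 / T - 1))."""
    return math.sqrt(1.0 + (dt / tau_t) * (t_target / t_current - 1.0))

def nhc_first_mass(n_free, kT_set, tau_t):
    """First Nose-Hoover chain mass Q = N_free k_B T_set tau_t^2 (formula quoted above)."""
    return n_free * kT_set * tau_t**2

# A system at 290 K coupled to a 300 K bath with dt = 1 fs and tau_t = 100 fs:
# every step the velocities are multiplied by a factor slightly above 1.
scale = berendsen_lambda(290.0, 300.0, dt=1.0, tau_t=100.0)
```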

Advanced thermostat implementations can provide separate control for different degrees of freedom. For example, some MD packages allow separate damping parameters for translational, rotational, and vibrational degrees of freedom, which can be particularly useful when initial system configurations contain strongly out-of-equilibrium distributions, such as many quickly spinning molecules with relatively small translational velocity [9].

The NVT ensemble is widely used for simulating biological systems at physiological conditions, solid-state materials, and any system where volume constraints are natural [8] [7]. It provides direct access to the Helmholtz free energy and is often employed in studies of conformational dynamics, protein folding, and ligand binding where constant volume approximations are reasonable.

Isothermal-Isobaric Ensemble (NPT)

The NPT ensemble, or isothermal-isobaric ensemble, maintains constant number of particles (N), pressure (P), and temperature (T), making it the most appropriate choice for simulating systems held at constant ambient temperature and pressure [7]. This ensemble is particularly valuable for modeling processes in solution, biomolecular systems in their native environments, and materials under specific pressure conditions.

Implementation of the NPT ensemble requires both a thermostat to control temperature and a barostat to control pressure. Common combinations include the Berendsen barostat and thermostat for efficient equilibration, and the more rigorous Andersen-Hoover (AH) or Parrinello-Rahman-Hoover (PRH) NPT implementations with NHC thermostats [9]. In the Andersen-Hoover NPT implementation, the barostat mass is determined by the pdamp control parameter \( \tau_p \) as \( W = (N_{\text{free}} + 3) k_B T_{\text{set}} \tau_p^2 \), while the thermostat component uses parameters similar to NHC-NVT [9].
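The pressure analogue of Berendsen coupling scales coordinates and box vectors by \( \mu = [1 - \beta (\Delta t / \tau_P)(P_0 - P)]^{1/3} \), where \( \beta \) is an assumed isothermal compressibility. The sketch below evaluates that factor and the barostat mass formula quoted above; the function names and example numbers are hypothetical.

```python
def berendsen_box_scale(p_current, p_target, dt, tau_p, compressibility):
    """Weak-coupling box/coordinate scaling factor mu = [1 - beta (dt / tau_p)(P0 - P)]^(1/3)."""
    return (1.0 - compressibility * (dt / tau_p) * (p_target - p_current)) ** (1.0 / 3.0)

def andersen_hoover_mass(n_free, kT_set, tau_p):
    """Barostat mass W = (N_free + 3) k_B T_set tau_p^2 (formula quoted above)."""
    return (n_free + 3) * kT_set * tau_p**2

# Pressure 200 bar, target 1 bar, dt = 0.002 ps, tau_p = 2 ps, and an assumed
# compressibility of 4.5e-5 bar^-1 (typical of liquid water): mu > 1, so the box expands.
mu = berendsen_box_scale(200.0, 1.0, dt=0.002, tau_p=2.0, compressibility=4.5e-5)
```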

For anisotropic systems, the Parrinello-Rahman barostat allows full fluctuation of the simulation cell shape, enabling studies of crystalline materials under stress or systems with directional forces. The ReaxFF documentation notes that anisotropic NPT dynamics should be considered experimental, with options for fixed cell angles (imdmet=10) or full cell fluctuations (imdmet=11) [9].

The NPT ensemble provides a direct connection to the Gibbs free energy and is often considered the most appropriate for comparing with experimental measurements, as most laboratory experiments occur at constant pressure rather than constant volume [8]. As noted by researchers on Matter Modeling Stack Exchange, "In many cases, that means you want to run dynamics in the NPT ensemble (since bench experiments tend to be at fixed pressure and temperature)" [8]. This ensemble naturally accounts for density fluctuations and thermal expansion effects that are missing in constant-volume simulations.

Table 2: Implementation Details of Core Ensembles in MD Simulations

| Ensemble | Common Algorithms | Key Control Parameters | Typical Applications | Advantages | Limitations |
|---|---|---|---|---|---|
| NVE | Verlet, Velocity Verlet, Leapfrog | Time step (0.5-2 fs) | Gas-phase reactions, fundamental studies, spectral calculations | Energy conservation, simple implementation | Cannot control temperature, inappropriate for most condensed phases |
| NVT | Berendsen, Nosé-Hoover, Nosé-Hoover Chains | Target temperature, damping constant (τt) | Biological systems, solid materials, constant-volume processes | Correct canonical sampling (Nosé-Hoover, NHC), temperature control | Constant volume may be artificial for some systems |
| NPT | Berendsen NPT, Andersen-Hoover, Parrinello-Rahman | Target temperature/pressure, damping constants (τt, τp) | Solutions, biomolecules, materials under specific pressure | Matches most experiments, allows density fluctuations | More complex implementation, slower equilibration |

Practical Implementation and Protocols

Ensemble Selection Guidelines

Choosing the appropriate ensemble for a molecular dynamics simulation requires careful consideration of the scientific question, system characteristics, and intended comparison with experimental data. For researchers studying gas-phase reactions or isolated molecular systems without environmental coupling, the NVE ensemble provides the most natural description [8]. However, when modeling systems in solution or with implicit environmental effects, the choice between NVT and NPT becomes more nuanced.

As a general guideline, the NPT ensemble is recommended for most biomolecular simulations and studies of condensed phases, as it most closely mimics laboratory conditions where pressure rather than volume is controlled [8]. The NVT ensemble may be preferable for specific applications where volume constraints are physically justified, such as proteins in crowded cellular environments, materials confined in porous matrices, or when comparing directly with constant-volume experimental data.

Practical simulation workflows often employ multiple ensembles sequentially. A typical protocol might involve: (1) energy minimization to remove steric clashes, (2) NVT equilibration to stabilize the temperature, (3) NPT equilibration to adjust density, and (4) production simulation in either NVT or NPT depending on the properties of interest [8] [10]. For specific applications like spectroscopic property calculation, researchers may return to NVE for production dynamics to avoid artificial decorrelation of velocities by thermostats [8].

The following workflow diagram illustrates a typical multi-ensemble simulation protocol for biomolecular systems:

Simulation Workflow: Typical multi-ensemble protocol for biomolecular MD simulations.
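The same sequence of stages can be written as a small, hypothetical stage plan that records which quantities each stage holds fixed. The class and function names are illustrative only and do not correspond to any MD package's input format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    name: str
    ensemble: str  # "none" for energy minimization (no dynamics)

# Hypothetical plan mirroring the four steps described in the text.
PROTOCOL = (
    Stage("energy minimization", "none"),
    Stage("NVT equilibration", "NVT"),
    Stage("NPT equilibration", "NPT"),
    Stage("production", "NPT"),  # or "NVT"; "NVE" for spectroscopic properties
)

FIXED = {"NVE": ("N", "V", "E"), "NVT": ("N", "V", "T"), "NPT": ("N", "P", "T")}

def describe(protocol):
    """Return one line per stage listing the quantities held fixed."""
    lines = []
    for i, stage in enumerate(protocol, start=1):
        fixed = ", ".join(FIXED.get(stage.ensemble, ())) or "no dynamics"
        lines.append(f"{i}. {stage.name}: {fixed}")
    return lines

for line in describe(PROTOCOL):
    print(line)
```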

Advanced Ensemble Implementations

Modern molecular dynamics packages offer sophisticated ensemble implementations beyond the basic three discussed previously. For example, the ReaxFF program includes specialized ensembles such as Hugoniostat for modeling shock waves, non-equilibrium NVE with shear forces for studying material deformation, and anisotropic NPT with full cell fluctuations for crystalline materials [9].

The development of machine learning potentials and neural network potentials (NNPs) has further expanded ensemble capabilities. Recent advancements like Meta's Open Molecules 2025 (OMol25) dataset and Universal Models for Atoms (UMA) provide highly accurate potential energy surfaces that can be integrated with various ensemble sampling techniques [11]. These approaches offer accuracy close to that of the underlying quantum mechanical calculations at a small fraction of their cost, although they remain more expensive than classical force fields, enabling more reliable sampling of complex thermodynamic ensembles.

Enhanced sampling techniques, such as replica exchange molecular dynamics (REMD), metadynamics, and variational free energy methods, often employ sophisticated ensemble designs to accelerate rare events and improve phase space sampling. These methods frequently combine elements from different standard ensembles or employ generalized ensembles that smoothly transition between thermodynamic states.
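Replica exchange is the simplest of these methods to write down: replicas at different temperatures run in parallel, and neighboring replicas periodically attempt to swap configurations, accepting with probability \( \min\{1, \exp[(\beta_i - \beta_j)(E_i - E_j)]\} \), where \( \beta = 1/k_B T \). This Metropolis criterion preserves the canonical distribution at every temperature. A minimal sketch of the criterion; the helper names are hypothetical.

```python
import math
import random

def remd_accept_probability(beta_i, beta_j, energy_i, energy_j):
    """Swap acceptance for temperature replica exchange:
    min(1, exp[(beta_i - beta_j) * (E_i - E_j)])."""
    delta = (beta_i - beta_j) * (energy_i - energy_j)
    return 1.0 if delta >= 0 else math.exp(delta)  # avoids overflow for large delta

def attempt_swap(beta_i, beta_j, energy_i, energy_j, rng=random):
    """Return True if the exchange between replicas i and j is accepted."""
    return rng.random() < remd_accept_probability(beta_i, beta_j, energy_i, energy_j)
```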

Successful implementation of molecular dynamics simulations requires both theoretical knowledge and practical tools. The following table summarizes key resources mentioned in recent literature that facilitate research using statistical ensembles:

Table 3: Essential Research Tools and Resources for Ensemble-Based MD Simulations

| Resource Category | Specific Tools/References | Function/Purpose | Key Features |
|---|---|---|---|
| Textbooks | "Computer Simulation of Liquids" (Allen & Tildesley) [12] | Fundamental MD theory and practice | Comprehensive coverage of basic MD methods |
| | "Understanding Molecular Simulation" (Frenkel & Smit) [12] | Practical simulation methodology | Example code, enhanced sampling methods |
| | "Statistical Mechanics: Theory and Molecular Simulation" (Tuckerman) [12] | Theoretical foundation | Path integral methods, statistical mechanics theory |
| Software Packages | ReaxFF [9] | Reactive force field MD | Multiple ensemble implementations, NHC thermostats |
| | GROMACS [12] | Biomolecular MD | Extensive ensemble options, high performance |
| | i-PI [12] | Path integral MD | Quantum effects, advanced sampling |
| Datasets & Potentials | Meta's OMol25 [11] | Neural network potential training | 100M+ quantum calculations, broad chemical diversity |
| | Universal Models for Atoms (UMA) [11] | Transferable ML potentials | Mixture of Experts architecture, multi-dataset training |
| Benchmarks | Rowan Benchmarks [11] | Model performance evaluation | Molecular energy accuracy comparisons |

The following diagram illustrates the relationship between different statistical ensembles and their connected free energies, providing a conceptual map for understanding the thermodynamic foundations of molecular simulation:

Ensemble Thermodynamics: Relationship between statistical ensembles and their connected free energies.

Current Research Trends and Future Directions

The field of molecular dynamics and statistical ensemble methodology continues to evolve rapidly, driven by advances in computational hardware, algorithmic innovations, and emerging scientific questions. Recent research trends highlight several exciting developments that are expanding the capabilities and applications of ensemble-based simulations.

The integration of machine learning and artificial intelligence with molecular dynamics represents one of the most promising directions [13] [7]. Machine learning algorithms can accelerate MD simulations by providing more accurate predictions of molecular interactions and optimizing simulation parameters [7]. Recent releases such as Meta's Open Molecules 2025 (OMol25) dataset and associated neural network potentials (NNPs) demonstrate the potential of these approaches: models trained on OMol25 are reported to achieve essentially perfect performance on molecular energy benchmarks while enabling simulations of systems that were previously computationally prohibitive [11]. The eSEN architecture, for instance, improves the smoothness of potential-energy surfaces, making molecular dynamics and geometry optimizations better behaved [11].

Another significant trend involves the development of multi-scale simulation methodologies that seamlessly connect different levels of theory, from quantum mechanics to coarse-grained models [13]. These approaches often employ sophisticated ensemble designs to facilitate information transfer between scales. For example, the Universal Models for Atoms (UMA) architecture introduces a novel Mixture of Linear Experts (MoLE) approach that enables knowledge transfer across datasets computed using different DFT engines, basis set schemes, and levels of theory [11].

The application of molecular dynamics simulations is also expanding into new domains, including the design of oil-displacement polymers for enhanced oil recovery [13], predictive modeling of hazardous substances for safe handling [10], and the development of advanced materials for energy storage and conversion. In each of these applications, careful selection and implementation of statistical ensembles is crucial for obtaining physically meaningful results that can guide experimental efforts.

Future research will likely focus on developing more efficient sampling algorithms, improving the accuracy of force fields through machine learning, and extending simulation capabilities to larger systems and longer timescales [13] [7]. As computational power continues to grow and algorithms become more sophisticated, the role of statistical ensembles in connecting microscopic simulations to macroscopic observables will remain fundamental to the field of molecular modeling.

The ergodic hypothesis is a cornerstone of statistical mechanics and, by extension, molecular dynamics (MD) simulations. It asserts that, over long periods, the time average of a property from a single system's trajectory is equal to the ensemble average of that property across a vast collection of identical systems at one instant in time [14] [15]. This equivalence is a fundamental, often implicit, assumption that validates the use of MD to compute thermodynamic properties. Without it, the connection between the microscopic world described by Newton's equations and the macroscopic thermodynamic properties we wish to predict would be severed.

This principle finds its formal justification in the framework of statistical mechanics, which links the microscopic states of atoms and molecules to macroscopic observables. In the canonical (NVT) ensemble, the expectation value of an observable such as the energy ( E ) is defined through the partition function ( Z = \sum_i e^{-E_i/k_B T} ):

[ \langle E \rangle_{\text{ensemble}} = \frac{\sum_i E_i e^{-E_i/k_B T}}{Z} ]

where the sums run over all microstates ( i ) [16]. The ergodic hypothesis allows this ensemble average to be replaced in MD simulations by a time average:

[ \langle E \rangle_{\text{time}} = \lim_{\tau \to \infty} \frac{1}{\tau} \int_0^\tau E(t) \, dt \approx \frac{1}{N_{\text{steps}}} \sum_{t=1}^{N_{\text{steps}}} E_t ]

This makes MD a practical tool: we can simulate a single system over time rather than attempting to construct and average over an impossibly large number of replica systems [15].
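The equivalence of the two averages can be checked on a toy model. The sketch below assumes a hypothetical four-level system with energies in units of ( k_B T ); it computes the exact canonical average and compares it with a time average along a Metropolis Markov chain, which stands in here for an ergodic MD trajectory. The function names are illustrative and not taken from any library.

```python
import math
import random


def ensemble_average(energies, kT):
    """Exact canonical average <E> = sum_i E_i exp(-E_i/kT) / Z."""
    weights = [math.exp(-e / kT) for e in energies]
    z = sum(weights)  # partition function Z
    return sum(e * w for e, w in zip(energies, weights)) / z


def time_average(energies, kT, n_steps, seed=0):
    """Time average of E along a Metropolis chain over the same microstates.

    The chain is an illustrative stand-in for an MD trajectory: its stationary
    distribution is the Boltzmann distribution, so for long runs the time
    average should converge to ensemble_average.
    """
    rng = random.Random(seed)
    state = 0
    total = 0.0
    for _ in range(n_steps):
        trial = rng.randrange(len(energies))  # symmetric (uniform) proposal
        d_e = energies[trial] - energies[state]
        if d_e <= 0.0 or rng.random() < math.exp(-d_e / kT):
            state = trial
        total += energies[state]
    return total / n_steps


levels = [0.0, 1.0, 2.0, 3.0]
print(ensemble_average(levels, 1.0))       # exact value, about 0.507
print(time_average(levels, 1.0, 200_000))  # close to the exact value
```

A long chain reproduces the exact value; a short one shows the statistical error that any finite MD time average also carries.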

The Centrality of the Ergodic Hypothesis in Molecular Dynamics

In practice, MD simulations rely heavily on the ergodic assumption. A simulation generates a sequence of configurations (a trajectory) by numerically integrating Newton's equations of motion. Each configuration provides an instantaneous value for properties like temperature, pressure, and energy. The "true" thermodynamic value is then taken to be the average of these instantaneous values over the entire simulation trajectory [15]. This procedure implicitly assumes the ergodic hypothesis, equating the time average from one simulation to the ensemble average of the theoretical statistical mechanical ensemble.

Almost every property of interest obtained from an MD simulation—including temperature, pressure, structural correlations, and elastic properties—is ultimately the result of such a time average [15]. The hypothesis is considered valid provided the simulation is run for a "long enough" time, allowing the single simulated system to explore a representative sample of the phase space consistent with its thermodynamic constraints [15].

Limitations and Practical Challenges

Despite its foundational role, the ergodic hypothesis is not universally true for all systems, and its strict validity in real simulations is often a matter of careful consideration.

Theoretical and Phenomenological Violations

From a theoretical standpoint, the literal form of the ergodic hypothesis can be broken in several ways. The famous Fermi-Pasta-Ulam-Tsingou (FPUT) experiment in 1953 was an early numerical demonstration of non-ergodic behavior, where energy failed to equilibrate evenly among all vibrational modes in a non-linear system, instead exhibiting recurrent behavior [14] [17]. Macroscopic systems can also exhibit ergodicity breaking. For example, in ferromagnetic materials below the Curie temperature, the system becomes trapped in a state with non-zero magnetization rather than exploring all states with an average magnetization of zero [14]. Complex disordered systems like spin glasses or conventional window glasses display more complicated ergodicity breaking, behaving as solids on human timescales but as liquids over immensely long periods [14].

The mathematical foundation for many of these violations lies in the Kolmogorov-Arnold-Moser (KAM) theorem. For nearly integrable Hamiltonian systems, the theorem guarantees that many regular, quasi-periodic trajectories (lying on invariant KAM tori) survive weak perturbations; these tori can prevent a system from exploring all accessible states, even over infinite time. Systems with hidden symmetries that support phenomena like solitons (stable, localized traveling waves) are classic examples where ergodicity fails [17].

Practical Implications for Molecular Dynamics

These theoretical limitations translate into concrete challenges for MD simulations:

- Insufficient Sampling: The most common practical issue is that simulations are too short to adequately explore phase space. A system might be trapped in a local energy minimum, such as one conformational state of a biomolecule, and never transition to other, equally important states during the simulation time.

- Calculation of Specific Quantities: Some thermodynamic properties are more sensitive to ergodicity violations than others. While average energy can often be reliably estimated, the calculation of free energies and entropies is notoriously difficult because they depend on the partition function and the total number of accessible states (density of states), which may be undersampled [16]. High-energy regions that contribute significantly to these averages might never be visited in a typical simulation.

- Physical Constraints: Real-world systems like solids (e.g., an ice cube) or multi-phase systems (e.g., a vapor-liquid mixture) are inherently non-ergodic on practical timescales because atoms are confined to lattice sites or localized regions [17]. Statistical mechanics can still be applied, but it requires careful consideration of the relevant, accessible portion of phase space.
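Insufficient sampling is easy to reproduce in miniature. In the hypothetical sketch below, a walker making small Metropolis moves on an assumed symmetric double-well potential (barrier height 5 energy units) should, by symmetry, spend half its time in each well. At low temperature it never leaves the starting well, so its time average is not the ensemble average; at high temperature it crosses freely.

```python
import math
import random


def double_well(x, barrier=5.0):
    """Toy potential U(x) = barrier * (x**2 - 1)**2 with minima at x = -1 and x = +1."""
    return barrier * (x * x - 1.0) ** 2


def fraction_in_right_well(kT, n_steps, step=0.5, x0=-1.0, seed=1):
    """Fraction of steps a local-move Metropolis walker spends at x > 0.

    By symmetry the ensemble value is exactly 0.5; a result far from 0.5
    signals that the run has not sampled phase space ergodically.
    """
    rng = random.Random(seed)
    x, u = x0, double_well(x0)
    in_right = 0
    for _ in range(n_steps):
        x_new = x + rng.uniform(-step, step)  # small, local trial move
        u_new = double_well(x_new)
        if u_new <= u or rng.random() < math.exp(-(u_new - u) / kT):
            x, u = x_new, u_new
        if x > 0.0:
            in_right += 1
    return in_right / n_steps


print(fraction_in_right_well(kT=0.2, n_steps=20_000))   # trapped: near 0
print(fraction_in_right_well(kT=5.0, n_steps=200_000))  # close to 0.5
```

At ( k_B T = 0.2 ) the barrier is 25 ( k_B T ), so crossings essentially never occur within the run, which is exactly the metastable trapping described above.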

Methodologies and Computational Protocols

Given the challenges of ergodicity, several computational strategies have been developed to improve phase space sampling in MD simulations.

Standard Averaging Protocols

Table 1: Comparison of Averaging Methods in Molecular Dynamics

| Method | Description | Key Advantage | Key Disadvantage |
|---|---|---|---|
| Time Averaging | A single, long simulation is run, and properties are averaged over the entire trajectory. | Conceptually simple; mirrors the fundamental ergodic hypothesis. | Inherently serial, so wall-clock time grows with trajectory length; prone to getting stuck in metastable states. |
| Ensemble Averaging | Multiple independent simulations are run from different initial conditions, and results are averaged across these trajectories. | Highly parallelizable; better at exploring diverse regions of phase space. | Requires more initial setup; computational cost is multiplied by the number of trajectories. |

A key study comparing these methods for calculating thermal conductivity found that ensemble averaging can drastically reduce the total wall-clock simulation time because the independent trajectories can be run in parallel on high-performance computing clusters. This is particularly advantageous for expensive simulations like ab initio MD [18].
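The ensemble-averaging protocol reduces to a simple pattern: run independent trajectories from different initial conditions, time-average each, then report the mean and its standard error across trajectories. In the sketch below the "trajectory" is a hypothetical AR(1) series with a known mean, assumed purely as a stand-in for a correlated MD observable; in practice each call would be an independent simulation.

```python
import math
import random
import statistics


def trajectory_average(seed, n_steps=5000, mu=1.0, phi=0.9, sigma=0.1):
    """Time average of a correlated observable along one independent trajectory.

    Hypothetical stand-in for an MD observable: an AR(1) series
    x_{t+1} = mu + phi * (x_t - mu) + noise, whose true mean is mu.
    """
    rng = random.Random(seed)
    x = mu + rng.gauss(0.0, 1.0)  # different initial condition for each seed
    total = 0.0
    for _ in range(n_steps):
        x = mu + phi * (x - mu) + rng.gauss(0.0, sigma)
        total += x
    return total / n_steps


def ensemble_estimate(n_trajectories, **kwargs):
    """Mean and standard error over independent trajectories.

    Each trajectory_average call is independent, so the calls could be sent
    to separate cores or nodes; here they simply run one after another.
    """
    per_traj = [trajectory_average(seed, **kwargs) for seed in range(n_trajectories)]
    mean = statistics.fmean(per_traj)
    sem = statistics.stdev(per_traj) / math.sqrt(n_trajectories)
    return mean, sem


mean, sem = ensemble_estimate(16)
print(f"ensemble estimate: {mean:.3f} +/- {sem:.3f}")
```

Because the per-trajectory averages are statistically independent, their spread gives an honest error bar without the autocorrelation analysis a single long run would need.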

Enhanced Sampling Techniques

For properties like free energy that are not simple time averages, specialized "enhanced sampling" methods are required. These methods manipulate the system to encourage exploration of otherwise inaccessible regions of phase space.

- Metadynamics: This method adds a history-dependent bias potential to the system's energy function, which discourages the system from revisiting already sampled states. This "fills up" free energy basins and pushes the system to explore new regions [16].

- Statistical Temperature Molecular Dynamics: This algorithm modifies the MD equations to achieve a flat histogram in potential energy, forcing the system to sample all energy levels uniformly [16].

- Replica Exchange MD (Parallel Tempering): Multiple copies (replicas) of the system are simulated simultaneously at different temperatures. Periodically, exchanges between replicas are attempted based on a Metropolis criterion. This allows conformations trapped at low temperatures to be "heated up," escape local minima, and then "cooled down" again, thus enhancing sampling [16].
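The exchange step of replica exchange is compact enough to sketch directly. For replicas at inverse temperatures ( \beta_i = 1/k_B T_i ) holding configurations with potential energies ( E_i ), the standard Metropolis criterion accepts a swap with probability ( \min\{1, \exp[(\beta_i - \beta_j)(E_i - E_j)]\} ). The function names below are hypothetical, and the energies are assumed to be supplied by the caller's MD engine.

```python
import math
import random


def swap_probability(beta_i, beta_j, energy_i, energy_j):
    """Metropolis acceptance for exchanging the configurations of replicas i and j.

    p = min(1, exp[(beta_i - beta_j) * (E_i - E_j)]), with beta = 1 / (k_B T)
    and E the potential energy of the configuration currently at that temperature.
    """
    delta = (beta_i - beta_j) * (energy_i - energy_j)
    return 1.0 if delta >= 0.0 else math.exp(delta)


def attempt_neighbour_swaps(betas, energies, rng, parity=0):
    """Attempt swaps between temperature pairs (k, k+1) with k of the given parity.

    energies[c] is the potential energy of configuration c. Returns perm, where
    perm[k] is the configuration now held at temperature index k. Alternating
    parity between attempts is a common choice so pairs are tried independently.
    """
    perm = list(range(len(betas)))
    for k in range(parity, len(betas) - 1, 2):
        p = swap_probability(betas[k], betas[k + 1], energies[perm[k]], energies[perm[k + 1]])
        if rng.random() < p:
            perm[k], perm[k + 1] = perm[k + 1], perm[k]
    return perm


rng = random.Random(0)
betas = [1.0 / t for t in (1.0, 1.5, 2.25)]  # hypothetical temperature ladder
print(attempt_neighbour_swaps(betas, [3.0, 1.0, 2.0], rng))
```

A swap that moves a high-energy configuration to a higher temperature is always accepted; the reverse move is accepted only with the Boltzmann-like factor, which preserves the canonical distribution at every temperature.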

The workflow below illustrates how enhanced sampling integrates with the standard MD process to achieve more robust, ergodic sampling.

Integrating Experimental Data

Another powerful approach, especially for challenging systems like intrinsically disordered proteins (IDPs), is the integration of experimental data with simulations. Nuclear Magnetic Resonance (NMR) spectroscopy provides ensemble-averaged, site-specific structural and dynamic information. This experimental data can be used to restrain MD simulations or to reweight simulation-generated ensembles, ensuring the final model is consistent with empirical observations and thus more accurately reflects the true accessible phase space [19].

The Scientist's Toolkit: Essential Reagents and Methods

Table 2: Key Computational Tools for Ergodicity and Sampling

| Tool / Method | Category | Primary Function |
|---|---|---|
| Force Fields | Interaction Potential | Defines the potential energy surface (PES) governing atomic interactions; accuracy is paramount for realistic sampling. |
| Thermostats (e.g., Nosé-Hoover) | Ensemble Control | Controls temperature by mimicking heat exchange with a bath, essential for sampling NVT or NPT ensembles. |
| Metadynamics | Enhanced Sampling | Accelerates escape from free energy minima by applying a history-dependent bias potential. |
| Replica Exchange MD | Enhanced Sampling | Enhances sampling across energy barriers by running parallel simulations at different temperatures. |
| Maxwell-Boltzmann Distribution | Initialization | Generates physically realistic initial velocities for atoms at the start of a simulation [20]. |
| Neighbor Searching (Verlet List) | Algorithmic Efficiency | Efficiently identifies atom pairs within interaction range, a critical performance optimization in MD [20]. |
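Velocity initialization from the Maxwell-Boltzmann distribution can be sketched as follows, assuming reduced units with ( k_B = 1 ). Each Cartesian component is drawn from a Gaussian of variance ( k_B T/m ), the centre-of-mass motion is removed, and the velocities are rescaled to the target kinetic temperature using ( 3N - 3 ) degrees of freedom. The function names are hypothetical, and production MD engines differ in details such as constraint counting.

```python
import math
import random


def kinetic_temperature(masses, vel):
    """Instantaneous k_B T from equipartition: sum(m v^2) / N_dof, N_dof = 3N - 3."""
    twice_ke = sum(m * sum(c * c for c in v) for m, v in zip(masses, vel))
    return twice_ke / (3 * len(masses) - 3)


def maxwell_boltzmann_velocities(masses, kT, seed=0):
    """Initial 3D velocities drawn from the Maxwell-Boltzmann distribution.

    Reduced units with k_B = 1. Each Cartesian component is Gaussian with
    variance kT / m; the centre-of-mass velocity is then removed and all
    velocities are rescaled so kinetic_temperature matches kT exactly.
    """
    rng = random.Random(seed)
    vel = [[rng.gauss(0.0, math.sqrt(kT / m)) for _ in range(3)] for m in masses]
    total_mass = sum(masses)
    v_com = [sum(m * v[k] for m, v in zip(masses, vel)) / total_mass for k in range(3)]
    vel = [[v[k] - v_com[k] for k in range(3)] for v in vel]  # zero net momentum
    scale = math.sqrt(kT / kinetic_temperature(masses, vel))
    return [[scale * c for c in v] for v in vel]


masses = [1.0] * 100 + [16.0] * 50  # hypothetical two-species system
vel = maxwell_boltzmann_velocities(masses, kT=2.5)
print(kinetic_temperature(masses, vel))  # 2.5 up to rounding
```

Removing the net momentum before rescaling keeps the system from drifting, and the final rescale preserves that zero momentum.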

The ergodic hypothesis provides the essential bridge that makes molecular dynamics a powerful tool for connecting atomic-scale motion to macroscopic thermodynamic properties. While its strict mathematical validity is limited, and practical violations are common, it remains a productive and necessary foundation. The ongoing development of enhanced sampling methods, parallel ensemble techniques, and hybrid experimental-computational approaches represents the field's active response to the challenges of ergodicity. By consciously addressing these limitations, researchers can design more robust simulations, achieve more representative sampling, and compute a wider range of accurate thermodynamic properties, thereby strengthening the predictive power of molecular dynamics in fields from materials science to drug development.

The Potential of Mean Force and Landau Free Energy in Complex Biomolecular Systems

In the realm of molecular dynamics (MD) and statistical mechanics, accurately describing and quantifying complex biomolecular processes, such as protein folding, ligand binding, and conformational changes, remains a central challenge. These processes are governed by free energy landscapes, where minima represent stable states and barriers represent transitions. The Potential of Mean Force (PMF) and the Landau Free Energy (the central quantity of Landau's theory of phase transitions) are two fundamental concepts that provide a powerful framework for quantifying these landscapes [6] [21].

The PMF provides a statistical mechanical description of the effective free energy along a reaction coordinate, obtained by averaging out all other degrees of freedom [6]. Landau theory, a phenomenological framework originally developed for phase transitions, offers a versatile approach to modeling how free energy depends on an order parameter, which can describe the progression of a biomolecular reaction [21] [22]. Within the broader context of statistical mechanics for MD ensembles, this whitepaper explores the theoretical underpinnings, computational methodologies, and practical applications of these two concepts, and shows that they are not merely abstract ideas but indispensable tools for connecting microscopic simulations to macroscopic, experimentally observable properties, thereby bridging the gap between molecular-level dynamics and drug-relevant phenotypes [23] [24].

Theoretical Foundations

Statistical Mechanics of Molecular Dynamics Ensembles

Statistical mechanics forms the theoretical bedrock for interpreting MD simulations, connecting the chaotic motion of individual atoms to predictable thermodynamic averages. The core idea is that a macroscopic observable is the average over all possible microscopic states of the system (the ensemble) [6] [23].

- The Ensemble Picture: Instead of tracking a single system over time, Gibbs introduced the concept of an ensemble—a vast collection of identical systems in different microstates. The average value of an observable is then computed over this ensemble [6].

- The Ergodic Hypothesis: This principle asserts that the time average of a property for a single system, over a sufficiently long period, equals the ensemble average. This justifies the use of MD trajectories, which are time-dependent, to compute equilibrium thermodynamic properties [23].

- Relevance for Biomolecules: For biomolecular systems, key ensembles include the canonical ensemble (NVT) for systems at constant temperature and the isothermal-isobaric ensemble (NPT) for constant temperature and pressure, which mimic common experimental conditions [23].

Potential of Mean Force (PMF)

The Potential of Mean Force is a central concept for understanding processes characterized by a specific reaction coordinate, such as the distance between a drug and its binding pocket.

- Definition and Derivation: The PMF, often denoted ( W(\xi) ), is the effective free energy along a chosen reaction coordinate ( \xi ). It is obtained from the configurational integral of the system by integrating out all other degrees of freedom [6]. Formally, with ( P(\xi') = \langle \delta(\xi(\mathbf{r}) - \xi') \rangle ) the equilibrium probability density of finding the reaction coordinate at the value ( \xi' ), the PMF is: ( W(\xi') = -k_B T \ln P(\xi') + C ) where ( k_B ) is Boltzmann's constant, ( T ) is the temperature, and ( C ) is an arbitrary constant.

- Physical Interpretation: The negative gradient of the PMF with respect to the reaction coordinate gives the mean force acting along that coordinate, averaged over all other thermal motions of the system. Its global minimum identifies the most stable state, while local minima and the barriers between them describe metastable states and transition states [6].

- Connection to Landau Free Energy: The review by [6] explicitly states that the Landau free energy is "also known as the potential of the mean force." This establishes that in the context of biomolecular simulations, the Landau free energy describing a process can be numerically computed as the PMF along a suitably chosen order parameter or reaction coordinate.
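For unbiased samples of the reaction coordinate, the definition ( W(\xi) = -k_B T \ln P(\xi) + C ) translates directly into a histogram estimate. The sketch below is a minimal illustration with a hypothetical function name; it assumes the samples come from an equilibrium (unbiased) simulation and omits empty bins, whose PMF is formally infinite.

```python
import math
from collections import Counter


def pmf_from_samples(samples, bin_width, kT):
    """Estimate W(xi) = -kT ln P(xi) + C from unbiased samples of xi.

    Returns {bin_centre: W}, shifted so the most populated bin has W = 0.
    Empty bins are omitted (their W is formally +infinity).
    """
    counts = Counter(math.floor(x / bin_width) for x in samples)
    n = len(samples)
    pmf = {}
    for b, c in counts.items():
        density = c / (n * bin_width)  # probability density in this bin
        pmf[(b + 0.5) * bin_width] = -kT * math.log(density)
    w_min = min(pmf.values())
    return {centre: w - w_min for centre, w in sorted(pmf.items())}


samples = [0.1] * 8 + [1.1] * 2  # toy data: the first bin is 4x more populated
print(pmf_from_samples(samples, bin_width=1.0, kT=1.0))
# the second bin sits kT * ln(4), about 1.386, above the first
```

Because the constant ( C ) is arbitrary, only PMF differences are meaningful; the shift to a zero minimum is a convention.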

Landau Free Energy Theory

Landau theory provides a general framework for describing phase transitions and, by extension, cooperative transitions in biomolecules, through the expansion of free energy in terms of an order parameter [21] [22].

- Order Parameter: The theory is built around an order parameter ( \eta ), a quantity that is zero in the symmetric (disordered) phase and non-zero in the broken-symmetry (ordered) phase. In biophysics, this could be a measure of helicity in protein folding, a distance in binding, or a collective variable describing a conformational change [21].

- Free Energy Expansion: Near a critical point (or transition point), the Landau free energy ( F ) is expanded as a power series in the order parameter. For a system symmetric under ( \eta \to -\eta ), only even powers survive and the expansion takes the form: ( F(T, \eta) - F_0 = a(T)\eta^2 + \frac{b(T)}{2}\eta^4 + \cdots ) where ( a(T) ) and ( b(T) ) are temperature-dependent coefficients [21] [22].

- Application to Biomolecular Transitions: The minima of this free energy function determine the stable and metastable states of the system. For a second-order transition with ( b > 0 ), ( a(T) ) changes sign at the critical temperature ( T_c ) (commonly ( a(T) = a_0 (T - T_c) ) with ( a_0 > 0 )), leading to a continuous shift from a single minimum at ( \eta = 0 ) to two minima at ( \eta = \pm\sqrt{-a/b} ). First-order transitions can be modeled with a negative ( \eta^4 ) coefficient stabilized by a positive ( \eta^6 ) term, which produces a discontinuous jump in the order parameter [21].
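The behaviour of the minima can be checked numerically. The sketch below scans the Landau free energy on a grid and reports its local minima; the linear form ( a(T) = a_0 (T - T_c) ) and the ( (c/3)\eta^6 ) normalisation of the sixth-order term are assumptions made for illustration, and the grid search is deliberately crude.

```python
def landau_free_energy(eta, a, b, c=0.0):
    """F - F0 = a*eta**2 + (b/2)*eta**4 + (c/3)*eta**6.

    The eta**6 term (written as c/3) is an assumed normalisation for the
    first-order case; c = 0 recovers the symmetric expansion in the text.
    """
    return a * eta ** 2 + 0.5 * b * eta ** 4 + c / 3.0 * eta ** 6


def local_minima(a, b, c=0.0, lo=-3.0, hi=3.0, n=6001):
    """Grid search for local minima of the Landau free energy (illustrative only)."""
    h = (hi - lo) / (n - 1)
    grid = [lo + i * h for i in range(n)]
    f = [landau_free_energy(x, a, b, c) for x in grid]
    return [grid[i] for i in range(1, n - 1) if f[i] < f[i - 1] and f[i] < f[i + 1]]


a0, t_c = 0.05, 300.0  # hypothetical coefficients, a(T) = a0 * (T - T_c)
for temperature in (320.0, 280.0):
    # one minimum (eta = 0) above T_c, two (eta = +/-1 here) below
    print(temperature, local_minima(a=a0 * (temperature - t_c), b=1.0))
print(local_minima(a=0.1, b=-1.0, c=1.0))  # first-order case: three coexisting minima
```

In the first-order case the disordered minimum at ( \eta = 0 ) coexists with two ordered minima, which is the origin of the discontinuous jump described above.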

Computational Methodologies

Calculating the PMF/Landau free energy is computationally demanding because it requires sufficient sampling of high-energy regions and transition states. Several powerful techniques have been developed for this purpose.

Key Enhanced Sampling Methods

Table 1: Comparison of Key Enhanced Sampling Methods for PMF Calculation

| Method | Core Principle | Key Inputs/Parameters | Primary Output | Key Advantages |
|---|---|---|---|---|
| Umbrella Sampling | Biases the simulation along a reaction coordinate using harmonic potentials so that all regions, including high-energy ones, are sampled. | Reaction coordinate, number and positions of windows, force constant of the harmonic bias. | A series of biased probability distributions along the coordinate. | Conceptually simple, highly parallelizable. |
| Metadynamics | Adds a history-dependent repulsive bias (often a sum of Gaussians) in collective-variable space to discourage the system from revisiting sampled states. | Collective variables (reaction coordinates), Gaussian height and width, deposition rate. | The time-dependent bias potential, which in standard metadynamics converges to the negative of the PMF (up to a constant). | Good for exploring complex landscapes without pre-defining all states. |
| Adaptive Biasing Force | Estimates the mean force along the reaction coordinate on the fly, applies a bias that cancels it, and integrates the mean force to obtain the PMF. | Reaction coordinate and its binning. | The mean force as a function of the reaction coordinate. | Can be very efficient once the mean force has converged. |
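The metadynamics bias in the table is a running sum of Gaussians deposited at visited values of the collective variable ( s ): ( V(s) = \sum_k h \exp[-(s - s_k)^2 / 2w^2] ). The class below is a minimal one-dimensional sketch of standard (not well-tempered) metadynamics; the class and method names are hypothetical, and the dynamics that would call `deposit` at a fixed stride is left to the caller's MD engine.

```python
import math


class MetadynamicsBias:
    """History-dependent bias V(s) = sum_k h * exp(-(s - s_k)^2 / (2 w^2)).

    Minimal 1D sketch of standard metadynamics: height h and width w are user
    choices; in the long-time limit -V(s) estimates the PMF up to a constant.
    """

    def __init__(self, height, width):
        self.height = height
        self.width = width
        self.centres = []

    def deposit(self, s):
        """Add a Gaussian hill centred at the current collective-variable value s."""
        self.centres.append(s)

    def value(self, s):
        """Total bias energy V(s) from all hills deposited so far."""
        return sum(self.height * math.exp(-((s - c) ** 2) / (2.0 * self.width ** 2))
                   for c in self.centres)

    def force(self, s):
        """Bias force -dV/ds, pushing the system away from already visited values."""
        return sum(self.height * (s - c) / self.width ** 2
                   * math.exp(-((s - c) ** 2) / (2.0 * self.width ** 2))
                   for c in self.centres)

    def pmf_estimate(self, s):
        """Negative bias, the standard-metadynamics estimate of W(s) + constant."""
        return -self.value(s)


bias = MetadynamicsBias(height=1.2, width=0.1)
bias.deposit(0.0)
bias.deposit(0.0)
print(bias.value(0.0), bias.force(0.05))  # two stacked hills; force points away from 0
```

In a production setting the bias force would be added to the physical forces at every step, and hills would be deposited periodically along the trajectory until the landscape is filled.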

Protocol for PMF Calculation via Umbrella Sampling

Umbrella Sampling (US) is a widely used and reliable method for calculating the PMF. The following is a detailed protocol.

- Step 1: Reaction Coordinate Selection: Identify a physically meaningful reaction coordinate ( \xi ) that distinguishes between the initial, final, and intermediate states of the process (e.g., distance, angle, or a combination thereof).

- Step 2: Steered MD for Initial Sampling: Perform a Steered Molecular Dynamics (SMD) simulation to rapidly pull the system from the initial to the final state along ( \xi ). This generates an initial pathway and helps identify the range of ( \xi ) to be studied.

- Step 3: Window Setup and Simulation: Divide the range of ( \xi ) into multiple overlapping "windows." For each window ( i ), apply a harmonic restraint potential ( U_i(\xi) = \frac{1}{2} k (\xi - \xi_i)^2 ) centered at ( \xi_i ). Run an independent MD simulation for each window under the influence of this bias.

- Step 4: Weighted Histogram Analysis Method (WHAM): After all simulations are complete, use WHAM to combine the biased probability distributions ( P_i^b(\xi) ) from all windows. WHAM iteratively solves for the unbiased distribution ( P(\xi) ) and a free-energy offset ( f_i ) for each window so that every biased histogram is consistent with the same underlying distribution, which removes the effect of the umbrella potentials; the PMF then follows as ( W(\xi) = -k_B T \ln P(\xi) + C ).
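The WHAM step can be sketched in a few dozen lines for a one-dimensional reaction coordinate with the harmonic windows of Step 3. This is a minimal, unoptimised illustration (production tools such as gmx wham add bootstrapped error estimates and other features); the function name and argument layout are hypothetical. The usage check feeds it exact expected histograms generated from an assumed double-well PMF, so WHAM should recover that PMF.

```python
import math


def wham(bin_centres, histograms, n_samples, centres, k, kT,
         tol=1e-10, max_iter=100_000):
    """Unbias 1D umbrella-sampling histograms with the WHAM equations.

    bin_centres : reaction-coordinate value at the centre of each bin
    histograms  : histograms[i][b] = counts from window i in bin b
    n_samples   : n_samples[i] = total number of samples from window i
    centres, k  : harmonic bias U_i(xi) = 0.5 * k * (xi - centres[i])**2
    Returns W(xi) = -kT ln P(xi), shifted so min(W) = 0 (inf for empty bins).
    """
    n_win, n_bins = len(centres), len(bin_centres)
    # c[i][b] = exp(-U_i(xi_b) / kT): Boltzmann factor of bias i in bin b
    c = [[math.exp(-0.5 * k * (x - centres[i]) ** 2 / kT) for x in bin_centres]
         for i in range(n_win)]
    counts = [sum(h[b] for h in histograms) for b in range(n_bins)]
    z = [1.0] * n_win  # z_i = exp(-f_i / kT) for window free energies f_i
    p = [0.0] * n_bins
    for _ in range(max_iter):
        # WHAM equation 1: unbiased distribution from all windows' counts
        p = [counts[b] / sum(n_samples[i] * c[i][b] / z[i] for i in range(n_win))
             for b in range(n_bins)]
        norm = sum(p)
        p = [pb / norm for pb in p]
        # WHAM equation 2: window normalisations consistent with p
        z_new = [sum(p[b] * c[i][b] for b in range(n_bins)) for i in range(n_win)]
        change = max(abs(math.log(zn / zo)) for zn, zo in zip(z_new, z))
        z = z_new
        if change < tol:
            break
    w = [-kT * math.log(pb) if pb > 0.0 else math.inf for pb in p]
    w_min = min(w)
    return [wb - w_min for wb in w]


# Synthetic check: exact expected histograms generated from a known PMF.
k_t, k_spring = 1.0, 15.0
bins = [-1.2 + 0.1 * b for b in range(25)]
windows = [-1.2 + 0.3 * i for i in range(9)]
w_true = [2.0 * (x * x - 1.0) ** 2 for x in bins]  # hypothetical double-well PMF
hists = []
for centre in windows:
    wts = [math.exp(-(wt + 0.5 * k_spring * (x - centre) ** 2) / k_t)
           for x, wt in zip(bins, w_true)]
    s = sum(wts)
    hists.append([1e4 * q / s for q in wts])
w = wham(bins, hists, [1e4] * len(windows), windows, k_spring, k_t)
print(max(abs(a - (b - min(w_true))) for a, b in zip(w, w_true)))  # close to 0
```

With real simulation data the histograms are noisy integer counts, and adequate overlap between neighbouring windows is what makes the iteration converge to a reliable PMF.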

The workflow for this protocol is visualized below.

Integrating Experiments with Maximum Entropy

A modern approach to refining PMFs involves integrating experimental data directly into the simulation framework. The Maximum Entropy (MaxEnt) principle is used to bias the simulation ensemble so that it agrees with experimental observables (e.g., from NMR, SAXS, or smFRET) while minimally perturbing the simulated distribution [25] [23]. This results in a conformational ensemble that is consistent with both physics-based simulations and experimental data, providing a more accurate and reliable free energy landscape.
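For a single experimental observable, the maximum-entropy reweighting has a closed form: starting from uniform frame weights (an assumption here), the minimally perturbed weights are ( w_k \propto \exp(-\lambda s_k) ), where ( s_k ) is the observable back-calculated for frame ( k ) and ( \lambda ) is chosen so the reweighted average matches the experiment. Because the reweighted mean decreases monotonically with ( \lambda ), a bisection suffices. The function name and data are hypothetical; real applications also account for experimental and forward-model errors.

```python
import math


def maxent_reweight(observable, target, lam_lo=-50.0, lam_hi=50.0, tol=1e-12):
    """Minimally perturb uniform frame weights so that <s> equals a target value.

    MaxEnt solution for one observable: w_k proportional to exp(-lam * s_k).
    d<s>/dlam = -Var(s) <= 0, so lam is found by bisection.
    """
    def weights(lam):
        expo = [-lam * s for s in observable]
        m = max(expo)  # subtract the largest exponent for numerical stability
        w = [math.exp(e - m) for e in expo]
        z = sum(w)
        return [x / z for x in w]

    def mean(lam):
        return sum(w * s for w, s in zip(weights(lam), observable))

    if not min(observable) < target < max(observable):
        raise ValueError("target must lie strictly inside the range of the observable")
    lo, hi = lam_lo, lam_hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) > target:
            lo = mid  # mean still too high: increase lam
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return lam, weights(lam)


s_frames = [1.0, 2.0, 3.0, 4.0]  # hypothetical per-frame back-calculated observable
lam, w = maxent_reweight(s_frames, target=2.0)
print(lam, sum(wi * si for wi, si in zip(w, s_frames)))  # reweighted mean is 2.0
```

The exponential form is what "minimally perturbing" means here: among all weightings that reproduce the target, it has the smallest relative entropy with respect to the original simulation weights.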

The Scientist's Toolkit: Research Reagents & Computational Solutions

Table 2: Essential Research Reagents and Computational Tools

| Item / Reagent | Function / Purpose | Example Use in Biomolecular Studies |
|---|---|---|
| Molecular Dynamics Software | Provides the engine to perform simulations; integrates force fields, solvation models, and analysis tools. | GROMACS, NAMD, AMBER, OpenMM for running production and enhanced sampling simulations. |
| Enhanced Sampling Plugins | Implements advanced algorithms for PMF calculation not always available in core MD packages. | PLUMED is a widely used library for US, metadynamics, ABF, and related methods. |
| Force Field | A set of empirical functions and parameters that describe the potential energy of a system of particles. | CHARMM36, AMBER ff19SB, OPLS-AA to define bonded and non-bonded interactions for proteins, nucleic acids, and lipids. |
| Explicit Solvent Model | Represents water and ions explicitly in the simulation box to model solvation effects accurately. | TIP3P, TIP4P water models; ion parameters compatible with the chosen force field. |
| WHAM / Analysis Tools | Post-processing software to compute the PMF from the raw data of enhanced sampling simulations. | gmx wham (GROMACS) and Grossfield's standalone wham program for US analysis; PyEMMA for Markov state models. |

Applications in Drug Development

The PMF is a critical quantitative tool in modern drug discovery, providing deep insights into the molecular interactions that underpin efficacy.

Quantifying Protein-Ligand Binding Affinity

The absolute binding affinity, measured experimentally as the binding free energy ( \Delta G_{bind} ), can be computed from the PMF difference between the bound and unbound states along a pathway that physically separates the ligand from the protein, once corrections for the standard-state concentration and for any restraints applied during the calculation are included. This provides a rigorous, physics-based alternative to empirical scoring functions and can give more accurate predictions of a drug candidate's potency [23].
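The final bookkeeping step, converting a standard binding free energy into the dissociation constant that experiments report, is simple arithmetic: ( \Delta G^\circ = RT \ln(K_d / C^\circ) ). The sketch below assumes a 1 M standard state and 298.15 K as defaults and that the standard-state and restraint corrections have already been applied; the function names are hypothetical.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol K)


def dissociation_constant(delta_g_bind, temperature=298.15, c_standard=1.0):
    """K_d in mol/L from a standard binding free energy in kcal/mol.

    Uses Delta G = RT ln(K_d / C0), i.e. K_d = C0 * exp(Delta G / RT),
    with an assumed 1 M standard state C0 by default.
    """
    return c_standard * math.exp(delta_g_bind / (R_KCAL * temperature))


def binding_free_energy(kd, temperature=298.15, c_standard=1.0):
    """Inverse relation: Delta G (kcal/mol) from K_d in mol/L."""
    return R_KCAL * temperature * math.log(kd / c_standard)


kd = dissociation_constant(-10.0)
print(kd * 1e9)  # about 47 nM for Delta G = -10 kcal/mol at 298.15 K
```

Because ( K_d ) depends exponentially on ( \Delta G ), an error of about 1.4 kcal/mol at room temperature already changes the predicted affinity by a factor of ten.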

Mapping Allosteric Pathways and Mutational Effects

Allosteric regulation, where a binding event at one site affects function at a distant site, is a key feature of many drug targets. The PMF can be computed along collective variables that describe allosteric transitions, revealing the communication pathways within a protein. Furthermore, by comparing PMFs for wild-type and mutated proteins, researchers can quantitatively predict how mutations alter conformational equilibria and free energy barriers, aiding in the understanding of genetic diseases and resistance mechanisms [23].

The following diagram illustrates a generalized workflow for applying PMF studies to a drug discovery pipeline.

The Potential of Mean Force and the framework of Landau Free Energy provide an indispensable connection between the microscopic world sampled by molecular dynamics and the macroscopic thermodynamic properties that govern biomolecular function and drug action. As this whitepaper has detailed, rigorous statistical mechanics underpins the computational methodologies that allow researchers to extract free energy landscapes from simulations. The integration of these approaches with experimental data and their application to challenges in drug discovery—from predicting binding affinities to understanding allostery—demonstrates their critical role in modern biophysical research. The ongoing development of enhanced sampling algorithms, coupled with increases in computational power and the rise of AI-driven approaches [6] [13], promises to further enhance the accuracy and scope of PMF calculations, solidifying their place as a cornerstone of quantitative molecular science.

Foundations of Non-Equilibrium Statistical Mechanics and Transport Phenomena

Non-equilibrium statistical mechanics provides the fundamental framework for understanding systems driven away from thermal equilibrium, serving as the theoretical bedrock for analyzing transport phenomena—the movement of mass, energy, and momentum within physical systems [26] [27]. Unlike equilibrium states characterized by maximum randomness and zero net fluxes, non-equilibrium states exhibit directional transport and irreversible processes that dominate most real-world systems, from biological organisms to semiconductor devices [28] [27]. This domain connects microscopic particle dynamics with observable macroscopic properties through statistical methods, enabling predictions of system behavior under non-equilibrium conditions across physics, chemistry, engineering, and drug discovery [26] [29].

The field has evolved significantly beyond near-equilibrium thermodynamics to address complex far-from-equilibrium scenarios through innovative approaches. Recent developments incorporate nonlocal and memory effects through integral-differential formulations, variational principles, and advanced computational methods that capture transient dynamics, microscopic fluctuations, and turbulent regimes [30]. These advancements refine our fundamental grasp of irreversible processes at micro and mesoscopic scales while enabling technological progress in diverse areas including pharmaceutical design, energy systems, and materials science [29] [30].

Fundamental Theoretical Frameworks

Foundational Approaches and Equations

Non-equilibrium statistical mechanics encompasses four principal methodological approaches, each with distinct foundational equations and applications as summarized in Table 1 [31].

Table 1: Fundamental Approaches in Non-Equilibrium Statistical Mechanics

| Category | Founder | Basic Equation | Key Concepts | Primary Applications |
|---|---|---|---|---|
| I | Boltzmann | Boltzmann equation | One-particle distribution function, Molecular chaos | Kinetic theory, Dilute gases |
| II | Gibbs | Master equation, Fokker-Planck equation | Density operator, Ensemble | Quantum systems, Chemical reactions |
| III | Einstein | Langevin equation | Random force, Dynamical variable | Brownian motion, Barrier crossing |
| IV | Kubo | Stochastic Liouville equation | Random force, Phase space variable | Magnetic resonance, Spectroscopy |

The Boltzmann equation describes the evolution of the particle distribution function in phase space and introduces irreversibility through molecular chaos assumptions [26] [31]. It forms the basis for deriving macroscopic transport equations and remains fundamental to kinetic theory. The Fokker-Planck equation provides a continuum description of probability evolution, while the Langevin equation offers a stochastic trajectory-based approach that is particularly valuable for modeling Brownian motion and barrier crossing phenomena [31]. For quantum systems, the density operator formalism extends these concepts through approaches like Non-Equilibrium Thermo Field Dynamics (NETFD), which maintains structural resemblance to quantum field theory while incorporating dissipative processes [31].
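The Langevin picture can be illustrated with free Brownian particles obeying ( dv = -\gamma v \, dt + \sqrt{2\gamma k_B T/m} \, dW ). The sketch below uses the exact Ornstein-Uhlenbeck update for each step, so the strength of the random force is tied to the friction ( \gamma ) and temperature; the check is that the velocities relax to the equipartition value ( \langle v^2 \rangle = k_B T/m ). Reduced units, the parameter values, and the function name are assumptions for illustration.

```python
import math
import random


def langevin_velocity_samples(n_particles=200, n_steps=3000, dt=0.01,
                              gamma=1.0, kT=1.0, mass=1.0, seed=3):
    """Velocities of free Brownian particles obeying the Langevin equation.

    dv = -gamma * v dt + sqrt(2 * gamma * kT / mass) dW. Each step uses the exact
    Ornstein-Uhlenbeck update, whose noise amplitude is fixed by gamma and kT
    (fluctuation-dissipation). Particles start at rest; the first 10 / gamma
    time units are discarded as equilibration.
    """
    rng = random.Random(seed)
    decay = math.exp(-gamma * dt)
    noise = math.sqrt(kT / mass * (1.0 - decay * decay))
    burn_in = int(10.0 / (gamma * dt))
    v = [0.0] * n_particles
    samples = []
    for step in range(n_steps):
        v = [vi * decay + noise * rng.gauss(0.0, 1.0) for vi in v]
        if step >= burn_in:
            samples.extend(v)
    return samples


samples = langevin_velocity_samples()
variance = sum(s * s for s in samples) / len(samples)
print(variance)  # close to kT / mass = 1 (equipartition)
```

If the noise amplitude were chosen independently of ( \gamma ) and ( T ), the particles would settle at the wrong temperature; the balance between friction and random force is the fluctuation-dissipation relation in its simplest form.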

Core Transport Phenomena

Transport phenomena encompass three primary modes of transfer governed by constitutive relationships between fluxes and gradients [26]:

Mass Transport: Described by Fick's Laws of diffusion, where the diffusive flux is proportional to the concentration gradient: ( J = -D \frac{\partial c}{\partial x} ). The time evolution of concentration follows ( \frac{\partial c}{\partial t} = D \frac{\partial^2 c}{\partial x^2} ) [26].

Energy Transport: Governed by Fourier's Law of heat conduction, where heat flux is proportional to the temperature gradient: ( q = -k \frac{dT}{dx} ) [26].

Momentum Transport: Characterized by Newton's Law of viscosity, relating shear stress to velocity gradient: ( \tau = \mu \frac{du}{dy} ) [26].

These linear flux-gradient relationships represent the foundational constitutive equations for transport processes near equilibrium, though they undergo significant modification in strongly non-equilibrium regimes [26] [30].
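Fick's second law can be integrated with the simplest explicit finite-difference scheme, which is stable only when ( D \Delta t / \Delta x^2 \le 1/2 ). The sketch below is a minimal illustration (hypothetical function name, zero-flux boundaries via mirrored ghost cells). For a pulse far from the walls, this scheme conserves mass and increases the variance of the profile by exactly ( 2D\Delta t ) per step, matching the continuum result ( \sigma^2 = 2Dt ).

```python
def diffuse_1d(c, D, dx, dt, n_steps):
    """Explicit finite-difference solution of dc/dt = D d2c/dx2 with zero-flux ends."""
    r = D * dt / dx ** 2
    if r > 0.5:
        raise ValueError("unstable: need D * dt / dx**2 <= 0.5")
    c = list(c)
    n = len(c)
    for _ in range(n_steps):
        # ghost cells copy the edge values, so no flux crosses either boundary
        padded = [c[0]] + c + [c[-1]]
        c = [padded[i] + r * (padded[i + 1] - 2.0 * padded[i] + padded[i - 1])
             for i in range(1, n + 1)]
    return c


n_cells, dx, D, dt = 201, 1.0, 0.25, 1.0
c0 = [0.0] * n_cells
c0[100] = 1.0  # unit mass in the central cell
c = diffuse_1d(c0, D, dx, dt, n_steps=200)
mean = sum(i * dx * ci for i, ci in enumerate(c))
var = sum((i * dx - mean) ** 2 * ci for i, ci in enumerate(c))
print(sum(c), var, 2 * D * 200 * dt)  # mass stays 1; variance equals 2 D t = 100
```

The same stencil applies to Fourier's law for temperature, with the thermal diffusivity in place of ( D ).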

Figure 1: Multi-scale framework connecting microscopic dynamics to macroscopic transport

Mathematical Formalisms and Key Relationships

Irreversible Thermodynamics and Linear Response Theory

The framework of irreversible thermodynamics extends classical thermodynamics to non-equilibrium systems through the local equilibrium assumption and entropy production principles [26]. Within this formalism, fluxes respond linearly to thermodynamic forces, ( J_i = \sum_j L_{ij} X_j ), and the Onsager reciprocal relations establish a fundamental symmetry in coupled transport: the phenomenological coefficients satisfy ( L_{ij} = L_{ji} ) as a consequence of microscopic reversibility (in the presence of a magnetic field ( \mathbf{B} ) the relation becomes ( L_{ij}(\mathbf{B}) = L_{ji}(-\mathbf{B}) )) [26]. This formalism provides the theoretical foundation for understanding coupled phenomena such as thermoelectric effects, electrokinetic phenomena, and thermophoresis [26].

Linear response theory describes system behavior under small perturbations from equilibrium. It connects macroscopic response functions to microscopic correlation functions through the fluctuation-dissipation theorem, which in the classical limit reads ( \chi''(\omega) = \frac{\omega}{2k_BT} S(\omega) ). Here ( \chi'' ) is the dissipative (imaginary) part of the response function and ( S(\omega) ) is the spectrum of equilibrium fluctuations [26]. This approach leads to the Green-Kubo relations, which express transport coefficients as integrals of time correlation functions: ( L = \frac{1}{k_BT} \int_0^\infty dt \, \langle J(t)J(0) \rangle ) [26]. The precise prefactor, for example a system-volume factor, depends on the coefficient and on how the flux ( J ) is normalized. These formalisms establish profound connections between spontaneous fluctuations and dissipative properties, fundamentally linking microscopic reversibility to macroscopic irreversibility.

Advanced Theoretical Frameworks

Chapman-Enskog theory provides a systematic perturbation method for solving the Boltzmann equation through expansion in terms of the Knudsen number, yielding transport coefficients expressed through collision integrals [26]. This approach is particularly valuable for dilute gases and plasmas, where particle interactions can be well-characterized. For dense systems, the Zubarev non-equilibrium statistical operator method enables self-consistent description of kinetic and hydrodynamic processes, facilitating derivation of generalized transport equations that remain valid across wider parameter ranges [32].
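For a dilute hard-sphere gas, the first Chapman-Enskog approximation gives a closed-form viscosity, ( \eta = \frac{5}{16 d^2}\sqrt{\frac{m k_B T}{\pi}} ). The sketch below evaluates it for argon-like parameters. The 0.36 nm effective diameter is an assumed value, not a fitted one. Two features follow directly from the formula. First, ( \eta ) does not depend on pressure. Second, it grows as ( \sqrt{T} ), matching the "Gas: increases with T" entry in Table 2. Real gases typically show a somewhat stronger temperature dependence because their molecules are not hard spheres.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K (exact SI value)
AMU = 1.66053906660e-27   # atomic mass unit, kg

def hard_sphere_viscosity(mass_kg, diameter_m, T):
    """First-order Chapman-Enskog viscosity of a dilute hard-sphere gas, in Pa s:
    eta = (5 / (16 d^2)) * sqrt(m k_B T / pi)
    """
    return 5.0 / (16.0 * diameter_m**2) * math.sqrt(mass_kg * K_B * T / math.pi)

# Argon-like gas; the 0.36 nm diameter is an assumed effective value.
m_ar = 39.948 * AMU
eta_273 = hard_sphere_viscosity(m_ar, 3.6e-10, 273.15)
eta_4T = hard_sphere_viscosity(m_ar, 3.6e-10, 4 * 273.15)
print(f"eta(273 K) ~ {eta_273:.2e} Pa s; eta(4T)/eta(T) = {eta_4T / eta_273:.2f}")
```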

The mean free path concept represents the average distance particles travel between collisions, serving as a key parameter determining the validity of continuum approximations and playing a critical role in rarefied gas dynamics and plasma physics [26]. This concept enables derivation of transport coefficients from first principles using kinetic theory, with temperature and pressure dependencies emerging naturally from the analysis.
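As a worked example of how the mean free path decides whether continuum transport equations apply, the sketch below uses the hard-sphere result ( \lambda = \frac{k_B T}{\sqrt{2}\,\pi d^2 p} ). It compares ( \lambda ) with a characteristic length ( L ) through the Knudsen number ( Kn = \lambda / L ). The regime thresholds in `flow_regime` are conventional, approximate boundaries. The gas parameters reuse an assumed argon-like diameter, and the channel sizes are arbitrary illustrations.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def mean_free_path(T, p, d):
    """Hard-sphere mean free path lambda = k_B T / (sqrt(2) pi d^2 p), in metres."""
    return K_B * T / (math.sqrt(2.0) * math.pi * d**2 * p)

def flow_regime(knudsen):
    """Conventional (approximate) Knudsen-number regimes for gas flow."""
    if knudsen < 0.01:
        return "continuum"
    if knudsen < 0.1:
        return "slip"
    if knudsen < 10.0:
        return "transition"
    return "free molecular"

lam = mean_free_path(273.15, 101325.0, 3.6e-10)   # argon-like gas at 1 atm (assumed d)
for length in (1e-3, 1e-6, 1e-8):                  # 1 mm, 1 um, 10 nm channels
    kn = lam / length
    print(f"L = {length:.0e} m: Kn = {kn:.3g} -> {flow_regime(kn)}")
```

Halving the pressure doubles ( \lambda ), which is why continuum descriptions break down first in rarefied gases and in very small geometries.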

Quantitative Transport Properties

Transport Coefficients and Their Relationships

Transport coefficients quantitatively characterize a material's response to applied gradients, enabling prediction of heat, mass, and momentum transfer rates in diverse systems [26]. These coefficients can be derived from statistical mechanics or measured experimentally, typically exhibiting characteristic temperature and pressure dependencies that provide insight into underlying microscopic mechanisms.

Table 2: Key Transport Coefficients and Their Physical Significance

| Transport Coefficient | Governing Law | Mathematical Expression | Microscopic Interpretation | Temperature Dependence |
|---|---|---|---|---|
| Diffusion Coefficient (D) | Fick's Law | ( J = -D \frac{\partial c}{\partial x} ) | Mean square displacement (1D): ( \langle x^2 \rangle = 2Dt ) | Increases with T (often Arrhenius-like in liquids and solids) |
| Thermal Conductivity (k) | Fourier's Law | ( q = -k \frac{dT}{dx} ) | Related to specific heat and mean free path | Complex T dependence |
| Viscosity (μ) | Newton's Law | ( \tau = \mu \frac{du}{dy} ) | Momentum transfer between fluid layers | Gas: increases with T; liquid: decreases with T |
| Electrical Conductivity (σ) | Ohm's Law | ( J = \sigma E ) | Charge carrier mobility and density | Metal: decreases with T; semiconductor: increases with T |

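The Einstein relation connects diffusion and mobility through ( D = \mu k_B T ). Here ( \mu ) denotes the particle mobility (drift velocity per unit applied force), not the viscosity listed in Table 2. The relation exemplifies the profound connections between different transport coefficients that emerge from statistical mechanical principles [26]. Similarly, the Stokes-Einstein relation ( D = \frac{k_B T}{6\pi\eta r} ) describes the diffusion of a spherical particle in a liquid, where ( \eta ) is the solvent viscosity and ( r ) the hydrodynamic radius. It connects Brownian motion to fluid viscosity and particle size [26].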
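The Stokes-Einstein and Einstein relations turn into a quick order-of-magnitude estimate for a solute such as a small protein. The inputs are assumptions chosen for illustration: water at 298.15 K with ( \eta \approx 0.89 ) mPa·s, and a hydrodynamic radius of 2 nm. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def stokes_einstein_D(T, eta, r):
    """Translational diffusion coefficient of a sphere, D = k_B T / (6 pi eta r), in m^2/s."""
    return K_B * T / (6.0 * math.pi * eta * r)

def mobility_from_D(D, T):
    """Einstein relation rearranged: mobility (velocity per unit force) = D / (k_B T)."""
    return D / (K_B * T)

# Assumed inputs: water at 298.15 K (eta ~ 0.89 mPa s), hydrodynamic radius 2 nm.
D = stokes_einstein_D(298.15, 0.89e-3, 2e-9)
print(f"D ~ {D:.2e} m^2/s = {D * 1e4:.2e} cm^2/s")
print(f"mobility ~ {mobility_from_D(D, 298.15):.2e} m/(N s)")
```

The mobility computed this way equals ( 1/(6\pi\eta r) ), the inverse Stokes friction coefficient. This shows that the two relations are consistent with each other.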

Computational Methodologies for Transport Properties

Advanced computational approaches enable prediction of transport properties from molecular simulations, bridging microscopic interactions with macroscopic behavior [30]. The mean first passage time (MFPT) methodology computes transition rates between states by combining equilibrium quantities, such as a potential of mean force, with non-equilibrium statistical mechanics. This avoids computationally expensive direct simulation of rare transitions [31]. For a Smoluchowski process describing overdamped dynamics on a potential of mean force, the MFPT from ( q_0 ) to ( q_F ) satisfies ( \hat{L}^\dagger \tau_{\mathrm{MFP}}(q_F|q_0) = -1 ) with boundary condition ( \tau_{\mathrm{MFP}}(q_F|q_F) = 0 ). Here ( \hat{L}^\dagger ) is the adjoint (backward) Fokker-Planck operator acting on the starting point ( q_0 ) [31].
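In one dimension the MFPT equation can be solved by quadrature. As a sketch, assume a constant diffusion coefficient ( D ), a reflecting wall at ( a < q_0 ), and an absorbing target at ( b = q_F ). Then ( \tau(x) = \frac{1}{D}\int_x^b dy\, e^{\beta U(y)} \int_a^y dz\, e^{-\beta U(z)} ). The helper names, the grid size, and the toy double-well potential below are hypothetical. The free-diffusion case provides an exact check: the MFPT is ( (b-a)^2/2D ).

```python
import math

def mfpt_profile(potential, a, b, D=1.0, beta=1.0, n=3001):
    """MFPT tau(x) to an absorbing boundary at b, with a reflecting wall at a.

    Solves D e^{beta U} d/dx (e^{-beta U} dtau/dx) = -1 with tau(b) = 0 and
    tau'(a) = 0:  tau(x) = (1/D) int_x^b dy e^{beta U(y)} int_a^y dz e^{-beta U(z)}.
    Both integrals use cumulative trapezoid rules on a uniform grid.
    """
    h = (b - a) / (n - 1)
    xs = [a + i * h for i in range(n)]
    bu = [beta * potential(x) for x in xs]
    inner = [0.0] * n                      # int_a^{x_i} exp(-beta U) dz
    for i in range(1, n):
        inner[i] = inner[i - 1] + 0.5 * h * (math.exp(-bu[i - 1]) + math.exp(-bu[i]))
    outer = [math.exp(bu[i]) * inner[i] for i in range(n)]
    tau = [0.0] * n                        # integrate backwards from b so tau(b) = 0
    for i in range(n - 2, -1, -1):
        tau[i] = tau[i + 1] + 0.5 * h * (outer[i] + outer[i + 1])
    return xs, [t / D for t in tau]

def mfpt_from(x0, potential, a, b, **kwargs):
    """MFPT starting from the grid point nearest to x0."""
    xs, tau = mfpt_profile(potential, a, b, **kwargs)
    i = min(range(len(xs)), key=lambda k: abs(xs[k] - x0))
    return tau[i]

# Sanity check: free diffusion from a = 0 to b = 1 with D = 1 gives 1/2.
print(mfpt_from(0.0, lambda x: 0.0, 0.0, 1.0))

# Toy double well U(x) = h (x^2 - 1)^2 in units of k_B T: start in the left
# minimum, absorb at the right minimum, reflect at x = -2.
for h_barrier in (2.0, 4.0):
    t = mfpt_from(-1.0, lambda x: h_barrier * (x * x - 1.0) ** 2, -2.0, 1.0)
    print(f"barrier {h_barrier} kT: MFPT ~ {t:.1f}")
```

Raising the barrier lengthens the MFPT roughly exponentially, in line with Kramers-type behavior. The rate estimate is then ( k \approx 1/\tau_{\mathrm{MFP}} ).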

Green-Kubo relations provide an alternative approach, expressing transport coefficients as time integrals of autocorrelation functions: ( L = \frac{1}{k_BT} \int_0^\infty dt \, \langle J(t)J(0) \rangle ), where ( J(t) ) represents the appropriate flux [26]. This formalism enables extraction of transport coefficients from equilibrium molecular dynamics simulations through careful analysis of fluctuation statistics.
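For self-diffusion in one dimension, the Green-Kubo relation reads ( D = \int_0^\infty \langle v(t)v(0) \rangle\, dt ). Equivalently, the mobility equals ( \frac{1}{k_BT}\int_0^\infty \langle v(t)v(0) \rangle\, dt ), which is the generic form with ( J = v ). The Einstein relation then recovers ( D ). The sketch tests this estimator on synthetic velocities from a free Langevin particle (an Ornstein-Uhlenbeck process) in reduced units, for which ( D = k_BT/(m\gamma) ) is known exactly. In a real analysis the velocities would come from an equilibrium MD trajectory. Truncating the integral at five relaxation times is an assumed, problem-specific choice.

```python
import math
import operator
import random

def ou_velocities(n_steps, dt, gamma, kT_over_m, seed=0):
    """Velocities of a free Langevin particle, using the exact Ornstein-Uhlenbeck update."""
    rng = random.Random(seed)
    decay = math.exp(-gamma * dt)
    noise = math.sqrt(kT_over_m * (1.0 - decay * decay))
    v = rng.gauss(0.0, math.sqrt(kT_over_m))  # start from the Maxwell-Boltzmann distribution
    out = []
    for _ in range(n_steps):
        v = decay * v + noise * rng.gauss(0.0, 1.0)
        out.append(v)
    return out

def autocorrelation(x, max_lag):
    """C(k) = <x(t + k) x(t)>, averaged over all available time origins."""
    n = len(x)
    return [sum(map(operator.mul, x[: n - k], x[k:])) / (n - k) for k in range(max_lag + 1)]

def green_kubo_integral(c, dt):
    """Trapezoid-rule integral of a correlation function sampled every dt."""
    return dt * (0.5 * c[0] + sum(c[1:-1]) + 0.5 * c[-1])

gamma, kT_over_m, dt = 1.0, 1.0, 0.05
v = ou_velocities(100_000, dt, gamma, kT_over_m)
vacf = autocorrelation(v, max_lag=100)   # integrate out to t = 5 / gamma
D_gk = green_kubo_integral(vacf, dt)
print(f"Green-Kubo D = {D_gk:.3f}; exact kT/(m gamma) = {kT_over_m / gamma:.3f}")
```

In practice the cutoff trades bias against noise. Integrating too briefly misses the correlation tail, while integrating too long mostly adds statistical noise from the poorly sampled long-lag values.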

Experimental and Computational Methodologies

Nonequilibrium Switching for Binding Free Energy Calculations

Nonequilibrium switching (NES) represents a transformative methodology for predicting binding free energies in drug discovery applications. It replaces slow equilibrium simulations with rapid, parallel transitions, achieving 5-10x higher throughput [29]. Traditional methods such as free energy perturbation (FEP) and thermodynamic integration (TI) require every intermediate state to reach thermodynamic equilibrium. NES instead employs many short, bidirectional transformations that directly connect the end states, without requiring equilibrium at intermediate values of the switching parameter [29]. Although each switch is driven far from equilibrium, the collective work statistics yield accurate free energy differences through the Jarzynski equality, ( \Delta F = -k_B T \ln \langle e^{-W/k_B T} \rangle ), and related fluctuation theorems such as the Crooks relation. This holds provided that each switch starts from an equilibrium ensemble of its initial state.
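The Jarzynski estimator itself is short. The sketch evaluates ( \Delta F = -\beta^{-1} \ln \langle e^{-\beta W} \rangle ) with a log-sum-exp step for numerical stability. It tests the estimator on synthetic Gaussian work values, which are an assumption made purely for testing. For Gaussian work, ( \Delta F = \langle W \rangle - \beta\sigma_W^2/2 ) holds exactly, so the target is known.

```python
import math
import random

def jarzynski_delta_f(works, beta=1.0):
    """Jarzynski estimate Delta F = -(1/beta) ln < exp(-beta W) >, via log-sum-exp."""
    a = [-beta * w for w in works]
    a_max = max(a)
    log_mean = a_max + math.log(sum(math.exp(ai - a_max) for ai in a) / len(a))
    return -log_mean / beta

# Synthetic Gaussian work values (testing assumption): W ~ N(dF + beta s^2 / 2, s^2).
rng = random.Random(1)
beta, dF_true, sigma = 1.0, 2.0, 1.0
works = [rng.gauss(dF_true + beta * sigma**2 / 2, sigma) for _ in range(20_000)]
dF = jarzynski_delta_f(works, beta)
mean_w = sum(works) / len(works)
print(f"Jarzynski dF = {dF:.3f} (true {dF_true}); <W> = {mean_w:.3f} >= dF")
```

The exponential average is dominated by rare low-work trajectories. When the work spread is many ( k_BT ), the one-sided estimate converges slowly and is biased. This is one reason bidirectional estimators are generally preferred for forward and reverse switches.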

The NES protocol for relative binding free energy (RBFE) calculation involves several critical steps [29]:

System Preparation: Construct hybrid topology connecting initial and final states through alchemical transformation pathway.

Nonequilibrium Trajectory Generation: Perform multiple independent, fast switching simulations (typically tens to hundreds of picoseconds) in both forward and reverse directions.

Work Measurement: Calculate work values for each switching trajectory using ( W = \int_0^\tau dt \, \frac{\partial H}{\partial \lambda} \dot{\lambda} ), where ( H ) is the Hamiltonian and ( \lambda ) the switching parameter.

Free Energy Estimation: Apply Bayesian bootstrap methods to work distributions to estimate free energy differences with uncertainty quantification.
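The specific estimator used in [29] is not detailed here. As a generic sketch of the last two steps, the code below combines forward and reverse work values with the Bennett acceptance ratio (BAR), a standard bidirectional estimator consistent with the Crooks relation. It attaches an uncertainty using Rubin's Bayesian bootstrap, which reweights samples with Dirichlet(1, ..., 1) weights. All function names are hypothetical. The Crooks-consistent Gaussian work data are synthetic and included only for testing.

```python
import math
import random

def _fermi(x):
    """Numerically stable 1 / (1 + exp(x))."""
    if x > 0:
        e = math.exp(-x)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(x))

def bar_delta_f(w_f, w_r, beta=1.0, wt_f=None, wt_r=None, tol=1e-6):
    """Bennett acceptance ratio estimate of Delta F from forward/reverse work values.

    Solves sum_F f(M + beta (W_F - dF)) = sum_R f(-M + beta (W_R + dF)), with
    f(x) = 1 / (1 + e^x) and M = ln(n_F / n_R), by bisection. Optional weights
    (each set summing to its sample size) allow bootstrap reweighting.
    """
    n_f, n_r = len(w_f), len(w_r)
    wt_f = wt_f if wt_f is not None else [1.0] * n_f
    wt_r = wt_r if wt_r is not None else [1.0] * n_r
    m = math.log(n_f / n_r)

    def imbalance(df):  # monotonically increasing in df
        lhs = sum(a * _fermi(m + beta * (w - df)) for a, w in zip(wt_f, w_f))
        rhs = sum(b * _fermi(-m + beta * (w + df)) for b, w in zip(wt_r, w_r))
        return lhs - rhs

    lo = min(min(w_f), -max(w_r)) - 20.0 / beta   # imbalance < 0 here
    hi = max(max(w_f), -min(w_r)) + 20.0 / beta   # imbalance > 0 here
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if imbalance(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def bayesian_bootstrap_bar(w_f, w_r, beta=1.0, n_boot=100, seed=0):
    """BAR point estimate plus a Bayesian-bootstrap standard deviation."""
    rng = random.Random(seed)

    def dirichlet_weights(n):
        # Dirichlet(1, ..., 1) draw via normalized Exp(1) variates, rescaled to sum to n.
        g = [rng.expovariate(1.0) for _ in range(n)]
        s = sum(g)
        return [n * gi / s for gi in g]

    draws = [bar_delta_f(w_f, w_r, beta, dirichlet_weights(len(w_f)), dirichlet_weights(len(w_r)))
             for _ in range(n_boot)]
    mean = sum(draws) / n_boot
    sd = math.sqrt(sum((d - mean) ** 2 for d in draws) / (n_boot - 1))
    return bar_delta_f(w_f, w_r, beta), sd

# Synthetic Crooks-consistent Gaussian work (testing assumption):
# W_F ~ N(dF + beta s^2 / 2, s^2) and W_R ~ N(-dF + beta s^2 / 2, s^2).
rng = random.Random(7)
beta, dF_true, s = 1.0, 3.0, 1.5
w_f = [rng.gauss(dF_true + beta * s**2 / 2, s) for _ in range(200)]
w_r = [rng.gauss(-dF_true + beta * s**2 / 2, s) for _ in range(200)]
dF, err = bayesian_bootstrap_bar(w_f, w_r, beta)
print(f"BAR dF = {dF:.2f} +/- {err:.2f} (true {dF_true})")
```

Because the switches are independent, each bootstrap replicate only reweights existing work values. Uncertainty estimates can therefore be updated cheaply as new trajectories finish.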

This approach offers significant advantages for drug discovery pipelines, including enhanced sampling efficiency, rapid feedback through partial results, inherent scalability through massive parallelism, and resilience to individual simulation failures [29].

Figure 2: NES workflow for binding free energy calculation

Generalized Collective Mode Analysis

The method of generalized collective modes provides a computer-adapted formalism for studying spectra of collective excitations, time correlation functions, and wave-vector/frequency dependent transport coefficients in dense liquids and mixtures [32]. This approach enables investigation of dynamical processes across wide ranges of wave-vector and frequency, from hydrodynamic regimes to limiting Gaussian behavior, without adjustable parameters. Applications include simple fluids, semi-quantum and magnetic fluids, liquid metals, alloys, and multi-component mixtures, with demonstrated agreement with computer simulation data [32].

Key achievements of this methodology include clarification of shear wave and "heat wave" mechanisms in simple fluids, explanation of "fast sound" phenomena in binary mixtures with large mass differences, and derivation of analytical solutions for dynamical structure factors in magnetic fluids [32]. For molecular systems like water, explicit accounting of atomic structure within molecules and non-Markovian effects in kinetic memory kernels enables quantitative calculation of bulk viscosity, thermal conductivity, and dynamical structure factors across complete frequency and wave-vector ranges [32].

Research Reagents and Computational Tools

Modern research in non-equilibrium statistical mechanics and transport phenomena employs sophisticated computational tools and theoretical frameworks that constitute the essential "research reagents" for advancing the field.

Table 3: Key Research Reagents and Methodologies in Non-Equilibrium Statistical Mechanics

| Research Reagent | Type | Function | Application Examples |
|---|---|---|---|
| Boltzmann Equation | Theoretical Framework | Describes evolution of particle distribution in phase space | Kinetic theory of gases, Plasma physics |
| Langevin Equation | Stochastic Model | Models Brownian motion with random forces | Barrier crossing, Polymer dynamics |
| Fokker-Planck Equation | Differential Equation | Evolves probability distributions in state space | Chemical reactions, Particle settling |
| Green-Kubo Relations | Analytical Method | Extracts transport coefficients from correlations | Viscosity, conductivity from MD |
| Nonequilibrium Switching | Computational Protocol | Accelerates free energy calculations | Drug binding affinity prediction |
| Generalized Collective Modes | Computational Formalism | Calculates dynamical properties of liquids | Collective excitations in water |
| Mean First Passage Time | Analytical/Numerical Method | Computes transition rates between states | Protein folding, Barrier crossing |

These methodological reagents enable investigation of diverse non-equilibrium phenomena across multiple scales, from molecular rearrangements to continuum transport processes. Their implementation typically requires integration of theoretical insight with advanced computational resources, particularly for complex systems with strong interactions or multi-scale characteristics.

Applications in Molecular Biophysics and Drug Discovery

Biophysical Applications and Machine Learning Integration

Non-equilibrium statistical mechanics provides the fundamental framework for understanding biological processes at the molecular level, where true equilibrium states are rare and most functional processes occur under steady-state conditions with constant fluxes [33] [27]. Key application areas include protein folding, enzymatic catalysis, allostery, signal transduction, RNA transcription, and gene expression regulation [33]. These processes inherently involve thermal fluctuations that demand statistical mechanical treatment for comprehensive understanding.

The field currently stands at a critical transition where traditional theory-based approaches are increasingly complemented by machine learning methods [33]. AI techniques are being employed to accelerate calculations, perform standard analyses more efficiently, provide novel perspectives on established problems, and even generate new knowledge, from molecular structures to thermodynamic functions [33]. This integration is particularly valuable for addressing the challenges posed by high-dimensional configuration spaces and complex free energy landscapes characteristic of biomolecular systems.

Pharmaceutical Development Applications

In pharmaceutical research, nonequilibrium statistical mechanics enables more efficient and accurate prediction of drug-target binding affinities through methods like nonequilibrium switching [29]. Traditional relative binding free energy (RBFE) calculations using free energy perturbation or thermodynamic integration require extensive sampling of intermediate states at thermodynamic equilibrium, consuming substantial computational resources [29]. The NES approach transforms this paradigm by leveraging short, independent, far-from-equilibrium transitions that collectively yield accurate free energy estimates through sophisticated statistical analysis.

This methodology offers transformative potential for drug discovery pipelines by enabling evaluation of more compounds within fixed computational budgets, providing faster feedback on promising candidates, and supporting more confident decisions when experimental resources are limited [29]. The inherent scalability and fault tolerance of NES aligns particularly well with modern distributed computing infrastructures, potentially reducing discovery timelines and costs while expanding explorable chemical space [29].

Emerging Frontiers and Research Directions

Current Research Trends and Innovations

Contemporary research in non-equilibrium statistical mechanics and transport phenomena reveals several exciting frontiers, including unexpected quantum effects like the strong Mpemba effect (accelerated relaxation through specific initial conditions) and scale-invariant fluctuation patterns during diffusive transport under microgravity conditions [30]. These phenomena challenge conventional understanding and suggest new principles for controlling non-equilibrium systems.

Innovative approaches bridging microscopic interactions with macroscopic behavior include machine learning integration with experimental databases and molecular dynamics simulations for high-fidelity prediction of transport properties in complex fluids [30]. Advanced numerical schemes, such as asymptotic preserving methods for Euler-Poisson-Boltzmann systems, enable accurate simulation of carrier transport in semiconductor devices under challenging quasi-neutral conditions [30]. Theoretical advances employing polynomial optimization techniques provide nearly sharp bounds on heat transport in convective systems, refining understanding of turbulence and energy dissipation in Rayleigh-Bénard convection [30].

Future Research Trajectories

The evolving landscape of non-equilibrium statistical mechanics points toward several promising research directions:

Multiscale Integration: Developing unified frameworks connecting quantum, molecular, and continuum descriptions of non-equilibrium processes across temporal and spatial scales.

Machine Learning Enhancement: Creating hybrid approaches that leverage AI for accelerated sampling, feature identification, and model reduction while maintaining physical interpretability.

Quantum Non-Equilibrium Phenomena: Exploring quantum transport, coherence, and information processing in strongly driven quantum systems.