Mastering MLIP Training Set Generation: Strategies for Accurate Drug Discovery & Materials Science

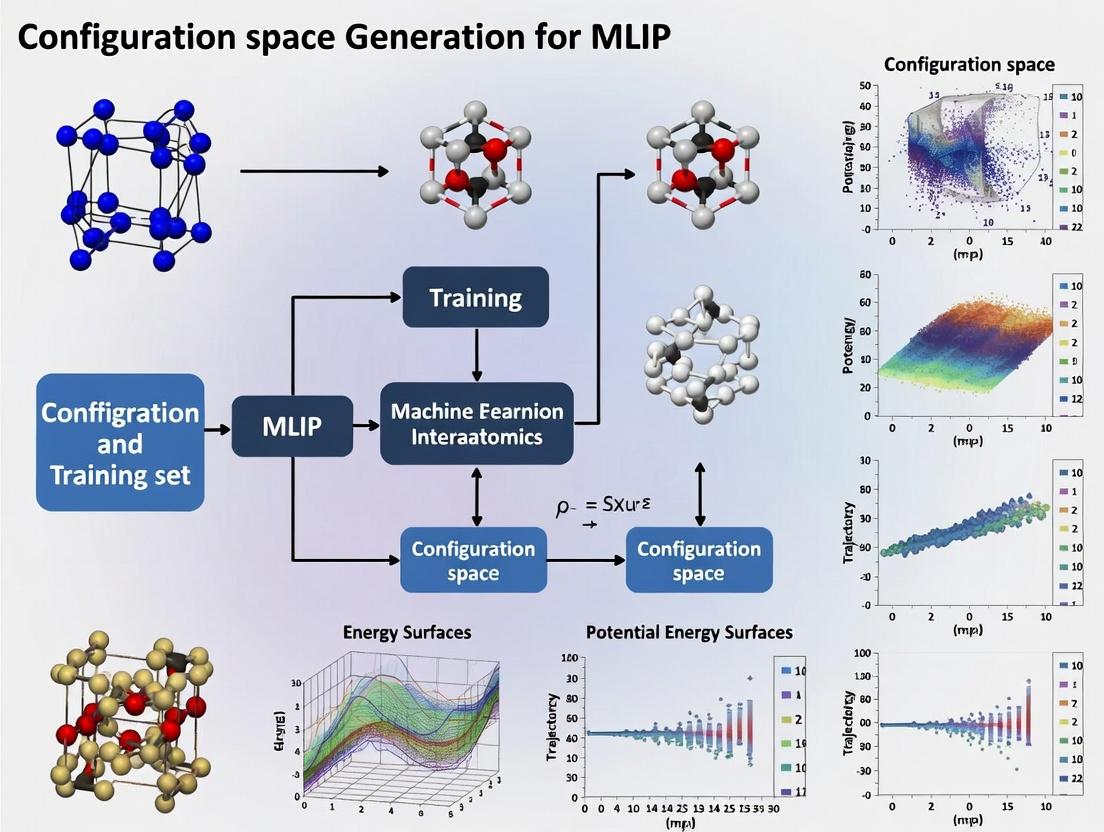


Abstract

This comprehensive guide explores the critical process of configuration space generation for Machine Learning Interatomic Potentials (MLIPs). Aimed at computational researchers, materials scientists, and drug development professionals, we detail foundational concepts, advanced methodological workflows, optimization strategies for common pitfalls, and rigorous validation techniques. The article provides a practical roadmap to build robust, data-efficient, and physically accurate training sets that power reliable MLIPs for biomedical simulations and materials discovery, enabling faster innovation cycles.

Understanding MLIP Training Sets: Why Configuration Space is Everything

Within the broader thesis on Machine Learning Interatomic Potential (MLIP) training set generation, the configuration space is the foundational set of all atomic configurations used to train, validate, and test the potential. It defines the scope of the potential's applicability (its transferability) by encompassing the relevant geometries, elemental compositions, energies, and forces that the MLIP must learn. A poorly sampled configuration space leads to unreliable extrapolation and poor performance in production simulations, such as drug discovery workflows involving protein-ligand dynamics or material stability.

Definition and Quantitative Dimensions

A configuration space for an MLIP is a high-dimensional manifold defined by atomic coordinates, cell vectors, and chemical species. Its sampling is characterized by key quantitative descriptors.

Table 1: Core Dimensions of an MLIP Configuration Space

| Dimension | Description | Typical Metric/Data Type |

|---|---|---|

| Structural Diversity | Coverage of relevant bond lengths, angles, dihedrals, polyhedra. | Radial Distribution Function (RDF), Angle Distribution Histograms. |

| Compositional Diversity | Range of chemical elements and stoichiometries. | Elemental pair counts, stoichiometry distribution. |

| Energy Range | Span of potential energies per atom (or relative energies). | min/max/mean/std of energy/atom (eV). |

| Force Range | Span of interatomic force magnitudes. | min/max/mean/std of force components (eV/Å). |

| Phase Space Coverage | Inclusion of different phases (crystalline, amorphous, liquid), surfaces, defects. | Classification label per configuration. |

| Temporal/Disorder | Sampling from molecular dynamics (MD) trajectories at various temperatures. | Temperature (K), root-mean-square displacement (Å). |
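
As a rough illustration of the energy and force rows in Table 1, the sketch below summarizes per-atom energies and force components for a toy dataset. The dictionary layout (`energy`, `forces`) and the helper names `summarize` and `summarize_dataset` are assumptions made for this example, not part of any specific MLIP package.

```python
import statistics

def summarize(values):
    """Return min, max, mean and sample standard deviation of a sequence."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.fmean(values),
        "std": statistics.stdev(values),
    }

def summarize_dataset(configs):
    """configs: list of dicts with 'energy' (eV) and 'forces' ([fx, fy, fz] per atom, eV/Å)."""
    energy_per_atom = [c["energy"] / len(c["forces"]) for c in configs]
    force_components = [f for c in configs for atom in c["forces"] for f in atom]
    return summarize(energy_per_atom), summarize(force_components)

# Toy data (hypothetical values, two atoms per configuration)
toy = [
    {"energy": -10.0, "forces": [[0.1, 0.0, -0.1], [-0.1, 0.0, 0.1]]},
    {"energy": -9.0, "forces": [[0.3, 0.0, 0.0], [-0.3, 0.0, 0.0]]},
]
energy_stats, force_stats = summarize_dataset(toy)
print(energy_stats)
print(force_stats)
```

A narrow energy or force range in such a summary is an early hint that the dataset samples only near-equilibrium structures.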

Table 2: Source Data for Configuration Space Generation (Comparative)

| Source Method | Data Produced | Computational Cost | Relevance to Drug Development |

|---|---|---|---|

| Ab Initio MD | Accurate energies/forces for small systems. | Very High | Benchmarking, small ligand/active site. |

| Density Functional Theory (DFT) | Single-point calculations for diverse geometries. | High | Ligand conformation, protein-ligand binding poses. |

| Active Learning | Iteratively selected configurations from candidate explorations. | Medium (focused) | Efficiently exploring reaction pathways or free energy landscapes. |

| Classical MD with Legacy FF | Large volumes of structural data (forces are less reliable). | Low | Initial sampling of large biomolecular systems (e.g., protein folding). |

Experimental Protocols for Configuration Space Generation

Protocol 3.1: Active Learning Loop for MLIP Training Set Curation

Purpose: To iteratively build a minimal yet comprehensive configuration space that targets the MLIP's error.

Materials: Initial ab initio dataset, pre-trained MLIP (seed model), candidate pool generator (e.g., high-T MD, random structure search), Quantum Mechanics (QM) calculator (DFT).

Procedure:

- Initialization: Train a seed MLIP on a small, diverse ab initio dataset (Protocol 3.2).

- Candidate Generation: Run exploration simulations (e.g., MD at relevant temperatures, metadynamics) using the current MLIP to generate a large pool of candidate atomic configurations not present in the training set.

- Uncertainty Quantification: For each candidate, compute the MLIP's predictive uncertainty (e.g., using the variance from a committee of models (ΔE) or single-model deviation metrics).

- Selection: Rank candidates by uncertainty and select the top N (e.g., 10-100) configurations that exceed a predefined uncertainty threshold (σ_threshold).

- QM Calculation: Perform high-fidelity QM single-point calculations (or short MD) on the selected configurations to obtain target energies and forces.

- Augmentation: Add these new {configuration, energy, forces} data points to the training set.

- Retraining: Retrain the MLIP on the augmented dataset.

- Convergence Check: Evaluate MLIP performance on a held-out validation set. If error metrics are satisfactory and no new high-uncertainty configurations are found, stop. Otherwise, return to the Candidate Generation step.
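
The uncertainty and selection steps of this loop can be sketched in a few lines. This is a minimal illustration, assuming committee predictions are already available as plain lists of energies; the function name `select_for_labeling` and the toy numbers are hypothetical.

```python
import statistics

def select_for_labeling(committee_energies, sigma_threshold, top_n):
    """Pick the most uncertain candidates.

    committee_energies: {config_id: [E_model_1, E_model_2, ...]} in eV/atom.
    Returns up to top_n ids whose committee std. dev. exceeds sigma_threshold,
    ordered from most to least uncertain.
    """
    scored = []
    for config_id, predictions in committee_energies.items():
        sigma = statistics.stdev(predictions)  # committee disagreement
        if sigma > sigma_threshold:
            scored.append((sigma, config_id))
    scored.sort(reverse=True)
    return [config_id for _, config_id in scored[:top_n]]

# Toy candidate pool with three committee members (hypothetical values)
pool = {
    "a": [-3.00, -3.01, -2.99],
    "b": [-3.0, -3.3, -2.7],
    "c": [-3.0, -3.1, -2.9],
    "d": [-3.0, -3.0, -3.0],
}
selected = select_for_labeling(pool, sigma_threshold=0.05, top_n=2)
print(selected)
```

Only the selected configurations would then be passed to the QM calculator, which is what keeps the labeling cost focused.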

Protocol 3.2: Generating a Foundational Dataset via Ab Initio Molecular Dynamics (AIMD)

Purpose: To create an initial training set with accurate thermodynamic sampling for a specific chemical composition.

Materials: DFT software (e.g., VASP, CP2K), structure file for initial configuration.

Procedure:

- System Setup: Define initial atomic coordinates, periodic boundary conditions, and simulation cell size. Select appropriate DFT functional (e.g., PBE) and pseudopotentials.

- Equilibration: Run AIMD in the NVT ensemble (using a Nosé-Hoover thermostat) at the target temperature (e.g., 300 K or elevated for faster sampling) for 5-10 ps until properties (temperature, potential energy) stabilize.

- Production Run: Continue AIMD in the NVE or NVT ensemble for a duration sufficient to sample relevant dynamics (e.g., 20-100 ps). The timestep is typically 0.5-1.0 fs.

- Configuration Sampling: Extract atomic snapshots (frames) from the trajectory at regular intervals (e.g., every 10-100 fs). The interval should be longer than the correlation time of the fastest vibrations.

- Data Curation: For each snapshot, store atomic positions, cell vectors, species, total energy, and atomic forces (directly from the DFT calculation).

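- (Optional) Augmentation: Apply symmetry operations (e.g., rotations) to snapshots, transforming the forces and cell vectors with the same operation, to increase data diversity without new calculations. This only helps models that are not invariant or equivariant by construction.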
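
For the optional augmentation step, the following sketch shows one way to rotate a snapshot so that positions, forces, and cell vectors stay consistent. It assumes row-vector arrays in NumPy; `random_rotation` and `rotate_snapshot` are hypothetical helper names, and the random arrays stand in for real AIMD data.

```python
import numpy as np

def random_rotation(rng):
    """Draw a proper rotation matrix (determinant +1) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))      # fix column signs of the QR factor
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]           # turn a reflection into a rotation
    return q

def rotate_snapshot(positions, forces, cell, rotation):
    """Rotate row-vector arrays; the total energy is unchanged by a rotation."""
    return positions @ rotation.T, forces @ rotation.T, cell @ rotation.T

rng = np.random.default_rng(0)
positions = rng.normal(size=(4, 3))  # placeholder coordinates (Å)
forces = rng.normal(size=(4, 3))     # placeholder forces (eV/Å)
cell = 10.0 * np.eye(3)              # rows are lattice vectors
R = random_rotation(rng)
new_pos, new_forces, new_cell = rotate_snapshot(positions, forces, cell, R)
```

Force magnitudes and interatomic distances are preserved, so the original DFT energy label can be reused for the rotated copy.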

Visualizations

Active Learning Loop for MLIP Development

MLIP Training Set Construction Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools for MLIP Configuration Space Research

| Item (Software/Resource) | Category | Function in Configuration Space Work |

|---|---|---|

| VASP / CP2K / Quantum ESPRESSO | QM Calculator | Generates high-accuracy reference data (energy, forces) for atomic configurations. |

| LAMMPS / GROMACS | Molecular Dynamics Engine | Performs exploration sampling using interim MLIPs or legacy force fields to generate candidate structures. |

| ASE (Atomic Simulation Environment) | Python Library | Central hub for manipulating atoms, interfacing calculators, and workflow automation. |

| DeePMD-kit / MACE / NequIP | MLIP Framework | Provides the model architecture, training, and uncertainty estimation capabilities for active learning. |

| pymatgen / Pymatflow | Materials Informatics | Aids in generating initial structure sets, analyzing symmetry, and calculating structural descriptors. |

| DP-GEN / FLARE | Active Learning Automation | Specialized packages for automating the active learning loop described in Protocol 3.1. |

| Jupyter Notebook / MLflow | Computational Lab Notebook | Enables reproducible experimentation, tracking of training iterations, and result visualization. |


Within the broader research on Machine Learning Interatomic Potential (MLIP) training set configuration space generation, a fundamental axiom emerges: the predictive accuracy and transferability of an MLIP are direct, bounded functions of the quality and diversity of its training data. This application note details the quantitative relationships and provides protocols for constructing training sets that maximize MLIP performance for materials science and drug development applications.

Table 1: Impact of Training Set Diversity on Error Metrics for a Generalized Neural Network Potential (NNP)

| Training Set Property | Mean Absolute Error (MAE) on Test Set (meV/atom) | MAE on Extrapolative Structures (meV/atom) | Force Error (meV/Å) | Reference |

|---|---|---|---|---|

| Single-Minimum (Equilibrium Only) | 2.1 | 152.7 | 45.3 | [Botu et al., 2017] |

| + MD Snapshots (300K) | 1.8 | 48.5 | 38.2 | [Smith et al., 2017] |

| + Nudged Elastic Band (NEB) Paths | 1.5 | 22.1 | 32.1 | [Jinnouchi et al., 2019] |

| + Active Learning (ALD) Iterations | 1.2 | 8.6 | 24.7 | [Zhang et al., 2019] |

| + Explicit Defect & Surface Configs | 1.4 | 6.3 | 28.5 | [Chen et al., 2022] |

Table 2: Performance of Different MLIPs Trained on the Same High-Quality Dataset (SPICE Dataset)

| MLIP Architecture | Energy MAE (meV/atom) | Force MAE (meV/Å) | Inference Speed (ms/atom) | Transferability Score* |

|---|---|---|---|---|

| ANI-2x (AEV-based) | 5.8 | 41.2 | 0.05 | 0.78 |

| MACE (Equivariant) | 2.1 | 15.3 | 0.15 | 0.94 |

| NequIP (SE(3)-Equivariant) | 1.7 | 12.8 | 0.18 | 0.96 |

| Allegro (BOT) | 2.0 | 14.1 | 0.03 | 0.93 |

*Transferability Score (0-1): Metric aggregating performance on unseen molecular compositions, charge states, and long-range interaction benchmarks.

Experimental Protocols

Protocol 3.1: Generating a Foundational Training Set via ab initio Molecular Dynamics (AIMD)

Objective: To sample a thermodynamically representative configuration space for a target system.

- Initial Structure Preparation: Obtain initial structures from databases (e.g., Materials Project, CSD, PDB). Use VESTA or ASE to create supercells (≥ 64 atoms for solids).

- DFT Pre-Optimization: Perform geometry optimization using a plane-wave code (VASP, Quantum ESPRESSO) with a medium-tier functional (PBE-D3) and standard pseudopotentials. Convergence criteria: energy < 1e-5 eV, force < 0.01 eV/Å.

- AIMD Simulation: a. Equilibrate the system in the NVT ensemble at the target temperature (e.g., 300K, 600K) for 5 ps using a Nosé–Hoover thermostat. b. Continue production run in the NVE ensemble for 20-50 ps. Timestep: 0.5-1.0 fs.

- Configuration Sampling: Extract snapshots every 50-100 fs from the production run. For a 50 ps trajectory, this yields 500-1000 configurations.

- Single-Point Calculations: Perform high-accuracy DFT single-point energy and force calculations on all sampled configurations. Use a tighter energy cutoff and k-point grid than in step 2. Store configurations, energies, forces, and stresses.
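
The snapshot counts quoted in the sampling step follow directly from the trajectory length and sampling interval. The short sketch below reproduces that arithmetic; `snapshot_indices` is a hypothetical helper, not a function of any MD package.

```python
def snapshot_indices(trajectory_ps, timestep_fs, interval_fs):
    """Return the MD step indices at which snapshots are saved."""
    n_steps = round(trajectory_ps * 1000 / timestep_fs)  # total MD steps
    stride = round(interval_fs / timestep_fs)            # steps between snapshots
    return list(range(stride, n_steps + 1, stride))

# 50 ps production run with a 0.5 fs timestep
dense = snapshot_indices(trajectory_ps=50, timestep_fs=0.5, interval_fs=50)
sparse = snapshot_indices(trajectory_ps=50, timestep_fs=0.5, interval_fs=100)
print(len(dense), len(sparse))
```

Sampling every 50 fs gives 1000 configurations and every 100 fs gives 500, matching the range stated above.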

Protocol 3.2: Active Learning-Driven Training Set Augmentation (ALD)

Objective: Iteratively identify and fill gaps in the configuration space to improve extrapolative power.

- Initial Model Training: Train a candidate MLIP (e.g., MACE) on the foundational set (from Protocol 3.1).

- Exploration Simulation: Run extended MD simulations (e.g., 1 ns) using the MLIP at elevated temperatures or under stress to explore unseen regions.

- Uncertainty Quantification: For each visited configuration, compute the model's uncertainty (e.g., using committee disagreement for ensemble models, or latent distance metrics for single models).

- Selection and Labeling: Identify the N configurations (e.g., top 1% of 10,000) with the highest uncertainty. Perform high-accuracy DFT calculations on these configurations.

- Augmentation and Retraining: Add the newly labeled, high-uncertainty configurations to the training set. Retrain the MLIP from scratch or fine-tune.

- Convergence Check: Evaluate the model on a held-out, diverse benchmark set. If performance has plateaued, stop; otherwise, return to the Exploration Simulation step. Typically, 3-10 ALD cycles are performed.
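
One simple way to make the plateau criterion concrete is to require that the benchmark error improves by less than a relative tolerance for several consecutive cycles. The sketch below is an assumption about how such a check could look; the name `has_plateaued`, the 5% tolerance, and the error values are illustrative only.

```python
def has_plateaued(error_history, rel_tol=0.05, patience=2):
    """True if each of the last `patience` cycles improved the error by less than rel_tol."""
    if len(error_history) < patience + 1:
        return False
    recent = error_history[-(patience + 1):]
    for previous, current in zip(recent, recent[1:]):
        if previous - current > rel_tol * previous:
            return False  # still improving noticeably
    return True

# Hypothetical benchmark errors (meV/atom) after each ALD cycle
early = [50.0, 20.0, 9.0]
late = [50.0, 20.0, 9.0, 8.8, 8.7]
print(has_plateaued(early), has_plateaued(late))
```

In practice the tolerance and patience would be tuned to the noise level of the benchmark set.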

Protocol 3.3: Targeted Inclusion of Rare Events and Reaction Pathways

Objective: Explicitly include transition states and defect configurations critical for drug-protein binding or catalysis studies.

- Nudged Elastic Band (NEB) Calculations: For known reaction pathways (e.g., ligand dissociation, proton transfer), perform CI-NEB calculations using DFT to locate the transition state and 5-7 intermediate images.

- Dimers and Clusters: For non-covalent interactions relevant to drug development, generate dimer configurations at varying distances and orientations (e.g., using the MBX library for SAPT-FF).

- Point Defect & Surface Sampling: Create explicit vacancy, interstitial, and surface slab models. Perform short AIMD (∼5 ps) on each to sample distorted local environments.

- Incorporate into Training Set: Add all configurations from Steps 1-3, with their DFT-calculated labels, to the primary training set. Weight these configurations appropriately during training (e.g., using a higher loss weight) to ensure they are learned.
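
The effect of a higher loss weight on rare-event configurations can be seen in a toy weighted mean squared error. This is only a sketch of the idea; real MLIP frameworks expose their own weighting options, and `weighted_energy_loss` is a hypothetical name.

```python
def weighted_energy_loss(predicted, reference, weights):
    """Weighted mean squared error over configurations (energies in eV/atom)."""
    total_weight = sum(weights)
    squared = (w * (p - r) ** 2 for p, r, w in zip(predicted, reference, weights))
    return sum(squared) / total_weight

# Second configuration plays the role of a transition state with a large error
predicted = [1.0, 2.0]
reference = [1.0, 1.0]
uniform = weighted_energy_loss(predicted, reference, [1.0, 1.0])
emphasized = weighted_energy_loss(predicted, reference, [1.0, 3.0])
print(uniform, emphasized)
```

With the transition-state weight tripled, its error dominates the loss (0.75 versus 0.5), so the optimizer is pushed harder to fit it.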

Visualizations

Training Set Construction & Active Learning Workflow

Core Relationship: Quality Drives MLIP Performance

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools for MLIP Training Set Generation

| Item / Solution | Function in Training Set Generation | Example / Note |

|---|---|---|

| ASE (Atomic Simulation Environment) | Python library for manipulating atoms, building structures, and interfacing with calculators. Core workflow automation tool. | Used to script supercell creation, run MD via LAMMPS, and parse outputs. |

| DP-GEN | Active learning pipeline specifically for generating MLIP training data. Automates Protocol 3.2. | Integrates with VASP, PWmat, CP2K, and LAMMPS for exploration and labeling. |

| VASP / Quantum ESPRESSO / CP2K | High-accuracy ab initio (DFT) calculators. Provide the "ground truth" energy, force, and stress labels. | Choice depends on system (metals, organics, periodic vs. molecular). |

| LAMMPS with MLIP Plugin | High-performance MD engine. Used to run fast exploration simulations with a preliminary MLIP during active learning. | Plugins exist for DeePMD-kit, MACE, and others. |

| SPICE, ANI-1x, rMD17 Datasets | Curated, public quantum chemical datasets for organic molecules. Serve as benchmarks or foundational training data. | SPICE contains ~1.1M drug-like molecule configurations. |

| OCP (Open Catalyst Project) Framework | PyTorch-based toolkit for training and applying MLIPs, especially for catalysis. Includes standard training workflows. | Provides models like GemNet and MACE. |

| FINETUNA / AMPT | Tools for fine-tuning pre-trained MLIPs on small, targeted datasets (e.g., a specific protein-ligand system). | Reduces need for massive system-specific data. |

| PLOTMAP / PANTONE | Analysis tools for visualizing the sampled configuration space and identifying coverage gaps in training data. | Projects high-dimensional data to 2D for human inspection. |


Within the broader thesis on Machine Learning Interatomic Potential (MLIP) training set generation, the central challenge is the sampling problem. The configuration space of atomic systems—defined by atomic coordinates, chemical species, and environmental conditions—is astronomically vast. Generating a finite, computationally tractable training dataset that adequately covers this space to produce a robust, transferable, and accurate MLIP is the fundamental research problem. This document outlines application notes and protocols for addressing this challenge.

Core Sampling Methodologies: Protocols & Data

Effective strategies balance random exploration with targeted sampling of high-probability or high-importance regions. The following table summarizes key quantitative metrics and applicability of primary methods.

Table 1: Comparative Analysis of Configuration Space Sampling Methods for MLIP Training

| Method | Core Principle | Key Quantitative Metrics (Typical Ranges) | Best For Systems With |

|---|---|---|---|

| Random / MD Sampling | Generate configurations via molecular dynamics (MD) at various temperatures. | Temperature range: 50K - 2000K; Simulation time: 10 ps - 1 ns per trajectory; Configurations sampled: 1,000 - 100,000. | Stable phases, exploring thermal vibrations around minima. |

| Active Learning (AL) | Iterative query of an MLIP's uncertainty to select new configurations for labeling. | Uncertainty threshold (σ): 0.01 - 0.1 eV/atom; Iteration cycles: 5-20; New configs per cycle: 50-500. | Broad, unknown landscapes (e.g., reaction paths, defect migration). |

| Metadynamics / Enhanced Sampling | Biasing simulation to escape free energy minima and visit metastable states. | Hill height: 0.1 - 1.0 kJ/mol; Deposition interval: 0.1 - 10 ps; Collective Variables (CVs): 1-3. | Systems with high energy barriers and rare events. |

| Normal Mode Sampling | Displace atoms along harmonic vibrational modes derived from Hessian matrix. | Displacement scale factor: 0.1 - 2.0 (relative to mode amplitude); Modes sampled: All or low-frequency subset. | Initial exploration near equilibrium, capturing anharmonicity. |

| Structural Enumeration | Systematic generation of derivative structures, defects, or surfaces. | Supercell sizes: 2x2x2 - 4x4x4; Vacancy concentrations: 0.5% - 5%; Surface slab depths: 3-10 atomic layers. | Ordered materials, point defects, surface chemistries. |

Detailed Protocol: Active Learning Loop for MLIP Training

This protocol is central to modern MLIP development.

A. Initial Dataset Creation

- Start with a small seed dataset (N = 100-1000 configurations) using random displacements, primitive MD, or structural enumeration.

- Compute reference energies and forces for these configurations using Density Functional Theory (DFT). Use a converged plane-wave cutoff and k-point mesh.

B. Iterative Active Learning Loop

- Step 1 - MLIP Training: Train an ensemble of MLIPs (e.g., 4 models) on the current dataset. Use an 80/20 train/validation split.

- Step 2 - Candidate Pool Generation: Perform MD simulations using the current MLIPs at relevant thermodynamic conditions (temperatures, pressures). Aggregate ~10,000-100,000 candidate structures.

- Step 3 - Uncertainty Quantification: For each candidate, calculate the predictive uncertainty. Common metrics include:

  - Ensemble Variance: σ²_E = (1/(M-1)) Σ_i (E_i - Ē)², where M is the number of models.

  - Forces Uncertainty: Root mean square variance across the ensemble.

- Step 4 - Query & Label: Select the N candidates (e.g., N = 200) with the highest uncertainty metric. Compute DFT references for these.

- Step 5 - Augmentation & Convergence Check: Add the new labeled data to the training set. Check for convergence: if the maximum uncertainty of the candidate pool falls below a threshold (e.g., σ_E < 0.02 eV/atom) for 3 consecutive cycles, stop. Otherwise, return to Step 1.
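
The ensemble variance in Step 3 maps directly onto NumPy's variance with `ddof=1`. The force metric below (maximum over atoms of the root-mean-square deviation from the ensemble mean) is one common choice and is an assumption here, since the text does not fix a specific definition; both function names are hypothetical.

```python
import numpy as np

def energy_variance(energies):
    """energies: shape (M,), predictions of M models for one configuration."""
    return np.var(energies, ddof=1)  # ddof=1 gives the 1/(M-1) prefactor

def max_force_deviation(forces):
    """forces: shape (M, n_atoms, 3). Max over atoms of the RMS deviation from the ensemble mean."""
    mean = forces.mean(axis=0)
    per_atom = np.sqrt(((forces - mean) ** 2).sum(axis=2).mean(axis=0))
    return per_atom.max()

# Toy ensemble of four energy predictions and two force predictions (hypothetical values)
sigma2_e = energy_variance(np.array([1.0, 2.0, 3.0, 4.0]))
f_dev = max_force_deviation(np.array([[[1.0, 0.0, 0.0]], [[-1.0, 0.0, 0.0]]]))
print(sigma2_e, f_dev)
```

Either quantity can serve as the ranking key in Step 4; force-based metrics are often more sensitive to local environment changes than the total energy.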

Detailed Protocol: Metadynamics for Rare Event Sampling

Use to explicitly sample transition states and reaction pathways.

A. Collective Variable (CV) Selection

- Identify 1-3 physically relevant CVs (e.g., bond distance, coordination number, dihedral angle) that describe the event of interest.

- Validate CVs by ensuring they distinguish initial, final, and suspected intermediate states.

B. Well-Tempered Metadynamics Simulation

- Parameters: Set Gaussian hill height W = 1.0 kJ/mol, width σ_CV = 10% of CV range, deposition stride τ = 1 ps.

- Bias Factor: Set a well-tempered bias factor γ = 10-20 to gradually flatten the free energy surface.

- Simulation: Run the biased MD simulation. The height of the added hills decreases over time in regions that have already been visited, allowing convergence.

- Sampling: Extract all unique visited configurations, focusing on those from different free energy basins. A cluster analysis (e.g., using RMSD) can be used to select representative structures.

- Labeling: Submit representative configurations from each basin for DFT calculation.
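
In well-tempered metadynamics, each new Gaussian is scaled by exp(-V(s)/(k_B ΔT)), where V(s) is the bias already accumulated at the current CV value and ΔT = (γ - 1)T. The one-dimensional toy below illustrates only this height rule; it is not a PLUMED input, and the function names and parameter values are assumptions for the example.

```python
import math

KB_KJ_PER_MOL_K = 0.0083144626  # Boltzmann constant in kJ/(mol K)

def bias(s, hills, sigma):
    """Accumulated bias V(s) from a list of (center, height) Gaussians."""
    return sum(h * math.exp(-((s - c) ** 2) / (2 * sigma ** 2)) for c, h in hills)

def deposit(s, hills, w0, sigma, gamma, temperature):
    """Add a well-tempered hill at s and return its height (kJ/mol)."""
    delta_t = (gamma - 1.0) * temperature
    height = w0 * math.exp(-bias(s, hills, sigma) / (KB_KJ_PER_MOL_K * delta_t))
    hills.append((s, height))
    return height

# Repeatedly visiting the same CV value: hills shrink as bias accumulates
hills = []
heights = [deposit(0.0, hills, w0=1.0, sigma=0.1, gamma=10.0, temperature=300.0)
           for _ in range(5)]
print(heights)
```

The first hill has the full height W, and later hills at the same location become progressively smaller, which is what lets the bias converge instead of growing without bound.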

Visualization of Workflows

Title: Active Learning Workflow for MLIP Training

Title: Metadynamics Sampling for Rare Events

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for MLIP Training Set Generation

| Item / Software | Category | Primary Function in Sampling |

|---|---|---|

| VASP, Quantum ESPRESSO, CP2K | Ab Initio Calculator | Provides the reference "ground truth" energy, forces, and stresses for atomic configurations. |

| LAMMPS, ASE (Atomic Simulation Environment) | MD Engine | Performs classical and MLIP-driven molecular dynamics to explore configuration space. |

| PLUMED | Enhanced Sampling Library | Implements metadynamics and other advanced sampling algorithms by biasing simulations. |

| DP-GEN, FLARE, AL4CHEM | Active Learning Platform | Automates the iterative active learning loop (training, candidate generation, uncertainty query). |

| SOAP, ACE, Behler-Parrinello | Descriptor | Translates atomic coordinates into a mathematical representation (fingerprint) for ML models. |

| DASK, SLURM | High-Performance Computing | Manages parallel computation of thousands of DFT calculations and ML training tasks. |

| VESTA, OVITO | Visualization | Visualizes atomic structures, defects, and diffusion pathways from sampled configurations. |


Application Notes

Within Machine Learning Interatomic Potential (MLIP) training set generation research, the core principles of energy, forces, and stresses form a triadic foundation for constructing a complete and thermodynamically consistent configuration space. Energy provides the scalar reference, forces (the negative gradient of energy) dictate atomic motion, and stresses describe the response to deformation. The "Quest for Completeness" refers to the systematic sampling of atomic environments across relevant thermodynamic states, reaction pathways, and defect geometries to ensure the MLIP's robustness and transferability. For drug development, MLIPs enable high-fidelity simulations of protein-ligand binding dynamics, solvation effects, and polymorph stability, which are critical for predicting binding affinities and bioavailability.

Table 1: Quantitative Benchmarks for MLIP Training Set Completeness

| Metric | Target Value for Drug Development Applications | Purpose |

|---|---|---|

| Energy per Atom RMSE | < 1 meV/atom | Ensures accurate thermodynamic property prediction. |

| Force Component RMSE | < 25 meV/Å | Critical for correct molecular dynamics trajectories and vibration spectra. |

| Stress Tensor RMSE | < 0.01 GPa | Necessary for simulating pressure-induced phase changes and mechanical properties. |

| Configurational Space Coverage (e.g., Dimensionality) | > 95% of variance in 50 PCA dimensions | Measures diversity of sampled atomic environments (bond lengths, angles, coordination). |

| Rare Event Sampling (Activation Barriers) | Explicit inclusion of TS geometries (NEB/MTD) | Enables prediction of reaction rates and conformational changes. |
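
The energy benchmark in Table 1 can be checked with a few lines of code. The sketch below computes the per-atom energy RMSE in meV/atom from total energies in eV; the function names and the toy numbers are hypothetical.

```python
import math

def rmse(predicted, reference):
    """Root-mean-square error between two equally long sequences."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(predicted, reference)) / len(predicted))

def energy_rmse_mev_per_atom(pred_ev, ref_ev, n_atoms):
    """Per-atom energy RMSE in meV/atom from total energies in eV."""
    pred = [e / n for e, n in zip(pred_ev, n_atoms)]
    ref = [e / n for e, n in zip(ref_ev, n_atoms)]
    return 1000.0 * rmse(pred, ref)

# Two configurations with 2 and 4 atoms, each off by 1 meV/atom
error = energy_rmse_mev_per_atom([-10.0, -20.0], [-10.002, -20.004], [2, 4])
print(error)
```

Normalizing by atom count before computing the RMSE keeps configurations of different sizes comparable.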

Experimental Protocols

Protocol 1: Active Learning Loop for Training Set Generation

This protocol outlines an iterative ab initio active learning workflow to achieve a complete training set.

- Initialization: Generate a seed dataset of ~100 configurations using random displacements (at 300K), simple lattice distortions, and a few key molecular crystals or protein-ligand snapshots from docking.

- MLIP Training: Train an ensemble of MLIPs (e.g., NequIP, MACE) on the current dataset.

- Exploration via Molecular Dynamics: Perform extensive MD simulations (NVT, NPT) at multiple thermodynamic conditions relevant to the target application (e.g., 300-450K, 0.1 MPa - 1 GPa) using the MLIP ensemble.

- Uncertainty Quantification: For each step of the MD trajectories, compute the committee disagreement (standard deviation) on predicted energies and forces.

- Configuration Selection: Extract all configurations where the uncertainty exceeds a threshold (e.g., energy std. dev. > 1 meV/atom). Cluster these configurations to remove redundancy.

- Ab Initio Calculation: Perform DFT (for materials) or DFTB/force field (for large biosystems) calculations on the selected configurations to obtain reference energy, forces, and stresses.

- Dataset Augmentation: Add the newly labeled configurations to the training pool.

- Convergence Check: Repeat from Step 2 until the uncertainty on a held-out validation set of known critical configurations (e.g., transition states, defect cores) falls below target benchmarks (Table 1) and no new high-uncertainty configurations are discovered in exploratory MD.

Protocol 2: Explicit Stress-Strain Sampling for Polymorph Stability

A targeted protocol to ensure training set completeness for solid-form prediction in pharmaceutical compounds.

- Supercell Construction: Build supercells (3x3x3 min.) for each known crystal polymorph of the target molecule.

- Strain Application: Apply a series of homogeneous strain tensors to each supercell. Use a Latin hypercube sampling scheme to cover the six independent components of the strain tensor up to ±5%.

- Atomic Relaxation: For each strained supercell, perform a constrained geometry optimization where only the atomic positions are relaxed while fixing the deformed lattice vectors.

- Ab Initio Evaluation: Compute the total energy, atomic forces, and the stress tensor for each relaxed, strained configuration using a dispersion-corrected DFT functional.

- Inclusion Criteria: All configurations are added to the global training set. The stress tensor data is crucial for the MLIP to learn the accurate elastic response and the energy landscape around each polymorph's minimum.
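
The strain application step can be sketched as follows. The example uses a hand-written Latin hypercube sampler and treats the six sampled values as tensor (not engineering) strain components; `latin_hypercube` and `strained_cells` are hypothetical helpers, and the cubic cell is a placeholder.

```python
import numpy as np

def latin_hypercube(n_samples, n_dims, rng):
    """One sample per stratum in each dimension, values in [0, 1)."""
    u = rng.random((n_samples, n_dims))
    samples = np.empty((n_samples, n_dims))
    for d in range(n_dims):
        samples[:, d] = (rng.permutation(n_samples) + u[:, d]) / n_samples
    return samples

def strained_cells(cell, n_samples, max_strain=0.05, seed=0):
    """Return sampled strain components and the deformed cells (rows are lattice vectors)."""
    rng = np.random.default_rng(seed)
    strains = (2.0 * latin_hypercube(n_samples, 6, rng) - 1.0) * max_strain
    cells = []
    for exx, eyy, ezz, eyz, exz, exy in strains:
        eps = np.array([[exx, exy, exz],
                        [exy, eyy, eyz],
                        [exz, eyz, ezz]])  # symmetric strain tensor
        cells.append(cell @ (np.eye(3) + eps))
    return strains, cells

cell = 10.0 * np.eye(3)  # placeholder cubic cell (Å)
strains, cells = strained_cells(cell, n_samples=8)
lhs_check = latin_hypercube(8, 6, np.random.default_rng(1))
```

Each deformed cell would then be passed to the constrained relaxation and DFT evaluation steps described above.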

Protocol 3: Targeted Sampling of Protein-Ligand Binding Pockets

Protocol for enhancing training data in biologically relevant regions.

- Trajectory Generation: Run classical MD of the solvated protein-ligand complex.

- Pocket Region Identification: Define the binding pocket as all residues within 6 Å of the ligand's initial position.

- Frame Selection & Clustering: Extract simulation frames at regular intervals. Cluster the atomic configurations of the pocket region (protein atoms + ligand) based on root-mean-square deviation (RMSD).

- QM/MM Partitioning: For each cluster centroid, define a QM region encompassing the ligand and key interacting sidechains (e.g., charged residues, catalytic site). The MM region includes the remaining protein and solvent.

- High-Level Calculation: Perform QM/MM calculations (e.g., DFTB3/MM) to obtain accurate energies and forces for the QM region, using MM forces for the environment.

- Data Integration: Isolate the QM region's atomic positions, energies, and forces. These are combined with the full-system MM data to create a composite training example, teaching the MLIP the detailed interactions at the binding interface.
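
The pocket definition used in this protocol is a plain distance cutoff. A minimal sketch, assuming atoms are given as `(residue_id, (x, y, z))` tuples in Å, is shown below; `pocket_residues` and the coordinates are hypothetical, and a production workflow would normally use a trajectory analysis library instead.

```python
import math

def pocket_residues(protein_atoms, ligand_coords, cutoff=6.0):
    """Return sorted residue ids with at least one atom within `cutoff` Å of any ligand atom."""
    selected = set()
    for residue_id, xyz in protein_atoms:
        if residue_id in selected:
            continue  # residue already included via another atom
        if any(math.dist(xyz, lig) <= cutoff for lig in ligand_coords):
            selected.add(residue_id)
    return sorted(selected)

# Toy system: three residues and a single ligand atom
protein_atoms = [
    (1, (0.0, 0.0, 0.0)),
    (1, (1.0, 0.0, 0.0)),
    (2, (10.0, 0.0, 0.0)),
    (3, (5.5, 0.0, 0.0)),
]
ligand_coords = [(0.0, 0.0, 0.5)]
pocket = pocket_residues(protein_atoms, ligand_coords)
print(pocket)
```

Residue 2 lies about 10 Å from the ligand and is excluded, while residues 1 and 3 fall inside the 6 Å cutoff.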

Visualizations

Active Learning Loop for MLIP Data Generation

Core Principles Drive Completeness

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for MLIP Training Set Generation

| Item | Function in Research |

|---|---|

| VASP / Quantum ESPRESSO | First-principles electronic structure codes to generate the reference ab initio energy, force, and stress data. |

| LAMMPS / ASE | Molecular dynamics and simulation environments used to run exploratory MD with MLIPs and apply strain. |

| NequIP / MACE / Allegro | Modern, equivariant graph neural network architectures for building accurate, data-efficient MLIPs. |

| GPUMD | High-performance MD code optimized for GPU acceleration, crucial for rapid sampling of configuration space. |

| PLUMED | Plugin for enhanced sampling and free-energy calculations, used to bias MD towards rare events (TS, binding/unbinding). |

| DP-GEN | Automated active learning framework that orchestrates the iterative exploration-labeling-training loop. |

| QM/MM Interface (e.g., sander) | Enables hybrid calculations for large biosystems, providing high-accuracy data in binding pockets. |


This article details application notes and protocols within the context of a broader thesis on Machine Learning Interatomic Potential (MLIP) training set configuration space generation. The core challenge is generating representative, unbiased atomic configurations that capture the vast and distinct energy landscapes of hard materials versus soft biomolecular systems.

Application Notes: Configuration Space Sampling

Note 1.1: Inorganic Materials (Silicon Crystal & Defects)

The goal is to sample configurations for training an MLIP that accurately models a pristine silicon lattice and its point defects (vacancies, interstitials). The configuration space is high-dimensional but bounded by strong covalent bonds, leading to a relatively well-defined energy landscape with deep minima.

Note 1.2: Biomolecular Systems (Protein-Ligand Binding)

The goal is to sample configurations for training an MLIP to model the binding of a small-molecule inhibitor to a kinase protein (e.g., Imatinib to Abl kinase). The configuration space involves complex, hierarchical interactions (covalent, ionic, hydrophobic, hydrogen bonding) across multiple timescales, with a shallow, multi-minima energy landscape and critical entropic contributions.

Table 1: Quantitative Comparison of Sampling Challenges

| Parameter | Inorganic Material (Si) | Biomolecular System (Protein-Ligand) |

|---|---|---|

| Primary Bonding | Strong, directional covalent | Mixed: covalent (backbone), weak non-covalent |

| Energy Landscape | Steep, deep minima | Shallow, numerous metastable minima |

| Key Sampling Metric | Formation energy, phonon spectra | Free energy (ΔG), RMSD, radius of gyration |

| Critical Configurations | Defect structures, surfaces | Bound/unbound states, transition paths |

| Dominant MD Method | NVT/NPT, VASP/LAMMPS | Enhanced sampling (MetaD, REST2), AMBER/GROMACS |

| Sampling Scale | ~100-1000 atoms, ps-ns | ~10,000-100,000 atoms, ns-μs |

Protocols for Training Set Generation

Protocol 2.1: Active Learning for Materials Defects

Objective: Iteratively generate a training set for silicon that includes rare defect events.

- Initial Dataset: Perform DFT (VASP) calculations on 2x2x2 Si supercell: pristine, 1 vacancy, 1 interstitial (3 configurations).

- Train Initial MLIP: Train an M3GNet or ACE model on the initial set.

- Exploration MD: Run an NVT MD simulation with the MLIP at 1200K for 100 ps to encourage defect formation.

- Uncertainty Sampling: Use the MLIP's latent space or committee disagreement to select 10 structures with highest prediction uncertainty.

- DFT Labeling: Perform single-point DFT energy/force calculations on the selected structures.

- Augmentation & Iteration: Add labeled data to training set. Retrain MLIP. Repeat steps 3-5 until energy/force errors on a test set converge (< 10 meV/atom, < 100 meV/Å).
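
The three seed configurations of the initial dataset can be built without any external library. The sketch below constructs a 2x2x2 diamond-cubic supercell from the conventional 8-atom cell, removes one atom for the vacancy, and adds an atom at a tetrahedral site for the interstitial. The lattice constant of 5.43 Å and the choice of interstitial site are assumptions for illustration; the structures are unrelaxed.

```python
import itertools

A_SI = 5.43  # approximate Si lattice constant in Å (assumed for this example)

# Fractional coordinates of the 8 atoms in the conventional diamond-cubic cell
DIAMOND_BASIS = [
    (0.0, 0.0, 0.0), (0.0, 0.5, 0.5), (0.5, 0.0, 0.5), (0.5, 0.5, 0.0),
    (0.25, 0.25, 0.25), (0.25, 0.75, 0.75), (0.75, 0.25, 0.75), (0.75, 0.75, 0.25),
]

def si_supercell(n=2, a=A_SI):
    """Cartesian positions (Å) of an n x n x n diamond-cubic Si supercell."""
    positions = []
    for i, j, k in itertools.product(range(n), repeat=3):
        for fx, fy, fz in DIAMOND_BASIS:
            positions.append(((i + fx) * a, (j + fy) * a, (k + fz) * a))
    return positions

pristine = si_supercell()
vacancy = pristine[1:]                                     # remove one atom
tetrahedral_site = (0.5 * A_SI, 0.5 * A_SI, 0.5 * A_SI)    # empty tetrahedral site
interstitial = pristine + [tetrahedral_site]
seed_configs = {"pristine": pristine, "vacancy": vacancy, "interstitial": interstitial}
print({name: len(atoms) for name, atoms in seed_configs.items()})
```

These 64-, 63-, and 65-atom cells correspond to the three starting points that would be sent to DFT in the first step.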

Protocol 2.2: Enhanced Sampling for Protein-Ligand Conformations

Objective: Generate a training set capturing the bound, unbound, and intermediate states of a protein-ligand complex.

- System Preparation: Obtain the PDB structure (e.g., 1IEP). Prepare it with tleap (AMBER): add hydrogens, solvate in a TIP3P water box, and add ions to neutralize.

- Equilibration: Run minimization, NVT, and NPT simulations using pmemd.cuda (AMBER) with the ff19SB/GAFF2 force fields.

- Collective Variable (CV) Definition: Define two CVs using PLUMED: 1) the distance between the ligand center and the protein binding site centroid, and 2) the radius of gyration of the protein binding pocket.

- Well-Tempered Metadynamics: Deposit Gaussian hills (height=1.0 kJ/mol, width=0.1 nm, pace=500 steps) on the defined CVs. Run a 500 ns biased simulation to encourage exploration of the full CV space.

- Configuration Clustering: From the metaD trajectory, cluster frames based on CVs and backbone RMSD using cpptraj. Select 50 representative frames from the major clusters.

- QM/MM Labeling: For each selected frame, perform QM/MM energy/force calculations using sander (AMBER) with the DFTB3 method for the ligand and binding site residues (5-7 Å cutoff). Use the DFTB3 module in AmberTools.

- Training Set Assembly: Assemble the QM/MM-labeled structures into the final MLIP (ANI-2x, TorchANI) training set. Validate by comparing MLIP-predicted vs. QM/MM free energy profiles.

Diagrams

Title: MLIP Training Set Generation Workflow

Title: Key Sampling Methods for Different Domains

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Cross-Domain MLIP Training Set Generation

| Item / Solution | Domain | Function in Protocol | Example/Supplier |

|---|---|---|---|

| DFT Software | Materials | Provides high-quality energy/force labels for initial and queried configurations. | VASP, Quantum ESPRESSO |

| Classical MD Engine | Both | Performs large-scale exploration (high-T MD) and equilibration. | LAMMPS (Mat), AMBER/GROMACS (Bio) |

| Enhanced Sampling Plugin | Biomolecules | Drives sampling along collective variables to overcome high barriers. | PLUMED |

| QM/MM Interface | Biomolecules | Enables high-quality electronic structure calculations for solvated biomolecules. | sander/pmemd with DFTB3 (AMBER) |

| MLIP Framework | Both | Provides model architecture, training, and uncertainty quantification capabilities. | M3GNet, AMPtorch, TorchANI |

| Clustering/Analysis Tool | Both | Analyzes simulation trajectories to select representative configurations. | scikit-learn (PCA/t-SNE), MDTraj, cpptraj |

| Automation & Workflow Manager | Both | Orchestrates iterative active learning loops. | FAST, signac, custom Python scripts |


A Step-by-Step Guide to Generating Effective MLIP Training Sets

1. Introduction

Within the context of machine-learned interatomic potential (MLIP) training set generation research, constructing a representative configuration space is paramount. This workflow details the protocol from acquiring an initial molecular structure to producing a finalized, curated dataset suitable for MLIP training, emphasizing robustness and thermodynamic sampling.

2. Initial Structure Acquisition & Preparation

Protocol 2.1: Initial Structure Sourcing and Validation

- Objective: Obtain a reliable starting conformation for the target molecule (e.g., a drug-like compound or protein).

- Materials:

- Source Databases: Protein Data Bank (PDB), Cambridge Structural Database (CSD), PubChem.

- Software: Open Babel, RDKit, PyMOL, or Maestro.

- Methodology:

- Search relevant databases using the compound name or canonical SMILES string.

- Select the highest-resolution crystal structure available. For small molecules, prioritize structures without significant disorder.

- Isolate the target molecule, removing co-crystallized solvents, ions, and co-factors unless they are functionally relevant.

- Add missing hydrogen atoms using the protonation state appropriate for the target physiological pH (e.g., pH 7.4) using software like RDKit or Maestro's Protein Preparation Wizard.

- Perform a brief geometry minimization (≤ 50 steps, MMFF94 or similar force field) to relieve severe steric clashes introduced during hydrogen addition.

3. Configuration Space Exploration via Molecular Dynamics

Protocol 3.1: Explicit Solvent MD for Conformational Sampling

- Objective: Generate an ensemble of thermally accessible conformations.

- Materials:

- Software: GROMACS, AMBER, or OpenMM.

- Force Field: GAFF2 (small molecules), CHARMM36 or AMBER ff19SB (proteins), TIP3P or SPC/E water model.

- Computing Resource: High-Performance Computing (HPC) cluster with GPU acceleration.

- Methodology:

- System Setup: Solvate the prepared structure in a cubic water box with a minimum 1.2 nm margin from the solute. Add ions to neutralize the system and achieve a physiological salt concentration (e.g., 0.15 M NaCl).

- Energy Minimization: Use the steepest descent algorithm (≤ 5000 steps) to remove residual steric clashes.

- Equilibration:

- NVT: Heat the system from 0 K to 300 K over 100 ps using a velocity rescale thermostat (coupling constant = 0.1 ps).

- NPT: Stabilize pressure at 1 bar for 100 ps using a Parrinello-Rahman barostat (coupling constant = 2.0 ps).

- Production Run: Perform an unbiased MD simulation for a duration sufficient to observe relevant conformational transitions (typically 100 ns - 1 µs). Save trajectories every 10 ps.

4. Dataset Curation and Ab-Initio Reference Calculation

Protocol 4.2: Clustering and Frame Selection for DFT Calculation

- Objective: Select a diverse, non-redundant subset of configurations for high-precision ab-initio calculation.

- Materials: MD trajectory, clustering software (GROMACS cluster, MDTraj, scikit-learn).

- Methodology:

- Superimpose all trajectory frames to a reference (e.g., the initial structure) based on the solute's heavy atoms to remove rotational/translational drift.

- Calculate the pairwise Root Mean Square Deviation (RMSD) matrix for the solute's heavy atoms.

- Apply a clustering algorithm (e.g., linkage clustering with a cutoff of 0.15-0.3 nm RMSD) to group geometrically similar conformations.

- Select the central member (closest to the cluster centroid) of the n largest clusters, plus additional random samples from smaller clusters to ensure coverage. Aim for a final selection of 500-5000 frames, balancing diversity and computational cost.
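
The clustering and representative-selection steps above can be sketched in plain Python. This is an illustrative stand-in rather than the GROMACS or scikit-learn implementation: it assumes the pairwise RMSD matrix is already available, uses single-linkage grouping with a cutoff, and picks the medoid (smallest summed RMSD to the other members) as a proxy for "closest to the cluster centroid". The function names are hypothetical.

```python
def single_linkage_clusters(rmsd, cutoff):
    """Group frames so that any two frames closer than `cutoff` end up in one cluster."""
    n = len(rmsd)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if rmsd[i][j] < cutoff:
                parent[find(i)] = find(j)

    clusters = {}
    for i in range(n):
        clusters.setdefault(find(i), []).append(i)
    # Largest clusters first
    return sorted(clusters.values(), key=len, reverse=True)

def medoid(cluster, rmsd):
    """Frame with the smallest summed RMSD to the other members of its cluster."""
    return min(cluster, key=lambda i: sum(rmsd[i][j] for j in cluster))

# Toy 4-frame RMSD matrix in nm (made-up values for illustration)
rmsd = [
    [0.00, 0.10, 0.12, 0.50],
    [0.10, 0.00, 0.11, 0.55],
    [0.12, 0.11, 0.00, 0.60],
    [0.50, 0.55, 0.60, 0.00],
]
clusters = single_linkage_clusters(rmsd, cutoff=0.2)
representatives = [medoid(c, rmsd) for c in clusters]
```

For trajectories with many thousands of frames, the O(N²) matrix becomes the bottleneck, so a dedicated tool is the more realistic choice; the sketch only makes the selection logic explicit.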

Protocol 4.3: Ab-Initio Single-Point Energy and Force Calculation

- Objective: Generate the reference ab-initio data (energy, forces, stress) for the selected configurations.

- Materials: Quantum Chemistry Software (VASP, Quantum ESPRESSO, Gaussian, CP2K), high-throughput workflow manager (ASE, pymatgen).

- Methodology:

- For each selected snapshot, extract the coordinates of the solute and all solvent/ions within a defined cutoff (e.g., 0.6 nm) from the solute.

- Set up the ab-initio calculation. A typical balanced protocol for organic molecules is:

- Functional: ωB97M-D3(BJ) (for high accuracy) or PBE-D3 (for efficiency).

- Basis Set: def2-TZVP for main-group elements.

- Task: Single-point energy and analytic force calculation.

- Submit calculations via a workflow manager to ensure consistency and error handling.

- Parse outputs to collect total energy (in eV), atomic forces (in eV/Å), and the cell vectors (if periodic).
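
Many molecular quantum chemistry codes report energies in Hartree and gradients in Hartree/Bohr, so the parsing step usually involves a unit conversion to eV and eV/Å. The sketch below shows that conversion with CODATA 2018 constants; the function names are illustrative, and whether a conversion is needed at all depends on the code used (an assumption to check against its output format).

```python
HARTREE_TO_EV = 27.211386245988    # CODATA 2018
BOHR_TO_ANGSTROM = 0.529177210903  # CODATA 2018

def energy_to_ev(e_hartree):
    """Convert a total energy from Hartree to eV."""
    return e_hartree * HARTREE_TO_EV

def forces_to_ev_per_angstrom(forces_hartree_per_bohr):
    """Convert per-atom force vectors from Hartree/Bohr to eV/Angstrom."""
    factor = HARTREE_TO_EV / BOHR_TO_ANGSTROM
    return [[component * factor for component in f] for f in forces_hartree_per_bohr]

# Made-up numbers, for illustration only
energy_ev = energy_to_ev(-76.4)
forces = forces_to_ev_per_angstrom([[0.01, 0.0, -0.02]])
```

Note that some codes print gradients (the negative of the forces), so the sign convention should be checked before the data enter the training set.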

5. Final Dataset Assembly for MLIP Training

Protocol 5.1: Data Formatting and Splitting

- Objective: Assemble the final dataset in a standard format and split it for MLIP training/validation/testing.

- Materials: Parsed ab-initio data, data formatting scripts (e.g., using ASE or custom Python).

- Methodology:

- Compile each configuration into a standard format (e.g., extended XYZ, HDF5). Each entry must contain:

- Atomic numbers and positions.

- The reference total energy.

- The reference per-atom forces.

- The cell (if periodic) and optional periodic boundary conditions.

- Apply a global energy offset (e.g., shift the minimum energy in the set to zero) to improve numerical stability during training.

- Randomly shuffle the dataset and split it into training (∼80%), validation (∼10%), and test (∼10%) sets. Ensure no temporal leakage from the MD trajectory by shuffling across all clusters.
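
The energy shift and the 80/10/10 split described above can be sketched as follows. This is a minimal example under simple assumptions: energies are given as a flat list, the split is a plain seeded shuffle, and the helper names `shift_energies` and `split_indices` are hypothetical.

```python
import random

def shift_energies(energies):
    """Subtract the minimum energy so the lowest-energy frame sits at zero."""
    e_min = min(energies)
    return [e - e_min for e in energies]

def split_indices(n_frames, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle frame indices and cut them into train/validation/test lists."""
    indices = list(range(n_frames))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_train = round(train_frac * n_frames)
    n_val = round(val_frac * n_frames)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])

# Made-up energies in eV
shifted = shift_energies([-10.5, -10.2, -10.9])
train, val, test = split_indices(1000)
```

Storing the offset and the random seed alongside the dataset makes the split reproducible and lets predicted energies be mapped back to the original reference scale.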


6. Data Presentation

Table 1: Typical Quantitative Parameters for MLIP Dataset Generation Workflow

| Stage | Key Parameter | Typical Value / Method | Purpose |

|---|---|---|---|

| MD Setup | Water Box Margin | 1.2 nm | Minimize periodic image interactions |

| | Salt Concentration | 0.15 M NaCl | Mimic physiological conditions |

| MD Run | Production Time | 100 ns - 1 µs | Sample relevant conformational space |

| | Trajectory Save Frequency | 10 ps | Balance detail and storage |

| Clustering | RMSD Cutoff | 0.15 - 0.3 nm | Define conformational similarity |

| DFT Ref. | Density Functional | ωB97M-D3(BJ) / PBE-D3 | Accuracy vs. efficiency trade-off |

| | Basis Set | def2-TZVP | Good accuracy for main-group elements |

| Data Split | Training/Validation/Test | 80/10/10 % | Standard split for model development |

7. Visualization

Title: MLIP Training Set Generation Workflow

8. The Scientist's Toolkit

Table 2: Essential Research Reagents & Solutions for Configuration Space Sampling

| Item / Resource | Category | Function / Purpose |

|---|---|---|

| GROMACS / AMBER / OpenMM | Software Suite | Molecular dynamics simulation engines for conformational sampling. |

| GAFF2 / CHARMM36 Force Fields | Parameter Set | Provides classical interaction potentials for organic molecules and biomolecules. |

| VASP / Quantum ESPRESSO / CP2K | Software Suite | Performs density functional theory (DFT) calculations for reference ab-initio data. |

| ωB97M-D3(BJ) / PBE-D3 | DFT Functional | Exchange-correlation functionals; the former for high accuracy, the latter for efficiency. |

| def2-TZVP Basis Set | Basis Set | A balanced triple-zeta basis set for accurate energy/force calculations on main-group elements. |

| RDKit / Open Babel | Cheminformatics Library | Handles molecular format conversion, SMILES parsing, and basic structure manipulation. |

| ASE (Atomic Simulation Environment) | Python Library | Manages high-throughput DFT workflows and data formatting for MLIP inputs. |

| HPC Cluster with GPU Nodes | Computing Resource | Provides the necessary computational power for MD (GPUs) and DFT (CPUs) calculations. |

In the pursuit of accurate and transferable Machine Learning Interatomic Potentials (MLIPs), the generation of a comprehensive training dataset is paramount. The foundational layer of this dataset originates from ab initio quantum mechanical calculations, primarily Density Functional Theory (DFT) and higher-level quantum chemistry methods. These calculations provide the essential "ground truth" energies, forces, and stress tensors for atomic configurations that span the relevant chemical space. The fidelity of the subsequent MLIP is intrinsically bounded by the quality, diversity, and thermodynamic relevance of this ab initio reference data. This document details the application notes and standardized protocols for generating such foundational data, specifically architected to support robust MLIP training.

Quantitative Comparison of Ab Initio Methods for MLIP Datasets

Table 1: Comparison of Quantum Computational Methods for Reference Data Generation

| Method | Typical Accuracy (Energy) | Computational Cost (Relative) | Key Strengths for MLIP | Key Limitations for MLIP |

|---|---|---|---|---|

| DFT (GGA/PBE) | ~5-10 kcal/mol | 1x (Baseline) | Excellent cost/accuracy balance; solid-state materials; periodic systems. | Systematic errors for dispersion, strongly correlated systems. |

| DFT+U | Improves on GGA for d/f electrons | 1.1x | Corrects on-site Coulomb interaction in transition metal oxides. | U parameter is empirical; not a universal fix. |

| DFT-D3/D4 | ~1-3 kcal/mol (for non-covalent) | 1.05x | Adds van der Waals dispersion corrections crucial for molecular & layered systems. | Post-hoc correction; non-self-consistent. |

| Hybrid DFT (HSE06) | ~2-5 kcal/mol | 10-100x | Improved band gaps, reaction barriers; more accurate electronic structure. | High cost limits system size and sampling breadth. |

| MP2 | ~1-3 kcal/mol (for systems with a sizable gap) | 100-1000x | Good for non-covalent interactions; a common reference for molecular clusters. | Very high cost; not for periodic metals; basis set sensitive. |

| CCSD(T) | <1 kcal/mol (Chemical Accuracy) | 1000-10,000x | Ultimate accuracy for validation & small "gold standard" subsets. | Prohibitive cost; only for tiny systems (<20 atoms). |

| r²SCAN | ~2-5 kcal/mol | 1.5-2x | Modern meta-GGA; often better across properties without hybrids. | Higher cost than GGA; still under evaluation for diverse solids. |

Core Protocols for Ab Initio Dataset Generation

Protocol 3.1: Multi-Fidelity Dataset Construction for a Binary Alloy System (A$_x$B$_{1-x}$)

Objective: Generate a training set for an MLIP describing a binary alloy across compositions, phases, and defect states.

Materials/Software:

- VASP/Quantum ESPRESSO/ABINIT (DFT engine)

- ASE (Atomic Simulation Environment) or pymatgen for structure manipulation

- ICET or ATAT for cluster expansion and prototype generation

- High-Performance Computing (HPC) cluster

Procedure:

- Configuration Space Sampling:

- Phase Space: Generate pristine unit cells for all known bulk phases (FCC, BCC, HCP, intermetallics) via materials databases.

- Supercells: Create 2x2x2, 3x3x3, and 4x4x4 supercells for each primary phase.

- Chemical Disorder: For each supercell and target composition x, generate 10-50 distinct atomic decorations using Special Quasi-random Structures (SQS) via the mcsqs tool (ATAT).

- Point Defects: Introduce vacancies, antisite defects, and interstitial candidates (using the doped package) into select ordered supercells.

- Displaced Configurations: From a subset of the above, generate 50-100 slightly perturbed configurations (atomic displacements ~0.05 Å) via molecular dynamics at 50 K (10 fs timestep) or random displacements.

Multi-Fidelity DFT Calculations:

- Tier 1 (Broad Sampling, Lower Cost): Perform single-point energy/force calculations on all generated structures using a GGA-PBE functional with semi-empirical D3 dispersion, medium plane-wave cutoff (e.g., 450 eV), and standard k-point density. This yields ~50,000 data points.

- Tier 2 (Refined Accuracy): Select ~5,000 diverse structures from Tier 1 (using farthest-point sampling). Recalculate using a more accurate functional (e.g., r²SCAN or PBEsol) with tighter convergence parameters.

- Tier 3 (Validation/High-Accuracy): Select ~100 critical configurations (e.g., transition states, key defect formations, dilute compositions). Compute using hybrid HSE06 functional or, for molecular clusters, CCSD(T)/CBS benchmarks.
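
The farthest-point sampling used to pick the Tier 2 subset can be illustrated with a short greedy implementation. The sketch assumes each structure has already been reduced to a fixed-length descriptor vector (how that descriptor is built is left open here) and uses Euclidean distance; the function name and the starting index are illustrative choices.

```python
import math

def farthest_point_sampling(descriptors, n_select, start=0):
    """Greedy FPS: repeatedly add the point farthest from the already-selected set."""
    selected = [start]
    # Distance from every point to its nearest selected point so far
    min_dist = [math.dist(d, descriptors[start]) for d in descriptors]
    while len(selected) < min(n_select, len(descriptors)):
        nxt = max(range(len(descriptors)), key=lambda i: min_dist[i])
        selected.append(nxt)
        for i, d in enumerate(descriptors):
            min_dist[i] = min(min_dist[i], math.dist(d, descriptors[nxt]))
    return selected

# Toy 2D descriptors (made-up values)
points = [(0.0, 0.0), (0.1, 0.0), (5.0, 0.0), (0.0, 2.0), (5.0, 3.0)]
chosen = farthest_point_sampling(points, n_select=3)
```

Each iteration costs O(N), so selecting ~5,000 structures from ~50,000 remains tractable even in pure Python, although a vectorized version would be preferable at that scale.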

Data Curation & Formatting:

- Extract total energy, atomic forces, stress tensors, and virials for each calculation.

- Assemble into standardized MLIP-ready format (e.g., extended XYZ, .hdf5). Annotate each entry with metadata: functional, k-grid, convergence, composition.

- Perform sanity checks: energy vs. volume (EOS) fits for pure phases, defect formation energies should be physically plausible.

Protocol 3.2: Molecular Cluster Dataset for Reactive Drug-Like Fragments

Objective: Create a dataset for training a reactive MLIP for ligand-protein interaction simulations.

Materials/Software:

- Gaussian 16/ORCA/Psi4 (Quantum Chemistry engine)

- CREST/GFN-FF for conformer and reaction coordinate sampling

- Auto-FOX or CheMSM for reaction network exploration

- QM7-X/TM databases as starting points

Procedure:

- Conformational & Torsional Sampling:

- For each target molecule (e.g., drug fragment), generate an ensemble of low-energy conformers using CREST (GFN-FF).

- Perform systematic or stochastic scans of key dihedral angles (increment 15-30°) to map torsional potentials.

Reactive Pathway Sampling:

- Define plausible reactive encounters between fragments and a model amino acid sidechain (e.g., proton transfer, nucleophilic attack, bond formation/cleavage).

- Use the Nudged Elastic Band (NEB) method or heuristic methods in Auto-FOX to identify approximate transition states (TS) and minimum energy paths (MEP).

High-Level Quantum Chemistry Calculations:

- Optimize all minima (reactants, products, conformers) and confirmed transition states at the ωB97X-D/def2-SVP level, then refine their energies with DLPNO-CCSD(T)/def2-TZVPP single-point calculations on the optimized geometries.

- Perform frequency calculations to confirm minima (0 imaginary frequencies) and TS (1 imaginary frequency) and obtain zero-point energy corrections.

- For a critical subset, compute single-point energies at an even higher level (e.g., CCSD(T)/CBS extrapolation) to establish a correction map for the rest of the set.

Dataset Assembly:

- Extract Cartesian coordinates, energies (including ZPE-corrected), atomic forces, and partial charges (e.g., from Hirshfeld or CM5 analysis).

- Include dipole moments and polarizabilities if the MLIP architecture supports them.

- Structure the data hierarchically: conformational, torsional, and reactive subsets.

Visualization of Workflows

Diagram 1: Multi-Fidelity MLIP Training Set Generation Workflow

Diagram 2: Molecular Reactive Pathway Sampling Logic

The Scientist's Toolkit: Essential Research Reagents & Software

Table 2: Key Computational Reagents for Ab Initio Dataset Generation

| Item / Software | Category | Primary Function in MLIP Data Generation |

|---|---|---|

| VASP | DFT Code | Industry-standard periodic DFT code for solid-state and surface systems. Provides highly accurate forces and stresses. |

| Quantum ESPRESSO | DFT Code | Open-source, plane-wave pseudopotential suite. Excellent for large-scale sampling and workflow automation. |

| Gaussian 16 / ORCA | Quantum Chemistry Code | High-accuracy molecular quantum chemistry for CCSD(T), DLPNO, and hybrid DFT calculations on clusters. |

| ASE (Atomic Simulation Environment) | Python Library | Universal toolkit for manipulating atoms, interfacing with calculators, building workflows, and analyzing results. |

| pymatgen | Python Library | Materials analysis and phase diagram generation. Critical for generating and analyzing bulk crystal prototypes. |

| ICET / ATAT | Sampling Toolkit | Tools for generating Special Quasi-random Structures (SQS) and cluster expansions for alloy configurational sampling. |

| CREST (GFN-FF) | Conformer Sampler | Efficient, force-field based conformer, rotamer, and protomer sampling for molecules and molecular clusters. |

| Nudged Elastic Band (NEB) | Pathway Finder | Algorithm for locating minimum energy paths and transition states between known reactant and product states. |

| LOBSTER | Bonding Analysis | Computes crystal orbital Hamilton populations (COHP) for bond analysis, validating electronic structure data. |

| XCrySDen / VESTA | Visualization | Real-space visualization of crystal structures, electron densities, and atomic trajectories for quality control. |


This document provides detailed application notes and protocols for active learning (AL) strategies, specifically iterative sampling, within the broader research context of configuring training sets for Machine Learning Interatomic Potentials (MLIPs). Efficient exploration of the chemical and structural configuration space is paramount for developing robust, transferable, and computationally efficient MLIPs used in materials science and drug development.

Core Active Learning Cycles for MLIPs

Active learning for MLIPs operates through a closed-loop cycle, iteratively selecting the most informative data points from a vast, unlabeled configuration space (e.g., from molecular dynamics trajectories) for first-principles calculation and subsequent model retraining.

Quantitative Comparison of Query Strategies

The performance of AL strategies is quantitatively assessed by their data efficiency and final model error. The following table summarizes key metrics from recent studies.

Table 1: Comparison of Active Learning Query Strategies for MLIP Training

| Strategy | Core Principle | Typical Acquisition Function | Data Efficiency Gain* (%) | Typical Final RMSE Reduction* (%) | Computational Overhead |

|---|---|---|---|---|---|

| Uncertainty Sampling | Select configurations where model prediction is most uncertain. | Predictive variance, entropy | 40-60 | 20-40 | Low |

| Query-by-Committee | Select points where committee of models disagrees most. | Disagreement variance (e.g., STD) | 50-70 | 25-45 | Medium (Multiple Models) |

| D-optimality / Greedy | Maximize diversity in the selected subset. | Determinant of covariance matrix | 30-50 | 15-30 | High (Matrix Operations) |

| Expected Model Change | Select points that would change the model most. | Gradient of loss w.r.t. candidate | 45-65 | 20-40 | High (Gradient Calc.) |

| Bayesian Optimization | Maximize an acquisition function balancing exploration/exploitation. | Expected Improvement, UCB | 55-75 | 30-50 | High (Surrogate Model) |

*Gains are relative to random sampling baselines. Actual values are system-dependent.

Protocol: Iterative AL Workflow for MLIP Configuration Space Generation

Protocol Title: Closed-Loop Active Learning for Ab Initio Dataset Curation.

Objective: To generate a minimal yet comprehensive training set of atomic configurations with associated ab initio energies and forces for a target molecular or materials system.

Materials & Initial Setup:

- Initial Seed Dataset: A small set (50-200) of diverse atomic configurations with pre-computed ab initio reference data (energy, forces, stresses).

- Candidate Pool: A large, unlabeled pool of configurations (10^4 - 10^7) generated via methods in Step 1 of the workflow diagram.

- MLIP Architecture: Choose a model (e.g., Neural Network Potential, Gaussian Approximation Potential, Moment Tensor Potential).

- High-Performance Computing (HPC) Resources: For ab initio calculations and parallel model training.

Procedure:

- Train Initial Model: Train the MLIP on the current labeled dataset.

- Evaluate on Candidate Pool: Use the trained model to predict energies/forces for all configurations in the unlabeled candidate pool.

- Compute Acquisition Scores: Apply the chosen acquisition function (see Table 1) to rank candidates by "informativeness."

- Query & Label: Select the top N (batch size) configurations from the ranked pool. Submit these configurations for ab initio calculation (e.g., DFT) to obtain accurate labels.

- Augment & Retrain: Add the newly labeled configurations to the training set. Retrain the MLIP from scratch or using a warm start.

- Convergence Check: Monitor the model's performance on a fixed, independent validation set. Convergence criteria may include:

- Validation error plateauing over several AL cycles.

- Acquisition scores for the top candidates falling below a threshold.

- Maximum cycle or computational budget reached.

- Iterate: Repeat steps 1-6 until convergence is achieved.

Validation: The final model must be validated on a completely held-out test set comprising diverse configurations not seen during the entire AL cycle.
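
The three convergence criteria from the procedure (validation plateau, low acquisition scores, exhausted budget) can be combined into a single stopping check. The sketch below is one possible formulation; the window length, tolerances, and threshold values are placeholder assumptions, not values taken from the protocol.

```python
def has_converged(val_errors, top_scores, window=3, rel_tol=0.02,
                  score_threshold=0.05, max_cycles=50):
    """Return (stop, reason) for an active-learning loop.

    val_errors[i] : validation error after cycle i
    top_scores[i] : highest acquisition score seen in cycle i
    All thresholds are hypothetical defaults to be tuned per system.
    """
    cycle = len(val_errors)
    if cycle >= max_cycles:
        return True, "budget"
    if top_scores and top_scores[-1] < score_threshold:
        return True, "low_uncertainty"
    if cycle > window:
        recent = val_errors[-(window + 1):]
        # Relative improvement accumulated over the last `window` cycles
        if recent[0] > 0 and (recent[0] - recent[-1]) / recent[0] < rel_tol:
            return True, "plateau"
    return False, "continue"

# Made-up histories: errors in meV/atom, scores in arbitrary units
still_learning = has_converged([50.0, 30.0, 20.0, 15.0], [0.9, 0.6, 0.4, 0.3])
plateaued = has_converged([12.0, 10.1, 10.0, 10.05, 10.0], [0.4, 0.3, 0.2, 0.2, 0.2])
```

Returning the reason alongside the flag makes it easier to tell a genuinely converged model from one that simply ran out of budget.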

Visualization of Workflows and Relationships

Diagram 1: MLIP Active Learning Workflow

Diagram 2: Acquisition Functions & Objectives

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Active Learning in MLIP Development

| Tool / Resource | Category | Primary Function in AL Workflow | Examples / Notes |

|---|---|---|---|

| Atomic Simulation Environment (ASE) | Software Library | Interface for atoms, calculators, MD, and coupling MLIPs with DFT codes. | Core platform for scripting AL loops. |

| Density Functional Theory (DFT) Code | Electronic Structure | High-fidelity label generator for selected configurations. | VASP, Quantum ESPRESSO, GPAW, CP2K. |

| MLIP Training Framework | Machine Learning | Provides model architectures and training routines. | AMP, SchNetPack, MACE, Allegro, DeePMD-kit. |

| Candidate Pool Generator | Sampling Software | Creates the initial unlabeled configuration space for querying. | RASPA (for adsorption), pymatgen (structures), custom MD scripts. |

| Acquisition Function Library | AL Software | Implements strategies for scoring and ranking candidates. | modAL (Python), custom implementations in PyTorch/TensorFlow. |

| High-Throughput Workflow Manager | Compute Management | Automates job submission for DFT labeling and model retraining across cycles. | AiiDA, FireWorks, Nextflow. |

| Reference Datasets | Benchmark Data | Provides standardized systems for comparing AL strategy performance. | QM9, MD17, rMD17, OC20. |


Application Notes

Thesis Context: MLIP Training Set Generation

The development of robust Machine Learning Interatomic Potentials (MLIPs) requires training sets that comprehensively sample the relevant chemical and configurational space. This involves capturing atomic environments across diverse conditions—equilibrium structures, finite-temperature dynamics, transition states, and soft vibrational modes. The specialized techniques of Molecular Dynamics (MD) snapshots, phonon displacements, and the Nudged Elastic Band (NEB) method are critical for generating such a representative and efficient ab initio dataset. These methods systematically target distinct but complementary regions of the potential energy surface (PES), ensuring the MLIP can accurately predict energies, forces, and vibrational properties for use in materials science and drug development (e.g., for ligand-protein binding dynamics).

MD Snapshots for Thermodynamic Sampling

Purpose: To capture the configurational space accessible at finite temperatures, including anharmonic effects and rare events.

Protocol: Perform ab initio molecular dynamics (AIMD) simulations using DFT (e.g., VASP, CP2K) at relevant temperatures (e.g., 300 K, 600 K). Use an NVT ensemble with a Nosé-Hoover thermostat. For a 100-atom system, a 20-50 ps simulation is typical. Extract uncorrelated snapshots by saving frames at intervals exceeding the correlation time (e.g., every 100 fs for a 20 ps trajectory yields ~200 snapshots). Each snapshot provides atomic coordinates, DFT-calculated total energy, atomic forces, and the stress tensor.

Data Contribution: Introduces thermal noise, bond stretching/compression, and liquid-state or amorphous phase configurations into the training set.

Phonon Displacements for Vibrational Properties

Purpose: To ensure the MLIP reproduces harmonic and anharmonic vibrational (phonon) spectra, crucial for calculating thermodynamic properties.

Protocol:

1. Harmonic Generation: After optimizing a structure to its ground state, compute the force constant matrix via density functional perturbation theory (DFPT) or finite displacements.

2. Displacement Creation: Diagonalize the dynamical matrix to obtain normal modes (eigenvectors) and frequencies (from the eigenvalues).

3. Sampling: For each normal mode i, generate displaced configurations $R_i^{\pm} = R_0 \pm A \cdot \epsilon_i$, where $\epsilon_i$ is the eigenvector and A is an amplitude (e.g., 0.01–0.05 Å). Use a stochastic sampler to create random linear combinations of mode displacements at specific temperatures.

Data Contribution: Provides precise data on the curvature of the PES around minima, essential for predicting correct vibrational densities of states and phonon dispersion curves.

Nudged Elastic Band for Transition Pathways

Purpose: To sample the saddle points and minimum energy paths (MEPs) between metastable states, which are critical for diffusion and reaction barrier calculations.

Protocol:

1. Endpoint Optimization: Fully optimize the initial and final states (e.g., reactant and product, two bulk diffusion sites).

2. Band Initialization: Construct an initial guess for the path (e.g., via linear interpolation) with 5-20 images.

3. NEB Calculation: Use an implementation (e.g., in ASE, LAMMPS) with the "nudging" forces to ensure images converge to the MEP. Employ a climbing image (CI-NEB) to refine the saddle point.

4. Data Extraction: From the converged NEB calculation, extract atomic coordinates, energies, and forces for all images along the MEP, with particular emphasis on the saddle point (highest-energy image).

Data Contribution: Directly samples transition states and regions of negative curvature, which are rarely visited in MD but vital for kinetic studies.

Table 1: Comparison of Configuration Space Generation Techniques

| Technique | Target PES Region | Primary Outputs per Frame | Typical # Configs for a 50-atom System | Key MLIP Property Ensured |

|---|---|---|---|---|

| MD Snapshots | Equilibrium & non-equilibrium thermal states | Coords, Energy, Forces, Stress | 200-500 | Thermodynamic consistency, phase stability |

| Phonon Displacements | Harmonic basin near minima | Coords, Energy, Forces | 100-300 (from ~10-20 modes) | Vibrational spectra, heat capacity |

| Nudged Elastic Band | Saddle points & reaction paths | Coords, Energy, Forces (along path) | 5-20 (images per path) | Reaction barriers, diffusion rates |

Table 2: Typical Computational Parameters for Protocols

| Parameter | MD Snapshots (AIMD) | Phonon Displacements | NEB (DFT-based) |

|---|---|---|---|

| Software Example | VASP, CP2K | Phonopy + VASP/Quantum ESPRESSO | ASE + VASP/CP2K |

| Energy/Force Method | DFT (PBE, SCAN) | DFT (PBE) | DFT (PBE) |

| System Size | 50-200 atoms | 1-100 atom unit cell | 50-150 atoms |

| Sampling Duration/Scope | 20-50 ps trajectory | ± 0.03 Å displacement amplitude | 5-20 images per path |

| Avg. Wall Time per Config | 100-500 CPU-hrs (for trajectory) | 10-50 CPU-hrs (for matrix calc + displacements) | 50-200 CPU-hrs (full path) |

Detailed Experimental Protocols

Protocol A: Generating MD Snapshots for MLIP Training

- System Preparation: Build initial structure (e.g., crystal, surface, molecule in box) in VESTA/Pymatgen. Ensure appropriate cell size and vacuum.

- DFT Relaxation: Perform full ionic + cell relaxation until forces < 0.01 eV/Å.

- AIMD Setup: Choose NVT ensemble. Set timestep to 1 fs. Select Thermostat (Nosé-Hoover, τ=100 fs). Heat system to target T over 2-5 ps.

- Production Run: Run AIMD for 20-50 ps, saving trajectory every 10 fs.

- Correlation & Extraction: Compute the velocity autocorrelation function to determine the decorrelation time (τ). Extract snapshots at intervals > τ (e.g., every 100 fs).

- Single-Point Calculation: Perform a high-accuracy DFT calculation on each extracted snapshot to obtain energy/forces for training.
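
The correlation and extraction step can be sketched as two small helpers: one that estimates a decorrelation lag from a time series, and one that converts a decorrelation time into a frame stride. This is a simplified stand-in: it uses the autocorrelation of a single scalar series (for example one velocity component) rather than the full velocity autocorrelation function, and the 1/e threshold and function names are assumptions.

```python
import math

def decorrelation_lag(series, threshold=math.exp(-1)):
    """First lag (in frames) at which the normalized autocorrelation drops below threshold."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    c0 = sum(c * c for c in centered)
    if c0 == 0.0:
        return 1  # constant series: nothing to decorrelate
    for lag in range(1, n):
        c = sum(centered[t] * centered[t + lag] for t in range(n - lag)) / c0
        if c < threshold:
            return lag
    return n

def snapshot_indices(n_frames, save_interval_fs, tau_fs):
    """Frame indices spaced at least tau_fs apart."""
    stride = max(1, math.ceil(tau_fs / save_interval_fs))
    return list(range(0, n_frames, stride))

# Toy square-wave signal, then the 20 ps / 10 fs example from the protocol
lag = decorrelation_lag([1, 1, 1, 1, -1, -1, -1, -1])
n_frames = 20_000 // 10  # 20 ps saved every 10 fs
picked = snapshot_indices(n_frames, save_interval_fs=10.0, tau_fs=100.0)
```

With a 100 fs decorrelation time, the stride is 10 frames and the 20 ps trajectory yields 200 snapshots, consistent with the estimate given earlier in the application notes.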

Protocol B: Creating Displaced Configurations via Phonon Analysis

- Ground State: Optimize primitive cell to forces < 0.001 eV/Å.

- Supercell Creation: Use Phonopy to generate a 2x2x2 or larger supercell.

- Force Constant Matrix: Run DFT finite displacements (e.g., Phonopy disp.yaml generated displacements) or use DFPT.

- Post-Process: Run Phonopy to obtain force constants, diagonalize dynamical matrix, and output normal modes (eigenvectors) and frequencies.

- Generate Displacements: Use a custom script to create configurations $R = R_0 + \sum_i c_i A_i \epsilon_i$, where $\epsilon_i$ is the eigenvector of normal mode $i$, $c_i$ is a random coefficient drawn from a normal distribution scaled by $\sqrt{kT}/\omega_i$, and $A_i$ is a scaling factor.

- DFT Calculation: Compute energy and forces for each displaced configuration.
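The displacement-generation step of Protocol B can be illustrated with a small NumPy sketch. It is written under stated assumptions rather than as the custom script the protocol refers to: the function name `sample_displacements` is hypothetical, the scaling factor $A_i$ is taken to be the same for every mode, the modes come from a mass-weighted dynamical matrix (so displacements are divided by $\sqrt{m}$ when mapped back to Cartesian coordinates), and modes with near-zero or negative $\omega^2$ are skipped. Units are eV, Å, and amu, which keeps $\sqrt{kT}/\omega_i$ consistent without conversion factors.

```python
import numpy as np

KB_EV = 8.617333262e-5  # Boltzmann constant in eV/K

def sample_displacements(r0, masses, hessian, temperature, n_samples,
                         amplitude=1.0, rng=None, freq_tol=1e-6):
    """Draw thermally displaced configurations along harmonic normal modes.

    r0: (n_atoms, 3) equilibrium positions in Angstrom.
    masses: (n_atoms,) atomic masses in amu.
    hessian: (3n, 3n) force constants in eV/Angstrom^2.
    amplitude: the scaling factor A_i, assumed equal for all modes.
    """
    rng = np.random.default_rng() if rng is None else rng
    masses = np.asarray(masses, dtype=float)
    n_atoms = len(masses)
    inv_sqrt_m = np.repeat(1.0 / np.sqrt(masses), 3)
    # Mass-weighted dynamical matrix; its eigenvalues are omega^2.
    dyn = hessian * np.outer(inv_sqrt_m, inv_sqrt_m)
    omega2, modes = np.linalg.eigh(dyn)
    keep = omega2 > freq_tol  # drop translations and unstable modes
    omega = np.sqrt(omega2[keep])
    modes = modes[:, keep]
    sigma = amplitude * np.sqrt(KB_EV * temperature) / omega
    configs = []
    for _ in range(n_samples):
        c = rng.normal(size=omega.size) * sigma  # c_i ~ N(0, sqrt(kT)/omega_i)
        dx = (modes @ c) * inv_sqrt_m            # back to Cartesian coordinates
        configs.append(r0 + dx.reshape(n_atoms, 3))
    return np.array(configs)

# Toy check: two unit-mass atoms joined by a spring along x (k = 10 eV/A^2).
k = 10.0
hess = np.zeros((6, 6))
hess[0, 0] = hess[3, 3] = k
hess[0, 3] = hess[3, 0] = -k
r0 = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
configs = sample_displacements(r0, [1.0, 1.0], hess, 300.0, 2000,
                               rng=np.random.default_rng(1))
bond_stretch = configs[:, 1, 0] - configs[:, 0, 0] - 1.0
expected_std = np.sqrt(KB_EV * 300.0 / k)  # equipartition: k<x^2> = kT
```

For the toy dimer the sampled bond stretch should follow the equipartition width $\sqrt{kT/k}$, which is a quick sanity check before applying the same routine to Phonopy force constants.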

Protocol C: Running a Climbing Image NEB Calculation

- Endpoints: Fully relax initial and final states.

- Interpolation: Use the ASE `NEB` function with IDPP (image dependent pair potential) to generate 7 initial intermediate images.

- NEB Setup: Employ the CI-NEB method. Set spring constant between images to 5.0 eV/Ų. Use a force optimizer (FIRE or BFGS).

- Solver: Use ASE's `NEB` module coupled to a DFT calculator (e.g., VASP). Set convergence criterion for max force < 0.05 eV/Å.

- Climbing Image: Enable the climbing image flag for the highest-energy image after ~50 optimization steps to push it to the saddle.

- Data Harvesting: Upon convergence, extract coordinates, energies, and forces for all images. The saddle point is the highest-energy image.
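To make the force projections behind Protocol C concrete, the following toy sketch runs a climbing-image NEB on an analytic two-dimensional surface using only NumPy. It is not the ASE/VASP workflow described above: the potential, the function names, the linear interpolation (in place of IDPP), and the steepest-descent update (in place of FIRE or BFGS) are simplifying assumptions. The 7 intermediate images, the spring constant of 5.0, and the switch to the climbing image after 50 steps mirror the protocol's settings.

```python
import numpy as np

K_VALLEY = 2.0  # stiffness of the curved valley in the toy potential

def energy(p):
    x, y = p
    return (x**2 - 1.0) ** 2 + K_VALLEY * (y - 0.5 * (1.0 - x**2)) ** 2

def gradient(p):
    x, y = p
    d = y - 0.5 * (1.0 - x**2)
    return np.array([4.0 * x * (x**2 - 1.0) + 2.0 * K_VALLEY * d * x,
                     2.0 * K_VALLEY * d])

def ci_neb(start, end, n_images=7, k_spring=5.0, step=0.01,
           n_steps=2000, climb_after=50):
    # Linear interpolation stands in for IDPP on this toy surface.
    path = np.linspace(start, end, n_images + 2)
    climber = None
    for it in range(n_steps):
        if it == climb_after:
            # Pick the highest-energy image once and let it climb from here on.
            climber = int(np.argmax([energy(p) for p in path]))
        new_path = path.copy()
        for i in range(1, n_images + 1):
            tangent = path[i + 1] - path[i - 1]
            tangent /= np.linalg.norm(tangent)
            force = -gradient(path[i])
            parallel = np.dot(force, tangent) * tangent
            if i == climber:
                # Climbing image: invert the force component along the path.
                total = force - 2.0 * parallel
            else:
                spring = k_spring * (np.linalg.norm(path[i + 1] - path[i])
                                     - np.linalg.norm(path[i] - path[i - 1]))
                # Perpendicular true force plus spring force along the tangent.
                total = (force - parallel) + spring * tangent
            new_path[i] = path[i] + step * total
        path = new_path
    return path, climber

path, climber = ci_neb(np.array([-1.0, 0.0]), np.array([1.0, 0.0]))
saddle_energy = energy(path[climber])
image_energies = [energy(p) for p in path]  # harvested along the converged path
```

The toy surface has minima at (±1, 0) and a saddle at (0, 0.5) with energy 1, so the climbing image should end up there. In a production run each image would instead carry coordinates, DFT energies, and forces that are all added to the training set.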

Visualizations

Workflow for MLIP Training Set Generation

PES Regions Targeted by Each Sampling Technique

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools & Materials

| Item/Category | Specific Examples | Function in Configuration Generation |

|---|---|---|

| Electronic Structure Code | VASP, CP2K, Quantum ESPRESSO, GPAW | Performs ab initio calculations (DFT) to provide reference energies, forces, and stresses for extracted configurations. |

| Atomistic Simulation Environment | ASE (Atomic Simulation Environment) | Python framework for setting up, running, and analyzing MD, phonon, and NEB calculations. Essential for workflow automation. |

| Phonon Analysis Software | Phonopy, ALM, PHON | Calculates force constants, normal modes, and generates displaced supercells for harmonic sampling. |

| NEB Implementation | ASE NEB, VTST-Tools (for VASP), LAMMPS NEB | Solves for the minimum energy path and saddle points between defined endpoints. |

| Force Optimizer | FIRE, BFGS, L-BFGS | Used in geometry optimization and NEB image relaxation to efficiently converge to minima or saddle points. |

| High-Performance Computing (HPC) | SLURM/PBS job schedulers, MPI parallelization | Enables computationally intensive AIMD and NEB calculations on clusters. |

| Data Curation & MLIP Framework | PyTorch Geometric, DGL, AMPTorch, MACE | Libraries for converting atomic configuration data into graph representations and training the MLIP models. |

| Visualization & Analysis | OVITO, VMD, Matplotlib, Pymatgen | For analyzing trajectories, phonon bands, NEB paths, and validating training set coverage. |


The development of robust Machine Learning Interatomic Potentials (MLIPs) hinges on the generation of comprehensive training sets that span the relevant configuration space of a material or molecular system. This process requires automated, high-throughput workflows for first-principles calculations, classical molecular dynamics, and active learning. The Atomic Simulation Environment (ASE), the Large-scale Atomic/Molecular Massively Parallel Simulator (LAMMPS), and modern MLIP frameworks form an integrated toolkit essential for this research. This document provides application notes and protocols for leveraging these tools within the context of automated training set generation for MLIPs.

Core Tools: Functions and Integration

Table 1: Core Software Tools for MLIP Automation

| Tool | Primary Function | Role in MLIP Training Set Generation |

|---|---|---|

| ASE | Python scripting interface for atomistic simulations. | Primary orchestrator. Handles I/O, structure manipulation, calculator setup (DFT), and workflow automation. |

| LAMMPS | High-performance classical MD simulator. | Explores configuration space via classical potentials, performs initial screening, and is a primary platform for MLIP deployment/inference. |

| MLIP Framework (e.g., MACE, NequIP, Allegro) | Provides models and training code for MLIPs. | Defines the MLIP architecture, manages training on quantum mechanical data, and provides interfaces for ASE/LAMMPS. |

| Quantum Espresso/VASP | First-Principles (DFT) Calculator. | Generates the target ab initio data (energies, forces, stresses) for training and validation. |

Protocol 1: Automated Initial Training Set Generation

Objective: Create a diverse initial dataset from a small set of primitive structures.

Materials & Workflow:

- Input: Primitive unit cells, a classical interatomic potential (e.g., EAM, ReaxFF).

- Protocol:

a. Phase Space Sampling: Use ASE's `calculator` interface to deploy a classical potential. Run a series of LAMMPS molecular dynamics simulations via `ase.calculators.lammpsrun`. Key simulations include:

* NVT MD at varying temperatures (300 K, 600 K, 900 K).

* NPT MD at varying pressures.

* Deformation simulations (shear, tensile).

b. Configuration Extraction: Periodically sample uncorrelated atomic configurations (snapshots) from the MD trajectories using ASE.

c. High-Throughput DFT Single-Point Calculations: For each snapshot, use ASE to write input files, submit a DFT calculation (e.g., via `ase.calculators.espresso.Espresso`), and parse the resulting energy, forces, and stress.

d. Dataset Assembly: Compile structures and their DFT-calculated properties into an ASE-readable database (e.g., `ase.db`).

Diagram 1: Workflow for generating an initial training set.

Research Reagent Solutions Table

| Item | Function in Protocol |

|---|---|

| ASE `Atoms` object | Central data structure for representing and manipulating atomic configurations. |

| ASE DB (Database) | SQLite-based storage for structures and calculated properties, enabling easy querying and retrieval. |

| ASE LAMMPSrun Calculator | Interface to execute LAMMPS simulations directly from an ASE script. |

| ASE Espresso/Vasp Calculator | Interface to set up and parse results from DFT software, abstracting file handling. |

Protocol 2: Active Learning Loop for Configuration Space Exploration

Objective: Iteratively improve MLIP accuracy and robustness by selectively querying DFT for configurations where the MLIP is uncertain.

Materials & Workflow:

- Input: Initial training database, an MLIP framework (e.g., MACE), a query strategy (e.g., D-optimal, committee-based uncertainty).

- Protocol:

a. MLIP Training: Train an MLIP model on the current database using the chosen framework.

b. Exploratory Sampling: Use the newly trained MLIP within LAMMPS (via its `mliap` interface) to perform extended, biased MD simulations (e.g., at very high temperature) to probe unexplored regions of configuration space.

c. Candidate Selection: From the exploratory MD, extract many new candidate structures. Use the query strategy to select the N most "informative" candidates (e.g., those with highest predictive variance from a committee of MLIPs).

d. DFT Query & Database Augmentation: Perform DFT calculations on the selected candidates and add them to the training database.

e. Validation & Convergence Check: Evaluate MLIP error metrics (see Table 2) on a held-out test set. Repeat from step (a) until errors converge below a target threshold.
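The candidate selection step can be sketched for the committee-based strategy as follows. This is one plausible scoring rule, not a prescribed one: the uncertainty of a structure is taken to be the largest per-atom norm of the force standard deviation across committee members, and the function names and synthetic predictions are assumptions made for the example.

```python
import numpy as np

def committee_force_uncertainty(force_predictions):
    """Per-structure uncertainty from a committee of MLIPs.

    force_predictions: array of shape (n_models, n_structures, n_atoms, 3).
    Returns, for each structure, the maximum over atoms of the norm of the
    committee standard deviation of the force vector.
    """
    f = np.asarray(force_predictions, dtype=float)
    std = f.std(axis=0)                      # (n_structures, n_atoms, 3)
    per_atom = np.linalg.norm(std, axis=-1)  # (n_structures, n_atoms)
    return per_atom.max(axis=1)

def select_candidates(force_predictions, n_select):
    """Indices of the n_select most uncertain structures, plus all scores."""
    scores = committee_force_uncertainty(force_predictions)
    order = np.argsort(scores)[::-1]
    return order[:n_select], scores

# Synthetic committee: 4 models, 6 candidate structures, 5 atoms each.
rng = np.random.default_rng(0)
noise_scale = np.array([0.01, 0.5, 0.02, 0.3, 0.01, 0.05])  # model disagreement
base = rng.normal(size=(1, 6, 5, 3))
preds = base + rng.normal(size=(4, 6, 5, 3)) * noise_scale[None, :, None, None]
selected, scores = select_candidates(preds, n_select=2)
```

Here structures 1 and 3 carry the largest disagreement and are the ones sent to DFT. Using the maximum over atoms, rather than the mean, favors structures with a single poorly described local environment.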

Diagram 2: Active learning loop for iterative dataset improvement.

Data Presentation and Performance Metrics

Table 2: Quantitative Error Metrics for MLIP Validation

| Metric | Formula (per atom/component) | Target Threshold (Typical) |

|---|---|---|

| Energy MAE | $\frac{1}{N}\sum_{i=1}^{N} \lvert E_{i}^{\text{DFT}} - E_{i}^{\text{MLIP}} \rvert$ | < 10 meV/atom |

| Force MAE | $\frac{1}{3N_{\text{atoms}}}\sum_{i=1}^{N_{\text{atoms}}} \sum_{\alpha} \lvert F_{i,\alpha}^{\text{DFT}} - F_{i,\alpha}^{\text{MLIP}} \rvert$ | < 100 meV/Å |

| Force RMSE | $\sqrt{\frac{1}{3N_{\text{atoms}}}\sum_{i,\alpha} (F_{i,\alpha}^{\text{DFT}} - F_{i,\alpha}^{\text{MLIP}})^2}$ | < 150 meV/Å |
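The metrics in Table 2 translate directly into NumPy. The sketch below assumes that energies are supplied as total energies per configuration and divided by the atom count to obtain per-atom errors, and that forces are pooled over all atoms and Cartesian components; the function names are illustrative.

```python
import numpy as np

def energy_mae_per_atom(e_dft, e_mlip, n_atoms):
    """Mean absolute energy error per atom over N configurations."""
    e_dft, e_mlip, n_atoms = (np.asarray(a, dtype=float)
                              for a in (e_dft, e_mlip, n_atoms))
    return float(np.mean(np.abs(e_dft - e_mlip) / n_atoms))

def force_mae(f_dft, f_mlip):
    """Mean absolute error over all atoms and Cartesian components."""
    diff = np.asarray(f_dft, dtype=float) - np.asarray(f_mlip, dtype=float)
    return float(np.mean(np.abs(diff)))

def force_rmse(f_dft, f_mlip):
    """Root-mean-square error over all atoms and Cartesian components."""
    diff = np.asarray(f_dft, dtype=float) - np.asarray(f_mlip, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

# Small worked example (energies in eV, forces in eV/Angstrom).
e_mae = energy_mae_per_atom([-10.0, -20.0], [-10.02, -19.96], [2, 4])
f_ref = np.zeros((2, 3))
f_pred = np.array([[0.1, -0.1, 0.0], [0.0, 0.2, -0.2]])
f_mae = force_mae(f_ref, f_pred)
f_rmse = force_rmse(f_ref, f_pred)
```

In this example the energy MAE is 0.01 eV/atom (10 meV/atom) and the force MAE is 0.1 eV/Å. The RMSE is larger than the MAE whenever errors are unevenly distributed, which is why both are worth reporting.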

Table 3: Example Performance of a MACE Model for NiMo Alloy

| Training Set Size | Energy MAE (meV/atom) | Force MAE (meV/Å) | Active Learning Cycle |

|---|---|---|---|

| 500 configurations | 8.2 | 112 | Initial |

| +100 queried | 5.1 | 78 | 1 |

| +80 queried | 3.7 | 62 | 2 |

| Target | < 5 | < 70 | Converged |
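The convergence check that closes each active learning cycle reduces to comparing the latest metrics with the targets. A minimal sketch using the values from Table 3 (the dictionary layout and function name are illustrative choices):

```python
# Targets and per-cycle metrics taken from Table 3 (meV/atom and meV/Angstrom).
TARGETS = {"energy_mae": 5.0, "force_mae": 70.0}

history = [
    {"cycle": 0, "n_configs": 500, "energy_mae": 8.2, "force_mae": 112.0},
    {"cycle": 1, "n_configs": 600, "energy_mae": 5.1, "force_mae": 78.0},
    {"cycle": 2, "n_configs": 680, "energy_mae": 3.7, "force_mae": 62.0},
]

def first_converged_cycle(history, targets):
    """Return the first cycle whose metrics are all below target, else None."""
    for record in history:
        if all(record[name] < limit for name, limit in targets.items()):
            return record["cycle"]
    return None

converged_at = first_converged_cycle(history, TARGETS)
```

For these numbers the loop stops at cycle 2, after 180 queried configurations have been added to the initial 500.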

Protocol 3: High-Throughput Validation and Deployment

Objective: Systematically validate the final MLIP and prepare it for production MD simulations.

Materials & Workflow:

- Input: Final trained MLIP model, held-out test set of DFT data.

- Validation Protocol:

a. Property Prediction: Use ASE to compute the MLIP-predicted energy, forces, and stress for all structures in the test set.

b. Error Analysis: Calculate metrics from Table 2. Generate parity plots (DFT vs. MLIP) for energies and forces.

c. Phonon & Elastic Constant Validation: Use ASE phonons and elastic constants modules with the MLIP as the calculator. Compare results to benchmark DFT calculations.

- Deployment Protocol:

a. LAMMPS Interface: Convert the native MLIP model to the required format (e.g., `.yaml` for `mliap` or `.pt` for `pair_style nequip`).

b. Production Run Script: Write a LAMMPS input script that loads the MLIP via `pair_style mliap` or `pair_style nequip` and specifies the model file.

c. Automated Analysis: Use ASE's `read` function to parse LAMMPS output trajectories for further analysis.

Research Reagent Solutions Table

| Item | Function in Protocol |

|---|---|

| ASE Phonons Class | Sets up force calculations for finite-displacement phonon analysis using any attached calculator (MLIP or DFT). |

| ASE ElasticConstant Class | Calculates elastic constants by applying strain and evaluating stress. |

| LAMMPS `pair_style mliap` | Generic interface for MLIPs, requiring a model file and a descriptor (e.g., SO3, SO4). |

| LAMMPS `pair_style nequip/allegro` | Native, optimized interfaces for specific modern MLIP architectures. |

This protocol provides a detailed case study on constructing a training dataset for a machine-learned interatomic potential (MLIP) focused on protein-ligand interactions. This work is framed within a broader thesis exploring systematic methodologies for generating representative configuration spaces for MLIP training. The central hypothesis is that the predictive accuracy and transferability of an MLIP are directly governed by the diversity and thermodynamic/kinetic relevance of the atomic configurations in its training set. This case study implements and validates a multi-fidelity, active learning-driven workflow for sampling the complex, high-dimensional energy landscape of a protein-ligand binding pocket.

Foundational Data and Motivation

Recent benchmarks highlight the performance gap between specialized scoring functions and general-purpose MLIPs on protein-ligand binding affinity prediction. The curated data in Table 1 underscores the need for training sets that capture the subtleties of non-covalent interactions.

Table 1: Benchmark Performance on Protein-Ligand Binding Affinity (ΔG) Prediction

| Method Type | Representative Model | PDBbind Core Set RMSE (kcal/mol) | Key Limitation |

|---|---|---|---|

| Classical Scoring Function | AutoDock Vina | ~3.0 | Simplified physics, fixed functional form |

| End-to-End Deep Learning | Pafnucy | ~1.4 | Black-box, limited extrapolation |

| General MLIP | ANI-2x | >4.0* | Trained on small molecules, lacks protein environment data |

| Target (This Study) | Specialized PL-MLIP | <1.2 (Goal) | Requires specialized, diverse training set |

*Estimated performance when applied directly to protein-ligand systems without retraining.

Protocol: Multi-Stage Training Set Construction

Stage 1: Initial Configurational Sampling via Enhanced MD

Objective: Generate a physically diverse set of protein-ligand conformations and complexes.

Materials & Reagents:

- Protein System: Target protein (e.g., Trypsin, PDB: 3PTB), prepared with protonation states assigned at pH 7.4.

- Ligand Set: 5-10 congeneric ligands with known binding affinities to the target.

- Software: GROMACS 2024.1 or OpenMM for MD simulation; PLUMED for enhanced sampling.

- Force Field: CHARMM36m for protein; CGenFF for ligands.

- Solvent Model: TIP3P water in a rhombic dodecahedron box, 1.2 nm minimum distance to box edge.

- Ions: 0.15 M NaCl for physiological ionic strength.
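As a quick worked example of the solvent and ion settings, the number of NaCl pairs needed for 0.15 M follows from the box volume. The sketch below assumes a rhombic dodecahedron with a 7 nm periodic image distance (a hypothetical value; the real distance depends on the protein size plus the 1.2 nm margin) and applies the concentration to the full box volume, ignoring the volume occupied by the protein and any extra counterions needed for neutralization.

```python
import math

AVOGADRO = 6.02214076e23   # particles per mole
LITERS_PER_NM3 = 1e-24     # 1 nm^3 expressed in liters

def rhombic_dodecahedron_volume(image_distance_nm):
    """Volume (nm^3) of a rhombic dodecahedron with the given image distance."""
    return math.sqrt(2.0) / 2.0 * image_distance_nm ** 3

def ion_pairs_for_concentration(volume_nm3, molar=0.15):
    """Number of salt ion pairs that gives the requested molarity in the box."""
    return round(molar * volume_nm3 * LITERS_PER_NM3 * AVOGADRO)

image_distance = 7.0  # nm, hypothetical
dodecahedron_pairs = ion_pairs_for_concentration(
    rhombic_dodecahedron_volume(image_distance))
cubic_pairs = ion_pairs_for_concentration(image_distance ** 3)
```

For the assumed 7 nm image distance this gives 22 ion pairs in the dodecahedron versus 31 in a cubic box with the same image distance, which reflects the roughly 29% smaller volume that makes the dodecahedron the cheaper choice for a roughly spherical solute.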

Procedure:

- System Preparation: For each ligand, generate initial pose via molecular docking (using Vina) into the protein's crystal structure binding site.