Force Fields in Molecular Dynamics: Calculating Atomic Forces from Fundamentals to Drug Discovery Applications

Abstract

This article provides a comprehensive overview of the critical role force fields play in calculating atomic forces for molecular dynamics (MD) simulations. Tailored for researchers, scientists, and drug development professionals, it explores the foundational principles of potential energy surfaces and the classification of classical, reactive, and machine learning force fields. It delves into methodological applications in biomolecular simulations and computer-aided drug design, addresses common troubleshooting and optimization strategies, and systematically reviews validation protocols and comparative assessments of modern force fields. The content synthesizes current challenges and future directions, highlighting the impact of advancing force field accuracy on biomedical and clinical research.

The Foundation of Atomic Forces: Understanding Potential Energy Surfaces and Force Field Principles

The Concept of the Potential Energy Surface (PES) in Computational Simulations

The Potential Energy Surface (PES) is a fundamental concept in computational chemistry and molecular physics that describes the energy of a system of atoms as a function of their positions [1] [2]. Conceptually, a PES can be visualized as a multidimensional landscape where the coordinates represent atomic positions and the "height" corresponds to the system's potential energy at that specific configuration [3]. For systems with only one coordinate, this is referred to as a potential energy curve or energy profile, while systems with more degrees of freedom form complex multidimensional surfaces [1]. This landscape analogy provides an intuitive framework for understanding molecular stability and reactivity: energy minima correspond to stable molecular structures such as reactants, products, or intermediates, while saddle points represent transition states that form the highest energy point along the reaction pathway [1] [4].
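
The distinction between minima and saddle points can be made concrete by inspecting the curvature of the surface. The following is a minimal sketch, assuming a hypothetical two-dimensional model surface (`energy`, a double well in x and a harmonic term in y, not taken from the cited references): a stationary point is classified by counting the negative eigenvalues of a finite-difference Hessian.

```python
import numpy as np

def energy(x, y):
    """Hypothetical 2D model PES: double well along x, harmonic along y."""
    return (x**2 - 1.0)**2 + y**2

def hessian(f, x, y, h=1e-4):
    """Central finite-difference Hessian of f at the point (x, y)."""
    fxx = (f(x + h, y) - 2.0 * f(x, y) + f(x - h, y)) / h**2
    fyy = (f(x, y + h) - 2.0 * f(x, y) + f(x, y - h)) / h**2
    fxy = (f(x + h, y + h) - f(x + h, y - h)
           - f(x - h, y + h) + f(x - h, y - h)) / (4.0 * h**2)
    return np.array([[fxx, fxy], [fxy, fyy]])

def classify(f, x, y):
    """Classify a stationary point by the number of negative Hessian eigenvalues."""
    eigenvalues = np.linalg.eigvalsh(hessian(f, x, y))
    n_negative = int(np.sum(eigenvalues < 0.0))
    if n_negative == 0:
        return "minimum"
    if n_negative == 1:
        return "first-order saddle point (transition state)"
    return "higher-order saddle point"

# The model surface has stationary points at (-1, 0), (0, 0) and (1, 0).
for point in [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]:
    print(point, classify(energy, *point))
```

On this model surface the two wells play the role of reactant and product, and the origin is the transition state connecting them.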

The PES is the foundation on which computational simulations are built: it supplies the relationship between molecular geometry and energy that determines system behavior at the atomic level [3]. First proposed by the French physicist René Marcelin in 1913, the PES concept has become indispensable for theoretically exploring molecular properties, predicting reaction pathways, and understanding chemical reaction dynamics [1]. In pharmaceutical research and drug development, accurate PES descriptions enable researchers to explore protein-ligand interactions, predict binding affinities, and understand conformational changes in biomolecules, which makes the PES a critical tool in rational drug design.

Mathematical Formulation of PES

The geometry of a collection of atoms can be described by a vector r, whose elements represent the atom positions in either Cartesian coordinates or internal coordinates such as interatomic distances and angles [1]. The potential energy surface defines the energy as a function of these positions, E(r), across all configurations of interest [1] [2]. For most chemical systems, obtaining a complete analytical representation of the PES is computationally prohibitive, leading to the use of approximations and interpolation methods for practical applications [1].

In the vicinity of energy minima, the PES for larger molecules is often described using a Taylor series expansion about the minimum point [4]:

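\[ U(\mathbf{R}) = U_e + \frac{1}{2} \sum_{i}\sum_{j} k_{ij}\, q_i q_j + \frac{1}{6} \sum_{i}\sum_{j}\sum_{k} k_{ijk}\, q_i q_j q_k + \frac{1}{24} \sum_{i}\sum_{j}\sum_{k}\sum_{l} k_{ijkl}\, q_i q_j q_k q_l + \cdots \]

where \( U_e \) is the energy at the equilibrium geometry, \( k_{ij} \), \( k_{ijk} \), and \( k_{ijkl} \) are the quadratic, cubic, and quartic force constants (taken here as the second, third, and fourth derivatives of the energy at the minimum, hence the Taylor prefactors 1/2, 1/6, and 1/24), and \( \{q_j\} \) are the displacements of the internal coordinates from equilibrium [4]. The linear term is absent because the gradient vanishes at a minimum. This formulation, known as an anharmonic force field, provides a mathematical foundation for understanding molecular vibrations and reaction pathways. When cross terms are eliminated and the expansion is truncated after the quadratic terms, it becomes a harmonic force field or normal-mode expansion, which offers computational advantages while remaining physically meaningful for small displacements [4].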
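
How quickly such an expansion converges can be checked on a one-dimensional example. The sketch below assumes a Morse oscillator with hypothetical parameters `D` and `A` (illustrative values, not from the cited references) and compares the expansion truncated at second, third, and fourth order against the exact potential. The force constants are the analytic derivatives of the Morse function at its minimum.

```python
import math

D = 400.0   # hypothetical well depth, kJ/mol
A = 20.0    # hypothetical width parameter, 1/nm

def morse(q):
    """Exact Morse energy for a displacement q (nm) from equilibrium."""
    return D * (1.0 - math.exp(-A * q))**2

# Analytic derivatives of the Morse potential at q = 0.
K2 = 2.0 * D * A**2     # quadratic (harmonic) force constant
K3 = -6.0 * D * A**3    # cubic force constant
K4 = 14.0 * D * A**4    # quartic force constant

def taylor(q, order):
    """Taylor expansion about the minimum, truncated at the given order (2, 3 or 4)."""
    terms = [K2 * q**2 / 2.0, K3 * q**3 / 6.0, K4 * q**4 / 24.0]
    return sum(terms[: order - 1])

q = 0.005  # displacement in nm
for order in (2, 3, 4):
    error = abs(taylor(q, order) - morse(q))
    print(f"order {order}: absolute error = {error:.5f} kJ/mol")
```

For this small displacement each additional order reduces the error, which is the sense in which the harmonic truncation is adequate only close to the minimum.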

The dimensionality of a PES presents significant computational challenges. For a system of N atoms, 3N-6 internal coordinates are required to define the configuration (3N-5 for linear molecules) [2]. This high dimensionality makes complete mapping of the PES infeasible for all but the smallest molecular systems, necessitating focused exploration of relevant regions such as reaction coordinates connecting reactants to products.
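
The scale of the problem is easy to quantify. As a rough sketch, assume (purely for illustration) that each internal coordinate is sampled at 10 grid points; the function names below are hypothetical.

```python
def internal_degrees_of_freedom(n_atoms, linear=False):
    """Number of internal coordinates: 3N-6, or 3N-5 for linear molecules."""
    if n_atoms < 2 or (n_atoms == 2 and not linear):
        raise ValueError("need at least two atoms; a diatomic is always linear")
    return 3 * n_atoms - (5 if linear else 6)

def grid_points(n_atoms, points_per_coordinate=10, linear=False):
    """Energy evaluations needed to map the full PES on a regular grid."""
    return points_per_coordinate ** internal_degrees_of_freedom(n_atoms, linear)

print(internal_degrees_of_freedom(3))               # water: 3 coordinates
print(internal_degrees_of_freedom(3, linear=True))  # CO2: 4 coordinates
print(grid_points(12))                              # benzene: 10**30 evaluations
```

Even a 12-atom molecule would need 10^30 energy evaluations on such a grid, which is why practical work concentrates on selected regions of the surface.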

The Role of Force Fields in Calculating PES and Atomic Forces

Fundamental Principles of Force Fields

Force fields provide the computational machinery to translate atomic configurations into energy values and forces, essentially operationalizing the PES concept for practical simulations [5]. In the context of chemistry and molecular modeling, a force field is a computational model that describes the forces between atoms within molecules or between molecules through empirical potential energy functions and corresponding parameter sets [5]. These mathematical models calculate the potential energy of a system at the atomistic level, and the force acting on each particle is obtained as the negative gradient of the potential energy with respect to that particle's coordinates [5].
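
The relationship between energy and force can be illustrated numerically. The following sketch differentiates an arbitrary energy function by central finite differences and takes the negative of the result. The helper `numerical_forces` and the two-atom harmonic test system (with hypothetical `k` and `l0`) are assumptions made for the example; production codes use analytic derivatives instead.

```python
import numpy as np

def numerical_forces(energy_fn, coords, h=1e-5):
    """Forces as the negative central-difference gradient of energy_fn."""
    coords = np.asarray(coords, dtype=float)
    forces = np.zeros_like(coords)
    for idx in np.ndindex(coords.shape):
        plus = coords.copy()
        minus = coords.copy()
        plus[idx] += h
        minus[idx] -= h
        forces[idx] = -(energy_fn(plus) - energy_fn(minus)) / (2.0 * h)
    return forces

def harmonic_bond_energy(coords, k=1000.0, l0=0.1):
    """Harmonic bond between atoms 0 and 1: (k/2) * (l - l0)**2."""
    length = np.linalg.norm(coords[1] - coords[0])
    return 0.5 * k * (length - l0)**2

# Two atoms 0.12 nm apart along x, i.e. stretched by 0.02 nm.
coords = np.array([[0.0, 0.0, 0.0], [0.12, 0.0, 0.0]])
forces = numerical_forces(harmonic_bond_energy, coords)
print(forces)
```

The stretched bond pulls the two atoms toward each other with equal and opposite forces of magnitude k(l - l0), so the net force on the pair is zero.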

The basic functional form for molecular mechanics force fields decomposes the total energy into bonded and nonbonded components [5]:

\[ E_{\text{total}} = E_{\text{bonded}} + E_{\text{nonbonded}} \]

where \( E_{\text{bonded}} = E_{\text{bond}} + E_{\text{angle}} + E_{\text{dihedral}} \) accounts for covalent interactions, and \( E_{\text{nonbonded}} = E_{\text{electrostatic}} + E_{\text{van der Waals}} \) describes noncovalent interactions between atoms [5]. This additive approach allows for computationally efficient evaluation of complex molecular systems while maintaining physical relevance through careful parameterization.

Force Field Components and Their Physical Significance

The individual components of force fields represent specific physical interactions that collectively describe the complete PES:

Bond stretching: Typically modeled using a harmonic potential approximating Hooke's law: \( E_{\text{bond}} = \frac{k_{ij}}{2}(l_{ij} - l_{0,ij})^2 \), where \( k_{ij} \) is the bond force constant, \( l_{ij} \) is the actual bond length, and \( l_{0,ij} \) is the equilibrium bond length [5]. For greater accuracy in describing bond dissociation, the more computationally expensive Morse potential may be employed.

Angle bending: Represented by quadratic energy functions that maintain the appropriate geometric arrangement between triplets of bonded atoms.

Dihedral terms: Describe the energy associated with rotation around bonds, with functional forms varying between different force fields. Additional "improper torsional" terms may enforce planarity in aromatic rings and conjugated systems [5].

Van der Waals interactions: Typically computed using a Lennard-Jones potential or Mie potential to account for attractive dispersion forces and Pauli repulsion at short ranges [5].

Electrostatic interactions: Represented by Coulomb's law: \( E_{\text{Coulomb}} = \frac{1}{4\pi\varepsilon_0}\frac{q_i q_j}{r_{ij}} \), where \( q_i \) and \( q_j \) are atomic partial charges, and \( r_{ij} \) is the distance between atoms [5]. These terms often dominate interactions in polar molecules and ionic compounds.
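
The two nonbonded terms above can be evaluated for a single atom pair in a few lines. This is a minimal sketch, assuming the common 12-6 form of the Lennard-Jones potential and MD-style units (nm, kJ/mol, elementary charges); the function names and the example parameters are hypothetical.

```python
# 1/(4*pi*eps0) expressed in kJ mol^-1 nm e^-2, the unit system used by
# several MD engines.
COULOMB_CONSTANT = 138.935458

def lennard_jones(r, epsilon, sigma):
    """12-6 Lennard-Jones energy: 4*eps*[(sigma/r)**12 - (sigma/r)**6]."""
    sr6 = (sigma / r)**6
    return 4.0 * epsilon * (sr6**2 - sr6)

def coulomb(r, q_i, q_j):
    """Coulomb energy between two point charges separated by r (nm)."""
    return COULOMB_CONSTANT * q_i * q_j / r

# Hypothetical pair: sigma = 0.3 nm, epsilon = 0.5 kJ/mol, charges +0.4 and -0.4 e.
r = 0.35
print(lennard_jones(r, epsilon=0.5, sigma=0.3), coulomb(r, 0.4, -0.4))
```

The Lennard-Jones energy is zero at r = sigma and reaches its minimum of -epsilon at r = 2^(1/6) sigma, while the Coulomb term decays only as 1/r, which is why electrostatics tend to dominate at long range.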

Force Field Parameterization Strategies

The accuracy and transferability of force fields depend critically on their parameter sets, which are derived through various empirical and theoretical approaches [5]. Parameterization strategies can be broadly categorized as:

Component-specific parametrization: Developed solely for describing a single substance (e.g., water) [5].

Transferable parametrization: Parameters designed as building blocks applicable to different substances (e.g., methyl groups in alkane force fields) [5].

Parameters may be derived from experimental data, quantum mechanical calculations, or a combination of both [5]. Modern parameterization often uses quantum mechanical calculations for intramolecular interactions and fits dispersive interactions to macroscopic properties such as liquid densities [5]. The assignment of atomic charges is a particularly challenging aspect, often following quantum mechanical protocols with heuristic adjustments [5].

Recent efforts have focused on systematic parameterization approaches to address limitations in earlier force fields. As demonstrated in studies of AMBER force fields, improper balancing of dihedral terms can lead to biased conformational sampling, such as overstabilization of α-helices [6]. Improved parameter sets like ff99SB achieve better balance of secondary structure elements through careful fitting of φ/ψ dihedral terms to quantum mechanical calculations of glycine and alanine tetrapeptides [6].
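
Fitting dihedral terms to a reference energy profile reduces to linear least squares when the phases are fixed. The sketch below is an illustration under stated assumptions, not the procedure used for any published parameter set: the reference profile is synthetic, the phases are fixed at zero so that the energy is a constant plus a sum of cosines, and `fit_cosine_series` is a hypothetical helper.

```python
import numpy as np

def fit_cosine_series(phi, energy, max_n=3):
    """Least-squares fit of E(phi) = c0 + sum_n a_n * cos(n * phi)."""
    columns = [np.ones_like(phi)]
    columns += [np.cos(n * phi) for n in range(1, max_n + 1)]
    design = np.column_stack(columns)
    coeffs, *_ = np.linalg.lstsq(design, energy, rcond=None)
    return coeffs  # [c0, a_1, ..., a_max_n]

# Synthetic "reference" scan every 15 degrees, built from
# K_1 = 2.0 (n = 1) and K_3 = 0.5 (n = 3) in the form K_n * [1 + cos(n * phi)].
phi = np.deg2rad(np.arange(-180, 180, 15))
reference = 2.0 * (1.0 + np.cos(phi)) + 0.5 * (1.0 + np.cos(3.0 * phi))

coeffs = fit_cosine_series(phi, reference)
print(np.round(coeffs, 6))
```

The fit recovers the amplitudes used to build the profile, with the constant offset absorbing the sum of the K_n. In a real parameterization the reference energies would come from quantum mechanical scans, and a negative fitted amplitude corresponds to a phase of 180 degrees.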

Practical Implementation in Drug Development and Research

Force Field Selection and Validation for Biomolecular Systems

The selection of appropriate force fields is critical for reliable computational predictions in pharmaceutical research. Recent comparative studies evaluate force field performance across diverse biomolecular systems:

Table 1: Comparison of Force Field Performance for β-Peptides [7]

| Force Field | Parametrization Approach | Systems Accurately Modeled | Key Strengths | Limitations |
|---|---|---|---|---|
| CHARMM | Torsional energy path matching against QM calculations [7] | All 7 test peptides (monomeric and oligomeric) [7] | Reproduced experimental structures accurately; correct description of oligomeric systems [7] | Requires specific extension for β-peptides [7] |
| AMBER | Multiple variants with different parametrization strategies [7] [6] | 4 of 7 test peptides (those containing cyclic β-amino acids) [7] | Maintained pre-formed associates; reasonable for specific structural types [7] | Unable to yield spontaneous oligomer formation; inconsistent glycine preferences in some variants [7] [6] |
| GROMOS | Native support "out of the box" [7] | 4 of 7 test peptides [7] | No additional parametrization needed for supported residues [7] | Lowest performance in reproducing experimental secondary structures [7] |

This comparative analysis highlights how force field performance varies significantly across different molecular systems, emphasizing the importance of selecting force fields with demonstrated accuracy for specific applications, particularly when studying non-natural peptidomimetics with potential pharmaceutical applications [7].

Advanced Applications in Pharmaceutical Development

Potential energy surfaces and force field simulations enable critical applications throughout the drug development pipeline:

Peptidomimetic Drug Design: Molecular dynamics simulations based on accurate PES descriptions facilitate the design of β-peptides and other peptidomimetics with stable secondary structures (helices, sheets, hairpins) and specific oligomerization behavior [7]. These non-natural compounds show promise in diverse applications including nanotechnology, biomedical fields, catalysis, and biotechnology [7].

Oral Peptide Formulation: Computational studies of peptide conformation and stability on PES contribute to strategies for improving oral bioavailability of peptide-based drugs, including amino acid modification, cyclization, and nanoparticle encapsulation [8]. With the global peptide-based drug market expected to reach approximately $80 billion by 2032, these applications have significant commercial and therapeutic implications [8].

Polyelectrolyte Complexes for Drug Delivery: PES analyses inform the development of polyelectrolyte complexes (PECs) formed through electrostatic interactions between oppositely charged polyions [9]. These systems enable environmentally friendly preparation methods and protect therapeutic agents including small synthetic drugs and biomacromolecules [9].

Pickering Emulsions in Pharmaceutical Formulations: Molecular simulations guide the design of particle-stabilized Pickering emulsions for drug delivery applications, enhancing stability, reducing toxicity, and enabling controlled drug release patterns [10].

Computational Methodologies and Protocols

Practical implementation of PES-based simulations follows rigorous computational protocols:

Table 2: Key Methodological Components for PES Studies of β-Peptides [7]

| Methodological Aspect | Implementation Details | Purpose/Rationale |
|---|---|---|
| System Preparation | Built using PyMOL with specialized extensions for β-peptides; correct termini applied as reported in literature [7] | Ensure accurate initial structures matching experimental conditions |
| Simulation Software | GROMACS as common simulation engine [7] | Avoid effects due to differences in algorithms across force-field-specific codes [7] |
| Solvation | Placed in center of cubic box with at least 1.4 nm peptide-wall distance; solvated with pre-equilibrated solvent [7] | Create physiologically relevant environment while maintaining computational efficiency |
| Dynamics Protocol | Steepest descent energy minimization; NVT ensemble MD run with position restraints; 500 ns production simulations [7] | Remove steric clashes; equilibrate temperature; sufficient sampling for conformational analysis |
| Analysis Methods | Custom Python packages (e.g., "gmxbatch") for trajectory analysis [7] | Standardized, reproducible analysis of multiple simulation systems |

The simulation workflow typically begins with system preparation and energy minimization, followed by gradual equilibration of temperature and pressure, and finally production simulations sufficient to capture the relevant dynamics [7]. For association studies, multiple copies of the solvated peptide may be assembled in simulation boxes to observe spontaneous oligomerization behavior [7].
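
The minimize-then-simulate sequence described above can be sketched on a toy system. The example below is not a GROMACS workflow: it assumes a single Lennard-Jones pair in reduced units (epsilon = sigma = mass = 1), uses a fixed-step steepest descent, and propagates the separation with the velocity Verlet integrator. All function names and numerical settings are illustrative.

```python
def lj_energy(r):
    """Lennard-Jones pair energy in reduced units."""
    sr6 = (1.0 / r)**6
    return 4.0 * (sr6 * sr6 - sr6)

def lj_force(r):
    """Force along r, i.e. -dE/dr for the Lennard-Jones pair."""
    sr6 = (1.0 / r)**6
    return 24.0 * (2.0 * sr6 * sr6 - sr6) / r

def steepest_descent(r, step=0.01, tol=1e-8, max_iter=10000):
    """Move downhill along the force until it is smaller than tol."""
    for _ in range(max_iter):
        force = lj_force(r)
        if abs(force) < tol:
            break
        r += step * force
    return r

def velocity_verlet(r, v, dt=0.002, n_steps=5000, mass=1.0):
    """Propagate (r, v) and record the total energy at every step."""
    force = lj_force(r)
    energies = []
    for _ in range(n_steps):
        v += 0.5 * dt * force / mass   # first half kick
        r += dt * v                    # drift
        force = lj_force(r)
        v += 0.5 * dt * force / mass   # second half kick
        energies.append(0.5 * mass * v * v + lj_energy(r))
    return r, v, energies

r_min = steepest_descent(1.5)                       # step 1: minimization
_, _, energies = velocity_verlet(r_min + 0.05, 0.0) # step 2: dynamics
drift = max(energies) - min(energies)
print(f"minimum at r = {r_min:.6f}, energy fluctuation = {drift:.2e}")
```

The minimizer converges to r = 2^(1/6), and the small energy fluctuation during the dynamics is the usual sanity check that the time step is short enough. Equilibration with thermostats and barostats, as used in the protocol above, is omitted from this sketch.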

Computational Tools for PES Exploration

Table 3: Key Research Reagent Solutions for PES Studies

| Tool/Resource | Type | Function/Purpose | Examples/Notes |
|---|---|---|---|
| Molecular Dynamics Engines | Software | Simulate temporal evolution of molecular systems using force fields | GROMACS [7], AMBER [6], CHARMM [7] |
| Force Field Databases | Data Repository | Provide validated parameter sets for specific molecular classes | OpenKIM [5], TraPPE [5], MolMod [5] |
| Quantum Chemistry Packages | Software | Provide reference data for force field parametrization and validation | Used for calculating high-level reference data for dihedral parameter fitting [6] |
| System Building Tools | Software | Prepare initial molecular structures and simulation systems | PyMOL with specialized extensions [7] |
| Trajectory Analysis Tools | Software | Analyze simulation outputs for structural and energetic properties | Custom Python packages (e.g., "gmxbatch") [7] |

Specialized Force Fields for Pharmaceutical Applications

The development of specialized force fields has expanded the scope of PES applications in pharmaceutical research:

Protein Force Fields: Continuous refinement of parameters for biological macromolecules, with improvements focusing on better balancing secondary structure preferences and correcting systematic biases [6]. Modern versions address limitations in earlier force fields that over-stabilized specific conformations like α-helices [6].

Peptidomimetic Force Fields: Specialized parameters for non-natural amino acids and structural motifs enable accurate simulation of peptide foldamers with applications in drug design [7]. These include extensions for β-amino acids that accurately reproduce experimental structures and association behavior [7].

Coarse-Grained Models: Reduced-complexity force fields that sacrifice atomic detail for longer timescales and larger systems, particularly useful for studying macromolecular complexes and aggregation processes [5].

Emerging Trends and Methodological Advances

The field of PES exploration and force field development continues to evolve through several key trends:

Machine Learning Potentials: Emerging approaches use machine learning algorithms to construct highly accurate PES with quantum mechanical fidelity at computational costs comparable to classical force fields [4]. Kernel methods and neural networks are among the dominating machine learning algorithms applied for learning PES, resulting in potentials suitable for molecular dynamics and vibrational spectroscopy [4].

Multidimensional Free Energy Simulations: Advanced sampling techniques enable construction of multidimensional potentials of mean force (PMF) along multiple reaction coordinates simultaneously [4]. These approaches provide more complete descriptions of complex conformational changes and reaction pathways, such as the demonstrated 3-D PMF simulation of the ene reaction between singlet oxygen and tetramethylethylene [4].

Automated Parameterization: Efforts to develop open source codes and methods for semi-automated or fully automated force field parametrization aim to increase reproducibility and reduce subjectivity in parameter development [5].
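
For the potentials of mean force mentioned among these trends, the basic relation is F(x) = -k_B T ln p(x), where p(x) is the probability density of the chosen coordinate. The sketch below assumes unbiased sampling of a one-dimensional harmonic coordinate, with synthetic Gaussian samples standing in for a trajectory; enhanced-sampling methods and the reweighting they require are outside its scope, and the helper name is hypothetical.

```python
import numpy as np

KB = 0.0083144626  # Boltzmann constant in kJ mol^-1 K^-1

def pmf_from_samples(samples, temperature, bins=40, value_range=(-3.0, 3.0)):
    """Histogram-based PMF, shifted so that its minimum is zero."""
    density, edges = np.histogram(samples, bins=bins,
                                  range=value_range, density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])
    populated = density > 0            # avoid log(0) in empty bins
    pmf = -KB * temperature * np.log(density[populated])
    return centers[populated], pmf - pmf.min()

temperature = 300.0
kt = KB * temperature
force_constant = kt  # chosen so the coordinate has unit variance

# Synthetic stand-in for a trajectory: Boltzmann samples of U = 0.5 * k * x**2.
rng = np.random.default_rng(0)
samples = rng.normal(0.0, np.sqrt(kt / force_constant), size=200_000)

centers, pmf = pmf_from_samples(samples, temperature)
print(np.round(pmf[:5] / kt, 2))
```

Within statistical noise, the recovered profile follows the harmonic potential used to generate the samples. A multidimensional PMF uses the same relation with a multidimensional histogram, which is where sampling cost grows quickly.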

The Potential Energy Surface represents the fundamental connection between molecular structure and energetic properties that dictate chemical behavior and reactivity. Through the computational framework provided by force fields, researchers can navigate these complex multidimensional landscapes to predict molecular properties, understand reaction mechanisms, and design novel therapeutic compounds. The continued refinement of force field parameters and simulation methodologies ensures increasingly accurate representation of PES for complex biomolecular systems, further strengthening the role of computational simulations in pharmaceutical research and drug development.

As force field methodologies evolve toward more automated parameterization and machine learning approaches, and as computational resources continue to grow, the resolution and accuracy of PES exploration will further improve, enabling more reliable predictions and expanding the boundaries of computational drug design. The integration of these advanced computational approaches with experimental validation creates a powerful framework for addressing complex challenges in modern pharmaceutical development.

Calculating atomic forces is a fundamental task in computational chemistry, essential for predicting molecular behavior, reaction mechanisms, and material properties. The central challenge in this field lies in navigating the inherent trade-off between computational accuracy and efficiency. On one end of the spectrum, quantum mechanical (QM) methods provide high accuracy by explicitly solving the electronic structure problem, while on the other, molecular mechanics (MM) force fields offer computational efficiency through simplified physical potential functions [11] [12]. The role of force fields in this landscape is to enable the simulation of large molecular systems over biologically and materially relevant timescales, bridging the gap between theoretical accuracy and practical applicability in drug discovery and materials science [13] [14].

Theoretical Foundations: QM and Force Fields

Quantum Mechanical Methods

Quantum mechanics serves as the theoretical bedrock of computational chemistry, providing a rigorous framework for understanding molecular structure and reactivity at the atomic level. QM methods explicitly describe electrons by solving the Schrödinger equation, yielding highly accurate potential energy surfaces [11] [12].

Key QM Methodologies:

- Density Functional Theory (DFT): Balances computational cost with reasonable accuracy for many chemical systems, with enhancements like empirical dispersion corrections (DFT-D3, DFT-D4) improving performance for non-covalent interactions [11].

- Coupled Cluster Theory (CCSD(T)): Widely regarded as the "gold standard" for quantum chemical accuracy but computationally demanding, scaling approximately as N^7 with system size [15] [12].

- Quantum Monte Carlo (QMC): Provides an alternative high-accuracy approach, with recent benchmarks showing agreement within 0.5 kcal/mol with CCSD(T) for non-covalent interactions [15].
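
The practical meaning of these scaling laws is easiest to see as a cost ratio. The sketch below assumes idealized power-law scaling with no prefactors; the cubic DFT exponent and the linear force field exponent are typical textbook values, and the function name is hypothetical.

```python
def cost_ratio(size_factor, exponent):
    """Relative cost increase when the system grows by size_factor under O(N**exponent)."""
    return size_factor ** exponent

SCALING_EXPONENTS = {
    "CCSD(T)": 7,
    "DFT (cubic)": 3,
    "linear-scaling force field": 1,
}

for method, exponent in SCALING_EXPONENTS.items():
    print(f"{method}: doubling the system costs {cost_ratio(2, exponent)}x more")
```

Doubling the number of atoms multiplies an N^7 calculation by 128, which explains why CCSD(T) is reserved for benchmarks on small systems.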

Molecular Mechanics Force Fields

Force fields calculate a system's energy using simplified interatomic potential functions based on the Born-Oppenheimer approximation, which separates nuclear and electronic motions. The general functional form includes terms for bonded interactions (bonds, angles, dihedrals) and non-bonded interactions (electrostatics, van der Waals) [13] [12].

Force Field Classification:

- Classical Force Fields: Use fixed bonding patterns and harmonic potentials, suitable for simulating biomolecules and materials where bond breaking/formation does not occur [12].

- Reactive Force Fields: Incorporate bond-order formalism to enable dynamic bond formation and breaking during simulations [12].

- Machine Learning Force Fields: Employ neural networks to learn potential energy surfaces from QM data, offering near-QM accuracy with significantly reduced computational cost [13] [16].
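
The idea of learning a potential energy surface from reference data can be shown in one dimension. The sketch below uses kernel ridge regression with a Gaussian kernel rather than a neural network, purely to stay dependency-light. The "reference data" are a hypothetical Morse curve standing in for QM energies, and the length scale and regularization are untuned illustrative choices.

```python
import numpy as np

def morse(x, depth=1.0, width=2.0, x_eq=1.0):
    """Stand-in for reference (QM) energies along one coordinate."""
    return depth * (1.0 - np.exp(-width * (x - x_eq)))**2

def gaussian_kernel(a, b, length_scale=0.2):
    """Gaussian (RBF) kernel matrix between two sets of 1D points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale)**2)

def fit_krr(x_train, y_train, regularization=1e-6):
    """Solve (K + lambda * I) w = y for the regression weights."""
    kernel = gaussian_kernel(x_train, x_train)
    return np.linalg.solve(kernel + regularization * np.eye(len(x_train)), y_train)

def predict_krr(x_new, x_train, weights):
    """Predicted energy at new points as a weighted sum of kernels."""
    return gaussian_kernel(x_new, x_train) @ weights

x_train = np.linspace(0.8, 2.0, 15)
weights = fit_krr(x_train, morse(x_train))

# Evaluate at the midpoints between training geometries.
x_test = 0.5 * (x_train[:-1] + x_train[1:])
max_error = np.max(np.abs(predict_krr(x_test, x_train, weights) - morse(x_test)))
print(f"largest interpolation error: {max_error:.2e}")
```

Real machine learning force fields work in many dimensions, build in the symmetries of the system, and usually train on forces as well as energies, but the fit-then-predict structure is the same.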

Quantitative Comparison: Accuracy vs. Computational Cost

The table below summarizes the key trade-offs between different methods for calculating atomic forces:

Table 1: Comparison of Methods for Calculating Atomic Forces and Energies

| Method | Computational Scaling | Typical System Size | Time Scale | Accuracy (Energy) | Key Applications |
|---|---|---|---|---|---|
| CCSD(T)/QMC | O(N^7) / Exponential | 10-100 atoms | N/A | 0.1-1 kcal/mol | Benchmarking, Small molecule precision [15] [12] |
| DFT | O(N^3)-O(N^4) | 100-1000 atoms | Picoseconds | 1-5 kcal/mol | Reaction mechanisms, Materials design [11] [12] |
| ML Force Fields | O(N) (after training) | 1000-100,000 atoms | Nanoseconds | 1-3 kcal/mol | Molecular dynamics, Materials screening [13] [16] |
| Classical FF | O(N)-O(N^2) | 10^4-10^8 atoms | Nanoseconds to microseconds | 3-10 kcal/mol | Protein folding, Drug binding [13] [12] |

Table 2: Characteristics of Different Force Field Types

| Force Field Type | Number of Parameters | Interpretability | Reactive Capability | Representative Examples |
|---|---|---|---|---|
| Classical | 10-100 | High | No | AMBER, CHARMM, OPLS [12] |
| Reactive | 100-1000 | Medium | Yes | ReaxFF [12] |
| Machine Learning | 10^4-10^8 | Low to Medium | Possible | Grappa, E2GNN, NequIP [13] [16] |

Methodological Approaches and Workflows

High-Accuracy Quantum Mechanical Benchmarking

The QUID ("QUantum Interacting Dimer") framework exemplifies rigorous benchmark development for biological ligand-pocket interactions [15]:

System Selection:

- Large Monomers: Nine chemically diverse drug-like molecules (~50 atoms) with flexible chain-like geometry from the Aquamarine dataset

- Small Monomers: Benzene (C6H6) and imidazole (C3H4N2) representing common ligand motifs

- Interaction Types: Covers aliphatic-aromatic, H-bonding, and π-stacking interactions prevalent in protein-ligand systems

Conformation Generation:

- Equilibrium Dimers: Initial alignment of aromatic rings at 3.55±0.05 Å distance, followed by optimization at PBE0+MBD theory level

- Classification: Categorization as 'Linear,' 'Semi-Folded,' or 'Folded' based on large monomer geometry

- Non-Equilibrium Dimers: Generation along dissociation pathway (8 distances from 0.90 to 2.00 times equilibrium distance)

Benchmarking Protocol:

- Platinum Standard Establishment: Achieving tight agreement (<0.5 kcal/mol) between complementary methods (LNO-CCSD(T) and FN-DMC)

- Component Analysis: Using symmetry-adapted perturbation theory to decompose interaction energies

- Method Evaluation: Assessing performance of DFT, semiempirical, and force field methods across equilibrium and non-equilibrium geometries

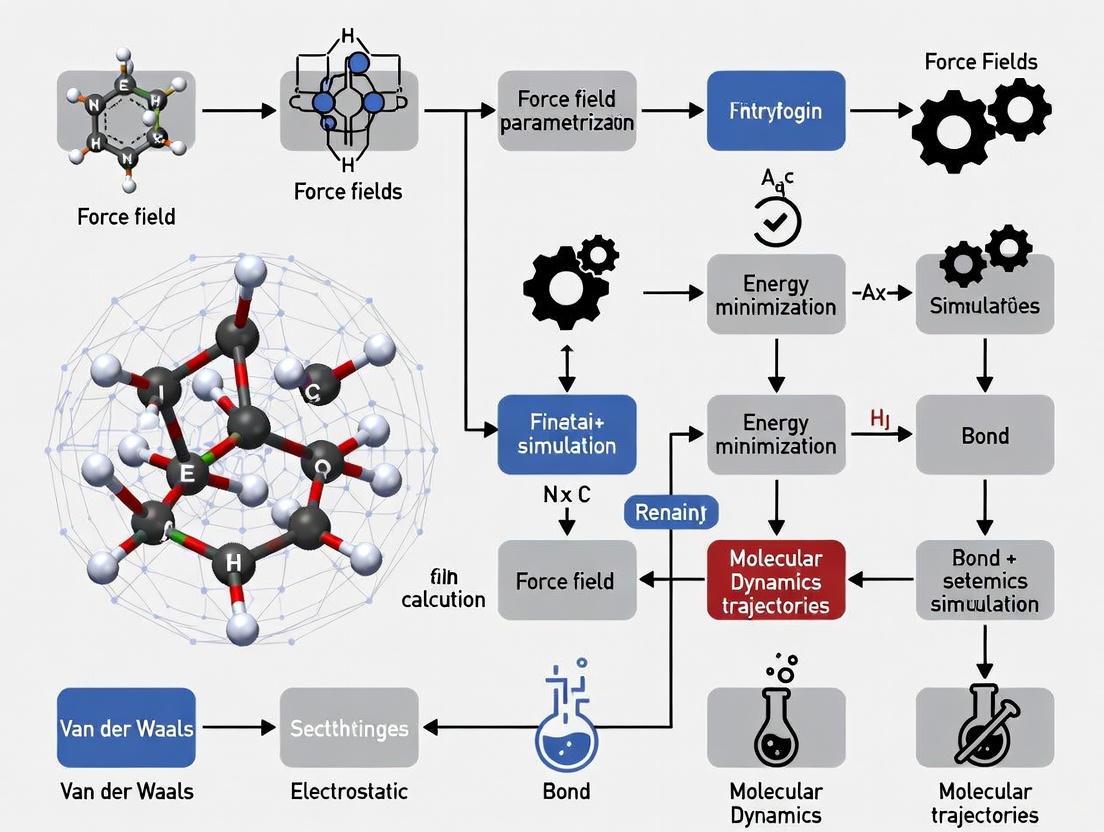

Figure 1: QM Benchmarking Workflow for Ligand-Pocket Interactions

Machine Learning Force Field Development

Grappa represents a modern approach to machine-learned molecular mechanics force fields [13]:

Architecture Components:

- Graph Representation: Molecular graph with atoms as nodes and bonds as edges

- Atom Embeddings: d-dimensional features generated using graph attentional neural network

- Parameter Prediction: Transformer with symmetry-preserving positional encoding predicts MM parameters from atom embeddings

- Symmetry Enforcement: Model respects permutation symmetries of MM energy contributions

Training Methodology:

- End-to-End Optimization: Differentiable mapping from molecular graph to energy enables optimization on QM energies and forces

- Data Efficiency: Simple input features (molecular graph) eliminate need for hand-crafted chemical features

- Transfer Learning: Demonstrated extension to peptide radicals and transferability to macromolecules

Validation Protocols:

- Energy/Force Accuracy: Evaluation on >14,000 molecules with >1 million conformations (Espaloma dataset)

- Dihedral Landscapes: Assessment of peptide dihedral angle potential energy surfaces

- Experimental Validation: Reproduction of measured J-couplings and protein folding behavior

Figure 2: Machine Learning Force Field Development Pipeline

Research Reagents: Essential Computational Tools

Table 3: Key Computational Tools for Force Field Development and Application

| Tool Category | Representative Examples | Primary Function | Application Context |
|---|---|---|---|
| QM Benchmarking | LNO-CCSD(T), FN-DMC, PBE0+MBD | High-accuracy reference data | Benchmark development, Method validation [15] |
| ML Force Fields | Grappa, E2GNN, Espaloma | Learn potential energy surfaces | Molecular dynamics with near-QM accuracy [13] [16] |
| Classical FF | AMBER, CHARMM, OpenFF | Biomolecular simulation | Protein folding, Drug binding [12] [17] |
| MD Engines | GROMACS, OpenMM | Molecular dynamics simulation | Biomolecular sampling, Property calculation [13] |
| Free Energy | FEP, ABFE, RBFE | Binding affinity calculation | Drug discovery, Lead optimization [17] |

Applications in Drug Discovery and Materials Science

Free Energy Calculations in Drug Discovery

Free Energy Perturbation (FEP) methods rely heavily on accurate force fields for predicting binding affinities in drug discovery [17]:

Recent FEP Advancements:

- Lambda Window Optimization: Automated scheduling algorithms determine optimal number of intermediate states for transformation pathways

- Advanced Hydration Methods: Techniques like Grand Canonical Non-equilibrium Candidate Monte-Carlo (GCNCMC) ensure consistent hydration environments

- Covalent Inhibitor Modeling: Ongoing development of parameters for protein-ligand covalent bond formation

- Active Learning FEP: Combines accurate FEP with rapid QSAR methods for efficient chemical space exploration

Absolute Binding Free Energy (ABFE):

- Advantages: Enables independent ligand calculation without reference molecules, suitable for diverse virtual screening hits

- Challenges: Requires ~10x more GPU hours than relative binding free energy (RBFE) and may exhibit systematic offsets due to unaccounted protein conformational changes
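
At the core of free energy perturbation is an exponential average of energy differences between two states. The sketch below implements the classic Zwanzig estimator, ΔF = -k_B T ln⟨exp(-ΔU / k_B T)⟩, as one simple example of an FEP estimator; production workflows commonly use more robust estimators across many lambda windows. The energy differences here are synthetic Gaussian numbers, chosen because the exact answer is then known analytically.

```python
import numpy as np

def zwanzig_free_energy(delta_u, kt):
    """Exponential-averaging estimate of the free energy difference.

    delta_u holds U_target - U_reference evaluated on samples drawn from
    the reference state. A log-sum-exp shift keeps the average stable.
    """
    x = -np.asarray(delta_u, dtype=float) / kt
    x_max = x.max()
    return -kt * (x_max + np.log(np.mean(np.exp(x - x_max))))

kt = 1.0                      # work in units of k_B * T
mean, sigma = 2.0, 1.0        # hypothetical distribution of energy differences
rng = np.random.default_rng(1)
delta_u = rng.normal(loc=mean, scale=sigma, size=200_000)

estimate = zwanzig_free_energy(delta_u, kt)
analytic = mean - 0.5 * sigma**2 / kt   # exact result for Gaussian delta_u
print(f"estimate = {estimate:.3f} kT, analytic = {analytic:.3f} kT")
```

The estimate converges well here because the distribution of energy differences is narrow. When the two states overlap poorly the average is dominated by rare samples, which is the reason transformations are split into intermediate lambda windows.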

Biomolecular and Materials Simulations

Protein Folding and Dynamics:

- Grappa demonstrates transferability from small molecules to macromolecular assemblies, recovering experimental folding structures starting from unfolded states [13]

- Achieves simulation of million-atom systems on single GPU with performance comparable to highly optimized E(3) equivariant neural networks

Heterogeneous Catalysis:

- Force fields enable modeling of catalyst structures, adsorption, diffusion, and reaction processes at scales inaccessible to QM methods [12]

- Reactive force fields (ReaxFF) extend applicability to bond-breaking/formation events in catalytic systems

The role of force fields in calculating atomic forces continues to evolve, with machine learning approaches narrowing the accuracy gap with quantum mechanics while maintaining computational efficiency. Modern ML force fields like Grappa and E2GNN demonstrate that it is possible to achieve near-quantum accuracy for molecular energies and forces while enabling simulations of large systems on practical hardware [13] [16]. The development of benchmark datasets like QUID provides rigorous validation standards that drive method improvements, particularly for complex interactions like those in protein-ligand systems [15].

Future advancements will likely focus on several key areas: improved description of non-covalent interactions and electronic polarization in classical force fields, increased data and computational efficiency for ML force fields, and better integration across different accuracy scales through multi-scale modeling. As these methods mature, force fields will play an increasingly important role in enabling reliable simulation of biological and materials systems at experimentally relevant scales, further bridging the gap between computational prediction and experimental observation in chemical research.

In computational chemistry and molecular modeling, force fields serve as the fundamental engine for calculating the forces that govern atomic and molecular behavior. They provide an empirical model that describes the potential energy of a system as a function of the nuclear coordinates, enabling the study of molecular structures, dynamics, and interactions at an atomistic level [12]. The acting forces on every particle are derived as the negative gradient of this potential energy with respect to the particle coordinates (Fᵢ = -∂E/∂rᵢ), forming the basis for molecular dynamics simulations and energy minimization techniques [5]. By offering a computationally efficient alternative to quantum mechanical methods, force fields make it feasible to simulate large biomolecular systems—such as proteins, nucleic acids, and lipid membranes—over microsecond timescales, which are essential for understanding biological processes and aiding drug discovery efforts [18] [12].

Core Architecture of Classical Force Fields

The Fundamental Energy Equation

Classical force fields compute the total potential energy of a system through an additive combination of bonded and non-bonded interaction terms. The general form for a Class I force field, which represents the most widely used type for biomolecular simulations, can be expressed as [18] [19]:

U(𝐫) = Σ U_bonded(𝐫) + Σ U_non-bonded(𝐫)

This comprehensive energy function expands to include specific components:

U_total = Σ_bonds K_b(r - r₀)² + Σ_angles K_θ(θ - θ₀)² + Σ_dihedrals K_φ[1 + cos(nφ - δ)] + Σ_nonbonded {4ε[(σ/r)¹² - (σ/r)⁶] + (q_iq_j)/(4πε₀r)}

The parameters for these functions (force constants, equilibrium values, atomic charges, etc.) are specifically assigned to different atom types, which classify atoms based on their element and chemical environment [5]. This modular approach provides the foundation for modeling diverse molecular systems with a manageable set of parameters.
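
Assigning parameters by atom type amounts to a table lookup. The sketch below assumes hypothetical atom type names and illustrative parameter values (not taken from any published force field) and evaluates the bond term in the K_b(r - r₀)² form used above.

```python
def bond_key(type_i, type_j):
    """Order-independent key, so that (A, B) and (B, A) share parameters."""
    return tuple(sorted((type_i, type_j)))

# Hypothetical parameter table: (K_b in kcal/mol/A^2, r0 in A).
BOND_PARAMETERS = {
    bond_key("C_sp3", "H_alk"): (300.0, 1.09),
    bond_key("C_sp3", "C_sp3"): (250.0, 1.53),
}

def bond_energy(type_i, type_j, r):
    """Bond energy K_b * (r - r0)**2 using parameters looked up by atom type."""
    k_b, r0 = BOND_PARAMETERS[bond_key(type_i, type_j)]
    return k_b * (r - r0)**2

# The same parameters are found regardless of the order of the two types.
print(bond_energy("C_sp3", "H_alk", 1.19))
print(bond_energy("H_alk", "C_sp3", 1.19))
```

Because every carbon-hydrogen bond of these two types shares one table entry, a few hundred entries can cover molecules with many thousands of bonds.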

Classification of Force Fields

Force fields are categorized based on the complexity of their functional forms and parameterization strategies [18]:

- Class I: Utilizes simple harmonic potentials for bonds and angles, with no cross-terms. This class includes widely adopted force fields such as AMBER, CHARMM, GROMOS, and OPLS, offering an optimal balance between computational efficiency and accuracy for biomolecular simulations.

- Class II: Incorporates anharmonic terms for bonds and angles along with cross-terms that describe couplings between internal coordinates. Examples include MMFF94 and UFF, providing improved accuracy for small molecules at increased computational cost.

- Class III: Explicitly includes polarization effects and other electronic phenomena. Force fields like AMOEBA and DRUDE fall into this category, offering higher physical fidelity but requiring significantly more computational resources.

Table 1: Comparison of Force Field Classes

| Class | Representative Examples | Key Features | Primary Applications |

|---|---|---|---|

| Class I | AMBER, CHARMM, GROMOS, OPLS | Harmonic bonds/angles; No cross-terms | Biomolecular simulations (proteins, nucleic acids) |

| Class II | MMFF94, UFF | Anharmonic terms; Cross-terms | Small molecule modeling; Drug-like molecules |

| Class III | AMOEBA, DRUDE | Explicit polarization; Charge transfer | Systems where electronic polarization is critical |

Bonded Interaction Functional Forms

Bonded interactions describe the energy associated with the covalent bond structure of molecules, connecting atoms that are directly linked through chemical bonds.

Bond Stretching

The energy required to stretch or compress a chemical bond from its equilibrium length is typically modeled using a harmonic potential approximating Hooke's law [18] [5]:

E_bond = K_b(r - r₀)²

where K_b represents the force constant governing the bond stiffness, r is the actual bond length, and r₀ is the equilibrium bond length. This quadratic function provides a reasonable approximation for bond vibrations near equilibrium geometry, with accuracy on the order of 0.001 Å for typical biological simulations at moderate temperatures [5].

Angle Bending

The energy associated with bending the angle between three consecutively bonded atoms is similarly described by a harmonic potential [18]:

E_angle = K_θ(θ - θ₀)²

Here, K_θ represents the angular force constant, θ is the actual bond angle, and θ₀ is the equilibrium bond angle. The force constants for angle deformation are typically about five times smaller than those for bond stretching, reflecting the lower energy required for angular distortion compared to bond length changes [18].
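As a minimal sketch of how the angle term is evaluated in practice, the code below computes the angle from three Cartesian positions and then applies E_angle = K_θ(θ - θ₀)². The helper names and the numerical values of K_θ and θ₀ are hypothetical and serve only to make the example concrete.

```python
import math


def bond_angle(a, b, c):
    """Angle a-b-c in radians, with b as the central atom."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    # Clamp to [-1, 1] so rounding cannot push acos out of its domain
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.acos(cos_theta)


def angle_energy(theta, k_theta, theta0):
    """Harmonic angle term E = K_theta (theta - theta0)^2, angles in radians."""
    return k_theta * (theta - theta0) ** 2


# Hypothetical parameters (energy per rad^2, equilibrium angle in radians)
k_theta = 50.0
theta0 = math.radians(104.5)

theta = bond_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(math.degrees(theta), angle_energy(theta, k_theta, theta0))
```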

Torsional Dihedral Angles

Torsional potentials describe the energy barrier for rotation around a central bond connecting four sequentially bonded atoms. This term is typically modeled using a periodic cosine function [18]:

E_dihedral = K_φ[1 + cos(nφ - δ)]

where K_φ represents the torsional force constant, n is the periodicity (multiplicity) of the function, φ is the torsional angle, and δ is the phase shift angle. Multiple periodic functions can be summed to create complex torsional profiles, such as reproducing the cis/trans and trans/gauche energy differences in molecules like ethylene glycol [18].
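The effect of summing several periodic terms can be seen in a short sketch. The two terms below (a three-fold and a one-fold cosine) use made-up force constants; they are not parameters for ethylene glycol or any other specific molecule, and the function name is an assumption for this example.

```python
import math


def torsion_energy(phi, terms):
    """Sum of K[1 + cos(n*phi - delta)] over (K, n, delta) tuples; angles in radians."""
    return sum(k * (1.0 + math.cos(n * phi - delta)) for k, n, delta in terms)


# Hypothetical terms: (K, periodicity n, phase delta)
terms = [(1.4, 3, 0.0), (0.5, 1, 0.0)]

# Scan the dihedral in 60-degree steps
profile = [(deg, torsion_energy(math.radians(deg), terms)) for deg in range(0, 360, 60)]
for deg, energy in profile:
    print(deg, round(energy, 3))
```

With these illustrative values the three-fold term alone would make the 60°, 180° and 300° minima equal; adding the one-fold term raises the two gauche-like minima above the trans-like minimum at 180°, which is the kind of energy difference the summed form is meant to reproduce.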

Improper Dihedrals

Improper dihedral terms are used primarily to enforce planarity in aromatic rings, carbonyl groups, and other conjugated systems [18]. They are typically modeled using a harmonic function:

E_improper = K_ω(ω - ω₀)²

where K_ω is the force constant, ω is the improper dihedral angle, and ω₀ is the equilibrium value (typically 0° for planar arrangements). This term is defined for a central atom connected to three peripheral atoms, where the dihedral angle represents the angle between the planes formed by the central atom with two peripheral atoms and the central atom with the third peripheral atom [18].

Table 2: Bonded Interaction Parameters in Classical Force Fields

| Interaction Type | Functional Form | Key Parameters | Physical Interpretation |

|---|---|---|---|

| Bond Stretching | K_b(r - r₀)² | K_b (force constant), r₀ (equilibrium length) | Resistance to bond length deformation |

| Angle Bending | K_θ(θ - θ₀)² | K_θ (force constant), θ₀ (equilibrium angle) | Resistance to bond angle deformation |

| Proper Dihedral | K_φ[1 + cos(nφ - δ)] | K_φ (barrier height), n (periodicity), δ (phase) | Barrier to internal rotation |

| Improper Dihedral | K_ω(ω - ω₀)² | K_ω (force constant), ω₀ (equilibrium angle) | Enforcement of molecular planarity |

Non-Bonded Interaction Functional Forms

Non-bonded interactions occur between atoms that are not directly connected by covalent bonds, representing both attractive and repulsive forces.

Van der Waals Interactions

Van der Waals interactions describe the weak, short-range attractive and repulsive forces between atoms. The Lennard-Jones potential is the most commonly used function to model these interactions [18] [5]:

E_LJ = 4ε[(σ/r)¹² - (σ/r)⁶]

The r⁻¹² term represents the short-range Pauli repulsion due to overlapping electron orbitals, while the r⁻⁶ term describes the longer-range attractive London dispersion forces. The parameter σ represents the finite distance at which the inter-particle potential is zero, while ε represents the depth of the potential well [18].
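The roles of σ and ε follow directly from the formula: the energy is zero at r = σ, and the minimum of depth ε lies at r = 2^(1/6)σ, where the force vanishes. A minimal sketch, using roughly argon-like values purely for illustration (the function names and numbers are assumptions for this example):

```python
def lennard_jones(r, epsilon, sigma):
    """12-6 Lennard-Jones energy for a pair separated by r."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lennard_jones_force(r, epsilon, sigma):
    """Radial force F = -dE/dr; positive values are repulsive."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


epsilon = 0.238  # well depth, illustrative (kcal/mol)
sigma = 3.4      # zero-crossing distance, illustrative (angstrom)
r_min = 2.0 ** (1.0 / 6.0) * sigma

print(lennard_jones(sigma, epsilon, sigma))        # zero crossing
print(lennard_jones(r_min, epsilon, sigma))        # bottom of the well, close to -epsilon
print(lennard_jones_force(r_min, epsilon, sigma))  # force vanishes at the minimum
```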

An alternative to the Lennard-Jones potential is the Buckingham potential, which replaces the repulsive r⁻¹² term with an exponential function [18]:

E_Buckingham = A·exp(-Br) - C/r⁶

This provides a more physically realistic description of electron density at short distances but carries a risk of the "Buckingham catastrophe" where the potential approaches -∞ as r approaches 0 [18].
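The collapse at short range is easy to reproduce numerically, because the exponential repulsion stays finite at r = 0 while -C/r⁶ diverges. The parameters in the sketch below are arbitrary numbers in arbitrary units, chosen only so that the repulsive wall and the collapse are both visible.

```python
import math


def buckingham(r, a, b, c):
    """Buckingham (exp-6) energy: A*exp(-B*r) - C/r^6."""
    return a * math.exp(-b * r) - c / r ** 6


# Hypothetical parameters in arbitrary units
A, B, C = 1000.0, 3.0, 100.0

for r in (3.0, 2.0, 1.0, 0.5):
    print(r, buckingham(r, A, B, C))
```

The energy is repulsive near r = 2, but it turns over and becomes strongly negative at smaller separations, so two particles that cross the barrier would fuse. Simulation codes usually guard against this, for example with a short-range cutoff or an added repulsive term.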

Electrostatic Interactions

Electrostatic interactions between charged atoms are modeled using Coulomb's law [18] [5]:

E_elec = (q_iq_j)/(4πε₀ε_r r)

where q_i and q_j are the partial atomic charges, ε₀ is the vacuum permittivity, ε_r is the relative dielectric constant, and r is the distance between atoms. The assignment of atomic partial charges is critical for accurately representing the molecular electrostatic potential and is typically derived from quantum mechanical calculations or heuristic approaches [5].
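In practice the prefactor 1/(4πε₀) is folded into a single conversion constant for the chosen unit system. The sketch below assumes charges in units of the elementary charge, distances in ångström and energies in kcal/mol, for which the constant is approximately 332.06; individual MD packages use slightly different rounded values. The function name and example charges are illustrative.

```python
# 1/(4*pi*eps0) expressed in kcal*angstrom/(mol*e^2); packages differ in the last digits
COULOMB_CONSTANT = 332.0637


def coulomb_energy(q_i, q_j, r, eps_r=1.0):
    """Coulomb energy in kcal/mol for charges in e and distance r in angstrom."""
    return COULOMB_CONSTANT * q_i * q_j / (eps_r * r)


# Illustrative partial charges on a donor hydrogen and an acceptor oxygen
e_vacuum = coulomb_energy(0.417, -0.834, 1.8)
e_screened = coulomb_energy(0.417, -0.834, 1.8, eps_r=80.0)
print(e_vacuum, e_screened)
```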

Combining Rules

To avoid parameter explosion, force fields use combining rules to determine interaction parameters between different atom types. Common approaches include [18]:

- Lorentz-Berthelot: σ_ij = (σ_ii + σ_jj)/2, ε_ij = √(ε_ii · ε_jj) (used in CHARMM, AMBER)

- Geometric Mean: σ_ij = √(σ_ii · σ_jj), ε_ij = √(ε_ii · ε_jj) (used in GROMOS, OPLS)

These rules provide a systematic method for generating pairwise parameters from individual atom type parameters, though the Lorentz-Berthelot rules are known to sometimes overestimate the well depth for mixed interactions [18].
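The two combining rules listed above differ only in how σ is mixed, as the following sketch shows. The function names and the per-type parameters are hypothetical.

```python
import math


def lorentz_berthelot(sigma_i, eps_i, sigma_j, eps_j):
    """Arithmetic mean for sigma, geometric mean for epsilon."""
    return 0.5 * (sigma_i + sigma_j), math.sqrt(eps_i * eps_j)


def geometric(sigma_i, eps_i, sigma_j, eps_j):
    """Geometric mean for both sigma and epsilon."""
    return math.sqrt(sigma_i * sigma_j), math.sqrt(eps_i * eps_j)


# Hypothetical per-type parameters: (sigma, epsilon)
type_a = (3.0, 0.1)
type_b = (4.0, 0.4)

sigma_lb, eps_lb = lorentz_berthelot(*type_a, *type_b)
sigma_geo, eps_geo = geometric(*type_a, *type_b)
print(sigma_lb, eps_lb)
print(sigma_geo, eps_geo)
```

Because the geometric mean never exceeds the arithmetic mean, the geometric rule gives a slightly smaller mixed σ whenever the two atom types differ in size, which is one reason parameters are not freely interchangeable between force field families.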

Table 3: Non-Bonded Interaction Parameters in Classical Force Fields

| Interaction Type | Functional Form | Key Parameters | Range |

|---|---|---|---|

| Lennard-Jones | 4ε[(σ/r)¹² - (σ/r)⁶] | ε (well depth), σ (zero-crossing distance) | Short-range (~r⁻⁶) |

| Buckingham | A·exp(-Br) - C/r⁶ | A, B (repulsion), C (attraction) | Short-range |

| Electrostatic | (q_iq_j)/(4πε₀r) | q_i, q_j (atomic charges) | Long-range (~r⁻¹) |

Parameterization Methodologies

Traditional Parameterization Approaches

The development of accurate force field parameters traditionally requires extensive quantum mechanical calculations and experimental data. Parameterization strategies typically involve [5]:

- Quantum Mechanical Calculations: High-level QM methods (DFT, MP2, or CCSD(T)) provide target data for bond lengths, angles, vibrational frequencies, and conformational energies.

- Experimental Data: Thermodynamic properties (enthalpies of vaporization, free energies of solvation), liquid densities, and spectroscopic measurements serve as validation targets.

- Iterative Optimization: Parameters are adjusted systematically to minimize differences between force field predictions and reference QM/experimental data.

This process represents a challenging mixed discrete-continuous optimization problem that has historically required significant expert knowledge and manual refinement [19].
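The iterative adjustment step can be illustrated with a deliberately tiny problem: fitting a single harmonic force constant K_b to a bond-stretch scan by gradient descent. Everything in the sketch is an assumption made for illustration. The "reference" energies come from a Morse curve with made-up parameters standing in for QM data, the equilibrium length is held fixed, and the learning rate is hand-picked for this data set. Real parameterization optimizes many coupled parameters against many target properties.

```python
import math

R0 = 1.09  # equilibrium bond length, held fixed (illustrative)


def reference_energy(r, d_e=100.0, a=2.0):
    """Stand-in for QM scan data: a Morse curve with made-up parameters."""
    return d_e * (1.0 - math.exp(-a * (r - R0))) ** 2


scan = [R0 + dx for dx in (-0.10, -0.05, 0.0, 0.05, 0.10)]
targets = [reference_energy(r) for r in scan]


def loss_and_grad(k_b):
    """Sum of squared energy errors and its derivative with respect to K_b."""
    loss, grad = 0.0, 0.0
    for r, e_ref in zip(scan, targets):
        x2 = (r - R0) ** 2
        err = k_b * x2 - e_ref
        loss += err * err
        grad += 2.0 * err * x2
    return loss, grad


k_b = 100.0             # deliberately poor initial guess
learning_rate = 2000.0  # tuned by hand for this toy problem
for step in range(200):
    loss, grad = loss_and_grad(k_b)
    k_b -= learning_rate * grad

# Closed-form least-squares answer for this one-parameter problem, for comparison
k_closed = sum((r - R0) ** 2 * e for r, e in zip(scan, targets)) / sum(
    (r - R0) ** 4 for r in scan
)
print(k_b, k_closed)
```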

Emerging Machine Learning Approaches

Recent advances have introduced machine learning methods to automate and improve force field parameterization. The Espaloma framework, for instance, utilizes graph neural networks to assign parameters based on chemical environment [19]:

Diagram 1: ML Force Field Parameterization

This approach can be trained on massive quantum chemical datasets (e.g., over 1.1 million energy and force calculations) to produce highly accurate parameters while maintaining the computational efficiency of Class I force field functional forms [19].

Experimental Protocols and Validation

Validation Methodologies

Force field validation involves rigorous comparison with both quantum mechanical calculations and experimental observations:

Quantum Mechanical Validation:

- Conformational energy comparisons across torsion scans

- Vibrational frequency matching for bonds and angles

- Interaction energy calculations for molecular complexes

- Geometry optimization comparisons for equilibrium structures

Experimental Validation:

- Liquid densities and enthalpies of vaporization

- Free energies of solvation in various solvents

- NMR J-coupling constants and chemical shifts

- X-ray crystallographic data for bond lengths and angles

- Thermodynamic properties from calorimetry

Biomolecular Simulation Protocols

For drug discovery applications, force fields undergo additional validation through specific simulation protocols [19]:

- Protein-Ligand Binding Free Energy Calculations: Alchemical free energy perturbations to predict binding affinities

- Protein Folding Stability: Simulations to assess native state stability and folding pathways

- Membrane Permeability Predictions: Simulations of small molecule transport across lipid bilayers

- Solvation Free Energy Calculations: Determination of transfer free energies between different environments

These protocols ensure that force fields can reliably predict the key physicochemical properties relevant to pharmaceutical development.

Research Reagent Solutions

Table 4: Essential Software Tools for Force Field Development and Application

| Tool Name | Type | Primary Function | Application Context |

|---|---|---|---|

| OpenMM | Software Library | High-performance MD simulation | GPU-accelerated molecular dynamics |

| AMBER | Software Suite | Biomolecular simulation | MD simulation with AMBER force fields |

| CHARMM | Software Suite | Biomolecular simulation | MD simulation with CHARMM force fields |

| GROMACS | Software Suite | Biomolecular MD simulation | High-performance MD with various force fields |

| Open Force Field | Initiative | Force field development | Modern, open-source small molecule force fields |

| Wannier90 | Software Tool | Electronic structure analysis | Wannier function generation for parameterization |

| Espaloma | ML Framework | Force field parameterization | Graph neural network-based parameter assignment |

| BespokeFit | Parameter Tool | Custom parameter generation | Molecule-specific parameter optimization |

Classical force fields provide an essential framework for calculating atomic forces in molecular systems through their functional forms for bonded and non-bonded interactions. The harmonic approximations for bonds and angles, combined with periodic torsions and Lennard-Jones/Coulomb potentials for non-bonded interactions, create a computationally efficient yet physically meaningful representation of molecular potential energy surfaces. While traditional parameterization approaches have served the field well for decades, emerging machine learning methodologies promise to address long-standing challenges in chemical transferability and systematic accuracy. As force fields continue to evolve, their role in enabling accurate molecular simulations will remain indispensable for advancing research in drug discovery, materials science, and structural biology.

Within computational chemistry and materials science, force fields provide the fundamental mathematical framework for calculating the potential energy of a system and the resulting forces acting on every atom. These calculations enable molecular dynamics (MD) simulations, which solve Newton's equations of motion to predict the temporal evolution of atomic systems. The core role of any force field is to define how the potential energy (E_total) depends on atomic coordinates, typically decomposing it into bonded interactions (among covalently connected atoms) and non-bonded interactions (between atoms that are not directly bonded) [5]. The general form for an additive force field is:

E_total = E_bonded + E_nonbonded

where E_bonded = E_bond + E_angle + E_dihedral and E_nonbonded = E_electrostatic + E_vdW [5].
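To show how force field energies and forces feed into Newton's equations of motion, the sketch below integrates a single particle in a harmonic well with the velocity Verlet scheme, a common MD integrator. The potential, mass, time step and function names are all illustrative assumptions; a real simulation would evaluate the full E_total and its gradient for every atom.

```python
def force(x, k=0.5, x0=0.0):
    """Force from the toy potential E = k (x - x0)^2."""
    return -2.0 * k * (x - x0)


def total_energy(x, v, k=0.5, x0=0.0, m=1.0):
    """Potential plus kinetic energy of the single particle."""
    return k * (x - x0) ** 2 + 0.5 * m * v * v


def velocity_verlet(x, v, dt, n_steps, m=1.0):
    """Advance position and velocity by n_steps of size dt."""
    f = force(x)
    for _ in range(n_steps):
        v += 0.5 * dt * f / m   # first half-step velocity update
        x += dt * v             # full position update
        f = force(x)            # force at the new position
        v += 0.5 * dt * f / m   # second half-step velocity update
    return x, v


e_start = total_energy(1.0, 0.0)
x_end, v_end = velocity_verlet(1.0, 0.0, dt=0.01, n_steps=1000)
e_end = total_energy(x_end, v_end)
print(e_start, e_end)  # total energy should stay nearly constant
```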

Traditional, non-reactive force fields utilize simple harmonic potentials for bonds (E_bond = k_bond(r - r₀)²) and angles, with fixed atomic connectivity [5] [20]. This formulation is computationally efficient and accurate for simulating molecular configurations near equilibrium, but it possesses a critical limitation: the predefined bonding topology prevents the simulation of chemical reactions, as bonds cannot break or form. This restriction excludes computational investigations of catalytic processes, combustion, corrosion, and many other technologically crucial phenomena involving reactive chemistry. Reactive force fields were developed specifically to bridge this gap between quantum mechanical (QM) methods, which can describe reactions but are computationally prohibitive for large systems and long timescales, and efficient but non-reactive classical molecular dynamics [21] [22].

The Fundamental Principles of Reactive Force Fields

Reactive force fields overcome the limitations of fixed bonding topology by replacing the concept of permanent bonds with a bond-order formalism. In this approach, the bond order between two atoms is empirically calculated based on their interatomic distance. This bond order is continuous and differentiable, allowing for smooth transitions as bonds break and form during a simulation [21] [23].

The most widely used and developed reactive force field is ReaxFF (Reactive Force Field), originally developed by van Duin, Goddard, and co-workers [21] [23]. In ReaxFF, the total system energy is described by a complex sum of terms:

E_system = E_bond + E_over + E_angle + E_tors + E_vdWaals + E_Coulomb + E_Specific [21]

The critical distinction from traditional force fields lies in the E_bond term and the introduction of E_over, an over-coordination penalty. The bond order between atoms i and j (BO_ij) is calculated directly from the interatomic distance (r_ij) using an empirical formula that accounts for sigma, pi, and double pi bonds:

BO_ij = exp[p_bo1·(r_ij / r₀^σ)^p_bo2] + exp[p_bo3·(r_ij / r₀^π)^p_bo4] + exp[p_bo5·(r_ij / r₀^ππ)^p_bo6] [21]

This bond order is continuously updated at every simulation step. All other bond-order-dependent energy terms, such as angle (E_angle) and torsion (E_tors) strains, are calculated from this instantaneous bond order. This dynamic description of connectivity allows ReaxFF to naturally model bond dissociation and formation, as well as the associated changes in molecular geometry and hybridization. Non-bonded interactions (van der Waals and Coulomb) are calculated between all atom pairs, but are shielded to prevent excessive repulsion at short distances [21].
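The distance dependence of the bond-order expression can be sketched in a few lines. All parameter values below are hypothetical numbers chosen to give a plausible-looking curve; they are not taken from any published ReaxFF parameter set, and the sketch covers only the uncorrected bond order written above, without the corrections and energy terms a full implementation applies afterwards.

```python
import math


def bond_order(r_ij, r0_sigma, r0_pi, r0_pipi, p_bo):
    """Sum of sigma, pi and double-pi contributions to the bond order."""
    p1, p2, p3, p4, p5, p6 = p_bo
    bo_sigma = math.exp(p1 * (r_ij / r0_sigma) ** p2)
    bo_pi = math.exp(p3 * (r_ij / r0_pi) ** p4)
    bo_pipi = math.exp(p5 * (r_ij / r0_pipi) ** p6)
    return bo_sigma + bo_pi + bo_pipi


# Hypothetical parameters (p_bo1, p_bo3, p_bo5 negative so each term decays with distance)
P_BO = (-0.1, 6.0, -0.2, 8.0, -0.3, 10.0)
R0_SIGMA, R0_PI, R0_PIPI = 1.4, 1.2, 1.1  # angstrom, illustrative

for r in (1.0, 1.5, 3.0, 5.0):
    print(r, bond_order(r, R0_SIGMA, R0_PI, R0_PIPI, P_BO))
```

The value falls smoothly from close to 3 at very short distances toward zero at large separations, which is what allows a bond to weaken and vanish continuously rather than being switched off.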

Table 1: Comparison of Traditional and Reactive Force Fields

| Feature | Traditional Force Field (e.g., CHARMM, AMBER) | Reactive Force Field (ReaxFF) |

|---|---|---|

| Bond Representation | Fixed, harmonic potential | Dynamic, based on bond order |

| Bond Breaking/Formation | Not possible | Core capability |

| Computational Cost | Low | High (but lower than QM) |

| Primary Applications | Protein folding, material structure | Combustion, catalysis, corrosion, fracture |

| Electrostatics | Fixed partial charges | Polarizable charge equilibration (QEq) |

Methodologies and Parameterization of Reactive Force Fields

The ReaxFF Parameterization Workflow

The accuracy of a ReaxFF simulation is entirely dependent on the quality of its parameter set. Developing these parameters is a complex, iterative process designed to ensure the force field reproduces data from high-fidelity quantum mechanical (QM) calculations and/or experimental results [21] [23]. The following diagram illustrates the key stages of the parameterization workflow, which involves continuous refinement to minimize the difference between ReaxFF predictions and the training data.

The training set must be comprehensive, covering the relevant chemical space, including bond and angle deformations, reaction energies and barriers, equation of state (EOS) data for crystals, and surface energies [21] [23]. Recent advances have introduced frameworks like JAX-ReaxFF, which utilizes gradient descent algorithms to systematically optimize parameters, as demonstrated in the development of a Mg/O/H force field for studying magnesium nanoparticles in water [24].

Key Experimental and Simulation Protocols

To illustrate a specific application, the following is a detailed methodology for a ReaxFF MD study of hydrogen production from magnesium nanoparticles and water, as presented in recent research [24].

Objective: To elucidate the reaction mechanisms and structural evolution during the interaction of Mg nanoparticles with an H₂O atmosphere at high temperatures.

Simulation Setup:

- System Construction: A magnesium nanoparticle is placed in a simulation box filled with water vapor. The density of H₂O is a controlled variable (e.g., 0.07 g/cm³).

- Force Field: The newly developed Mg/O/H ReaxFF parameter set is used, which was trained against a QM-derived training set including interactions between Mg/O/H and the EOS of MgH₂ and Mg(OH)₂ crystals.

- Simulation Parameters:

  - Software: ReaxFF is implemented in codes like LAMMPS or the specialized PuReMD.

  - Ensemble: NVT (constant Number of atoms, Volume, and Temperature) or NVE.

  - Temperatures: Simulations are run at high temperatures (e.g., 1500 K, 2000 K) to accelerate reactive events within feasible simulation timescales.

  - Duration: Simulations are typically run for nanoseconds.

Data Analysis:

- Structural Evolution: Track the diffusion of H and O atoms into the Mg nanoparticle and the outward diffusion of Mg atoms.

- Chemical Bonding: Perform bond order analysis to identify the formation of key species like Mg-H, Mg-OH, and Mg-O bonds over time.

- Reaction Kinetics: Quantify the rates of H₂O consumption and H₂ formation, noting the temporal correlation between these two processes.

Critical Reagents and Computational Tools for ReaxFF Research

Table 2: Essential "Research Reagent" Solutions for Reactive Force Field Simulations

| Item / Software | Type | Primary Function | Key Features |

|---|---|---|---|

| ReaxFF Parameter Set | Data/Model | Defines interatomic interactions for a specific chemical system. | Trained against QM/experimental data; system-specific (e.g., Mg/O/H, C/H/O). |

| LAMMPS | Software | A highly versatile and widely used open-source MD simulator. | Integrated ReaxFF module; optimized for high-performance parallel computing. |

| PuReMD | Software | Purdue Reactive Molecular Dynamics code. | Specialized and highly optimized for ReaxFF simulations. |

| ADF / Amsterdam Modeling Suite | Software | Commercial software suite from SCM. | User-friendly GUI, advanced (re)parametrization tools, integrated workflows. |

| JAX-ReaxFF | Framework | A framework for force field parameterization. | Uses gradient descent algorithms for systematic parameter optimization [24]. |

| QM Reference Data (e.g., from DFT) | Data | The training set for force field development. | Includes reaction energies, barriers, EOS; used for parameterization and validation. |

Applications and Findings: Insights from Reactive Simulations

ReaxFF has been successfully applied to a vast range of complex chemical phenomena. The following table summarizes key quantitative findings from a study on magnesium nanoparticles reacting with water, highlighting the atomic-level mechanisms revealed by the simulation [24].

Table 3: Key Quantitative Findings from a ReaxFF MD Study of Mg + H₂O [24]

| Process / Observation | Condition | Finding | Atomic-Level Mechanism |

|---|---|---|---|

| H₂O Dissociation | 1500 K | H₂O dissociates on Mg nanoparticle surface. | Forms Mg-H, Mg-OH, and Mg-O bonds. |

| Structural Evolution | Varies with temperature and H₂O density. | Inward H diffusion > Inward O diffusion. | Leads to a Mg hydride core and a Mg oxide shell. |

| H₂ Production Kinetics | -- | H₂ formation lags behind H₂O consumption. | H₂ is released from the magnesium hydride as O diffuses inward and Mg diffuses outward. |

| Nanoparticle Dynamics | High Temperature | Surface Mg atoms become unstable. | Leads to migration and restructuring of the nanoparticle. |

Beyond this specific system, ReaxFF has provided fundamental insights into:

- Combustion Chemistry: Unraveling complex reaction pathways in hydrocarbon oxidation and pyrolysis [22].

- Materials Failure: Simulating crack propagation in brittle materials like silicon at the atomic scale [21] [23].

- Catalysis: Modeling the catalytic formation of carbon nanotubes and surface reactions on transition metal catalysts [21] [23].

- Electrochemical Systems: Investigating processes in batteries, such as lithium-ion reduction at electrode surfaces, with extensions like eReaxFF allowing for explicit treatment of electrons [25].

Reactive force fields, principally ReaxFF, represent a pivotal advancement in the toolkit for calculating atomic forces. By moving beyond fixed bonding topologies to a dynamic bond-order formalism, they enable large-scale molecular dynamics simulations to directly probe chemical reactions and complex reactive processes. This capability bridges a critical gap between the high accuracy but limited scale of quantum mechanical methods and the vast scale but chemical rigidity of traditional classical force fields. As parameterization methods become more robust and computational power grows, reactive force fields are poised to play an increasingly vital role in accelerating the discovery and design of new materials, catalysts, and drugs by providing an atomic-level movie of chemistry in action.

Force fields form the foundational framework for calculating atomic forces and energies in molecular systems, enabling the computational study of biological processes, material properties, and chemical reactions. Traditional molecular mechanics (MM) force fields, while computationally efficient, face significant challenges in achieving quantum-level accuracy due to their limited functional forms and dependence on empirical parameterization. With the rapid expansion of synthetically accessible chemical space in drug discovery, these limitations have become increasingly problematic, creating an urgent need for more accurate and transferable models [26]. The emergence of machine learning force fields (MLFFs) represents a paradigm shift in computational molecular science, offering a pathway to bridge this critical gap between computational efficiency and quantum accuracy.

MLFFs leverage advanced machine learning architectures to learn the complex relationship between atomic configurations and potential energies directly from quantum mechanical data. These models have demonstrated remarkable capability to serve as efficient surrogates for expensive quantum mechanical calculations, achieving density functional theory (DFT) accuracy at a fraction of the computational cost [27]. This technical guide examines the core architectures, methodologies, and applications of modern MLFFs, providing researchers with a comprehensive framework for understanding and implementing these transformative technologies in computational drug discovery and materials science.

Core Architectures of Machine Learning Force Fields

Hybrid Physics-ML Approaches: Residual Learning Frameworks

The ResFF (Residual Learning Force Field) architecture introduces a sophisticated hybrid approach that integrates physics-based models with machine learning corrections. This framework employs deep residual learning to combine physics-based learnable molecular mechanics covalent terms with residual corrections from a lightweight equivariant neural network. Through a three-stage joint optimization process, these two components are trained in a complementary manner to achieve optimal performance [28]. This design allows the model to leverage the physical interpretability and computational efficiency of traditional force fields while capturing complex quantum mechanical effects through the neural network component.

Benchmark results demonstrate that ResFF significantly outperforms both classical and neural network force fields across multiple metrics, achieving a mean absolute error (MAE) of 1.16 kcal/mol on the Gen2-Opt dataset and 0.90 kcal/mol on DES370K [28]. The architecture particularly excels in modeling torsional profiles (MAE: 0.45/0.48 kcal/mol on TorsionNet-500 and Torsion Scan) and intermolecular interactions (MAE: 0.32 kcal/mol on S66×8), enabling precise reproduction of energy minima and stable molecular dynamics simulations of biological systems [28]. This hybrid approach effectively merges physical constraints with neural expressiveness, offering a robust tool for accurate and efficient molecular simulation.
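The general idea of residual learning, a physics-based baseline plus a learned correction, can be illustrated with a toy example. The sketch below is not the ResFF architecture: the equivariant neural network is replaced by a single fitted cubic term, the "reference" is a Morse curve with made-up parameters, and the error is evaluated on the fitting points only. It is meant solely to show how a correction trained on the residual reduces the error of the baseline.

```python
import math

R0, D_E, A = 1.0, 100.0, 2.0  # made-up Morse parameters for the reference curve
K_B = D_E * A * A             # harmonic constant matching the Morse curvature at R0


def reference(r):
    """Stand-in for quantum mechanical energies."""
    return D_E * (1.0 - math.exp(-A * (r - R0))) ** 2


def baseline(r):
    """Physics-based part: harmonic bond term."""
    return K_B * (r - R0) ** 2


# Fit the residual (reference minus baseline) with one cubic feature by least squares
train = [R0 + 0.02 * i for i in range(-10, 16)]
features = [(r - R0) ** 3 for r in train]
residuals = [reference(r) - baseline(r) for r in train]
c3 = sum(f * y for f, y in zip(features, residuals)) / sum(f * f for f in features)


def hybrid(r):
    """Baseline plus learned residual correction."""
    return baseline(r) + c3 * (r - R0) ** 3


def rmse(model):
    """Root-mean-square error against the reference on the fitting points."""
    return math.sqrt(sum((model(r) - reference(r)) ** 2 for r in train) / len(train))


print(rmse(baseline), rmse(hybrid))
```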

Graph Neural Networks for Chemical Space Coverage

ByteFF exemplifies the data-driven parameterization approach using an edge-augmented, symmetry-preserving molecular graph neural network (GNN). This architecture simultaneously predicts all bonded and non-bonded MM force field parameters for drug-like molecules across expansive chemical spaces [26]. The model was trained on an extensive and highly diverse molecular dataset comprising 2.4 million optimized molecular fragment geometries with analytical Hessian matrices and 3.2 million torsion profiles generated at the B3LYP-D3(BJ)/DZVP level of theory [26].

The graph-based representation naturally captures molecular symmetries and invariances, while the edge-augmentation enables effective message passing between connected atoms. This approach demonstrates state-of-the-art performance in predicting relaxed geometries, torsional energy profiles, and conformational energies and forces [26]. The exceptional accuracy and broad chemical space coverage make this architecture particularly valuable for multiple stages of computational drug discovery, where handling diverse molecular structures is essential.

Equivariant Architectures with Explicit Electrostatics

Schrödinger's MPNICE (Message Passing Network with Iterative Charge Equilibration) architecture incorporates explicit electrostatics through charge equilibration approaches, enabling accurate representations of multiple charge states, ionic systems, and electronic response properties [27]. This equivariant graph neural network includes long-range interactions while maintaining computational speeds an order of magnitude faster than comparable models [27].

The MPNICE framework employs a Euclidean transformer architecture that respects physical symmetries and incorporates atomic partial charges explicitly. This design has enabled the development of pre-trained models covering the entire periodic table (89 elements), prioritizing efficient throughput for atomistic simulations at previously inaccessible length and time scales without sacrificing accuracy [27]. The architecture's capability to handle diverse material systems, including general organic, inorganic, and hybrid (organic and inorganic) models, makes it suitable for addressing industry-relevant needs across drug discovery and materials science.

Quantitative Performance Benchmarking

Accuracy Metrics Across Molecular Systems

Table 1: Performance comparison of MLFF architectures across key benchmarks. MAE values are in kcal/mol.

| Architecture | Gen2-Opt (MAE) | DES370K (MAE) | TorsionNet-500 (MAE) | S66×8 (MAE) | Chemical Coverage |

|---|---|---|---|---|---|

| ResFF | 1.16 | 0.90 | 0.45 | 0.32 | Drug-like molecules |

| ByteFF | - | - | State-of-the-art | - | Expansive drug-like space |

| MPNICE | - | - | - | - | 89 elements (periodic table) |

| NPLS | - | - | - | - | Alkanes & polyolefins |

Specialized Capabilities and Applications

Table 2: Specialized performance characteristics and application domains of MLFF architectures.

| Architecture | Key Innovation | Sampling Efficiency | Application Strengths |

|---|---|---|---|

| ResFF | Residual learning physics-ML integration | Three-stage joint optimization | Torsional profiles, intermolecular interactions, biological MD |

| ByteFF | Data-driven GNN parameterization | 2.4M geometries + 3.2M torsion profiles | Drug-like molecules, conformational analysis |

| MPNICE | Explicit electrostatics with charge equilibration | Order of magnitude faster than comparable models | Materials science, ionic systems, electronic properties |

| NPLS | Dual-space active learning for liquids | Efficient configurational & chemical space sampling | Organic liquids, thermodynamic properties, phase transitions |

The benchmarking data reveals distinct strengths across different MLFF architectures. ResFF demonstrates exceptional accuracy across standard benchmarks, particularly for torsional profiles and intermolecular interactions critical for drug discovery applications [28]. ByteFF provides comprehensive coverage across expansive chemical spaces, making it particularly valuable for early-stage drug discovery where chemical diversity is essential [26]. MPNICE stands out for its breadth of element coverage and computational efficiency, enabling applications across diverse material systems [27]. The systematic benchmarking illustrates how each architecture addresses specific limitations of traditional force fields while maintaining computational tractability for practical applications.

Experimental Protocols and Methodologies

Data Generation and Training Workflows

The development of accurate MLFFs requires carefully designed data generation and training protocols. For ByteFF, researchers created an expansive dataset of 2.4 million optimized molecular fragment geometries with analytical Hessian matrices and 3.2 million torsion profiles, all computed at the B3LYP-D3(BJ)/DZVP level of theory [26]. This comprehensive data generation strategy ensures broad coverage of chemical space and conformational diversity, providing the necessary foundation for training transferable models.

The NPLS framework employs a novel dual-space active learning strategy that enables efficient sampling across both configurational and chemical space. This approach couples a query-by-committee method with an explicitly constructed target chemical space generated using computationally inexpensive classical methods [29]. The active learning workflow iteratively identifies regions of chemical and configurational space where model uncertainty is high, targeting additional quantum mechanical calculations to refine the model in these underrepresented areas. This data-efficient strategy is particularly valuable for complex systems like organic liquids, where comprehensive sampling would be prohibitively expensive.
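The query-by-committee step can be sketched generically: several models predict the same quantity, and the candidates on which they disagree most are sent for new reference calculations. The code below is a minimal illustration of that selection rule only. It is not the NPLS implementation, it does not include the chemical-space half of the dual-space strategy, and the three committee members are hypothetical one-dimensional toy models.

```python
import statistics


def committee_disagreement(models, x):
    """Population standard deviation of the committee predictions at x."""
    return statistics.pstdev([model(x) for model in models])


def select_queries(models, candidates, n_queries):
    """Return the n_queries candidates with the largest committee disagreement."""
    ranked = sorted(
        candidates,
        key=lambda x: committee_disagreement(models, x),
        reverse=True,
    )
    return ranked[:n_queries]


# Hypothetical committee: the members agree near x = 1.0 and diverge away from it
committee = [
    lambda x: 400.0 * (x - 1.0) ** 2,
    lambda x: 400.0 * (x - 1.0) ** 2 - 300.0 * (x - 1.0) ** 3,
    lambda x: 400.0 * (x - 1.0) ** 2 - 500.0 * (x - 1.0) ** 3,
]

candidates = [0.9, 1.0, 1.1, 1.2, 1.3]
picked = select_queries(committee, candidates, n_queries=2)
print(picked)  # the two configurations farthest from the well-sampled region
```

In an active learning loop, the selected configurations would be labeled with new quantum mechanical calculations, added to the training set, and the committee retrained before the next round.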

Training Strategies and Optimization Techniques

ResFF implements a sophisticated three-stage joint optimization process that trains the physics-based molecular mechanics components and neural network corrections in a complementary manner [28]. This approach ensures that each component specializes in capturing the interactions for which it is best suited, with the MM terms handling well-understood covalent interactions and the neural network providing corrections for more complex electronic effects. The staged optimization prevents interference between components and promotes stable convergence.

For equivariant graph neural networks, special considerations are necessary for free energy calculations. Research indicates that the accuracy of these models in reproducing free energy surfaces depends critically on the distribution of collective variables in the training data [30]. Models trained on configurations from classical simulations struggle to extrapolate to high-free-energy regions, while those trained on ab initio data show significantly improved extrapolation accuracy [30]. This highlights the importance of incorporating sufficient sampling of transition states and other rare configurations during training, particularly for applications requiring accurate thermodynamic properties.

The Scientist's Toolkit: Essential Research Reagents

Table 3: Essential computational tools and resources for MLFF development and application.

| Resource Category | Specific Tools/Approaches | Function & Application |

|---|---|---|

| Reference Data | B3LYP-D3(BJ)/DZVP calculations, TorsionNet-500, DES370K, S66×8 | Provide high-quality quantum mechanical reference data for training and benchmarking [26] |

| Software Architectures | MPNICE, Euclidean Transformers, Equivariant GNNs | Core ML algorithms for constructing accurate and transferable force fields [29] [27] |

| Sampling Methods | Dual-space Active Learning, Query-by-Committee, Path-Integral MD | Enable efficient configurational and chemical space exploration [29] |

| Validation Benchmarks | Gen2-Opt, Torsion Scan, Thermodynamic Properties | Standardized metrics for assessing performance across diverse molecular properties [28] [29] |

| Specialized Techniques | Nuclear Quantum Fluctuation Corrections, Charge Equilibration Methods | Address specific physical phenomena neglected in classical approaches [29] [27] |

This toolkit provides researchers with essential resources for developing, validating, and applying MLFFs to challenging scientific problems. The combination of high-quality reference data, advanced machine learning architectures, efficient sampling strategies, and comprehensive validation benchmarks enables the creation of models that successfully bridge the gap between quantum accuracy and computational feasibility.

Challenges and Future Directions

Despite significant progress, several challenges remain in the widespread adoption of MLFFs. The accurate prediction of free energy surfaces requires careful attention to the distribution of collective variables in training data, as models can struggle to extrapolate to high-free-energy regions when trained solely on classical simulation data [30]. For condensed-phase systems, systematic deviations in properties like liquid density have been observed, which researchers have attributed to non-negligible nuclear quantum fluctuations not captured by classical molecular dynamics sampling [29].

Future developments will likely focus on improving the data efficiency of training, enhancing model transferability across broader chemical spaces, and better integrating physical constraints and symmetries. The incorporation of nuclear quantum effects through path-integral molecular dynamics has already demonstrated significantly improved agreement with experimental measurements [29], suggesting a promising direction for increasing the physical fidelity of MLFFs. As these models continue to mature, they are poised to become standard tools in computational drug discovery and materials science, enabling simulations of complex molecular processes with accuracy approaching a quantum mechanical level of theory at dramatically reduced computational cost.

Machine learning force fields represent a transformative advancement in computational molecular science, successfully bridging the historical gap between quantum mechanical accuracy and molecular mechanics efficiency. Through innovative architectures like ResFF's residual learning framework, ByteFF's graph neural network parameterization, and MPNICE's equivariant networks with explicit electrostatics, MLFFs now achieve state-of-the-art performance across diverse molecular systems. The rigorous benchmarking, sophisticated training methodologies, and specialized toolkits presented in this technical guide provide researchers with a comprehensive foundation for leveraging these powerful technologies. As MLFF methodologies continue to evolve, they will undoubtedly expand the frontiers of computational science, enabling accurate simulation of increasingly complex molecular processes with profound implications for drug discovery, materials design, and fundamental scientific understanding.

From Theory to Practice: Methodologies and Applications in Biomolecular Simulation and Drug Design

Force Fields as the Engine for Molecular Dynamics Simulations

In Molecular Dynamics (MD) simulations, force fields serve as the fundamental engine that determines the accuracy, reliability, and predictive power of the computational model. A force field is a mathematical model comprising a set of empirical parameters and analytical functions that collectively describe the potential energy surface (PES) of a molecular system as a function of atomic coordinates [31]. The fidelity with which this PES is approximated governs the quality of the computed forces and the subsequent simulation of atomic motion over time. For researchers, scientists, and drug development professionals, the selection and application of an appropriate force field is therefore not merely a technical step, but a critical strategic decision that directly impacts the validity of scientific conclusions.

The functional form of a classical molecular mechanics force field decomposes the complex potential energy into simpler, computationally tractable terms representing bonded and non-bonded interactions [32]:

E_total = E_bond + E_angle + E_torsion + E_van_der_Waals + E_electrostatic

This decomposition enables the efficient calculation of forces for systems ranging from small molecules to massive biomolecular complexes, though it introduces approximations that must be carefully parameterized. The development and continuous refinement of these force fields represent an ongoing effort to bridge the gap between computational efficiency and quantum mechanical accuracy.
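
As a short worked relation (standard classical mechanics rather than anything specific to a particular force field), the force on atom i is the negative gradient of the total energy with respect to that atom's position. Because the energy is a sum of terms, the force is a sum of per-term gradients, which is what makes the decomposition computationally convenient:

```latex
\mathbf{F}_i = -\nabla_{\mathbf{r}_i} E_{\text{total}}
             = -\nabla_{\mathbf{r}_i}\left(
                 E_{\text{bond}} + E_{\text{angle}} + E_{\text{torsion}}
                 + E_{\text{van der Waals}} + E_{\text{electrostatic}}
               \right)
```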

Force Field Fundamentals: Functional Forms and Parameterization

Core Energy Components

The potential energy function in standard force fields is composed of several key components [32] [31]:

Bonded Interactions: These describe the energy associated with the covalent structure of molecules.

- Bond Stretching: Typically modeled as a harmonic potential, E_bond = ∑ k_r(r - r_0)², where k_r is the force constant and r_0 is the equilibrium bond length.

- Angle Bending: Also often harmonic, E_angle = ∑ k_θ(θ - θ_0)², where k_θ is the angle force constant and θ_0 is the equilibrium angle.

- Torsional Dihedrals: Described by a periodic function, E_dihedral = ∑ [V_n/2][1 + cos(nφ - γ)], where V_n is the barrier height, n is the periodicity, and γ is the phase angle.
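
The bonded terms listed above can be written as a few small functions. This is a minimal sketch that evaluates one term at a time rather than summing over a full topology. The function names and the numerical parameters are illustrative assumptions, not values from any published force field, and angles are taken in radians.

```python
import math


def bond_energy(r, k_r, r_0):
    """Harmonic bond stretching: k_r * (r - r_0)^2 (no factor of 1/2, as in the text)."""
    return k_r * (r - r_0) ** 2


def bond_force(r, k_r, r_0):
    """Force along the bond, -dE/dr, for the harmonic bond term."""
    return -2.0 * k_r * (r - r_0)


def angle_energy(theta, k_theta, theta_0):
    """Harmonic angle bending: k_theta * (theta - theta_0)^2, angles in radians."""
    return k_theta * (theta - theta_0) ** 2


def dihedral_energy(phi, terms):
    """Periodic torsion: sum over (V_n, n, gamma) of (V_n / 2) * (1 + cos(n*phi - gamma))."""
    return sum(0.5 * v_n * (1.0 + math.cos(n * phi - gamma)) for v_n, n, gamma in terms)


# Hypothetical parameters (energy in kcal/mol, length in angstrom), for illustration only.
print(bond_energy(1.10, k_r=300.0, r_0=1.00))
print(bond_force(1.10, k_r=300.0, r_0=1.00))
print(dihedral_energy(math.radians(60.0), [(2.0, 3, 0.0)]))
```

A stretched bond (r > r_0) gives a negative force along r, which pulls the atoms back toward the equilibrium length.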

Non-Bonded Interactions: These describe interactions between atoms not directly connected by covalent bonds.

- Van der Waals Forces: Typically modeled using the Lennard-Jones 12-6 potential, E_vdW = ∑ [(A_ij)/(r_ij¹²) - (B_ij)/(r_ij⁶)], which accounts for both Pauli repulsion at short distances and London dispersion attraction at intermediate distances.

- Electrostatics: Calculated using Coulomb's law, E_electrostatic = ∑ (q_i q_j)/(4πε_0 ε r_ij), where q_i and q_j are partial atomic charges, and ε is the dielectric constant.
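
The two non-bonded terms above can be sketched for a single atom pair as follows. The helper `lj_coefficients`, which converts a well depth and minimum-energy distance into A and B, and all numerical values are illustrative assumptions. The Coulomb prefactor is the commonly used approximate value of 1/(4πε_0) in kcal·Å/(mol·e²), and individual simulation packages use slightly different constants.

```python
COULOMB_CONSTANT = 332.06  # approx. 1/(4*pi*eps_0) in kcal*angstrom/(mol*e^2); package-dependent


def lj_coefficients(epsilon, r_min):
    """Convert well depth and minimum-energy distance to A, B of the 12-6 form."""
    return epsilon * r_min ** 12, 2.0 * epsilon * r_min ** 6


def lj_energy(r, a_ij, b_ij):
    """Lennard-Jones 12-6 energy: A / r^12 - B / r^6."""
    return a_ij / r ** 12 - b_ij / r ** 6


def lj_force(r, a_ij, b_ij):
    """Radial force -dE/dr; positive values are repulsive."""
    return 12.0 * a_ij / r ** 13 - 6.0 * b_ij / r ** 7


def coulomb_energy(r, q_i, q_j, eps_r=1.0):
    """Coulomb energy between partial charges q_i and q_j (in units of e)."""
    return COULOMB_CONSTANT * q_i * q_j / (eps_r * r)


# Hypothetical pair parameters, for illustration only.
a_ij, b_ij = lj_coefficients(epsilon=0.1, r_min=3.5)
for r in (3.0, 3.5, 5.0):
    print(r, lj_energy(r, a_ij, b_ij), lj_force(r, a_ij, b_ij))
print(coulomb_energy(3.0, q_i=0.4, q_j=-0.4))
```

With this parameterization the energy equals -epsilon and the force vanishes at r = r_min; shorter distances are repulsive and longer ones attractive.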

Parameterization Strategies and Challenges

The accuracy of a force field hinges on the careful parameterization of these energy terms. Traditional parameterization involves a complex optimization process that aims to reproduce experimental data (such as densities, enthalpies of vaporization, and free energies of solvation) and quantum mechanical calculations [32]. Key aspects include:

Partial Charge Derivation: Atomic partial charges are typically derived from quantum mechanical calculations using methods such as the Restrained Electrostatic Potential (RESP) approach, which fits atomic charges to reproduce the molecular electrostatic potential [33] [32].

Torsion Parameter Optimization: Dihedral parameters are particularly important as they govern conformational preferences. These are often optimized to match torsion potential energy scans computed using high-level quantum mechanical methods [33] [31].

Van der Waals Parameterization: Lennard-Jones parameters are traditionally derived from reproducing liquid properties and crystal structures of small molecule analogs, though they may not be optimal for all chemical environments, such as nucleic acid base stacking [32].

The parameterization process is complicated by the need to balance transferability—the ability to accurately describe molecules beyond those used in training—with specificity. Additionally, parameters for different energy terms are often correlated, requiring iterative refinement to avoid overfitting.
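
To make the torsion-fitting step concrete, the sketch below recovers barrier heights V_n from a torsion energy scan. It relies on simplifying assumptions that are not taken from the cited work: the scan covers the full 360° on a uniform grid, all phase angles γ are zero, and the target energies already have the other force field contributions subtracted. Under those assumptions the least-squares solution reduces to a cosine projection. The function name `fit_torsion_barriers` and the synthetic data are hypothetical.

```python
import math


def fit_torsion_barriers(energies, periodicities=(1, 2, 3)):
    """Fit V_n in sum_n (V_n / 2) * (1 + cos(n * phi)) to a uniform 360-degree scan.

    energies[k] is the target energy at phi_k = 2*pi*k / N. On such a grid the
    cosine basis functions are orthogonal, so each V_n is a simple projection
    and any constant energy offset drops out.
    """
    n_points = len(energies)
    if n_points <= 2 * max(periodicities):
        raise ValueError("scan grid is too coarse for the requested periodicities")
    fitted = {}
    for n in periodicities:
        projection = sum(
            e * math.cos(2.0 * math.pi * n * k / n_points) for k, e in enumerate(energies)
        )
        fitted[n] = 4.0 * projection / n_points
    return fitted


# Synthetic "QM" scan at 15-degree steps built from known, made-up barriers.
true_barriers = {1: 0.5, 2: 1.2, 3: 0.3}
angles = [2.0 * math.pi * k / 24 for k in range(24)]
scan = [
    sum(0.5 * v * (1.0 + math.cos(n * phi)) for n, v in true_barriers.items())
    for phi in angles
]
fitted = fit_torsion_barriers(scan)
print(fitted)
```

A negative fitted V_n corresponds to a phase of 180° for that periodicity. Production parameterization typically uses weighted least squares over non-uniform or relaxed scans, often with regularization to limit the parameter correlation mentioned above.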

Current State of Biomolecular Force Fields

Specialized Force Fields for Unique Biological Systems

Standard general-purpose force fields face challenges when applied to chemically unique biological systems, necessitating the development of specialized parameters. A prime example is the mycobacterial membrane of Mycobacterium tuberculosis, which contains exceptionally complex lipids like phthiocerol dimycocerosate (PDIM) and mycolic acids [33]. These lipids feature structural motifs such as cyclopropane rings, very long alkyl chains (C60-C90), and glycosylated headgroups that are poorly described by standard force field parameters.

The recently developed BLipidFF (Bacteria Lipid Force Fields) addresses this gap through a rigorous, quantum mechanics-based parameterization strategy [33]. Key developments include:

- Specialized Atom Typing: Implementation of chemically distinct atom categories (e.g., cX for cyclopropane carbons, cG for trehalose carbons) to capture stereoelectronic effects in mycobacterial-specific motifs [33].

- Modular Parameterization: Large, complex lipids are divided into manageable segments for quantum mechanical calculations, with charges and torsion parameters derived for each segment before reassembly into the complete molecule [33].

- Validation Against Biophysical Data: BLipidFF successfully reproduces experimental measurements of membrane rigidity and lateral diffusion rates in α-mycolic acid bilayers, properties that are poorly captured by general force fields like GAFF, CGenFF, and OPLS [33].

Comparative Performance of General Force Fields

The selection of an appropriate force field is system-dependent, as different parameter sets exhibit strengths and weaknesses across various chemical environments. Recent benchmarking studies provide guidance for researchers:

Table 1: Comparison of Force Field Performance for Different Molecular Systems

| Force Field | Chemical System | Performance Highlights | Key Limitations |

|---|---|---|---|

| BLipidFF [33] | Mycobacterial membrane lipids (PDIM, TDM, α-MA, SL-1) | Accurately captures membrane rigidity and diffusion; consistent with FRAP experiments | Specialized for bacterial lipids; limited validation beyond Mtb systems |

| CHARMM36 [34] | Liquid ether membranes (Diisopropyl ether) | Accurate density (≈1% error) and viscosity (≈10% error); good for mutual solubility | Slightly overestimates interfacial tension at higher temperatures |

| OPLS-AA/CM1A [34] | Liquid ether membranes | Reasonable density prediction (3-5% overestimation) | Poor viscosity prediction (60-130% overestimation) |

| GAFF [34] | Liquid ether membranes | - | Overestimates density by 3-5% and viscosity by 60-130% |

| COMPASS [34] | Liquid ether membranes | Accurate density prediction (<1% error) | Overestimates viscosity by ~50%; underestimates mutual solubility |

| OL-series (ol15, ol21) [32] | DNA double helix | Corrects undertwisting present in bsc0; improved description of BI/BII states | Sugar-puckering description remains challenging |

| bsc1 [32] | DNA double helix | Improved global elasticities vs bsc0; better for topologically constrained DNA | Allows artificial β-state distortions; base stacking overstabilized |

Table 2: Performance of Force Fields for Biomolecular Simulations

| Force Field | Biomolecular Application | Key Strengths | Notable Weaknesses |

|---|---|---|---|

| OPLS-AA [35] | SARS-CoV-2 PLpro protease | Maintains native fold in long simulations; minimal local unfolding | Performance dependent on water model (works best with TIP3P) |

| CHARMM27/36 [35] | SARS-CoV-2 PLpro protease | Maintains native fold over short timescales (100s of ns) | Exhibits local unfolding of N-terminal domain in longer simulations |

| AMBER03 [35] | SARS-CoV-2 PLpro protease | Maintains catalytic domain structure | Shows local unfolding in extended simulations |

| CUFIX [32] | Protein-DNA interactions | Correctly reproduces PCNA sliding diffusion along DNA | Modified nonbonded parameters specific to nucleic acid interactions |

| AMBER Cornell et al. [32] | Nucleic acids (foundation) | Basis for most modern DNA/RNA force fields | Underestimates base pairing; overestimates base stacking |