Essential Dynamics with PCA: Extracting Biological Insights from Molecular Dynamics Trajectories


Abstract

Principal Component Analysis (PCA) is a fundamental statistical technique for reducing the complexity of Molecular Dynamics (MD) trajectories to reveal their essential collective motions. This article provides a comprehensive guide for researchers and drug development professionals, covering the foundational theory of PCA in the context of protein dynamics, practical implementation steps using common analysis tools, and critical troubleshooting for robust results. It further explores advanced validation techniques to assess sampling quality and significance, compares PCA with related methods like Normal Mode Analysis, and discusses its practical application in areas such as drug discovery and conformational analysis, providing a complete framework for interpreting MD simulations.

Unlocking Protein Motions: The Core Principles of PCA in Essential Dynamics

Principal Component Analysis (PCA) is a foundational linear dimensionality reduction technique with extensive applications in exploratory data analysis, visualization, and data preprocessing [1]. By performing an orthogonal linear transformation, PCA projects high-dimensional data onto a new coordinate system where the newly constructed variables, termed principal components (PCs), are uncorrelated and ordered such that the first few retain most of the variation present in the original dataset [1] [2] [3]. This characteristic makes PCA particularly valuable for simplifying complex data structures while maintaining essential informational content, especially in scientific domains where interpreting high-dimensional data poses significant challenges.

The mathematical foundation of PCA rests on eigenvalue decomposition of the data's covariance matrix or singular value decomposition (SVD) of the data matrix itself [1] [2]. For a data matrix ( X ) with ( n ) observations and ( p ) variables, the first principal component direction ( w_{(1)} ) is defined as the unit vector that maximizes the variance of the projected data:

[ w_{(1)} = \arg\max_{\|w\| = 1} \left\{ \sum_i (x_{(i)} \cdot w)^2 \right\} = \arg\max_{\|w\| = 1} \left\{ w^T X^T X w \right\} ]

Subsequent components are obtained iteratively by subtracting the first ( k-1 ) components from ( X ) and repeating the variance maximization process on the residual matrix ( \hat{X}_k ) [1]. The full PCA decomposition can be represented in matrix form as ( T = XW ), where ( W ) is a ( p \times p ) matrix whose columns are the eigenvectors of ( X^TX ), and ( T ) contains the PC scores [1].
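The equivalence between the eigen-decomposition and SVD routes can be checked numerically. The following is a minimal sketch on synthetic data, assuming NumPy is available; the names `X`, `W`, and `T` follow the notation above, while the random data and variable names such as `Xc` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic stand-in data: n = 200 observations of p = 5 correlated variables
X = rng.normal(size=(200, 5)) @ rng.normal(size=(5, 5))
Xc = X - X.mean(axis=0)            # centre each column

# Route 1: eigen-decomposition of X^T X (np.linalg.eigh returns ascending order)
eigvals, W = np.linalg.eigh(Xc.T @ Xc)
order = np.argsort(eigvals)[::-1]
eigvals, W = eigvals[order], W[:, order]

# Route 2: SVD of the centred data; squared singular values match the eigenvalues
_, s, Vt = np.linalg.svd(Xc, full_matrices=False)

T = Xc @ W                         # PC scores, T = XW
```

The columns of `W` agree with the rows of `Vt` up to sign, and the columns of `T` are mutually uncorrelated. In practice the SVD route is usually preferred for numerical stability because it avoids forming ( X^TX ) explicitly.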

Application of PCA to Molecular Dynamics Trajectories

In the context of molecular dynamics (MD) simulations, PCA serves as a crucial tool for analyzing protein trajectories through an approach known as Essential Dynamics (ED) [4]. MD simulations generate vast amounts of high-dimensional data representing atomic coordinates over time, making dimensionality reduction essential for extracting biologically relevant motions. PCA applied to MD trajectories systematically reduces the number of dimensions needed to describe protein dynamics by filtering observed motions from largest to smallest spatial scales [4].

The process begins by constructing a covariance matrix of atomic coordinates from the trajectory data. For a protein trajectory, this typically involves using alpha carbon atoms as representative points for each residue, resulting in a ( 3m \times 3m ) real symmetric covariance matrix where ( m ) is the number of residues [4]. Eigenvalue decomposition of this matrix yields orthogonal collective modes (eigenvectors) with corresponding eigenvalues representing the variance captured by each mode. Typically, the first 20 modes sufficiently define an "essential space" that captures motions governing biological function, achieving tremendous dimensionality reduction from thousands of degrees of freedom to a manageable subset [4].

Table 1: Key Metrics for Interpreting PCA Results in MD Simulations

| Metric | Calculation | Interpretation in MD Context |
|---|---|---|
| Eigenvalues | ( \lambda_k ) from ( \mathbf{S} a_k = \lambda_k a_k ) | Quantifies variance along each PC; larger values indicate larger-amplitude collective motions, which are often the functionally relevant ones [4] |
| Scree Plot | Eigenvalues ( \lambda_k ) vs. mode index ( k ) | Identifies "kink" indicating optimal number of components to retain (Cattell criterion) [4] |
| Cumulative Variance | ( \sum_{i=1}^k \lambda_i / \sum_{j=1}^p \lambda_j ) | Measures total variance captured by first ( k ) components; >80% often sufficient [4] |
| Factor Loadings | Elements of eigenvectors ( a_k ) | Indicates contribution of original variables to each PC [1] |
| PC Scores | ( t_k = X a_k ) | Projection of original data onto principal components [1] |

Unlike Normal Mode Analysis (NMA), which assumes harmonic motions near energy minima, PCA makes no assumption of harmonicity and can capture anharmonic protein behavior, making it particularly valuable for studying large-scale conformational changes relevant to drug development [4].

Experimental Protocol for PCA in Essential Dynamics Analysis

Trajectory Preprocessing and Covariance Matrix Construction

Protocol Steps:

- Trajectory Alignment: Superimpose all MD simulation frames to a reference structure (usually the first frame or average structure) using rotational and translational fitting to remove global rotation and translation [4].

- Coordinate Extraction: Select atoms for analysis, typically alpha carbons for coarse-grained representation, extracting ( 3m ) coordinates for each of ( n ) frames [4].

- Mean Centering: Calculate the average structure across all frames and subtract it from each frame to obtain deviation vectors: ( \vec{r}_i(t) - \langle \vec{r}_i \rangle ) [4].

- Covariance Matrix Calculation: Construct the ( 3m \times 3m ) covariance matrix ( C ):

[ C_{ij} = \langle (\vec{r}_i - \langle \vec{r}_i \rangle) \cdot (\vec{r}_j - \langle \vec{r}_j \rangle) \rangle ]

where angle brackets denote time average over all frames [4].

Considerations:

- For variables with significantly different variances, use the correlation matrix instead of covariance to prevent variables with large displacements from dominating the analysis [4].

- Ensure sufficient sampling: the number of observations should substantially exceed the number of degrees of freedom to obtain reliable results [4].
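The mean-centering and covariance steps above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the implementation of any particular package: the function names and the `(n_frames, m, 3)` array layout are assumptions, the input is assumed to be already aligned, and the time average is taken with a `1/n` normalization (some tools use `1/(n-1)`).

```python
import numpy as np

def covariance_matrix(coords):
    """coords: (n_frames, m, 3) aligned positions -> (3m, 3m) covariance matrix."""
    n_frames = coords.shape[0]
    X = coords.reshape(n_frames, -1)     # flatten each frame to 3m coordinates
    dX = X - X.mean(axis=0)              # subtract the average structure
    return dX.T @ dX / n_frames          # time average over all frames

def correlation_matrix(C):
    """Rescale a covariance matrix so every coordinate has unit variance."""
    sd = np.sqrt(np.diag(C))
    return C / np.outer(sd, sd)
```

Passing the output of `covariance_matrix` to `correlation_matrix` gives the normalized alternative mentioned under Considerations.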

Eigenvalue Decomposition and Projection

Protocol Steps:

- Diagonalization: Perform eigenvalue decomposition of the covariance matrix:

[ \mathbf{C} = \mathbf{U} \mathbf{\Lambda} \mathbf{U}^T ]

where ( \mathbf{U} ) contains eigenvectors and ( \mathbf{\Lambda} ) is a diagonal matrix of eigenvalues [4].

- Sorting: Sort eigenvalues in descending order ( \lambda_1 \geq \lambda_2 \geq \ldots \geq \lambda_{3m} ) with corresponding eigenvectors [4].

- Projection: Project the centered trajectory onto the principal components to obtain PC scores:

[ \mathbf{PC}_k(t) = \mathbf{U}_k^T \cdot (\mathbf{r}(t) - \langle \mathbf{r} \rangle) ]

where ( \mathbf{U}_k ) is the ( k )-th eigenvector [4].

- Subspace Selection: Choose the number of components ( q ) to retain using scree plot analysis or cumulative variance threshold (typically 70-90%) [4].
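The four steps above (diagonalize, sort, project, select) might be combined as follows. This is a sketch under stated assumptions: `essential_subspace` is a hypothetical helper name, `C` is a precomputed covariance matrix, `dX` holds the mean-centered frames as rows, and the 0.8 default is just one value from the range quoted above.

```python
import numpy as np

def essential_subspace(C, dX, threshold=0.8):
    """Return sorted eigenvalues, eigenvectors, PC scores, and subspace size q."""
    evals, U = np.linalg.eigh(C)                 # symmetric solver, ascending order
    order = np.argsort(evals)[::-1]              # re-sort to descending eigenvalues
    evals, U = evals[order], U[:, order]
    cumulative = np.cumsum(evals) / evals.sum()  # cumulative variance fraction
    # smallest q whose cumulative variance reaches the threshold
    q = min(int(np.searchsorted(cumulative, threshold) + 1), evals.size)
    scores = dX @ U[:, :q]                       # projections onto the first q modes
    return evals, U, scores, q
```

Because `dX` is centered, the variance of each column of `scores` equals the corresponding eigenvalue, which is a convenient sanity check.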

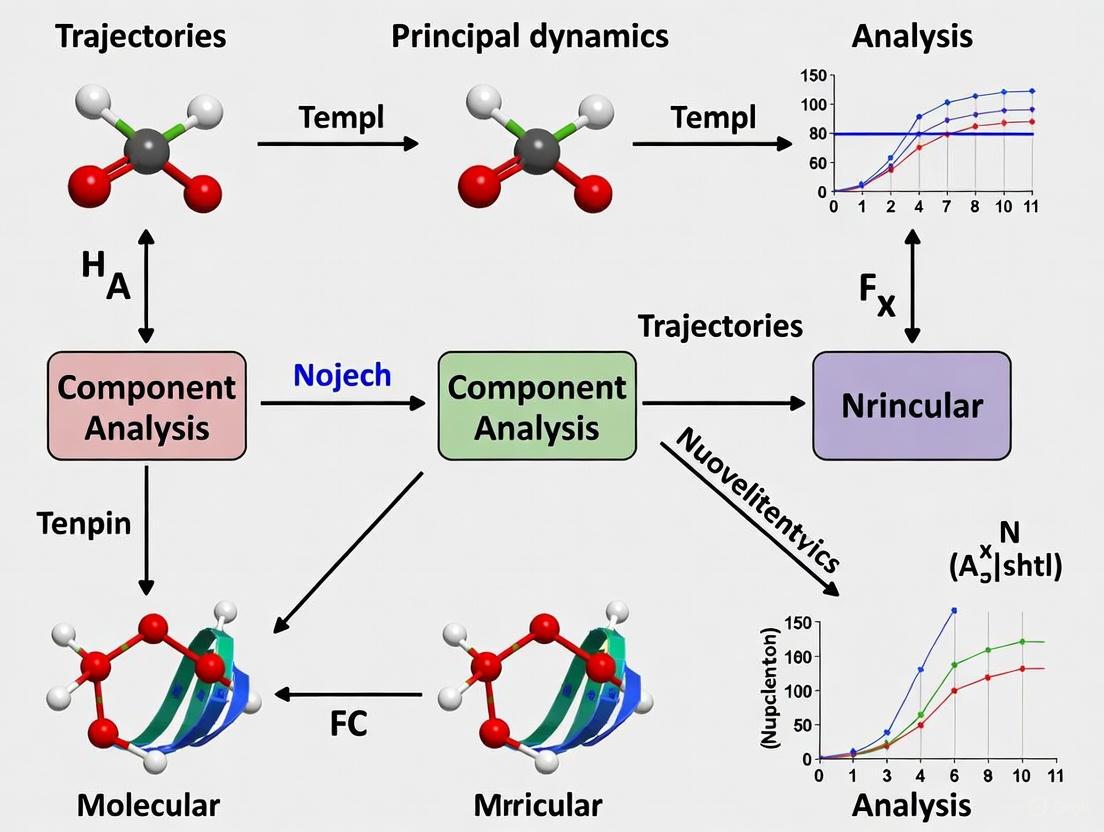

Diagram Title: PCA Workflow for MD Trajectory Analysis

Advanced Applications and Validation in Drug Development

Training Set Design for Deep Learning Potentials

Recent advances integrate PCA with machine learning for developing accurate interatomic potentials. In a 2024 study, PCA enabled the design of deep learning potentials precisely capturing phase transitions in solid-state electrolyte materials (LLZO) [5]. Researchers used PCA to evaluate the coverage of local structural features in training and test sets, defining coverage rate as the percentage of configurations in the test dataset with similar representations in the training dataset [5].

Iterative Refinement Protocol:

- Generate initial training set from crystal database and DFT calculations

- Train potential function and run molecular dynamics simulations

- Apply PCA to calculate coverage of local structural features in dynamics trajectories

- If coverage insufficient (<99%), supplement training set with structures showing high energy errors

- Repeat until convergence (coverage >99.5%) [5]
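To make the notion of a coverage rate concrete, the sketch below counts the fraction of test configurations that have a nearby training configuration in PC space. The descriptors and similarity criterion used in the cited study are not given here, so the nearest-neighbor radius test, the function name `coverage_rate`, and the parameters `n_components` and `radius` are purely illustrative assumptions.

```python
import numpy as np

def coverage_rate(train, test, n_components=2, radius=0.5):
    """Fraction of test rows within `radius` of a training row in PC space.

    train, test: (n_samples, n_features) descriptor arrays (hypothetical inputs).
    """
    mean = train.mean(axis=0)
    # principal axes of the training set from an SVD of the centred data
    _, _, Vt = np.linalg.svd(train - mean, full_matrices=False)
    W = Vt[:n_components].T
    a = (train - mean) @ W
    b = (test - mean) @ W
    # distance from every test point to its nearest training point
    nearest = np.linalg.norm(b[:, None, :] - a[None, :, :], axis=2).min(axis=1)
    return float((nearest <= radius).mean())
```

The pairwise distance array grows as the product of the two set sizes, so a tree-based neighbor search would be a more sensible choice for large datasets.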

This approach resulted in an accurate interatomic potential that described structural and dynamical properties while greatly reducing computational costs compared to pure DFT calculations [5].

Comparison with Alternative Dimensionality Reduction Methods

While PCA remains dominant in MD analysis, understanding its position relative to emerging techniques is valuable for researchers. A 2025 benchmarking study compared PCA with Random Projection (RP) methods for single-cell RNA sequencing data, revealing that RP methods not only offered computational advantages but also rivaled PCA in preserving data structure for downstream analyses [6].

Table 2: Comparison of Dimensionality Reduction Techniques for Scientific Data

| Method | Key Mechanism | Advantages | Limitations | Suitability for MD |
|---|---|---|---|---|
| Standard PCA | Eigen-decomposition of covariance matrix [2] | Mathematically elegant, interpretable components [3] | Linear assumptions, sensitive to outliers [6] | Excellent for large-scale collective motions [4] |
| Randomized PCA | Randomized SVD for approximation [6] | Computational efficiency for large datasets [6] | Approximation error, less precise | Suitable for very large MD systems |
| Kernel PCA | Nonlinear mapping to feature space [4] | Captures nonlinear relationships | Choice of kernel problematic, difficult interpretation [4] | Limited use in MD |
| Random Projection | Johnson-Lindenstrauss lemma [6] | Computational speed, distance preservation | Random variability, less established | Emerging application |

Research Reagent Solutions for PCA in MD Studies

Table 3: Essential Computational Tools for PCA in Molecular Dynamics Research

| Tool Category | Specific Software/Packages | Primary Function | Application Context |
|---|---|---|---|
| MD Simulation Engines | GROMACS, AMBER, NAMD, CHARMM | Generate trajectory data | Produce raw atomic coordinates for PCA input [4] |
| PCA Specialized Tools | Bio3D (R), MDTraj (Python), Carma | Trajectory analysis & PCA | Perform essential dynamics analysis [4] |
| General Statistical Platforms | R (FactoMineR, psych), XLSTAT (Excel), Python (scikit-learn) | General PCA implementation | Flexible analysis and visualization [3] [7] |
| Visualization Software | VMD, PyMOL, ggplot2 | Result visualization | Create publication-quality plots of essential subspaces |
| Deep Learning Integration | DeePMD-kit | Neural network potentials | Combine with PCA for training set design [5] |

Validation and Significance Assessment Protocols

Robustness Evaluation

Protocol Steps:

- Trajectory Splitting: Divide MD trajectory into multiple non-overlapping segments [4]

- Subsampling PCA: Perform PCA on each segment independently [4]

- Mode Comparison: Compare principal modes across segments using inner product or subspace angles [4]

- Consistency Assessment: Identify modes stable across multiple segments as biologically relevant [4]

Interpretation Guidelines:

- Modes with consistent directions across segments represent robust collective motions

- Inconsistent modes may indicate insufficient sampling or lack of biological significance

- For significant results, the core subspace should stabilize against sampling noise [4]
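One common way to quantify the mode comparison described above is the root mean square inner product (RMSIP) between the leading eigenvectors of two trajectory segments. The sketch below assumes each segment is a `(n_frames, 3m)` array of aligned coordinates; the helper names `top_modes` and `rmsip` are illustrative.

```python
import numpy as np

def top_modes(X, k):
    """Leading k eigenvectors (as columns) of the covariance of X (n_frames, 3m)."""
    dX = X - X.mean(axis=0)
    _, U = np.linalg.eigh(dX.T @ dX / len(dX))
    return U[:, ::-1][:, :k]             # reverse eigh's ascending order

def rmsip(U, V):
    """Root mean square inner product between two sets of k orthonormal modes."""
    k = U.shape[1]
    return float(np.sqrt(np.sum((U.T @ V) ** 2) / k))
```

For example, `rmsip(top_modes(first_half, 10), top_modes(second_half, 10))` returns a value between 0 (orthogonal subspaces) and 1 (identical subspaces). Individual modes within a well-sampled subspace can still rotate into one another, which is why a subspace measure is often more informative than mode-by-mode inner products.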

Comparison with Experimental Data

Validation Approaches:

- Compare PC projections with experimental conformational distributions from cryo-EM or NMR ensembles [4]

- Validate collective motions against known functional mechanisms from mutagenesis studies

- Correlate PC space transitions with experimentally observed conformational changes

Diagram Title: Validation Strategy for PCA Results

Through the systematic application of these protocols and validation frameworks, PCA provides researchers and drug development professionals with a powerful approach to extract essential dynamical information from complex molecular dynamics trajectories, facilitating insights into molecular mechanisms and supporting structure-based drug design efforts.

Principal Component Analysis (PCA), known as Essential Dynamics (ED) in molecular dynamics (MD) simulations, is a fundamental statistical technique for extracting the most significant collective motions from MD trajectories [8] [4]. By diagonalizing the covariance matrix of atomic coordinates, PCA reduces the immense complexity of simulation data—often involving thousands of degrees of freedom—to a manageable set of motions that capture the essential behavior of the biological system [9] [4]. This dimensionality reduction is crucial for understanding large-scale conformational changes in proteins and other biomolecules that underlie their biological function [4]. In drug discovery research, these collective motions provide critical insights into molecular mechanisms that can be targeted for therapeutic intervention [10] [11].

The power of PCA lies in its ability to systematically filter observed motions from the largest to smallest spatial scales through eigenvalue decomposition [4]. When applied to macromolecular simulations, the method requires careful removal of overall rotational and translational motion prior to analysis to focus exclusively on internal dynamics [9] [12]. For researchers investigating protein dynamics and drug-target interactions, PCA offers a robust framework for identifying functionally relevant motions that might otherwise be obscured in the high-dimensional noise of full trajectory data [4].

Mathematical Foundations

The Covariance Matrix Construction

The foundation of essential dynamics begins with constructing the covariance matrix of atomic coordinates. For a molecular system with N atoms, the covariance matrix C is a symmetric 3N × 3N matrix whose elements represent the pairwise correlations between atomic fluctuations [9] [4]. The matrix is defined as:

C~ij~ = ⟨ M~ii~^½^ (x~i~ - ⟨x~i~⟩) M~jj~^½^ (x~j~ - ⟨x~j~⟩) ⟩

where x~i~ and x~j~ represent atomic coordinates, angle brackets denote averages over the trajectory, and M is a diagonal matrix containing atomic masses (for mass-weighted analysis) or the identity matrix (for non-mass weighted analysis) [9]. In protein studies, the analysis is often coarse-grained at the residue level using C-α atoms to represent each residue's position, resulting in a more manageable 3m × 3m matrix where m is the number of residues [4].
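A minimal NumPy sketch of the definition above is given below. It assumes the coordinates of each frame are stored as x1, y1, z1, x2, ... so that every atomic mass applies to three consecutive columns; the function name is illustrative. Passing an array of ones for `masses` recovers the non-mass-weighted case.

```python
import numpy as np

def mass_weighted_covariance(X, masses):
    """X: (n_frames, 3N) aligned coordinates; masses: (N,) atomic masses."""
    sqrt_m = np.sqrt(np.repeat(masses, 3))   # one weight per x, y, z coordinate
    dX = (X - X.mean(axis=0)) * sqrt_m       # M^(1/2) (x - <x>)
    return dX.T @ dX / len(X)                # average over trajectory frames
```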

Table 1: Covariance Matrix Types and Applications in MD Analysis

| Matrix Type | Construction | Application Context | Advantages |
|---|---|---|---|
| Mass-Weighted Covariance | Incorporates atomic masses in diagonal matrix M | Essential dynamics comparing motions of atoms with different masses | Physical relevance to molecular vibrations |
| Non-Mass-Weighted Covariance | Uses identity matrix for M | Analyzing geometric conformational changes | Simplifies interpretation of geometric relationships |
| Correlation Matrix | Normalizes variables to unit variance | Comparing motions of atoms with different fluctuation magnitudes | Prevents large atomic displacements from skewing results |

Eigenvalue Decomposition

The core mathematical operation in PCA is the diagonalization of the covariance matrix through eigenvalue decomposition [9] [12]. This process solves the equation:

R^T^ C R = diag(λ~1~, λ~2~, ..., λ~3N~)

where R is an orthonormal transformation matrix whose columns are the eigenvectors (principal components), and λ~1~ ≥ λ~2~ ≥ ... ≥ λ~3N~ are the eigenvalues [9]. Each eigenvector represents a collective mode of motion, with its corresponding eigenvalue quantifying the mean-square fluctuation along that direction [9] [12]. The eigenvalues are proportional to the spatial scale of the motion, with larger eigenvalues corresponding to more extensive, collective displacements [4].

Table 2: Interpretation of Eigenvalues and Eigenvectors in PCA

| Mathematical Quantity | Physical Interpretation | Biological Significance |
|---|---|---|
| Eigenvectors | Directions of collective motions | Functionally relevant conformational changes |
| Eigenvalues | Mean-square fluctuation along eigenvector | Importance of each collective motion |
| Eigenvalue Spectrum | Relative contributions of different motion scales | Presence of large-scale concerted movements |
| Trace of C (∑λ~i~) | Total mean-square fluctuation | Overall flexibility of the molecular system |

Computational Protocol

Trajectory Preparation and Preprocessing

The initial critical step involves careful trajectory preparation to ensure meaningful results. The protocol typically includes:

Trajectory Alignment: Superimpose each frame onto a reference structure using least-squares fitting to remove overall rotational and translational motions [9] [12]. This isolates internal motions for analysis. The reference structure should be representative of the ensemble to avoid bias in the covariance matrix [9].

Atomic Selection: For protein systems, select C-α atoms to create a coarse-grained representation at the residue level [12] [4]. This reduces the dimensionality from approximately 3N~atoms~ to 3N~residues~ while retaining essential information about backbone motions.

Frame Selection: For efficiency, select a representative subset of frames (e.g., the last 1000 frames from a 100 ns simulation) while ensuring adequate sampling of conformational space [12].

Coordinate Saving: Ensure coordinates are saved at sufficient frequency to capture motions of interest, typically every 10-100 ps depending on the simulation timescale [4].
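The least-squares superposition in the alignment step is commonly done with the Kabsch algorithm. The following is a sketch under the assumption that each frame is an `(m, 3)` array of the selected atoms and that all atoms are weighted equally; the function names are illustrative, and analysis packages provide their own optimized fitting routines.

```python
import numpy as np

def kabsch_fit(mobile, reference):
    """Least-squares superpose mobile (m, 3) onto reference (m, 3)."""
    mob_c = mobile - mobile.mean(axis=0)          # remove translation
    ref_c = reference - reference.mean(axis=0)
    H = mob_c.T @ ref_c                           # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))        # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T       # optimal proper rotation
    return mob_c @ R.T + reference.mean(axis=0)

def align_trajectory(traj, reference):
    """Fit every frame of traj (n_frames, m, 3) onto the reference structure."""
    return np.stack([kabsch_fit(frame, reference) for frame in traj])
```

The aligned array can then be reshaped to `(n_frames, 3m)` for covariance analysis.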

Covariance Matrix Calculation and Diagonalization

The core computational workflow implements the mathematical operations described under Mathematical Foundations:

Figure 1: Computational workflow for covariance matrix diagonalization in essential dynamics analysis.

Matrix Construction: Build the covariance matrix from aligned coordinates using the formulation given under The Covariance Matrix Construction. For a system with m C-α atoms, this generates a 3m × 3m symmetric matrix [12] [4].

Diagonalization: Perform eigenvalue decomposition using standard linear algebra libraries (LAPACK, ARPACK). This computationally intensive step scales as O(n^3^) for a matrix of size n × n, though efficient algorithms exist for sparse matrices [4].

Sorting Modes: Order eigenvectors by descending eigenvalues, so that λ~1~ corresponds to the most significant motion, λ~2~ to the next most significant orthogonal motion, and so on [9] [13].

Analysis and Interpretation

The final phase focuses on extracting biological insights from the mathematical results:

Dimensionality Reduction: Select the top k eigenvectors that capture ~80-90% of the total variance, defining the "essential subspace" [4]. This typically requires only 10-20 modes even for large proteins [4].

Projection Analysis: Project the original trajectory onto the principal components to create principal component trajectories: p(t) = R^T^ M^½^ (x(t) - ⟨x⟩) [9]. These projections reveal the temporal evolution along each collective mode.

Cluster Analysis: Apply k-means or hierarchical clustering to group similar conformations in the essential subspace [12]. This identifies metastable states and transition pathways.

Visualization: Generate porcupine plots to visualize collective motions by showing eigenvectors as arrows from atomic positions, with arrow length proportional to the component's contribution to the motion [4].
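As an illustration of the visualization step, the arrow coordinates for a porcupine plot can be derived directly from one eigenvector. In this sketch the arrow length is scaled by the square root of the eigenvalue (the root-mean-square amplitude of the mode), which is one common choice and an assumption here; the function name and the `scale` parameter are hypothetical, and a non-mass-weighted eigenvector is assumed.

```python
import numpy as np

def porcupine_arrows(mean_structure, eigenvector, eigenvalue, scale=1.0):
    """Return (start, end) arrays of shape (m, 3) for the arrows of one mode.

    mean_structure: (m, 3) average positions; eigenvector: (3m,) unit vector.
    """
    # per-atom displacement, scaled by the RMS amplitude of the mode
    disp = eigenvector.reshape(-1, 3) * scale * np.sqrt(eigenvalue)
    return mean_structure, mean_structure + disp
```

The two arrays can be passed to any molecular viewer or plotting library that draws line segments or cones between point pairs.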

Research Reagent Solutions

Table 3: Essential Software Tools for Covariance Matrix Analysis in MD Research

| Tool Name | Application Context | Function in PCA | Access |
|---|---|---|---|
| GROMACS (gmx covar, gmx anaeig) | MD simulation and analysis | Covariance matrix construction, diagonalization, and projection analysis | Open source [9] |
| Bio3D R Package | Statistical analysis of biomolecular structures | PCA, clustering, and visualization of trajectory data | Open source [12] |
| MDAnalysis/MDTraj | Python library for MD analysis | Trajectory manipulation, RMSD calculation, and PCA | Open source [12] |
| Modeller | Homology modeling | Structure preparation for MD simulations | Academic use [11] |
| AutoDock Vina | Molecular docking | Binding site analysis and virtual screening | Open source [11] |

Application Notes for Drug Discovery

In structure-based drug design, PCA of MD trajectories identifies dynamic patterns associated with drug binding and resistance mechanisms. For example, studies on the βIII-tubulin isotype—overexpressed in various cancers and linked to anticancer drug resistance—utilized PCA to understand how collective motions influence Taxol-site binding [11]. Screening of 89,399 natural compounds combined with MD simulations and essential dynamics revealed structural stability changes in αβIII-tubulin upon ligand binding, guiding the selection of compounds with optimal binding affinity [11].

Similarly, research on quercetin analogues for neuroprotective applications employed PCA to identify molecular descriptors critical for blood-brain barrier permeability [14]. The analysis reduced dimensionality from numerous molecular descriptors to principal components representing intrinsic solubility and lipophilicity—key factors determining CNS distribution [14]. This approach enabled rational design of analogues with improved neuroprotective potential.

When applying PCA in drug discovery projects, these protocol adaptations are recommended:

Binding Site Analysis: Focus covariance matrix construction on binding site residues rather than global protein structure to enhance resolution of pharmaceutically relevant motions [11].

Comparative Essential Dynamics: Perform separate PCA on apo and ligand-bound trajectories, then compare essential subspaces to identify drug-induced changes in collective motions [11] [4].

Machine Learning Integration: Combine PCA with classification algorithms to identify active compounds based on chemical descriptors and binding affinities [11].

Figure 2: Drug discovery workflow integrating essential dynamics analysis for ligand design.

Validation and Significance Assessment

Robust interpretation of essential dynamics requires careful validation of the statistical significance of results:

Convergence Testing: Split the trajectory into halves and calculate the overlap between essential subspaces using metrics like cumulative subspace overlap or root mean square inner product [9] [4]. High overlap (>0.7) indicates sufficient sampling.

Cosine Content Analysis: Calculate the cosine content of principal components to detect random diffusion: (2/T) [∫cos(iπt/T)p~i~(t)dt]^2^ [∫p~i~^2^(t)dt]^−1^ [9]. Values near 1 suggest the motion may represent random diffusion rather than potential-directed dynamics.

Scree Plot Examination: Plot eigenvalues against mode index and look for a "kink" (Cattell criterion) where eigenvalues level off, indicating the transition from essential to near-constraint motions [4].

Collectivity Measurement: Compute the collectivity of each mode κ = (1/m) exp(-∑~i=1~^m^ m~i~^2^ ln m~i~^2^) where m~i~ are normalized components [4]. High collectivity (>0.5) indicates cooperative motions involving many atoms.
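The cosine content and collectivity formulas above can be evaluated numerically as sketched below. Two assumptions are made: the time integrals are approximated by a midpoint sum over equally spaced frames, and the normalized components in the collectivity formula are taken as per-atom squared displacements (summing the x, y, z contributions of each atom). The function names are illustrative.

```python
import numpy as np

def cosine_content(p, i):
    """Cosine content of projection p (n_frames,) for the i-th PC (i = 1, 2, ...)."""
    n = len(p)
    t = np.arange(n) + 0.5                     # midpoint of each frame interval
    c = np.cos(i * np.pi * t / n)
    return float((2.0 / n) * np.dot(c, p) ** 2 / np.dot(p, p))

def collectivity(eigenvector):
    """Collectivity of a (3m,) mode: 1/m for one moving atom, 1 for uniform motion."""
    w = np.sum(eigenvector.reshape(-1, 3) ** 2, axis=1)   # per-atom squared amplitude
    w = w / w.sum()
    w = w[w > 0]                               # 0 * ln(0) is treated as 0
    m = eigenvector.size // 3
    return float(np.exp(-np.sum(w * np.log(w))) / m)
```

A projection that is exactly a half-period cosine yields a cosine content of 1, while a fast oscillation yields a value near 0.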

Properly validated PCA provides unprecedented insight into the collective motions governing biological function and molecular recognition, bridging the gap between static structural data and dynamic functional mechanisms in pharmaceutical research.

Principal Component Analysis (PCA) serves as a foundational technique in the analysis of Molecular Dynamics (MD) trajectories, a method often termed Essential Dynamics in this field. MD simulations of biological macromolecules, such as proteins, generate high-dimensional data representing the collective motion of thousands of atoms. PCA reduces this complexity by identifying a small set of collective coordinates that capture the most significant structural fluctuations, separating essential motion from localized, random vibrations [9] [15]. This dimensionality reduction is achieved by solving an eigenvalue problem for the covariance matrix of the atomic coordinates, yielding eigenvectors that define the directions of concerted motions (the principal components) and eigenvalues that quantify their variance [2] [16]. Within drug development, applying PCA to MD trajectories of protein-ligand complexes provides critical insights into the structural adaptations and dynamics that underpin molecular recognition, binding affinity, and allosteric mechanisms, thereby facilitating more rational drug design.

Mathematical Foundations: From Covariance Matrix to Principal Components

The Covariance Matrix

The starting point for PCA is the construction of the covariance matrix from the MD trajectory. After removing global translational and rotational motions by superimposing each frame onto a reference structure, the covariance matrix ( C ) is built from the atomic coordinates [16] [9]. For a system with ( N ) atoms, the mass-weighted covariance matrix is a ( 3N \times 3N ) symmetric matrix defined by its elements:

[C_{ij} = \left \langle M_{ii}^{\frac{1}{2}} (x_i - \langle x_i \rangle) M_{jj}^{\frac{1}{2}} (x_j - \langle x_j \rangle) \right \rangle]

Here, ( x_i ) and ( x_j ) represent atomic coordinates, ( \langle \rangle ) denotes the average over all trajectory frames, and ( M ) is a diagonal matrix containing the atomic masses (for mass-weighted analysis) or simply the identity matrix (for non-mass weighted analysis) [9]. This matrix encapsulates the correlations between the motions of every pair of atoms in the system.

The Eigenvalue Problem

The core of PCA lies in solving the eigenvalue problem for this covariance matrix:

[C \mathbf{a}_k = \lambda_k \mathbf{a}_k]

In this equation:

- ( \mathbf{a}_k ) is the ( k )-th eigenvector, a unit vector defining the direction of a collective mode in the ( 3N )-dimensional coordinate space [2].

- ( \lambda_k ) is the corresponding eigenvalue, which is equal to the mean square fluctuation of the trajectory along that eigenvector [9]. The eigenvalues are always real and non-negative, and by convention, they are sorted in descending order: ( \lambda_1 \geq \lambda_2 \geq \ldots \geq \lambda_{3N} ).

The set of eigenvectors ( \{\mathbf{a}_1, \mathbf{a}_2, \ldots, \mathbf{a}_{3N}\} ) forms an orthonormal basis set, meaning they are mutually perpendicular (( \mathbf{a}_i \cdot \mathbf{a}_j = 0 ) for ( i \neq j )) and each has unit length [2]. This orthogonality implies that the motions described by different principal components are uncorrelated.

Principal Components, Scores, and Variance

The eigenvectors ( \mathbf{a}_k ) are referred to as the principal components (PCs) or essential modes. They represent independent, collective motions of the atoms, such as domain movements or hinge-bending motions in a protein [9] [15].

Projecting the MD trajectory onto a principal component transforms the original coordinates into a one-dimensional time series called the principal component score:

[\mathbf{p}(t) = R^T M^{\frac{1}{2}} (\mathbf{x}(t) - \langle \mathbf{x} \rangle)]

Here, ( R ) is the matrix whose columns are the eigenvectors, so ( \mathbf{p}(t) ) collects the scores for all components. Its ( k )-th element, written ( \mathbf{p}_k(t) ), describes the evolution of the structure along the collective coordinate ( \mathbf{a}_k ) at time ( t ) [9].

The importance of each principal component is directly given by its eigenvalue ( \lambda_k ). The total variance in the dataset is the sum of all eigenvalues. Therefore, the fraction of the total variance described by the ( k )-th PC is:

[\text{Fractional Variance} = \frac{\lambda_k}{\sum_{i=1}^{3N} \lambda_i}]

The first few PCs, associated with the largest eigenvalues, typically describe the large-scale, collective motions that are most biologically relevant [9] [15].

Table 1: Key Mathematical Quantities in PCA for Essential Dynamics

| Quantity | Symbol | Interpretation in Essential Dynamics |
|---|---|---|
| Covariance Matrix | ( C ) | A symmetric matrix capturing the pairwise correlations in atomic motions from the MD trajectory. |
| Eigenvector | ( \mathbf{a}_k ) | The ( k )-th principal component; defines a direction of collective atomic motion. |
| Eigenvalue | ( \lambda_k ) | The mean square fluctuation of the trajectory along ( \mathbf{a}_k ); quantifies the variance explained by that PC. |
| Projection (Score) | ( \mathbf{p}_k(t) ) | The time-dependent displacement of the structure along the principal component ( \mathbf{a}_k ). |
| Cumulative Variance | ( \sum_{i=1}^k \lambda_i / \sum_{j=1}^{3N} \lambda_j ) | The total variance captured by the first ( k ) principal components. |

Quantitative Data on Variance and Dimensionality Reduction

A central tenet of PCA is that the data's essential dynamics are captured in a subspace of greatly reduced dimensionality. The eigenvalues are the direct quantitative measure that validates this.

Table 2: Typical Variance Distribution in PCA of a Protein MD Trajectory

| Principal Components | Cumulative Variance Explained | Biological Interpretation |
|---|---|---|
| PC1 (First Component) | Often 20-40% | Describes the dominant, large-amplitude collective motion (e.g., domain closure). |
| PC1 - PC2 | Often 30-50% | Captures the two most dominant modes, often defining a conformational subspace. |
| PC1 - PC10 | Often 70-90% | Describes the vast majority of collective, functionally relevant motions. |
| Remaining (PC11 - PC3N) | ~10-30% | Typically represents uncorrelated, localized noise and small-scale vibrations. |

As shown in Table 2, the first few principal components commonly account for the majority of the total structural variance [15]. This allows researchers to focus on a small number of collective variables, dramatically simplifying the analysis. The standard practice is to retain enough components to explain a high percentage (e.g., 90-95%) of the total variance, as judged by the cumulative variance [17]. The cumulative variance is a one-dimensional array whose ( i )-th entry is the sum of the fractional variances of the first ( i ) components [17]. The number of components required to reach a cumulative variance of 0.95 is often far smaller than the total number of components, highlighting the power of PCA for data reduction [17].
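The fractional and cumulative variance arrays described above follow directly from the eigenvalues. The sketch below is illustrative: `variance_profile` and its `target` parameter are hypothetical names, and the eigenvalues in the usage example are made-up numbers rather than results from a real trajectory.

```python
import numpy as np

def variance_profile(eigenvalues, target=0.95):
    """Return fractional variance, cumulative variance, and components needed."""
    lam = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]   # descending order
    fractional = lam / lam.sum()
    cumulative = np.cumsum(fractional)
    # smallest number of components whose cumulative variance reaches the target
    n_keep = min(int(np.searchsorted(cumulative, target) + 1), lam.size)
    return fractional, cumulative, n_keep

frac, cum, n_keep = variance_profile([50.0, 30.0, 15.0, 4.0, 1.0], target=0.9)
```

With these example eigenvalues the first two components explain 80% of the variance, so three components are needed to pass the 0.9 target.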

Experimental Protocol for PCA of MD Trajectories

The following diagram illustrates the end-to-end protocol for performing Principal Component Analysis on a molecular dynamics trajectory.

Step-by-Step Methodology

Step 1: Trajectory Preprocessing and Alignment

- Objective: Remove global translations and rotations to focus on internal dynamics.

- Protocol: Using a tool like GROMACS's gmx covar or MDAnalysis, least-squares fit every frame of the trajectory to a reference structure, typically the first frame or an average structure [9] [15]. This step is critical because the covariance matrix and the resulting principal components are sensitive to the choice of the reference structure [9].

- Software Commands (GROMACS example):

Step 2: Construction of the Covariance Matrix

- Objective: Compute the ( 3N \times 3N ) covariance matrix of atomic coordinates.

- Protocol: Calculate the covariance matrix using the aligned trajectory. The analysis can be mass-weighted or non-mass weighted. It is often performed on the backbone atoms (Cα, C, N) to reduce computational cost and focus on the protein's core dynamics [17] [16].

- Software Commands (GROMACS example):

Step 3: Diagonalization and Extraction of Eigenvectors and Eigenvalues

- Objective: Solve the eigenvalue problem to obtain the principal components and their variances.

- Protocol: This step is performed internally by gmx covar or equivalent software. The output includes:

- eigenvalues.xvg: A file listing all eigenvalues ( \lambda_k ) in descending order.

- eigenvectors.trr: A trajectory file containing the eigenvectors ( \mathbf{a}_k ), which can be visualized as pseudo-trajectories [9].

Step 4: Analysis of Variance

- Objective: Determine the significance of each principal component and the dimensionality of the essential subspace.

- Protocol: Calculate the fractional and cumulative variance for each PC from the eigenvalues. Plot the cumulative variance versus the component index. The number of components ( n ) required to explain a desired threshold (e.g., 80-90%) of the total variance is identified for subsequent analysis [17].

Step 5: Projection of the Trajectory

- Objective: Visualize the conformational sampling along the most important PCs.

- Protocol: Project the original trajectory onto the first 2 or 3 principal components to create a low-dimensional representation of the conformational landscape.

- Software Commands (GROMACS example):

The output projection.xvg contains the PC scores ( \mathbf{p}_1(t) ) and ( \mathbf{p}_2(t) ) for each frame, which can be plotted to reveal clusters of conformations and transitions between them.
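As an illustration of what such a projection computes, the following NumPy sketch projects a synthetic, already-aligned trajectory onto its first two principal components. The helper `project_trajectory` and the synthetic data are assumptions made for this example; it does not read GROMACS output files.

```python
import numpy as np

def project_trajectory(coords, eigenvectors, n_pcs=2):
    """Project (n_frames, 3N) coordinates onto the first n_pcs eigenvectors
    (stored as columns), after subtracting the mean structure."""
    centered = coords - coords.mean(axis=0)
    return centered @ eigenvectors[:, :n_pcs]

rng = np.random.default_rng(1)
n_frames, n_coords = 400, 9  # 3 hypothetical atoms
# Synthetic data: two hidden collective coordinates plus small noise
basis = np.linalg.qr(rng.normal(size=(n_coords, n_coords)))[0]
latent = rng.normal(size=(n_frames, 2)) * np.array([4.0, 2.0])
coords = latent @ basis[:, :2].T + rng.normal(scale=0.05, size=(n_frames, n_coords))

# Covariance matrix and its eigenvectors, sorted by decreasing eigenvalue
cov = np.cov(coords, rowvar=False)
evals, evecs = np.linalg.eigh(cov)
order = np.argsort(evals)[::-1]
evals, evecs = evals[order], evecs[:, order]

scores = project_trajectory(coords, evecs, n_pcs=2)  # columns: p_1(t), p_2(t)
```

Plotting `scores[:, 0]` against `scores[:, 1]` gives the familiar PC1 versus PC2 scatter plot; the variance of each score column equals the corresponding eigenvalue.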

Step 6: Visualization and Interpretation

- Objective: Understand the physical nature of the collective motions described by the PCs.

- Protocol:

- Porcupine Plots: Visualize an eigenvector by displacing the structure along the PC direction and drawing arrows from the original atomic positions to the displaced ones, showing the direction and magnitude of motion [15].

- Motion Animation: Create a movie of the motion by traversing the eigenvector as a coordinate.

- Software: Tools like VMD (with its Normal Mode Wizard plugin) or PyMOL are commonly used for this purpose [16].

The Scientist's Toolkit: Essential Research Reagents and Software

Table 3: Key Software Tools for PCA in Essential Dynamics

| Tool / "Reagent" | Function / Application | Reference |

|---|---|---|

| GROMACS | MD simulation suite; includes gmx covar for building/diagonalizing the covariance matrix and gmx anaeig for projection and analysis. | [9] |

| MDAnalysis | Python library for MD analysis; provides a PCA class to perform PCA on trajectories, suitable for custom analysis workflows. | [17] |

| CPPTRAJ | The analytical engine of AmberTools; powerful tool for trajectory analysis, including PCA. | [16] |

| VMD | Molecular visualization program; used to visualize eigenvectors (e.g., porcupine plots) and project trajectories via plugins like NMWiz. | [16] |

| Bio3D (R) | R package for comparative structural and sequence analysis; includes comprehensive tools for performing and visualizing PCA. | |


Application Notes and Advanced Protocols

Validation of Principal Components

To ensure the identified essential motions are physically meaningful and not artifacts of random diffusion, validation is crucial.

Cosine Content Analysis: A key diagnostic test. The principal components of random diffusion are cosines. The cosine content of a PC score ( p_i(t) ) is calculated as:

[ \frac{2}{T} \left( \int_0^T \cos\left(\frac{i \pi t}{T}\right) p_i(t) \, \mathrm{d}t \right)^2 \left( \int_0^T p_i^2(t) \, \mathrm{d}t \right)^{-1} ]

Values close to 1 indicate that the motion along that PC resembles random diffusion, while low values suggest a motion governed by the internal potential of the protein [9].
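The cosine content formula above can be evaluated numerically on a uniformly sampled projection. The sketch below is a plain NumPy illustration using trapezoidal integration. The function name and test signals are assumptions for this example, and it is not the GROMACS implementation.

```python
import numpy as np

def cosine_content(scores, i):
    """Cosine content of the projection p_i(t) onto PC i (i = 1, 2, ...).

    scores: 1D array of p_i(t) sampled at uniform time intervals.
    Time is measured in units of the sampling interval, so T = n - 1.
    """
    p = np.asarray(scores, dtype=float)
    n = p.size
    t = np.arange(n)
    T = n - 1
    cos_term = np.cos(i * np.pi * t / T)

    def trapezoid(y):
        # Trapezoidal rule with unit spacing
        return np.sum(0.5 * (y[1:] + y[:-1]))

    numerator = trapezoid(cos_term * p) ** 2
    denominator = trapezoid(p ** 2)
    return (2.0 / T) * numerator / denominator

# Test signals: a half-period cosine (diffusion-like) and a full-period cosine
grid = np.linspace(0.0, 1.0, 1001)
diffusive_like = cosine_content(np.cos(np.pi * grid), i=1)
orthogonal_like = cosine_content(np.cos(2.0 * np.pi * grid), i=1)
```

Here `diffusive_like` comes out close to 1 and `orthogonal_like` close to 0, matching the interpretation given above.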

Overlap Analysis between Simulation Halves: Split the trajectory into two halves (e.g., first and second) and perform PCA independently on each. A high overlap between the essential subspaces of both halves, calculated using a defined metric [9], indicates robust, well-sampled motions.

Advanced Application: Iterative Training Set Construction for Machine Learning

PCA has evolved into a key tool for developing modern machine-learning interatomic potentials. In a recent study, PCA was used to ensure the comprehensiveness of the training set for a Deep Potential (DP) model of the solid-state electrolyte LLZO [5].

Protocol:

- An initial DP model is trained on a limited dataset.

- This model is used to run MD simulations, generating a test set of new configurations.

- PCA is performed on the feature matrices of both the training set and the test set.

- The coverage rate—the percentage of test-set configurations that are similar to those in the training set—is calculated from the PCA results.

- If coverage is low (e.g., initial rate of 75%), configurations from the test set that are poorly represented are used to augment the training set.

- The process is iterated until the coverage rate is high (e.g., >99%), ensuring the DP model is accurate across a wide range of atomic environments [5].

This iterative feedback loop, guided by PCA, enables the creation of robust and precise simulation tools for studying complex materials, a methodology with clear potential for biomolecular simulations in drug discovery.

Proteins are not static entities; their internal motions and conformational changes are essential for biological functions such as substrate binding, catalysis, and allosteric regulation [18]. The central dogma of essential dynamics (ED) posits that the complex, high-dimensional fluctuations of proteins can be effectively described by a small number of collective coordinates that define an essential subspace [4]. This subspace captures the large-amplitude, concerted motions that are functionally relevant, while ignoring smaller, localized fluctuations that often represent noise. The method is founded on the observation that the conformational freedom of a protein in its folded state occupies a restricted region of the complete conformational space, and the geometry of this essential subspace can be extracted from molecular dynamics (MD) trajectories using principal component analysis (PCA) [4] [19].

The concept of the free energy landscape (FEL) is fundamental to understanding these dynamics [18]. A protein can exist in an ensemble of conformational substates, and the populations of these substates, as well as the kinetics of transitions between them, are governed by the topography of the FEL. The ruggedness of this landscape dictates the protein's dynamic personality. The large-scale concerted motions described by the essential subspace are the ones that enable the protein to sample different basins on this landscape, facilitating biological activity.

Theoretical Foundation: From PCA to the Essential Subspace

Principal Component Analysis of MD Trajectories

Principal Component Analysis (PCA) is a multivariate statistical technique that performs a linear transformation of the original high-dimensional data to a new coordinate system. When applied to an MD trajectory, PCA identifies the collective modes of atomic motion [4]. The process begins by considering the 3N-dimensional vector (where N is the number of atoms) representing the structure at each time step. After removing global translations and rotations, the covariance matrix of atomic positional fluctuations is constructed [19].

The covariance matrix (C-matrix) is a 3N x 3N real, symmetric matrix. For a coarse-grained analysis, often only Cα atoms are used, leading to a 3m x 3m matrix for m residues [4]. Mathematically, the elements of the covariance matrix C are given by:

( C_{ij} = \langle (r_i - \langle r_i \rangle) (r_j - \langle r_j \rangle) \rangle )

where ( r_i ) and ( r_j ) are atomic coordinates, and ( \langle \rangle ) denotes the average over the entire trajectory. An eigenvalue decomposition of C yields eigenvectors and eigenvalues [4]. The eigenvectors represent the independent collective motions (the principal components), while the corresponding eigenvalues quantify the variance (mean square fluctuation) captured by each mode [19]. The eigenvectors are ordered such that the first principal component (PC1) accounts for the largest variance in the data, the second (PC2) for the next largest, and so on.
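A minimal NumPy sketch of this construction follows, assuming the trajectory has already been aligned and flattened to an array of shape (frames, 3N). The function name `covariance_pca` and the synthetic "trajectory" are illustrative assumptions.

```python
import numpy as np

def covariance_pca(coords):
    """coords: (n_frames, 3N) array of aligned Cartesian coordinates."""
    mean_structure = coords.mean(axis=0)
    fluctuations = coords - mean_structure
    # C_ij = <(r_i - <r_i>)(r_j - <r_j>)>, with <> the average over frames
    cov = fluctuations.T @ fluctuations / coords.shape[0]
    # eigh is appropriate for real symmetric matrices; it returns ascending order
    evals, evecs = np.linalg.eigh(cov)
    order = np.argsort(evals)[::-1]
    return cov, evals[order], evecs[:, order]

rng = np.random.default_rng(0)
# Synthetic data: 500 frames, 4 atoms (12 coordinates), one dominant collective motion
direction = rng.normal(size=12)
direction /= np.linalg.norm(direction)
amplitude = rng.normal(scale=3.0, size=(500, 1))
coords = amplitude * direction + rng.normal(scale=0.1, size=(500, 12))

cov, evals, evecs = covariance_pca(coords)
pc1 = evecs[:, 0]  # eigenvector with the largest eigenvalue
```

In this toy case, PC1 recovers the planted direction up to an overall sign, and the eigenvalues sum to the trace of the covariance matrix (the total variance).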

Defining the Essential Subspace

The "essential subspace" is typically defined by the first few principal components (often between 2 and 20) that account for the majority of the global, collective motions of the protein [4]. A scree plot, which shows the eigenvalues sorted from largest to smallest, is used to identify a "kink" (the Cattell criterion). The modes before this kink are considered the essential modes [4]. Alternatively, one can select the top p modes that collectively capture a specific fraction (e.g., 80%) of the total variance [4].

The power of this approach lies in its drastic dimensionality reduction. A trajectory with millions of degrees of freedom can be described by a handful of essential modes, simplifying visualization and interpretation. Projecting the original trajectory onto these essential modes transforms the complex motion into a low-dimensional "projection" or "weight" over time, revealing the dominant conformational pathways [19].

Quantitative Analysis of the Essential Subspace

Variance Explained by Principal Components

Table 1: Typical Variance Distribution in a PCA of a Protein Trajectory

| Principal Component | Individual Variance (%) | Cumulative Variance (%) | Biological Interpretation |

|---|---|---|---|

| PC1 | 90.3 | 90.3 | Large-scale domain motion (e.g., Open/Closed transition) |

| PC2 | 4.1 | 94.4 | Torsional twist of domains |

| PC3 | 1.9 | 96.4 | Helical breathing motions |

| PC4-PC10 | <1.0 each | ~99.0 | Localized loop and side-chain rearrangements |

| Remaining Modes | Negligible | 100.0 | High-frequency atomic fluctuations |

Data adapted from an analysis of adenylate kinase (AdK), which undergoes a closed-to-open conformation transition [19]. The first three principal components alone captured 96.4% of the total trajectory variance, a common result for many globular proteins.

Comparative Analysis of Force Fields in the Essential Subspace

Table 2: Comparison of MD Force Fields Based on Essential Subspace Overlap and Experimental Agreement

| Force Field | Subspace Overlap with ff99SB-ILDN | Agreement with NMR Data | Notable Characteristics |

|---|---|---|---|

| Amber ff99SB-ILDN | (Reference) | Good | Balanced for stable, folded proteins [20] |

| Amber ff99SB*-ILDN | Very High | Good | Reparameterized for helix/coil balance; indistinguishable from ff99SB-ILDN in folded state simulations [20] |

| CHARMM22* | High | Good | Accurate description of local structure and dynamics [20] |

| CHARMM27 | High | Good | Comparable to CHARMM22* for essential motions [20] |

| Amber ff03/ff03* | Moderate | Intermediate | Distinct essential subspace compared to ff99SB-derived fields [20] |

| OPLS | Low | Poor (Conformational Drift) | Substantial conformational drift affects essential dynamics [20] |

| CHARMM22 | Low | Poor (Conformational Drift) | Similar drift issues as OPLS [20] |

This analysis demonstrates that while many modern force fields preserve large-scale, conserved motions, finer details in the essential subspace can distinguish their accuracy [20].

Protocols for Essential Subspace Analysis

Protocol 1: Performing PCA on an MD Trajectory Using MDAnalysis

This protocol details the steps for a standard PCA using the MDAnalysis Python package [19].

Step 1: Preparation and Alignment

- Select a representative atom group: Typically, the protein backbone (Cα, C, N) or Cα atoms only are used to reduce dimensionality and focus on the mainchain conformation.

- Align the trajectory: Superimpose every frame of the trajectory to a reference structure (e.g., the first frame or an average structure) using a rotational and translational fit on the selected atom group. This removes global motions, leaving only internal fluctuations.
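To make the alignment step concrete, the sketch below implements an unweighted least-squares superposition (the Kabsch algorithm) in NumPy. It illustrates the underlying fit only and is not the MDAnalysis implementation; the function name and test coordinates are assumptions, and no mass weighting is applied.

```python
import numpy as np

def kabsch_fit(mobile, reference):
    """Least-squares superpose `mobile` (N, 3) onto `reference` (N, 3)."""
    mob_center = mobile.mean(axis=0)
    ref_center = reference.mean(axis=0)
    P = mobile - mob_center      # centered mobile coordinates
    Q = reference - ref_center   # centered reference coordinates
    H = P.T @ Q                  # 3x3 cross-covariance matrix
    U, S, Vt = np.linalg.svd(H)
    # Guard against an improper rotation (reflection)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return P @ R.T + ref_center

rng = np.random.default_rng(2)
reference = rng.normal(size=(6, 3))
theta = 0.7
rot_z = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                  [np.sin(theta),  np.cos(theta), 0.0],
                  [0.0,            0.0,           1.0]])
# A rotated and translated copy of the reference plays the role of one frame
mobile = reference @ rot_z.T + np.array([1.0, -2.0, 0.5])
fitted = kabsch_fit(mobile, reference)
rmsd_after = np.sqrt(np.mean(np.sum((fitted - reference) ** 2, axis=1)))
```

Applying the same function to every frame of a trajectory removes global translation and rotation relative to the chosen reference.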

Step 2: Running the Principal Component Analysis

- Instantiate and run the PCA object: The PCA class is called with the universe, the atom selection, and parameters. Note that the trajectory should be pre-aligned; setting align=True within the PCA class is not sufficient.

- The analysis outputs:

- pc.p_components: Eigenvectors (principal components).

- pc.variance: Eigenvalues (variance along each component).

- pc.cumulated_variance: Cumulative variance.

Step 3: Projecting the Trajectory

- Transform the trajectory: Project the original coordinates into the essential subspace to obtain the weights (projections) for each frame.

Protocol 2: Validating and Comparing Essential Subspaces

Step 1: Assessing Sampling Convergence

- Check for stability: Split the trajectory into halves or thirds and perform PCA on each subset independently. Compare the dominant eigenvectors (e.g., PC1) from each subset to ensure they are similar in direction. Significant differences indicate insufficient sampling.

- Use a scree plot: Plot the eigenvalues against the mode index. A clear "elbow" or kink suggests a natural separation between essential and non-essential modes [4].

Step 2: Comparing Simulations or Force Fields

- Calculate subspace overlaps: The similarity between two essential subspaces (e.g., from different force fields) can be quantified by the root-mean-square inner product (RMSIP) or the principal component similarity (PCS) [20]. A high overlap indicates conserved large-scale motions.

- Project onto a common basis: Take the eigenvectors from one simulation and use them to project the trajectory from another simulation. This directly tests if the conformational space sampled in one trajectory can be described by the essential subspace of another.
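The RMSIP mentioned above can be sketched in a few lines of NumPy. This assumes the commonly used definition (the square root of the summed squared inner products between two sets of n orthonormal eigenvectors, divided by n); the function name and toy subspaces are illustrative.

```python
import numpy as np

def rmsip(vecs_a, vecs_b):
    """Root-mean-square inner product between two sets of orthonormal
    eigenvectors stored as columns, each with shape (3N, n)."""
    n = vecs_a.shape[1]
    inner = vecs_a.T @ vecs_b  # n x n matrix of pairwise dot products
    return float(np.sqrt(np.sum(inner ** 2) / n))

# Toy subspaces in a 6-dimensional space
basis = np.eye(6)
subspace_a = basis[:, :3]
subspace_b = basis[:, 3:]

# Same subspace expressed in a rotated basis: overlap should stay at 1
rng = np.random.default_rng(3)
mixing = np.linalg.qr(rng.normal(size=(3, 3)))[0]
subspace_a_rotated = subspace_a @ mixing

same = rmsip(subspace_a, subspace_a_rotated)
disjoint = rmsip(subspace_a, subspace_b)
```

Because the measure depends only on the spanned subspace, it is insensitive to mode reordering or mixing within the essential subspace, which is why it is preferred over comparing individual eigenvectors one by one.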

Protocol 3: Visualizing the Essential Motions

Step 1: Visualizing Projections

- Create a projection plot: Plot the projection of the trajectory onto the first two or three principal components (e.g., PC1 vs. PC2). This scatter plot can reveal clusters of conformations and the major pathways of transition [19].

- Color by time or auxiliary variable: Coloring the points in the projection plot by simulation time or by a specific geometric parameter (e.g., radius of gyration) can provide mechanistic insights.

Step 2: Generating a "Porcupine Plot" for a Single Mode

- Visualize eigenvector displacements: The elements of an eigenvector are displacement vectors for each atom. A porcupine plot represents these displacements as arrows or lines emanating from the Cα atoms of the protein structure, showing the direction and magnitude of motion for that specific collective mode.
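The arrows of a porcupine plot can be derived from an eigenvector with a simple reshape. The sketch below assumes the eigenvector is ordered as (x1, y1, z1, x2, y2, z2, ...); the function name, the `scale` parameter, and the toy eigenvector are illustrative assumptions.

```python
import numpy as np

def porcupine_vectors(eigenvector, scale=1.0):
    """Reshape a (3N,) eigenvector into per-atom displacement arrows.

    Returns the (N, 3) arrows and their (N,) lengths."""
    arrows = np.asarray(eigenvector, dtype=float).reshape(-1, 3) * scale
    magnitudes = np.linalg.norm(arrows, axis=1)
    return arrows, magnitudes

# Toy eigenvector for 3 atoms: atom 1 moves along x, atom 2 along y, atom 3 is static
toy_mode = np.array([1.0, 0.0, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0, 0.0])
toy_mode /= np.linalg.norm(toy_mode)
arrows, magnitudes = porcupine_vectors(toy_mode)

# Arrow tips are obtained by adding the arrows to the mean atomic positions
mean_positions = np.zeros((3, 3))  # placeholder coordinates for this example
arrow_tips = mean_positions + arrows
```

The arrow start points (mean positions) and tips can then be passed to whichever molecular viewer is used to draw the plot.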

Step 3: Creating a Projected Trajectory Movie

- Generate the projected trajectory: Create a new trajectory that represents the motion along a single principal component.

- Visualize the movie: Use visualization software like NGLView or VMD to animate the proj_universe, showing the pure, large-amplitude motion described by the chosen principal component [19].
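The coordinates behind such a single-mode movie can be sketched in NumPy: each frame is rebuilt as the mean structure plus that frame's projection along one eigenvector. The function name and synthetic data are assumptions, and loading the result into a viewer is left out.

```python
import numpy as np

def single_mode_trajectory(coords, mode_index=0):
    """Rebuild a trajectory that contains only the motion along one PC.

    coords: (n_frames, 3N) aligned coordinates.
    Returns the (n_frames, N, 3) projected coordinates and the eigenvector."""
    mean = coords.mean(axis=0)
    centered = coords - mean
    cov = np.cov(centered, rowvar=False)
    evals, evecs = np.linalg.eigh(cov)
    order = np.argsort(evals)[::-1]
    pc = evecs[:, order[mode_index]]
    weights = centered @ pc                   # projection of each frame
    projected = mean + np.outer(weights, pc)  # mean structure + motion along pc
    return projected.reshape(len(coords), -1, 3), pc

rng = np.random.default_rng(4)
coords = rng.normal(size=(300, 12)) * np.linspace(3.0, 0.1, 12)  # 4 toy atoms
movie, pc1 = single_mode_trajectory(coords, mode_index=0)

# Every frame deviates from the mean structure only along pc1
deviation = movie.reshape(300, -1) - coords.mean(axis=0)
residual = deviation - np.outer(deviation @ pc1, pc1)
```

The array `movie` has one set of atomic coordinates per frame and could be written to a trajectory file or attached to a structure for animation.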

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for Essential Dynamics

| Tool / Resource | Type | Primary Function | Application Note |

|---|---|---|---|

| MDAnalysis (Python Library) | Software Library | Trajectory analysis, including PCA [19] | Highly flexible; allows for custom analysis scripts and integration into larger workflows. |

| GROMACS (gmx covar, gmx anaeig) | MD Simulation Suite | Built-in PCA tools for trajectory analysis. | High performance and widely used; integrated with simulation workflow. |

| Amber ff19SB | Molecular Force Field | Provides the potential energy function for MD simulations. | Recent force field optimized for accurate backbone and side-chain dynamics [20]. |

| CHARMM36 | Molecular Force Field | Provides the potential energy function for MD simulations. | Another widely used and accurate force field; good performance in essential dynamics comparisons [20]. |

| NGLView (Python Library) | Visualization | Interactive visualization of structures and trajectories in Jupyter notebooks [19]. | Ideal for quickly viewing projected trajectories and essential motions. |

| VMD | Visualization Software | Advanced visualization and movie making for molecular structures and dynamics. | Powerful for creating publication-quality figures and animations of essential motions. |

| CONCOORD | Algorithm | Generates ensemble of conformers based on distance constraints [18]. | Efficiently samples conformational space when MD simulation is computationally infeasible. |


Workflow and Signaling Diagrams

Workflow of an Essential Dynamics Analysis

The following diagram illustrates the end-to-end protocol for performing an Essential Dynamics analysis, from trajectory preparation to the final interpretation of results.

Conceptual Relationship Between FEL, PCA, and the Essential Subspace

This diagram maps the conceptual relationship between the Free Energy Landscape, the PCA transformation, and the resulting low-dimensional Essential Subspace where biological function is interpreted.

Application Notes: Case Studies in Drug Discovery Context

The analysis of the essential subspace is not merely an academic exercise; it provides critical insights for rational drug design. The following case studies illustrate its practical utility.

Case Study 1: Substrate Binding in Proteinase K. PCA revealed that the substrate-binding site of proteinase K is highly flexible, supporting an induced-fit or conformational selection mechanism for substrate binding. Furthermore, simulations showed that Ca²⁺ removal increases global flexibility but decreases local flexibility in the binding region, explaining the experimentally observed reduction in substrate affinity without a change in catalytic activity. This identifies global dynamics as a key regulator of function [18].

Case Study 2: Conformational Regulation of HIV-1 gp120. PCA was used to understand how mutations in the HIV-1 gp120 envelope glycoprotein affect its conformational dynamics, which is crucial for viral entry. Specific mutations (375 S/W and 423 I/P) were found to have distinct effects on molecular motions, predisposing the mutant structures toward either the CD4-bound or CD4-unbound state. This highlights how the essential subspace can be manipulated, offering a strategy for designing inhibitors that trap the protein in an inactive conformation [18].

Case Study 3: Force Field Selection for Drug Target Simulations. A comparative study of eight different force fields on the proteins ubiquitin and GB3 showed that while large-scale loop motions were often conserved across accurate force fields, finer details in the essential subspace differed [20]. For drug discovery projects targeting specific conformational states, this underscores the importance of selecting a force field (e.g., CHARMM22* or Amber ff99SB-ILDN) whose essential subspace aligns with experimental NMR data to ensure predictive accuracy.

Principal Component Analysis (PCA) serves as a foundational mathematical procedure in the analysis of Molecular Dynamics (MD) trajectories, where it is often termed Essential Dynamics (ED). This technique employs an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components [1] [21]. In the context of MD, the high-dimensional conformational space of a biopolymer is reduced to a minimal set of collective motions that describe the biologically relevant, large-scale movements of the structure [4] [22]. The power of PCA lies in its ability to systematically reduce the number of dimensions needed to describe protein dynamics through a decomposition process that filters observed motions from the largest to smallest spatial scales [4]. The application of PCA rests upon three critical mathematical assumptions: linearity of the underlying transformations, orthogonality of the derived components, and proper handling of the mean structure through data centering. These assumptions directly impact the interpretation of MD results and the validity of biological conclusions drawn from essential dynamics analysis.

Theoretical Foundations: The Mathematical Framework of PCA

The Linear Transformation Basis

PCA is fundamentally a linear transformation defined by a set of (l) (p)-dimensional weight vectors (w_{(k)} = (w_1, \dots, w_p)_{(k)}) that map each row vector (x_{(i)} = (x_1, \dots, x_p)_{(i)}) of the data matrix (X) to a new vector of principal component scores (t_{(i)} = (t_1, \dots, t_l)_{(i)}), given by:

[ t_{k(i)} = x_{(i)} \cdot w_{(k)} \quad \text{for} \quad i = 1, \dots, n \quad k = 1, \dots, l ]

In matrix form, this transformation is expressed as:

[ T = XW ]

where (W) is a (p)-by-(p) matrix whose columns are the eigenvectors of (X^TX) [1]. This linear mapping defines the core assumption of PCA: that the essential dynamics can be captured through linear combinations of the original variables. For protein dynamics, this means that the complex conformational changes are represented as linear displacements along the eigenvectors derived from the covariance matrix of atomic positions [4].

The Orthogonality Constraint

The orthogonality assumption in PCA dictates that principal components are mutually perpendicular axes. Mathematically, this is ensured because the eigenvectors of a real symmetric matrix (such as the covariance matrix) can always be chosen to be orthogonal [1] [21]. Each subsequent component is calculated to be uncorrelated with (perpendicular to) all previous components and accounts for the next highest variance in the data [13] [23]. This orthogonality constraint means that the collective motions described by different principal components are linearly uncorrelated modes of movement in the protein conformational space [4]. Uncorrelated does not imply statistically independent: nonlinear couplings between modes can remain.

Mean Structure and Data Centering

The role of the mean structure is critical in PCA implementation. Prior to analysis, the data must be centered by subtracting the mean of each variable from all observed values [1] [13] [21]. For MD trajectories, this involves aligning all frames to a reference structure and subtracting the mean atomic coordinates [24] [22]. The mean-centered data matrix (A) is constructed as (A = { X_a - \langle X_a \rangle }), where (X_a) represents the aligned coordinates [22]. This centering ensures that the principal components describe variations about the mean structure rather than absolute positions, which is essential for capturing the functionally relevant fluctuations in protein structures [4].

Table 1: Core Mathematical Assumptions of PCA in Essential Dynamics

| Assumption | Mathematical Foundation | Implication for MD Analysis |

|---|---|---|

| Linearity | New basis is a linear combination of original variables: (PX=Y) [21] | Protein motions represented as linear displacements from mean structure [4] |

| Orthogonality | Eigenvectors of covariance matrix are orthogonal: (w_i \cdot w_j = 0) for (i \neq j) [1] | Collective motions are mutually uncorrelated [4] |

| Mean-Centering | Data centered to zero mean: (x1 = x - \mu_x) [21] | Analysis captures fluctuations about average structure [24] |

Practical Implementation in Molecular Dynamics

Workflow for Essential Dynamics Analysis

The application of PCA to MD trajectories follows a standardized workflow that operationalizes the core assumptions. The process begins with trajectory preprocessing, followed by covariance matrix construction, eigen decomposition, and finally projection and analysis [4] [12] [24]. For protein systems, a coarse-grained approach is typically employed where (C_{\alpha}) atoms represent residue positions, reducing the (3N) dimensional conformational space (where (N) is the number of atoms) to a more manageable size while retaining the essential structural dynamics [4] [24]. The following diagram illustrates the complete workflow for Essential Dynamics Analysis:

Protocol: Essential Dynamics Analysis of Protein Trajectories

Objective: To extract large-scale collective motions from MD trajectories using PCA.

Materials and Software Requirements:

- MD trajectory files (DCD format) and corresponding PDB structure file

- Analysis software: ProDy [24], JEDi [22], Bio3D [12], or MDAnalysis/MDTraj [12]

- Computing environment with sufficient memory for covariance matrix calculation

Step-by-Step Procedure:

Trajectory Preprocessing and Alignment

- Parse the reference PDB structure: structure = parsePDB('mdm2.pdb') [24]

- Load the trajectory file: ensemble = parseDCD('mdm2.dcd') or dcd = DCDFile('mdm2.dcd') for large files [24]

- Select Cα atoms: ensemble.setAtoms(structure.calpha) [24]

- Superpose all frames to the reference structure to remove global rotational and translational motions: ensemble.superpose() [24]

Covariance Matrix Construction

- Construct the covariance matrix from the aligned coordinates using the (3N \times 3N) matrix, where N is the number of Cα atoms: [ Q = \frac{AA^T}{n-1} ] where (A) is the mean-centered coordinate matrix [22]

- Alternatively, use a correlation matrix if variables have significantly different variances [4]
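The difference between the covariance matrix (Q) and the correlation-matrix alternative can be sketched in NumPy as follows. The sketch assumes (A) is stored with one row per coordinate and one column per frame, so that (AA^T) has the stated dimensions; the function name and synthetic data are illustrative.

```python
import numpy as np

def covariance_and_correlation(A):
    """A: mean-centered coordinate matrix with shape (3N, n_frames).

    Returns Q = A A^T / (n - 1) and the correlation matrix obtained by
    dividing each element Q_ij by the standard deviations of variables i and j."""
    n = A.shape[1]
    Q = A @ A.T / (n - 1)
    std = np.sqrt(np.diag(Q))  # assumes no variable has zero variance
    R = Q / np.outer(std, std)
    return Q, R

rng = np.random.default_rng(5)
# Six coordinates with very different fluctuation amplitudes, 200 frames
scales = np.array([5.0, 3.0, 1.0, 0.5, 0.2, 0.1])
A = rng.normal(size=(6, 200)) * scales[:, None]
A -= A.mean(axis=1, keepdims=True)  # mean-center each coordinate

Q, R = covariance_and_correlation(A)
```

Diagonalizing `R` instead of `Q` gives every coordinate unit variance, so highly mobile regions no longer dominate the leading modes; whether that is desirable depends on the question being asked.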

Eigen Decomposition and Mode Calculation

Mode Selection and Validation

Trajectory Projection and Analysis

- Project the aligned trajectory onto principal components: [ t_{k(i)} = x_{(i)} \cdot w_{(k)} ] where (x_{(i)}) is the coordinate vector of frame i and (w_{(k)}) is the kth eigenvector [1]

- Analyze projections using clustering methods (k-means, hierarchical) to identify conformational states [12]

- Visualize essential motions using PyMol scripts or Normal Mode Wizard [24] [22]

Troubleshooting Notes:

- If rare events dominate the first few modes, consider applying outlier detection methods [22]

- For large proteins, use hierarchical PCA approaches to maintain spatial resolution [22]

- If sampling is inadequate (assessed via MSA/KMO statistics), extend simulation time or combine multiple trajectories [22]

Research Reagent Solutions

Table 2: Essential Software Tools for PCA in MD Analysis

| Tool/Software | Application Context | Key Functionality |

|---|---|---|

| ProDy [24] | Standard essential dynamics analysis | Covariance matrix calculation, mode extraction, trajectory projection |

| JEDi [22] | Advanced statistical analysis of ED | Multiple PCA variants, hierarchical PCA, subspace comparisons |

| Bio3D (R) [12] | Principal component analysis of MD | PCA, clustering, visualization of trajectories |

| MDAnalysis/MDTraj [12] | Trajectory preprocessing and analysis | Atom selection, trajectory slicing, RMSD calculations |

| PyMol [22] | Visualization of essential motions | Script generation for visualizing PCA mode shapes |

Validation and Significance Assessment

Statistical Validation of PCA Results

The validity of PCA results depends heavily on adequate sampling and statistical significance. Several metrics should be employed to assess result reliability:

Sampling Adequacy: Calculate the Measure of Sampling Adequacy (MSA) for each variable and the Kaiser-Meyer-Olkin (KMO) statistic to ensure sufficient data for stable covariance estimation [22].

Mode Stability: Compare essential subspaces from independent trajectories using overlap metrics:

High overlap values (+1 along diagonal) indicate reproducible modes [24].

Variance Distribution: Assess the scree plot for a visible "kink" (Cattell criterion) that separates essential motions from noise [4].

Limitations and Alternative Approaches

While powerful, PCA has limitations that researchers must acknowledge:

Linearity Constraint: PCA cannot capture nonlinear relationships in conformational dynamics. For systems with significant nonlinear motions, consider kernel PCA [4] [22] or other manifold learning techniques.

Orthogonality Artifacts: Biologically relevant motions may not be orthogonal, potentially splitting functionally coupled motions across multiple components [4].

Sampling Sensitivity: PCA results can be skewed by insufficient sampling or rare events. Robust PCA variants with shrinkage estimation or outlier detection should be employed for small datasets [4] [22].

Table 3: Validation Metrics for Essential Dynamics

| Validation Metric | Calculation Method | Interpretation |

|---|---|---|

| Sampling Adequacy | MSA/KMO statistics [22] | Values >0.5 indicate adequate sampling |

| Mode Overlap | Subspace comparisons between independent trajectories [24] | High overlap (>0.8) indicates robust modes |

| Variance Explained | Cumulative fraction of total variance [24] | Top 10-20 modes typically capture essential dynamics |

| Collectivity | Degree of uniform participation of atoms [4] | High collectivity indicates global motions |
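As an illustration of the collectivity metric in the table above, the sketch below uses one widely used definition: the exponential of the information entropy of the normalized per-atom squared displacements, divided by the number of atoms. This definition is an assumption here, since the text does not specify a formula, and the function name and toy modes are illustrative.

```python
import numpy as np

def collectivity(eigenvector):
    """Collectivity of a mode with 3N components ordered (x1, y1, z1, ...).

    Returns a value between 1/N (a single atom moves) and 1 (all atoms
    move with equal amplitude)."""
    arrows = np.asarray(eigenvector, dtype=float).reshape(-1, 3)
    squared = np.sum(arrows ** 2, axis=1)
    weights = squared / squared.sum()  # fraction of the motion on each atom
    nonzero = weights[weights > 0]     # 0 * log(0) is treated as 0
    entropy = -np.sum(nonzero * np.log(nonzero))
    return float(np.exp(entropy) / len(weights))

# Toy modes for 4 atoms
global_mode = np.tile([1.0, 0.0, 0.0], 4)  # every atom moves equally
local_mode = np.zeros(12)
local_mode[:3] = [0.0, 1.0, 0.0]           # only the first atom moves

kappa_global = collectivity(global_mode)
kappa_local = collectivity(local_mode)
```

For these toy modes the collectivity is 1 for the global mode and 1/4 for the single-atom mode, the two extremes of the scale.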

Advanced Applications and Protocol Variations

Hierarchical and Specialized PCA Approaches

For complex systems, standard PCA may be extended through several advanced protocols:

Hierarchical PCA Protocol:

- Perform local PCA on individual residues to obtain eigenresidues

- Apply PCA on the eigenresidues to capture large-scale motions

- This approach maintains high spatial resolution while identifying global dynamics [22]

Distance-based PCA Protocol:

- Calculate pairwise distances between atoms instead of Cartesian coordinates

- Construct covariance matrix from distance vectors

- Perform standard eigen decomposition

- This approach is alignment-free and rotationally invariant [22]
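The distance-based protocol above can be sketched with NumPy as follows. The function names and the synthetic trajectory are assumptions for illustration. The example also applies a random rotation and translation to each frame to show that the distance features, and hence the PCA, do not depend on alignment.

```python
import numpy as np

def pairwise_distance_features(traj):
    """traj: (n_frames, N, 3). Returns (n_frames, N*(N-1)/2) pair distances."""
    i, j = np.triu_indices(traj.shape[1], k=1)
    return np.linalg.norm(traj[:, i, :] - traj[:, j, :], axis=2)

def distance_pca(traj):
    """PCA on the covariance matrix of pairwise distances."""
    features = pairwise_distance_features(traj)
    centered = features - features.mean(axis=0)
    cov = centered.T @ centered / (len(features) - 1)
    evals, evecs = np.linalg.eigh(cov)
    order = np.argsort(evals)[::-1]
    return evals[order], evecs[:, order], centered @ evecs[:, order]

def random_rotation(rng):
    """Random proper rotation matrix from a QR decomposition."""
    q = np.linalg.qr(rng.normal(size=(3, 3)))[0]
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

rng = np.random.default_rng(6)
traj = rng.normal(size=(50, 5, 3))  # 50 frames, 5 toy atoms
# Tumble every frame independently; internal distances are unchanged
tumbled = np.array([f @ random_rotation(rng).T + rng.normal(size=3) for f in traj])

evals, evecs, scores = distance_pca(traj)
evals_tumbled = distance_pca(tumbled)[0]
```

The price of this invariance is that the number of features grows quadratically with the number of atoms, so a reduced atom selection is usually needed.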

Multiple Trajectory Analysis Protocol:

- Parse multiple trajectory files: trajectory = Trajectory('mdm2.dcd') followed by trajectory.addFile('mdm2sim2.dcd') [24]

- Build combined covariance matrix across all trajectories

- Project individual trajectories onto common essential subspace

- Compare conformational sampling across different conditions [24]

Kernel PCA for Nonlinear Dynamics

When protein motions exhibit significant nonlinearity, kernel PCA can be employed:

- Kernel Selection: Choose an appropriate kernel function (e.g., radial basis function, polynomial)

- Kernel Matrix Computation: Calculate the kernel matrix from the original coordinates

- Centering: Center the kernel matrix in the feature space

- Eigen Decomposition: Perform eigen decomposition of the centered kernel matrix

- Projection: Project data onto the principal components in the feature space [4] [22]

This approach can capture nonlinear correlations while maintaining the dimensionality reduction benefits of standard PCA, though results may be more challenging to interpret geometrically [4].
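The five kernel PCA steps listed above can be sketched in plain NumPy. The radial basis function kernel, the `gamma` value, and the random input data are assumptions chosen for illustration; in practice the rows of `X` would be aligned coordinate vectors or other per-frame features.

```python
import numpy as np

def rbf_kernel_pca(X, gamma, n_components=2):
    """Kernel PCA with an RBF kernel k(x, y) = exp(-gamma * |x - y|^2).

    X: (n_samples, n_features). Returns the projections of the samples,
    the leading eigenvalues, and the centered kernel matrix."""
    # Kernel matrix from pairwise squared Euclidean distances
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    K = np.exp(-gamma * np.maximum(sq_dists, 0.0))
    # Centering in feature space
    n = K.shape[0]
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    # Eigendecomposition of the centered kernel matrix
    evals, evecs = np.linalg.eigh(K_centered)
    order = np.argsort(evals)[::-1][:n_components]
    evals, evecs = evals[order], evecs[:, order]
    # Projection of each sample onto component k is sqrt(lambda_k) * alpha_k
    scores = evecs * np.sqrt(np.maximum(evals, 0.0))
    return scores, evals, K_centered

rng = np.random.default_rng(7)
X = rng.normal(size=(40, 6))
scores, evals, K_centered = rbf_kernel_pca(X, gamma=0.1, n_components=2)
```

Note that the kernel matrix is (frames × frames), so memory scales with the square of the trajectory length; subsampling frames is a common workaround.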

The application of Principal Component Analysis to molecular dynamics trajectories provides a powerful framework for extracting essential biological motions from complex simulation data. The core assumptions of linearity, orthogonality, and proper mean centering form the mathematical foundation that enables dimensional reduction and interpretation of large-scale conformational changes. By following the standardized protocols outlined in this document—from trajectory preprocessing and covariance matrix construction through mode validation and advanced analysis—researchers can reliably identify the collective motions governing protein function. The continued development of specialized tools like JEDi and ProDy, coupled with robust statistical validation methods, ensures that Essential Dynamics analysis remains a cornerstone technique in computational biophysics and drug discovery, providing critical insights into the relationship between protein dynamics and biological function.

A Practical Pipeline: Implementing PCA on Your MD Trajectory from Start to Finish

Within the framework of principal component analysis (PCA) for essential dynamics in molecular dynamics (MD) research, the pre-processing of trajectories is a critical foundational step. The accuracy and interpretability of the subsequent essential dynamics analysis are contingent upon the proper preparation of the trajectory data. This protocol details the core pre-processing procedures of trajectory alignment, atom selection, and mean-centering, which are essential for eliminating extraneous motions and isolating the internal, biologically relevant conformational changes of biomolecules, such as proteins. These steps ensure that the PCA captures the essential modes of motion related to function, rather than artifacts of the simulation. The methodologies outlined herein are designed for researchers, scientists, and drug development professionals aiming to employ essential dynamics to study protein flexibility, ligand binding, and allosteric mechanisms [25] [26].

Core Concepts and Definitions

The following table defines the key concepts and explains their critical role in PCA for essential dynamics.

Table 1: Core Pre-processing Concepts for Essential Dynamics

| Concept | Definition | Role in PCA for Essential Dynamics |

|---|---|---|

| Trajectory Alignment | The process of spatially superimposing each frame of an MD trajectory onto a reference structure (e.g., the initial frame or an average structure) by minimizing the Root Mean Square Deviation (RMSD) of selected atomic coordinates [27]. | Removes global translational and rotational motions, which are not of interest in essential dynamics. This isolates the internal conformational fluctuations, allowing PCA to focus on the collective motions that define the protein's intrinsic dynamics [25]. |

| Atom Selection | The strategy of choosing a specific subset of atoms from the biomolecular system for analysis. Common selections include the protein backbone (Cα, C, N) or all Cα atoms [27] [26]. | Reduces the dimensionality and noise in the data. The backbone atoms often capture the large-scale, collective motions of the protein. Using a consistent set of atoms across all frames ensures a meaningful covariance matrix is built for diagonalization [25] [26]. |

| Mean-Centering | The procedure of subtracting the average coordinates (the "mean structure") of the selected atoms from the coordinates in every frame of the aligned trajectory [26]. | Shifts the origin of the conformational space to the mean structure. The PCA is then performed on the fluctuations around this mean, ensuring that the resulting eigenvectors (principal components) describe the directions of maximum variance in the deviations from the average [26]. |

Experimental Protocols

Workflow for Trajectory Pre-processing

The following diagram illustrates the logical sequence of the core pre-processing steps for preparing an MD trajectory for Principal Component Analysis.

Protocol 1: Trajectory Alignment using MDAnalysis

This protocol uses the MDAnalysis Python library to align a trajectory to a reference structure based on a selected group of atoms [27].

Objective: To remove global rotation and translation from an MD trajectory by fitting each frame to a reference structure.

Procedure:

- Load the trajectory and reference: Create Universe objects for the trajectory and the reference structure (e.g., the first frame or a crystal structure).

- Select atoms for fitting: Choose a group of atoms for the RMSD fitting. The protein backbone is a common and robust choice.

- Run the alignment: Use the AlignTraj class to efficiently align the entire trajectory and write the output to a new file.

Technical Notes: The alignto() function can be used for aligning single frames. For systems with structural homologs, a dictionary mapping atoms based on a sequence alignment can be provided to the select parameter [27].
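A minimal sketch of this procedure with MDAnalysis might look like the following. The wrapper function align_trajectory, its default output name, and the choice of the first frame as reference are illustrative assumptions. The topology and trajectory paths are placeholders supplied by the caller.

```python
def align_trajectory(topology, trajectory, out_file="aligned.dcd", selection="backbone"):
    """Fit every frame of a trajectory to its first frame and write the result.

    topology / trajectory: paths to the user's own files (placeholders here).
    """
    # Imported inside the function so the sketch can be loaded without MDAnalysis installed
    import MDAnalysis as mda
    from MDAnalysis.analysis import align

    # Load the trajectory and a second Universe that serves as the reference
    mobile = mda.Universe(topology, trajectory)
    reference = mda.Universe(topology, trajectory)
    reference.trajectory[0]          # position the reference on the first frame

    # RMSD-fit each frame on the selected atoms and write the aligned trajectory
    aligner = align.AlignTraj(mobile, reference, select=selection, filename=out_file)
    aligner.run()
    return out_file
```

A call such as align_trajectory("protein.pdb", "md.dcd") would then produce aligned.dcd for the PCA step. Both file names are examples only.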

Protocol 2: Atom Selection and Mean-Centering for PCA

This protocol details the selection of atoms and the mean-centering process, which are integral steps within the PCA procedure itself in MDAnalysis [26].

Objective: To prepare the aligned trajectory for covariance matrix construction by selecting relevant atoms and centering the data.

Procedure:

- Load the aligned trajectory.

- Initialize the PCA class with selection and centering: The PCA class in MDAnalysis automatically handles atom selection and mean-centering. The align=False parameter is used because the trajectory is already aligned.

- Internal Process: During the run() method, the PCA class:

  - Selects atoms: Extracts the coordinates of the specified atom selection ('backbone') from every frame.

  - Calculates the mean structure: Computes the average 3D coordinates of every selected atom across all frames.

  - Mean-centers the data: Subtracts this average structure from the coordinates in each frame to create a matrix of fluctuations.

Technical Notes: The mean structure used for centering is calculated from the selected trajectory frames. For advanced users, a custom mean structure can be supplied via the mean parameter [26].
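The protocol could be sketched as below. The helper name run_backbone_pca and the default of three components are assumptions made for illustration. The results.cumulated_variance attribute follows the MDAnalysis 2.x layout, and older releases expose the same data directly on the PCA object.

```python
def run_backbone_pca(topology, aligned_trajectory, n_components=3):
    """Run PCA on the backbone atoms of an already aligned trajectory.

    Returns the cumulative variance fractions and the per-frame projections.
    """
    # Imported inside the function so the sketch can be loaded without MDAnalysis installed
    import MDAnalysis as mda
    from MDAnalysis.analysis import pca

    u = mda.Universe(topology, aligned_trajectory)

    # align=False because the frames were fitted in Protocol 1;
    # atom selection and mean-centering happen inside run()
    pc = pca.PCA(u, select="backbone", align=False, n_components=n_components).run()

    # Project each frame onto the leading components (shape: n_frames x n_components)
    backbone = u.select_atoms("backbone")
    projection = pc.transform(backbone, n_components=n_components)
    return pc.results.cumulated_variance, projection
```

The atom group passed to transform() should match the selection used to build the PCA, otherwise the coordinate dimensions will not agree with the stored components.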

The Scientist's Toolkit

Table 2: Essential Research Reagents and Software Solutions

| Item | Function in Pre-processing | Example / Specification |

|---|---|---|

| MDAnalysis Library | A Python toolkit for analyzing MD trajectories. It provides high-level functions for trajectory alignment (align), atom selection (select_atoms), and PCA [27] [26]. | align.alignto(), align.AlignTraj, analysis.pca.PCA |

| GROMACS trjconv | A powerful command-line utility within the GROMACS package for processing trajectories. It can be used for trajectory alignment, centering in the box, and making molecules whole across periodic boundaries [28]. | gmx trjconv -fit rot+trans |

| Backbone Atom Selection | A standard selection string to choose atoms that define the protein's backbone scaffold. This effectively reduces the number of degrees of freedom while capturing major structural motions [27] [26]. | Selection string: "backbone" (in MDAnalysis this keyword also includes the carbonyl O) or "name CA C N" |

| Cα Atoms Only | A more drastic reduction, using only the Cα atoms to represent each amino acid. This is common in very large systems or when focusing exclusively on large-scale backbone dynamics [25]. | Selection string: "name CA" |

| Fast QCP Algorithm | The underlying algorithm used by MDAnalysis to rapidly compute the RMSD and the optimal rotation matrix for alignment [27]. | Implemented in MDAnalysis.lib.qcprot |


Data Presentation and Analysis

Table 3: Quantitative Impact of Pre-processing on a Model System

This table presents hypothetical data illustrating how each pre-processing step improves the quality of the PCA on a 100 ns simulation of a globular protein (e.g., Cytochrome c [25]). The variance captured by the first principal component (PC1) is used as a metric for effective motion capture.

| Pre-processing Step | Total System Atoms | Atoms for PCA | RMSD to Reference (Å) [Mean ± SD] | Variance Captured by PC1 (%) | Key Observation |

|---|---|---|---|---|---|

| Raw Trajectory | ~20,000 | ~20,000 | 28.2 ± 5.1 [27] | < 10% | Dominated by global rotation/translation; covariance matrix is noisy. |

| After Alignment | ~20,000 | ~20,000 | 6.8 ± 1.5 [27] | ~25% | Internal motions are now visible; variance is more biologically meaningful. |

| Alignment + Cα Selection | ~20,000 | ~100 (Cα only) | 6.8 ± 1.5 (on Cα) | ~40% | Dimensionality reduced; signal from collective backbone motion is enhanced. |

| Full Pre-processing | ~20,000 | ~300 (Backbone) | 6.8 ± 1.5 (on backbone) | ~35% | Optimal balance, capturing concerted motion of the entire backbone scaffold. |

Principal Component Analysis (PCA) is a powerful statistical technique for simplifying complex, high-dimensional datasets by identifying their most important patterns of motion or variation. In the context of molecular dynamics (MD) simulations, this application is often termed Essential Dynamics (ED) [4]. When applied to MD trajectories, PCA systematically reduces the number of dimensions needed to describe protein dynamics through a decomposition process that filters observed motions from the largest to smallest spatial scales [4]. The method identifies new, uncorrelated variables (the principal components) that are linear combinations of the original atomic coordinates and that capture the highest variance in the data [13]. To obtain them, the covariance matrix constructed from the atomic coordinates is diagonalized to yield a set of orthogonal collective modes (eigenvectors), each with a corresponding eigenvalue that quantifies the portion of total motion variance it explains [4]. In practice, researchers have found that only a few of the largest principal components are needed to describe the dominant motion of the system, providing a large dimensionality reduction, often from thousands of degrees of freedom to just a handful of biologically relevant collective motions [29].
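As a rough illustration of this decomposition, the sketch below diagonalizes the covariance matrix of a synthetic "trajectory" that contains one dominant collective motion plus small random noise. The function name essential_dynamics and all numerical settings are assumptions chosen for the example and do not come from a real simulation.

```python
import numpy as np

def essential_dynamics(coords):
    """Diagonalize the covariance matrix of aligned coordinates.

    coords: array of shape (n_frames, 3N).
    Returns eigenvalues (descending), eigenvectors (as columns), and variance fractions.
    """
    fluct = coords - coords.mean(axis=0)                 # fluctuations around the mean
    cov = fluct.T @ fluct / (coords.shape[0] - 1)        # 3N x 3N covariance matrix
    evals, evecs = np.linalg.eigh(cov)                   # ascending eigenvalues
    order = np.argsort(evals)[::-1]                      # reorder: largest variance first
    evals, evecs = evals[order], evecs[:, order]
    explained = evals / evals.sum()                      # fraction of total variance per mode
    return evals, evecs, explained

# Synthetic data: one large-amplitude collective direction plus isotropic noise
rng = np.random.default_rng(1)
n_frames, n_coords = 500, 30
direction = rng.normal(size=n_coords)
direction /= np.linalg.norm(direction)
amplitude = 5.0 * rng.normal(size=(n_frames, 1))
coords = amplitude * direction + 0.2 * rng.normal(size=(n_frames, n_coords))

evals, evecs, explained = essential_dynamics(coords)
```

In this constructed case the first eigenvector recovers the planted direction and carries most of the variance. Real trajectories usually spread the variance over several leading modes.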

Theoretical Foundation and Mathematical Framework

Core Mathematical Principles

PCA is fundamentally an orthogonal linear transformation that maps the data to a new coordinate system such that the greatest variance by some scalar projection of the data comes to lie on the first coordinate (called the first principal component), the second greatest variance on the second coordinate, and so on [1]. Mathematically, for a data matrix \(\mathbf{X}\) with n observations and p variables (typically the 3N Cartesian coordinates of N atoms), the transformation is defined by a set of p-dimensional vectors of weights or loadings \(\mathbf{w}_{(k)} = (w_1, w_2, \ldots, w_p)_{(k)}\) that map each row vector \(\mathbf{x}_{(i)}\) of \(\mathbf{X}\) to a new vector of principal component scores \(\mathbf{t}_{(i)} = (t_1, t_2, \ldots, t_l)_{(i)}\), given by \(t_{k(i)} = \mathbf{x}_{(i)} \cdot \mathbf{w}_{(k)}\), in such a way that the individual variables of \(\mathbf{t}\) successively inherit the maximum possible variance from \(\mathbf{X}\) [1].

The first weight vector \(\mathbf{w}_{(1)}\) thus must satisfy:

\[ \mathbf{w}_{(1)} = \arg\max_{\Vert \mathbf{w} \Vert = 1} \left\{ \sum_i (t_1)_{(i)}^2 \right\} = \arg\max_{\Vert \mathbf{w} \Vert = 1} \left\{ \sum_i \left( \mathbf{x}_{(i)} \cdot \mathbf{w} \right)^2 \right\} \]

Equivalently, in matrix form:

\[ \mathbf{w}_{(1)} = \arg\max_{\Vert \mathbf{w} \Vert = 1} \left\{ \Vert \mathbf{Xw} \Vert^2 \right\} = \arg\max \left\{ \frac{\mathbf{w}^{\mathsf{T}}\mathbf{X}^{\mathsf{T}}\mathbf{Xw}}{\mathbf{w}^{\mathsf{T}}\mathbf{w}} \right\} \]

This is a Rayleigh quotient, whose maximum possible value is the largest eigenvalue of the matrix \(\mathbf{X}^{\mathsf{T}}\mathbf{X}\), which occurs when \(\mathbf{w}\) is the corresponding eigenvector [1].
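The Rayleigh quotient statement can be checked numerically. The sketch below uses random, mean-centered data as an assumed stand-in for a coordinate matrix and compares the quotient at the top eigenvector with its value at many random trial vectors. The helper name rayleigh_quotient is introduced only for this example.

```python
import numpy as np

rng = np.random.default_rng(2)
# Random data with unequal spread per column, then mean-centered
X = rng.normal(size=(100, 5)) * np.array([3.0, 2.0, 1.0, 0.5, 0.1])
X = X - X.mean(axis=0)

def rayleigh_quotient(w, X):
    """Return (w^T X^T X w) / (w^T w) for a weight vector w."""
    Xw = X @ w
    return (Xw @ Xw) / (w @ w)

# Largest eigenpair of X^T X (eigh returns eigenvalues in ascending order)
evals, evecs = np.linalg.eigh(X.T @ X)
lambda_max, w1 = evals[-1], evecs[:, -1]

# Evaluate the quotient for 1000 random directions
trial_vectors = rng.normal(size=(1000, 5))
trial_values = np.array([rayleigh_quotient(w, X) for w in trial_vectors])
```

The quotient evaluated at w1 equals lambda_max, and none of the trial directions exceeds it, consistent with the first principal component being the direction of maximum variance.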

Geometric Interpretation

Geometrically, PCA can be thought of as fitting a p-dimensional ellipsoid to the data, where each axis of the ellipsoid represents a principal component [1]. The principal components are then the axes of that ellipsoid. The magnitude of each axis is proportional to the variance of the data when projected onto that axis. If some axis of the ellipsoid is small, then the variance along that axis is also small [1]. In molecular terms, this means that the first few principal components often describe the large-scale collective motions essential to biological function, while the remaining components describe smaller-scale, localized motions that may be less functionally relevant.

Experimental Setup and Preprocessing

System Preparation and Trajectory Alignment

Before performing PCA, the MD trajectory must be properly prepared to eliminate global translations and rotations that could artificially inflate the variance [29]. This is typically achieved through a process called structural alignment, where each frame of the trajectory is optimally superimposed onto a reference structure (often the first frame or the average structure) by minimizing the root-mean-square deviation (RMSD) of selected atoms.
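To make the superposition step concrete, here is a small NumPy sketch of least-squares fitting for a single frame. It uses the SVD-based Kabsch algorithm, which is an assumption of this example and not necessarily the routine used by any particular analysis package. The function name kabsch_fit and the test coordinates are hypothetical.

```python
import numpy as np

def kabsch_fit(mobile, reference):
    """Superimpose mobile (N, 3) onto reference (N, 3) by minimizing RMSD.

    Returns the fitted coordinates and the RMSD after fitting.
    """
    # Remove translation by centering both structures on their centroids
    mob_c = mobile - mobile.mean(axis=0)
    ref_c = reference - reference.mean(axis=0)

    # Optimal rotation from the SVD of the 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(mob_c.T @ ref_c)
    d = np.sign(np.linalg.det(Vt.T @ U.T))      # guard against improper rotations
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Rotate the mobile structure and move it onto the reference centroid
    fitted = mob_c @ R.T + reference.mean(axis=0)
    rmsd = np.sqrt(np.mean(np.sum((fitted - reference) ** 2, axis=1)))
    return fitted, rmsd

# Test case: the same structure after an arbitrary rotation and translation
rng = np.random.default_rng(3)
reference = rng.normal(size=(20, 3))
theta = 0.7
Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
               [np.sin(theta),  np.cos(theta), 0.0],
               [0.0,            0.0,           1.0]])
mobile = reference @ Rz.T + np.array([5.0, -3.0, 2.0])

fitted, rmsd = kabsch_fit(mobile, reference)
```

Applying the same fit to every frame removes the rigid-body component, so that the variance entering the covariance matrix comes from internal motion only.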

Table 1: Critical Preprocessing Steps for PCA of MD Trajectories

| Step | Description | Purpose | Common Tools/Commands |

|---|---|---|---|

| Trajectory Loading | Reading coordinate data from trajectory files | Input data for analysis | MDAnalysis.Universe(), pytraj.iterload() [19] [29] |

| Atom Selection | Choosing relevant atoms (e.g., backbone, Cα) | Focus on biologically relevant motions; reduce computational cost | select='backbone', mask=":860-898@O3',C3',C4',C5',O5',P" [19] [29] |

| Structural Alignment | Superimposing frames to reference | Remove global translations/rotations | align.AlignTraj() in MDAnalysis; autoimage() (periodic re-imaging) followed by superpose() in pytraj [19] [29] |

| Coordinate Centering | Subtracting mean coordinates | Focus on fluctuations around mean structure | Implicit in covariance calculation [4] |