Comparative Analysis of Molecular Dynamics Integration Algorithms: From Foundational Principles to Advanced Applications in Drug Discovery


Abstract

This article provides a comprehensive examination of molecular dynamics (MD) integration algorithms, exploring their foundational principles, methodological applications, optimization strategies, and validation frameworks. Tailored for researchers and drug development professionals, it synthesizes current technological advancements including quantum-AI integration, machine learning enhancement, and multi-omics data fusion. Through systematic comparison of classical, statistical, and deep learning-based approaches, we establish practical guidelines for algorithm selection based on dataset characteristics and computational requirements. The analysis addresses critical challenges in force field accuracy, computational scalability, and clinical translation while highlighting emerging opportunities in personalized cancer therapy and accelerated drug screening.

Understanding Molecular Dynamics Integration: Core Principles and Technological Evolution in Biomedical Research

Defining Molecular Dynamics Integration Algorithms in Computational Biology

Molecular dynamics (MD) simulations stand as a cornerstone technique in computational biology, enabling the exploration of biomolecular systems' structural and dynamic properties at an atomic level. The core of any MD simulation is its integration algorithm, a mathematical procedure that solves Newton's equations of motion to predict the trajectory of a system over time. The precise definition and implementation of these algorithms directly govern the simulation's numerical stability, computational efficiency, and physical accuracy. This guide provides a comparative analysis of prominent MD integration algorithms, framing them within the broader context of a rapidly evolving field where traditional physics-based simulations are increasingly integrated with, and enhanced by, artificial intelligence (AI)-driven approaches [1]. As the complexity of biological questions increases—particularly for challenging systems like Intrinsically Disordered Proteins (IDPs)—the limitations of conventional MD have become more apparent, spurring the development of innovative hybrid methodologies that leverage the strengths of multiple computational paradigms [2] [1].

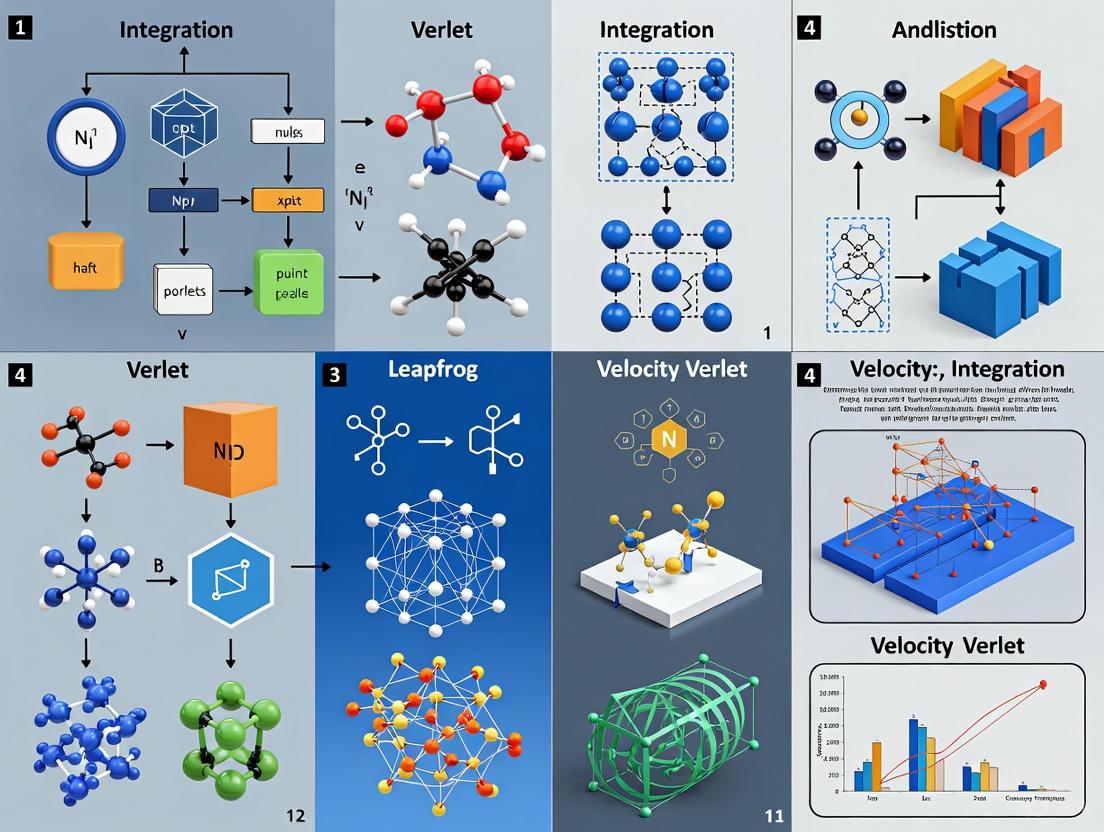

Comparative Analysis of MD Integration Algorithms

The following table summarizes the core characteristics, performance metrics, and ideal use cases for a selection of foundational and advanced MD integration algorithms.

Table 1: Performance Comparison of Key MD Integration Algorithms

| Algorithm | Theoretical Basis | Computational Efficiency | Numerical Stability | Key Advantages | Primary Limitations |
|---|---|---|---|---|---|
| Leapfrog Verlet | Second-order Taylor expansion; splits position and velocity updates. | High (one force evaluation per step). | Good for well-behaved biomolecular systems. | Time-reversible; symplectic (conserves energy well); simple to implement. | Lower accuracy for complex forces or large time steps. |
| Velocity Verlet | Updates positions and velocities within the same step, using the forces at the start and end of the step. | High, comparable to Leapfrog (one force evaluation per step). | Good. | Time-reversible and symplectic; trajectories equivalent to Leapfrog; positions, velocities, and accelerations are synchronized at the same time point. | Slightly more complex implementation than Leapfrog. |
| Beeman's Algorithm | Uses accelerations from the current and previous steps to improve the velocity update. | Moderate. | Good. | Same positions as Verlet but more accurate velocities, which improves energy conservation. | Slightly more expensive per step; must store previous-step accelerations; less commonly used in modern software. |
| Gaussian Accelerated MD (GaMD) | Adds a harmonic boost potential to smooth the energy landscape. | Lower than standard MD due to added complexity. | Good when properly calibrated. | Enhances conformational sampling of rare events; no need for predefined reaction coordinates. | Requires careful parameter tuning to avoid distorting the underlying energy landscape. |
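The sketch below makes the Verlet-family entries in the table concrete. It integrates a one-dimensional harmonic oscillator with velocity Verlet and checks two properties the table attributes to these integrators: a bounded energy error and time reversibility. The oscillator, the reduced units, and the function names are illustrative assumptions and do not come from any MD package.

```python
def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Integrate one particle in 1D with velocity Verlet; returns the final (x, v)."""
    a = force(x) / mass
    for _ in range(n_steps):
        x = x + v * dt + 0.5 * a * dt * dt  # advance positions with the current force
        a_new = force(x) / mass             # the single force evaluation of this step
        v = v + 0.5 * (a + a_new) * dt      # update velocities with the averaged force
        a = a_new
    return x, v


# Toy system (assumption): harmonic oscillator, k = m = 1 in reduced units
k, m, dt = 1.0, 1.0, 0.05


def force(x):
    return -k * x


def energy(x, v):
    return 0.5 * m * v * v + 0.5 * k * x * x


x0, v0 = 1.0, 0.0
x1, v1 = velocity_verlet(x0, v0, force, m, dt, 10_000)
rel_error = abs(energy(x1, v1) - energy(x0, v0)) / energy(x0, v0)
print(f"relative energy error after 10,000 steps: {rel_error:.1e}")

# Time reversibility: flip the velocity, integrate the same number of steps, recover x0
xb, vb = velocity_verlet(x1, -v1, force, m, dt, 10_000)
print(f"position error after reversal: {abs(xb - x0):.1e}")
```

The energy error stays bounded rather than drifting, because a symplectic integrator conserves a nearby "shadow" energy. Reversing the velocity retraces the trajectory up to floating-point round-off.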

The Emergence of AI-Enhanced Sampling Methods

Driven by the need to sample larger and more complex conformational spaces, deep learning (DL) methods have emerged as a transformative alternative to traditional MD for specific applications. These AI-based approaches leverage large-scale datasets to learn complex, non-linear, sequence-to-structure relationships, allowing for the modeling of conformational ensembles without the direct computational cost of solving physics-based equations [1].

A 2023 study on the Hepatitis C virus core protein (HCVcp) provided a direct comparison of several neural network-based de novo modeling tools, which can be viewed as a form of initial structure generation that bypasses traditional MD-based folding. The study evaluated AlphaFold2 (AF2), Robetta-RoseTTAFold (Robetta), and transform-restrained Rosetta (trRosetta) [2].

Table 2: Performance of AI-Based Structure Prediction Tools from a Comparative Study

| Tool | Prediction Type | Reported Performance (HCVcp Study) | Key Methodology |
|---|---|---|---|
| AlphaFold2 (AF2) | De novo (template-free) | Outperformed by Robetta and trRosetta in this specific case. | Neural network trained on PDB structures; uses attention mechanisms. |
| Robetta-RoseTTAFold | De novo (template-free) | Outperformed AF2 in initial prediction quality. | Three-track neural network considering sequence, distance, and coordinates. |
| trRosetta | De novo (template-free) | Outperformed AF2 in initial prediction quality. | Predicts inter-residue distances and orientations as restraints for energy minimization. |
| Molecular Operating Environment (MOE) | Template-based (Homology Modeling) | Outperformed I-TASSER in template-based modeling. | Identifies templates via BLAST; constructs models through domain-based homology modeling. |

The study concluded that for the initial prediction of protein modeling, Robetta and trRosetta outperformed AF2 in this specific instance. However, it also highlighted that predicted structures often require refinement to achieve reliable structural models, for which MD simulation remains a promising tool [2]. This illustrates a key synergy: AI can generate plausible starting conformations, while MD provides the framework for refining and validating these structures under realistic thermodynamic conditions.

Experimental Protocols for Method Evaluation

To ensure a fair and reproducible comparison between different MD integration algorithms or between MD and AI methods, standardized experimental protocols are essential. Below is a detailed methodology adapted from recent comparative literature.

Protocol for Comparative Analysis of MD Integration Algorithms

- System Preparation: Select a well-characterized model system, such as a small globular protein (e.g., BPTI) or a short peptide. Place the system in a cubic water box with explicit solvent molecules and add ions to neutralize the system's charge.

- Energy Minimization: Use the steepest descent algorithm to remove any steric clashes and unfavorable contacts in the initial structure, typically for 5,000-10,000 steps.

- Equilibration:

- Perform a canonical (NVT) ensemble simulation for 100-500 ps, gradually heating the system to the target temperature (e.g., 300 K).

- Follow with an isothermal-isobaric (NPT) ensemble simulation for 100-500 ps to adjust the system density to the target pressure (e.g., 1 bar).

- Production Simulation: Run multiple independent production simulations (at least 3 replicas of 100 ns each) for each integration algorithm being tested (e.g., Velocity Verlet vs. GaMD). Utilize different random seeds for initial velocities to assess statistical significance.

- Data Analysis:

- Stability: Calculate the root mean square deviation (RMSD) of the protein backbone atoms relative to the starting structure to monitor structural convergence.

- Fluctuations: Compute the root mean square fluctuation (RMSF) of Cα atoms to evaluate residue-specific flexibility.

- Compactness: Determine the radius of gyration (Rg) to assess the overall compactness of the protein structure.

- Energy Conservation: For microcanonical (NVE) ensemble tests, monitor the total energy drift to evaluate the symplectic nature of the integrator.
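The stability, fluctuation, and compactness metrics listed above can be sketched in NumPy. This is a minimal illustration that assumes coordinates have already been extracted as arrays; in practice a trajectory library such as MDTraj would handle file input and alignment. The names kabsch_rmsd, rmsf, and radius_of_gyration are hypothetical helpers.

```python
import numpy as np


def kabsch_rmsd(P, Q):
    """RMSD between N x 3 coordinate sets P and Q after optimal superposition (Kabsch)."""
    P = P - P.mean(axis=0)
    Q = Q - Q.mean(axis=0)
    H = P.T @ Q                                # 3 x 3 covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # correct for a possible reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T    # rotation that maps P onto Q
    diff = P @ R.T - Q
    return float(np.sqrt((diff ** 2).sum() / len(P)))


def rmsf(traj):
    """Per-atom RMSF for a trajectory of shape (n_frames, n_atoms, 3), assumed pre-aligned."""
    dev = traj - traj.mean(axis=0)
    return np.sqrt((dev ** 2).sum(axis=2).mean(axis=0))


def radius_of_gyration(coords, masses=None):
    """Mass-weighted radius of gyration (unit masses if none are given)."""
    m = np.ones(len(coords)) if masses is None else np.asarray(masses, dtype=float)
    com = (m[:, None] * coords).sum(axis=0) / m.sum()
    return float(np.sqrt((m * ((coords - com) ** 2).sum(axis=1)).sum() / m.sum()))


rng = np.random.default_rng(0)
ref = rng.normal(size=(20, 3))
t = 0.7
rot = np.array([[np.cos(t), -np.sin(t), 0.0],
                [np.sin(t), np.cos(t), 0.0],
                [0.0, 0.0, 1.0]])
moved = ref @ rot.T + np.array([5.0, -2.0, 1.0])   # rigidly rotated and shifted copy
print(kabsch_rmsd(moved, ref))                     # close to 0 up to round-off

traj = np.array([[[0.0, 0.0, 0.0]], [[2.0, 0.0, 0.0]]])  # one atom, two frames
print(rmsf(traj))
print(radius_of_gyration(np.array([[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])))
```

RMSD is computed after optimal superposition so that rigid-body motion does not inflate it. RMSF, by contrast, assumes the frames were aligned beforehand.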

Protocol for Evaluating AI vs. MD Sampling for IDPs

This protocol is designed for challenging systems like IDPs, where sampling efficiency is critical [1].

- System Generation: Select an IDP sequence of interest.

- Conformational Ensemble Generation:

- AI/DL Method: Input the amino acid sequence into a deep learning model (e.g., a specialized generative model for IDPs) to produce a large ensemble of predicted structures (e.g., 10,000 conformations).

- MD Simulation: Perform extensive, long-timescale MD simulations (multiple μs-long replicas) of the same IDP sequence, starting from an extended conformation.

- Validation against Experimental Data:

- Small-Angle X-Ray Scattering (SAXS): Calculate the theoretical scattering profile from both the AI-generated and MD-sampled ensembles and compare it to experimental SAXS data, typically using the χ² metric.

- NMR Chemical Shifts: Back-calculate NMR chemical shifts from both ensembles and compute the correlation with experimentally measured chemical shifts.

- J-Couplings: Compare calculated and experimental ³J-couplings, which are sensitive to backbone dihedral angles.

- Ensemble Analysis:

- Diversity: Quantify the structural diversity within each ensemble using measures like the pairwise RMSD distribution.

- Rare States: Analyze the ensembles for the presence of transient, low-population states that may be functionally relevant.
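The SAXS comparison step can be sketched as follows. A profile is computed for each conformer, the profiles are averaged over the ensemble, and a reduced χ² is taken against the experimental curve after fitting one scale factor. Several simplifications are assumptions of this sketch. It uses the Debye formula with unit form factors and no hydration layer, which dedicated tools model. Conformers are weighted equally. The χ² is normalized by the number of points and fits no background term, whereas some programs use N − 1 or also fit an offset.

```python
import numpy as np


def debye_profile(coords, q):
    """Debye formula I(q) = sum_ij sin(q r_ij)/(q r_ij) with unit form factors.
    Toy assumption: no atomic form factors, hydration layer, or excluded volume."""
    r = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    qr = q[:, None, None] * r[None, :, :]
    # np.sinc(x) = sin(pi x)/(pi x), so sin(qr)/qr = np.sinc(qr / pi), which is 1 at r = 0
    return np.sinc(qr / np.pi).sum(axis=(1, 2))


def ensemble_profile(ensemble, q):
    """Uniformly weighted average of per-conformer profiles."""
    return np.mean([debye_profile(c, q) for c in ensemble], axis=0)


def saxs_chi2(i_exp, sigma, i_calc):
    """Reduced chi^2 = mean(((I_exp - c I_calc) / sigma)^2), with the scale c fitted by
    weighted least squares (no background term)."""
    w = 1.0 / sigma ** 2
    c = np.sum(w * i_exp * i_calc) / np.sum(w * i_calc ** 2)
    return float(np.mean(((i_exp - c * i_calc) / sigma) ** 2)), float(c)


rng = np.random.default_rng(1)
q = np.linspace(0.01, 0.5, 50)                                   # inverse Angstrom
ensemble = [rng.normal(scale=8.0, size=(30, 3)) for _ in range(5)]  # toy "conformers"
i_calc = ensemble_profile(ensemble, q)
i_exp = 2.0 * i_calc                       # synthetic stand-in for measured data
chi2, scale = saxs_chi2(i_exp, 0.05 * i_exp, i_calc)
print(chi2, scale)
```

The same χ² function applies to the AI-generated and MD-sampled ensembles alike, so the two can be compared on equal footing against the same experimental curve.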

Visualizing Workflows and Logical Relationships

The following diagrams, generated with Graphviz, illustrate the core logical relationships and experimental workflows described in this guide.

MD & AI Integration Logic

Algorithm Comparison Methodology

The Scientist's Toolkit: Essential Research Reagents and Solutions

This table details key computational tools and resources essential for conducting research on MD integration algorithms and their AI-enhanced counterparts.

Table 3: Key Research Reagent Solutions for MD Integration Algorithm Research

| Item Name | Function/Brief Explanation | Example Use Case |
|---|---|---|
| Molecular Dynamics Software | Software suites that implement integration algorithms and force fields to run simulations. | GROMACS, AMBER, NAMD, OpenMM for running production MD simulations and analysis. |
| Coarse-Grained Force Fields | Simplified models that reduce the number of particles, speeding up calculations for larger systems. | MARTINI force field for simulating large biomolecular complexes or membranes over longer timescales. |
| AI-Based Structure Prediction Servers | Web-based platforms that use deep learning to predict protein structures from sequence. | AlphaFold2, Robetta, trRosetta for generating initial structural models or conformational ensembles. |
| Enhanced Sampling Plugins | Software tools integrated into MD packages that implement advanced sampling algorithms. | PLUMED for metadynamics or GaMD simulations to accelerate rare event sampling. |
| Quantum Chemistry Software | Provides highly accurate energy and force calculations for parameterizing force fields or modeling reactions. | Gaussian, ORCA for calculating partial charges or refining specific interactions in a small molecule ligand. |
| Trajectory Analysis Tools | Programs and libraries for processing, visualizing, and quantifying MD simulation data. | MDTraj, VMD, PyMOL for calculating RMSD, Rg, and other essential metrics from trajectory files. |


In contemporary drug development, particularly for complex diseases, a singular technological approach is often insufficient. The integration of four key disciplines—Omics, Bioinformatics, Network Pharmacology, and Molecular Dynamics (MD) Simulation—has created a powerful, synergistic workflow for understanding disease mechanisms and accelerating therapeutic discovery [3] [4]. This paradigm shifts the traditional "one-drug, one-target" model to a holistic "network-target, multiple-component-therapeutics" approach, which is especially valuable for studying multi-target natural products and complex diseases like sepsis and cancer [3]. Omics technologies (genomics, proteomics, transcriptomics, metabolomics) provide the foundational data on molecular changes in disease states. Bioinformatics processes this data to identify key differentially expressed genes and pathways. Network Pharmacology maps these elements onto biological networks to predict drug-target interactions and polypharmacological effects. Finally, MD Simulation validates these predictions at the atomic level, providing dynamic insights into binding mechanisms and stability [4]. This guide provides a comparative analysis of how these pillars are integrated, with a specific focus on the performance of MD simulation algorithms and hardware that form the computational backbone of this workflow.

Comparative Analysis of Core Methodologies

Omics Technologies: Generating the Molecular Landscape

Omics technologies enable the comprehensive measurement of entire molecular classes in biological systems. The primary omics layers work in concert to build a multi-scale view of disease biology, generating the raw data that drives subsequent analysis in the integrated workflow.

Table 1: Core Omics Technologies and Their Roles in Integrated Workflows

| Omics Layer | Primary Focus | Key Outputs | Role in Integrated Workflow |
|---|---|---|---|
| Genomics | DNA sequence and structure | Genetic variants, polymorphisms | Identifies hereditary disease predispositions and targets |
| Transcriptomics | RNA expression levels | Differentially expressed genes (DEGs) | Reveals active pathways under disease or treatment conditions [4] |
| Proteomics | Protein abundance and modification | Protein expression, post-translational modifications | Identifies functional effectors and direct drug targets [4] |
| Metabolomics | Small-molecule metabolite profiles | Metabolic pathway alterations | Uncovers functional readouts of cellular status and drug metabolism |

Bioinformatics: From Data to Biological Insight

Bioinformatics provides the computational pipeline for transforming raw omics data into biological understanding. It applies statistical and computational methods to identify patterns, differentially expressed genes, and significantly enriched functional themes.

Table 2: Core Bioinformatics Analysis Modules

| Analysis Type | Methodology | Key Outcome | Application Example |
|---|---|---|---|
| Differential Expression | Statistical testing (e.g., limma R package) | Lists of significantly up/down-regulated genes or proteins [4] | Identifying 30 cross-species sepsis-related genes from GEO datasets [4] |
| Functional Enrichment | Gene Ontology (GO), Kyoto Encyclopedia of Genes and Genomes (KEGG) [4] | Significantly enriched biological processes and pathways | Mapping drug targets to sepsis-associated immunosuppression and inflammation pathways [4] |
| Protein-Protein Interaction (PPI) Network | STRING database, Cytoscape visualization [4] | Identification of hub genes within complex interaction networks | Using maximal clique centrality (MCC) to identify ELANE and CCL5 as core sepsis regulators [4] |
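Maximal clique centrality, as commonly described for the CytoHubba plugin, scores a node v as the sum of (|C| − 1)! over every maximal clique C that contains v. The sketch below implements that definition from scratch. It assumes an undirected, unweighted network given as an edge list and enumerates maximal cliques with the Bron–Kerbosch algorithm with pivoting. It is not CytoHubba's code, and the node names are placeholders rather than real genes.

```python
from collections import defaultdict
from math import factorial


def maximal_cliques(adj):
    """Bron-Kerbosch with pivoting; adj maps node -> set of neighbours."""
    cliques = []

    def expand(r, p, x):
        if not p and not x:
            cliques.append(r)        # r cannot be extended: it is a maximal clique
            return
        pivot = max(p | x, key=lambda u: len(adj[u] & p))
        for v in list(p - adj[pivot]):
            expand(r | {v}, p & adj[v], x & adj[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(adj), set())
    return cliques


def mcc_scores(edges):
    """MCC(v) = sum over maximal cliques C containing v of (|C| - 1)!."""
    adj = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    scores = {v: 0 for v in adj}
    for clique in maximal_cliques(adj):
        weight = factorial(len(clique) - 1)
        for v in clique:
            scores[v] += weight
    return scores


# Toy network with placeholder node names (not real genes)
edges = [("A", "B"), ("B", "C"), ("A", "C"), ("C", "D"), ("D", "E")]
scores = mcc_scores(edges)
print(sorted(scores.items(), key=lambda kv: -kv[1]))
```

Large cliques dominate the score through the factorial weight. When a node's neighbours share no edges, every maximal clique containing it is a single edge, so its score reduces to its degree.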

Network Pharmacology: Mapping the Polypharmacology Landscape

Network pharmacology investigates the complex web of interactions between drugs and their multiple targets, moving beyond the single-target paradigm. It is particularly suited for studying traditional medicine formulations, like Traditional Chinese Medicine (TCM), and their pleiotropic effects [3] [4]. The methodology involves constructing and analyzing networks that connect drugs, their predicted or known targets, related biological pathways, and disease outcomes. This approach helps elucidate synergistic (reinforcement, potentiation) and antagonistic (restraint, detoxification, counteraction) interactions between multiple compounds in a mixture, such as a botanical hybrid preparation (BHP) or TCM formula [3]. Machine learning further enhances this by building prognostic models; for instance, a StepCox[forward] + RSF model was used to identify core regulatory targets like ELANE and CCL5 in sepsis, with the model's performance validated using a C-index and time-dependent ROC curves (AUC: 0.72–0.95) [4].

Molecular Dynamics Simulation: Atomic-Level Validation

MD simulation provides the atomic-resolution validation within the integrated workflow, testing the binding interactions predicted by network pharmacology. After identifying key drug-target pairs (e.g., Ani HBr with ELANE and CCL5), molecular docking predicts the preferred binding orientation. MD simulations then take over to validate these complexes in a dynamic environment, simulating the physical movements of atoms and molecules over time, which is critical for assessing the stability of predicted binding modes [4]. The core MD algorithm involves a repeating cycle of: 1) computing forces based on the potential energy function, 2) updating particle velocities and positions using numerical integrators (e.g., leap-frog), and 3) outputting configuration data [5]. The stability of a ligand-protein complex, such as Ani HBr in the catalytic cleft of ELANE, is typically quantified using root-mean-square deviation (RMSD) and binding free energy calculations via the MM-PBSA method [4].
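The three-step cycle described above can be sketched for a toy system. The example assumes a Lennard-Jones dimer in reduced units, with no cut-off and no periodic boundaries. It computes forces, advances velocities at half steps and positions at full steps in the leap-frog manner, and periodically stores frames. It illustrates the cycle only and is not GROMACS's implementation.

```python
import numpy as np


def lj_forces(pos, eps=1.0, sigma=1.0):
    """Lennard-Jones forces and energy; toy assumption: no cut-off, no periodic box."""
    f = np.zeros_like(pos)
    u = 0.0
    n = len(pos)
    for i in range(n - 1):
        for j in range(i + 1, n):
            rij = pos[i] - pos[j]
            r2 = rij @ rij
            sr6 = (sigma * sigma / r2) ** 3
            u += 4.0 * eps * (sr6 * sr6 - sr6)
            fij = 24.0 * eps * (2.0 * sr6 * sr6 - sr6) / r2 * rij  # F_i = -dV/dr_i
            f[i] += fij
            f[j] -= fij
    return f, u


def run_leapfrog(pos, v_half, mass, dt, n_steps, out_every=50):
    """Leap-frog: v(t + dt/2) = v(t - dt/2) + F(t)/m * dt; r(t + dt) = r(t) + v(t + dt/2) * dt."""
    frames, energies = [], []
    for step in range(n_steps):
        f, u = lj_forces(pos)            # 1) forces from the potential energy function
        v_half = v_half + f / mass * dt  # 2) velocities at half-integer time steps ...
        pos = pos + v_half * dt          #    ... and positions at integer time steps
        if step % out_every == 0:        # 3) periodically output configuration data
            frames.append(pos.copy())
            energies.append(u)
    return pos, v_half, frames, energies


# Toy system (assumption): an LJ dimer in reduced units, starting at rest at r = 1.2 sigma
pos0 = np.array([[0.0, 0.0, 0.0], [1.2, 0.0, 0.0]])
pos, v_half, frames, energies = run_leapfrog(pos0, np.zeros_like(pos0), 1.0, 0.005, 2000)
dists = [np.linalg.norm(fr[1] - fr[0]) for fr in frames]
print(f"separation range: {min(dists):.3f}-{max(dists):.3f} sigma")
```

The dimer oscillates around the Lennard-Jones minimum without flying apart or collapsing. Total momentum stays at zero because the pair forces are equal and opposite.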

Performance Benchmarking: MD Algorithms and Hardware

The integration of MD simulations into the drug discovery workflow necessitates a thorough understanding of its performance aspects, from the underlying algorithms to the hardware that powers the computations.

MD Integration Algorithms and Protocols

The accuracy and efficiency of an MD simulation are governed by its integration algorithms and setup. Key considerations include:

- Integrator Choice: The leap-frog algorithm is a common default in packages like GROMACS for numerically solving Newton's equations of motion [5]. It calculates velocities at half-time steps and coordinates at integer time steps, providing good numerical stability.

- Time Step Selection: A 2 femtosecond (fs) timestep is standard when bonds involving hydrogen atoms are constrained (e.g., with SHAKE or LINCS) [6]. Performance can be improved further with hydrogen mass repartitioning (HMR), which permits a 4 fs timestep. HMR increases the mass of each hydrogen atom and decreases the mass of the heavy atom it is bonded to by the same amount, so total system mass is unchanged while computational efficiency improves [6].

- Neighbor Searching: To efficiently compute non-bonded forces, MD codes like GROMACS use Verlet list algorithms that are updated periodically. This employs a buffered pair-list cut-off (larger than the interaction cut-off) to account for particle diffusion between updates, a crucial factor for energy conservation [5].

- Force Calculation: The force on each atom, \( \mathbf{F}_i = -\partial V / \partial \mathbf{r}_i \), is computed as the sum of forces between non-bonded atom pairs plus forces from bonded interactions, restraints, and external forces [5].
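Two of the points above, hydrogen mass repartitioning and the buffered pair list, can be sketched briefly. This is an illustration under stated assumptions, not any package's implementation. The target hydrogen mass of 3.024 Da is an assumed, commonly used value. Unlike many tools, the HMR function does not skip water. The pair list is built by brute force (O(N²)) in an orthorhombic periodic box. All function names are hypothetical.

```python
import numpy as np


def repartition_hydrogen_mass(masses, bonds, elements, h_mass=3.024):
    """HMR sketch: set each bonded H to h_mass (assumed target, Da) and subtract the
    added mass from its heavy-atom partner, so the total mass is unchanged."""
    new = list(masses)
    for i, j in bonds:
        for h, heavy in ((i, j), (j, i)):
            if elements[h] == "H" and elements[heavy] != "H":
                delta = h_mass - new[h]
                new[h] += delta
                new[heavy] -= delta
    return new


def min_image(d, box):
    """Minimum-image displacement in an orthorhombic periodic box."""
    return d - box * np.round(d / box)


def build_pair_list(pos, box, r_list):
    """All pairs i < j closer than r_list = r_c + buffer (brute-force build)."""
    pairs = []
    for i in range(len(pos) - 1):
        d = min_image(pos[i + 1:] - pos[i], box)
        close = np.nonzero((d * d).sum(axis=1) < r_list ** 2)[0]
        pairs.extend((i, i + 1 + int(k)) for k in close)
    return pairs


def pairs_within_cutoff(pos, box, pairs, r_c):
    """Between list updates, only listed pairs are tested against the true cut-off r_c."""
    out = []
    for i, j in pairs:
        d = min_image(pos[j] - pos[i], box)
        if d @ d < r_c ** 2:
            out.append((i, j))
    return out


# HMR on a methyl-like fragment: C0 bonded to three H and one other C
m = repartition_hydrogen_mass([12.011, 1.008, 1.008, 1.008, 12.011],
                              [(0, 1), (0, 2), (0, 3), (0, 4)], ["C", "H", "H", "H", "C"])
print([round(x, 3) for x in m], round(sum(m), 3))

# Buffered list: if no particle moves more than buffer/2, no pair inside r_c is missed
rng = np.random.default_rng(2)
box, r_c, buffer = np.array([5.0, 5.0, 5.0]), 1.0, 0.2
pos = rng.uniform(0.0, 5.0, size=(200, 3))
plist = build_pair_list(pos, box, r_c + buffer)
step = rng.normal(size=pos.shape)
step *= (0.99 * buffer / 2) / np.linalg.norm(step, axis=1, keepdims=True)
new_pos = pos + step
exact = build_pair_list(new_pos, box, r_c)
print(len(plist), len(exact), set(exact) == set(pairs_within_cutoff(new_pos, box, plist, r_c)))
```

The buffer is what makes reusing the list safe. Two particles that each move less than buffer/2 change their separation by less than the buffer, so any pair now inside r_c was already inside r_c + buffer when the list was built.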

Hardware and Software Performance Comparison

The performance of MD simulations is highly dependent on the computing hardware, particularly the GPU. Benchmarks across different MD software (AMBER, GROMACS, NAMD) and GPU models provide critical data for resource selection.

Table 3: AMBER 24 Performance Benchmark (ns/day) on Select NVIDIA GPUs [7]

| GPU Model | ~1M Atoms (STMV) | ~409K Atoms (Cellulose) | ~91K Atoms (FactorIX) | ~24K Atoms (DHFR) | Key Characteristics |
|---|---|---|---|---|---|
| RTX 5090 | 109.75 | 169.45 | 529.22 | 1655.19 | Highest performance for cost; 32 GB memory |
| RTX 6000 Ada | 70.97 | 123.98 | 489.93 | 1697.34 | 48 GB VRAM for large systems |
| B200 SXM | 114.16 | 182.32 | 473.74 | 1513.28 | Peak performance, high cost |
| H100 PCIe | 74.50 | 125.82 | 410.77 | 1532.08 | AI/ML hybrid workloads |
| L40S (Cloud) | n/a* | n/a* | n/a* | n/a* | Best cloud value, low cost/ns [8] |

*No AMBER 24 figures for the L40S are given here. The OpenMM benchmark in [8] reports 536 ns/day for the L40S on a ~44k atom system (Table 4), which is not directly comparable with the AMBER values above.

Table 4: Cost Efficiency of Cloud GPUs for MD Simulation (OpenMM, ~44k atoms) [8]

| Cloud Provider | GPU Model | Speed (ns/day) | Relative Cost per 100 ns | Best Use Case |
|---|---|---|---|---|
| Nebius | L40S | 536 | Lowest (~40% of AWS T4 baseline) | Most traditional MD workloads |
| Nebius | H200 | 555 | ~87% of AWS T4 baseline | ML-enhanced workflows, top speed |
| AWS | T4 | 103 | Baseline (100%) | Budget option, long queues |
| Hyperstack | A100 | 250 | Lower than T4 & V100 | Balanced speed and affordability |
| AWS | V100 | 237 | ~133% of AWS T4 baseline | Legacy systems, limited new value |
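The relative-cost column follows from simple arithmetic. Reaching 100 ns at a throughput of s ns/day takes 24 × 100 / s GPU-hours, and multiplying by the hourly price gives the cost. In the sketch below the speeds are taken from the table, while the hourly prices are hypothetical placeholders, not quoted provider rates.

```python
def cost_per_100ns(price_per_hour, ns_per_day):
    """Cost of 100 ns of simulation: hours needed = 24 h/day * 100 ns / (ns/day)."""
    return price_per_hour * 24.0 * 100.0 / ns_per_day


def relative_cost(price, speed, base_price, base_speed):
    """Cost per ns relative to a baseline GPU (1.0 = same cost as the baseline)."""
    return cost_per_100ns(price, speed) / cost_per_100ns(base_price, base_speed)


# Speeds as in Table 4; the hourly prices are HYPOTHETICAL placeholders
baseline = cost_per_100ns(0.50, 103)   # hypothetical $0.50/h at 103 ns/day
faster = cost_per_100ns(1.50, 536)     # hypothetical $1.50/h at 536 ns/day
print(f"${baseline:.2f} vs ${faster:.2f} per 100 ns")
print(f"relative cost: {relative_cost(1.50, 536, 0.50, 103):.0%}")
```

The example shows how a GPU with a higher hourly price can still be cheaper per nanosecond if its throughput is high enough.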

Multi-GPU and Multi-Software Scaling

Different MD software packages leverage parallel computing resources differently, which is a critical factor in selecting an engine and configuring hardware.

- AMBER: Primarily optimized for single-GPU execution. Its multi-GPU (pmemd.cuda.MPI) version is designed for specialized methods like replica exchange rather than accelerating a single simulation [7] [6]. For multi-GPU systems, the recommended strategy is to run multiple independent simulations in parallel.

- GROMACS: Supports multi-GPU and multi-CPU parallelism effectively. A sample multi-GPU script for GROMACS 2023.2 runs srun gmx mdrun with -ntomp to set the number of OpenMP threads, and with the flags -nb gpu -pme gpu -update gpu to offload non-bonded interactions, PME electrostatics, and coordinate updates to the GPU [6].

- NAMD 3: Can utilize multiple GPUs for a single simulation. A sample job submission script for NAMD 3 requests 2 A100 GPUs and uses the +idlepoll flag to optimize GPU performance [6].
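For AMBER on a multi-GPU node, the recommended strategy of running independent simulations in parallel might be scripted as below. This is a sketch under assumptions. The input and topology file names are placeholders, each run is pinned to one GPU through the CUDA_VISIBLE_DEVICES environment variable, and the default dry run only prints the commands. The pmemd.cuda options used (-O, -i, -o, -p, -c, -r, -x) are the standard AMBER overwrite, input, and output flags.

```python
import os
import subprocess


def build_jobs(n_gpus, prmtop="system.prmtop", inpcrd="equil.rst7", mdin="prod.in"):
    """One independent pmemd.cuda run per GPU. File names are hypothetical placeholders;
    independent replicas should also use distinct random seeds (ig setting in mdin)."""
    jobs = []
    for gpu in range(n_gpus):
        tag = f"rep{gpu + 1}"
        cmd = ["pmemd.cuda", "-O", "-i", mdin, "-p", prmtop, "-c", inpcrd,
               "-o", f"{tag}.out", "-r", f"{tag}.rst7", "-x", f"{tag}.nc"]
        env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))  # pin this run to one GPU
        jobs.append((cmd, env))
    return jobs


def launch(jobs, dry_run=True):
    """Print the commands (dry run) or start all runs concurrently and wait for them."""
    if dry_run:
        for cmd, env in jobs:
            print(f"CUDA_VISIBLE_DEVICES={env['CUDA_VISIBLE_DEVICES']}", " ".join(cmd))
        return []
    procs = [subprocess.Popen(cmd, env=env) for cmd, env in jobs]
    return [p.wait() for p in procs]


launch(build_jobs(4))
```

On a cluster the same idea is usually expressed through the scheduler, for example one job-array task per GPU, rather than through a Python launcher.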

Integrated Workflow Visualization

The synergy between the four pillars can be visualized as a sequential, iterative workflow where the output of one stage becomes the input for the next, driving discovery from initial observation to atomic-level validation.

Diagram 1: Integrated discovery workflow.

Experimental Protocol for an Integrated Study

A detailed experimental protocol from a recent study on sepsis [4] exemplifies how these technologies are combined in practice. This protocol can serve as a template for similar integrative research.

Target Identification (Omics, Bioinformatics & Network Pharmacology)

- Identify Disease-Associated Genes: Curate sepsis-related genes from public databases (e.g., GEO: GSE65682) and GeneCards. Use the limma R package to identify differentially expressed genes (DEGs) with an adjusted p-value < 0.05 and |fold change| > 1 [4].

- Predict Drug Targets: Input the SMILES structure of the drug (e.g., Anisodamine hydrobromide, PubChem CID: 118856046) into target prediction servers (SwissTargetPrediction, SuperPred, PharmMapper) to generate a list of potential protein targets [4].

- Find Intersecting Genes: Perform a Venn analysis to identify genes that are both disease-associated (from Step 1) and predicted drug targets (from Step 2). These intersecting genes form the candidate set for further analysis.

- Construct PPI Network and Identify Hubs: Input the intersecting genes into the STRING database (confidence score > 0.7) to build a protein-protein interaction network. Visualize and analyze the network in Cytoscape. Use the CytoHubba plugin with the Maximal Clique Centrality (MCC) algorithm to identify top hub genes (e.g., ELANE, CCL5) [4].

- Build a Machine Learning Prognostic Model:

- Split a patient cohort (e.g., n=479) into training (70%) and validation (30%) sets.

- Evaluate multiple algorithms (e.g., RSF, Enet, StepCox) to select the optimal model based on the highest average C-index.

- Use the final model (e.g., StepCox[forward] + RSF) and feature importance analysis (e.g., SurvLIME) to validate the prognostic power of the hub genes.
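Model selection above relies on the concordance index. A minimal sketch of Harrell's C-index for right-censored data is shown below. A pair is comparable when the subject with the shorter time had an event, and it is concordant when that subject also has the higher predicted risk. Ties in risk count as one half, and pairs with tied times are skipped, which is a simplifying assumption. The data are made up for illustration and are not from the cited cohort.

```python
def harrell_c_index(times, events, risk):
    """Harrell's concordance index for right-censored survival data."""
    concordant, comparable = 0.0, 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # comparable: i failed (event observed) strictly before j's time
            if times[i] < times[j] and events[i] == 1:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    return concordant / comparable


# Toy data (made up): survival times, event flags (1 = event, 0 = censored), risk scores
times = [5, 8, 12, 20, 25]
events = [1, 1, 0, 1, 0]
risk = [0.9, 0.7, 0.6, 0.3, 0.2]
print(harrell_c_index(times, events, risk))
```

A value of 1.0 means the risk scores rank every comparable pair correctly, and 0.5 corresponds to random ranking. Averaging this value across validation splits is one way to compare candidate algorithms.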

Target Validation (Molecular Dynamics)

Molecular Docking:

- Prepare the 3D structure of the drug (Ani HBr) and target proteins (ELANE from PDB: 5ABW; CCL5 from PDB: 5CMD) using tools like AutoDock Tools and PyMOL.

- Define the docking grid, typically centered on the protein's known active site.

- Run docking simulations to generate potential binding poses and calculate binding affinity scores.

Molecular Dynamics Simulation:

- System Setup: Place the top-ranked docked complex in a solvation box (e.g., TIP3P water model) and add ions to neutralize the system's charge.

- Energy Minimization: Run a minimization step to remove any steric clashes.

- Equilibration: Perform equilibration in phases (e.g., NVT and NPT ensembles) to stabilize the system's temperature and pressure.

- Production Run: Execute a long-timescale MD simulation (e.g., 100-200 ns) using a package like AMBER, GROMACS, or NAMD. Use a 2 fs integration time step and apply constraints to bonds involving hydrogen atoms [6]. The simulation should employ periodic boundary conditions and particle mesh Ewald (PME) for long-range electrostatics.

- Trajectory Analysis: Analyze the resulting trajectory to calculate:

- Root-mean-square deviation (RMSD): To assess the stability of the protein-ligand complex.

- Root-mean-square fluctuation (RMSF): To evaluate residue flexibility.

- Molecular Mechanics/Poisson-Boltzmann Surface Area (MM-PBSA): To estimate the binding free energy of the complex and validate the stability of the binding interaction observed in the simulation [4].
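The final MM-PBSA step reduces to averaging per-frame energy differences. The sketch below assumes the single-trajectory approach, in which the receptor and ligand energies are evaluated on frames extracted from the complex trajectory, and it assumes the per-frame free energies (MM plus PB solvation plus nonpolar terms) have already been computed by a tool such as AmberTools. It omits the solute entropy term, which is often left out, and its standard error assumes uncorrelated frames. The numbers are made up.

```python
import statistics


def mmpbsa_binding_energy(g_complex, g_receptor, g_ligand):
    """Per-frame dG = G_complex - G_receptor - G_ligand, averaged over frames.
    The standard error assumes uncorrelated frames; no entropy term is included."""
    dg = [c - r - l for c, r, l in zip(g_complex, g_receptor, g_ligand)]
    mean = statistics.fmean(dg)
    sem = statistics.stdev(dg) / len(dg) ** 0.5
    return mean, sem


# Made-up per-frame free energies in kcal/mol
mean, sem = mmpbsa_binding_energy([-5000.0, -5004.0, -4996.0],
                                  [-4800.0, -4800.0, -4800.0],
                                  [-170.0, -170.0, -170.0])
print(f"dG_bind = {mean:.1f} +/- {sem:.1f} kcal/mol")
```

Because consecutive MD frames are correlated, a more careful error estimate would use block averaging or frames spaced further apart.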

Diagram 2: Detailed experimental protocol.

To implement the described integrated workflow, researchers require a suite of specific software tools, databases, and computational resources.

Table 5: Essential Reagents and Resources for the Four-Pillar Workflow

| Category | Resource/Reagent | Specific Example / Version | Primary Function |
|---|---|---|---|
| Omics Data Sources | GEO Database [4] | GSE65682 (Sepsis) | Repository for transcriptomics datasets |
| | GeneCards [4] | v4.14 | Integrative database of human genes |
| Bioinformatics Tools | R/Bioconductor Packages | limma, clusterProfiler [4] | Differential expression & functional enrichment |
| | Protein Interaction DB | STRING (confidence >0.7) [4] | Constructing PPI networks |
| | Network Visualization | Cytoscape with CytoHubba [4] | Visualizing and identifying hub genes |
| Network Pharmacology | Target Prediction | SwissTargetPrediction, SuperPred [4] | Predicting drug-protein interactions |
| | Survival Modeling | Mime R package [4] | Building machine learning prognostic models |
| MD Simulation | MD Software | GROMACS, AMBER (pmemd.cuda), NAMD, OpenMM [5] [7] [8] | Running molecular dynamics simulations |
| | System Preparation | PDB (5ABW, 5CMD), AmberTools/parmed [4] [6] | Preparing protein structures & topologies |
| | Visualization/Analysis | PyMOL, VMD, MDTraj | Visualizing structures & analyzing trajectories |
| Computing Hardware | Consumer/Workstation GPU | NVIDIA RTX 5090, RTX 6000 Ada [9] [7] | High performance/cost for single-GPU workstations |
| | Data Center/Cloud GPU | NVIDIA L40S, H200, A100 [8] | Scalable, high-memory, cloud-accessible computing |

Classical Molecular Dynamics (MD) has become an indispensable tool for researchers, scientists, and drug development professionals seeking to understand biological processes at the atomic level. However, the accurate computational representation of biomolecular recognition—including binding of small molecules, peptides, and proteins to their target receptors—faces significant theoretical and practical challenges. The high flexibility of biomolecules and the slow timescales of binding and dissociation processes present substantial obstacles for computational modelling [10]. These limitations stem primarily from two interconnected domains: the inherent constraints of empirical force fields and the overwhelming complexity of biomolecular systems, which often exhibit dynamics spanning microseconds to seconds, far beyond the routine simulation capabilities of classical approaches.

The core challenge lies in the fact that experimental techniques such as X-ray crystallography, NMR, and cryo-EM often capture only static pictures of protein complexes, making it difficult to probe intermediate conformational states relevant for drug design [10]. This review examines these limitations through a comparative lens, focusing on how different force fields and integration algorithms attempt to address these fundamental constraints while highlighting their performance characteristics through experimental data and methodological analysis.

Force Field Limitations: Accuracy Versus Transferability

Additive Force Field Constraints

Classical MD simulations rely on force fields (FFs)—sets of potential energy functions from which atomic forces are derived [11]. Traditional additive force fields divide interactions into bonded terms (bonds, angles, dihedrals) and non-bonded terms (electrostatic and van der Waals interactions) [11]. While this division provides computational efficiency, it introduces significant physical approximations that limit accuracy.
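The division into bonded and non-bonded terms can be illustrated with the generic functional forms used by additive force fields. The sketch below is illustrative only. The parameters are made up, the harmonic terms follow the AMBER/CHARMM convention of writing K(x − x₀)² without a factor of ½, and the Coulomb constant is an approximate value, since MD codes use slightly different ones. Real force fields add further terms, such as CHARMM's Urey-Bradley and CMAP corrections.

```python
import math

# Approximate Coulomb constant in kcal*Angstrom/(mol*e^2); MD codes use slightly different values
COULOMB_K = 332.0637


def bond_energy(r, k_b, r0):
    """Harmonic bond k_b (r - r0)^2 (AMBER/CHARMM convention; GROMACS writes 1/2 k_b)."""
    return k_b * (r - r0) ** 2


def angle_energy(theta, k_theta, theta0):
    """Harmonic angle k_theta (theta - theta0)^2, angles in radians."""
    return k_theta * (theta - theta0) ** 2


def dihedral_energy(phi, k_phi, n, delta):
    """Periodic torsion k_phi [1 + cos(n phi - delta)]."""
    return k_phi * (1.0 + math.cos(n * phi - delta))


def lj_energy(r, epsilon, sigma):
    """12-6 Lennard-Jones van der Waals term."""
    return 4.0 * epsilon * ((sigma / r) ** 12 - (sigma / r) ** 6)


def coulomb_energy(r, qi, qj, eps_r=1.0):
    """Fixed point charges: the additive model has no polarization response."""
    return COULOMB_K * qi * qj / (eps_r * r)


# Usage with made-up parameters (kcal/mol, Angstrom, radians, elementary charges)
e_total = (bond_energy(1.53, 310.0, 1.526)
           + angle_energy(math.radians(112.0), 40.0, math.radians(109.5))
           + dihedral_energy(math.radians(60.0), 0.16, 3, 0.0)
           + lj_energy(3.8, 0.1, 3.4)
           + coulomb_energy(3.8, 0.4, -0.4))
print(f"{e_total:.3f} kcal/mol")
```

The fixed charges in coulomb_energy are exactly the approximation that polarizable force fields aim to remove.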

Table 1: Comparison of Major Additive Protein Force Fields

| Force Field | Key Features | Known Limitations | System Specialization |
|---|---|---|---|
| CHARMM C36 | New backbone CMAP potential; optimized side-chain dihedrals; improved LJ parameters for aliphatic hydrogens [12] | Misfolding observed in long simulations of certain proteins like pin WW domain; backbone inaccuracies [12] | Proteins, nucleic acids, lipids, carbohydrates [12] |
| Amber ff99SB-ILDN-Phi | Modified backbone potential; shifted beta-PPII equilibrium; improved water sampling [12] | Balance between helix and coil conformations requires empirical adjustment [12] | Proteins with improved sampling in aqueous environments [12] |
| GROMOS | Biomolecular specialization; parameterized for specific biological molecules [13] | Limited coverage of chemical space compared to CHARMM/Amber [12] | Intended specifically for biomolecules [13] |
| OPLS-AA | Comprehensive coverage of organic molecules; transferable parameters [13] | Less specialized for complex biomolecular interactions [12] | Broad organic molecular systems [13] |

The fundamental limitation of these additive force fields lies in their treatment of electronic polarization. As noted in current research: "It is clear that the next major step in advancing protein force field accuracy requires a different representation of the molecular energy surface. Specifically, the effects of charge polarization must be included, as fields induced by ions, solvent, other macromolecules, and the protein itself will affect electrostatic interactions" [12]. This missing physical component becomes particularly problematic when simulating binding events where electrostatic interactions play a crucial role.

Polarizable Force Fields: Progress and Persistent Challenges

The development of polarizable force fields represents the "next major step" in addressing electronic polarization limitations. Two prominent approaches have emerged: the Drude polarizable force field and the AMOEBA polarizable force field [12].

The Drude model attaches a charged auxiliary particle (a Drude oscillator) to each polarizable atom through a harmonic spring. The particle's displacement in the local electric field creates an induced dipole that represents electronic polarization. Parameters have been developed for various biomolecular components, including water models (SWM4-NDP), alkanes, alcohols, aromatic compounds, and nucleic acid bases [12]. Early tests demonstrated feasibility by simulating a DNA octamer in aqueous solution with counterions [12]. The AMOEBA force field takes a different route: it represents electrostatics with atomic multipoles rather than simple point charges, combined with induced atomic dipoles.
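The force balance behind a single Drude oscillator can be sketched in a few lines. This is a minimal sketch that assumes an isotropic harmonic spring and a uniform external field. The function name and the example charge, spring constant, and field values are hypothetical illustrations, not CHARMM parameters. The spring restoring force k_D·d balances the electric force q_D·E, so the induced dipole is μ = q_D·d = (q_D²/k_D)·E, and the polarizability is α = q_D²/k_D.

```python
import numpy as np

def drude_induced_dipole(q_drude, k_spring, field):
    """Induced dipole of one Drude oscillator in a uniform field (illustrative sketch).

    Force balance on the Drude particle: k_spring * d = q_drude * E,
    so d = q_drude * E / k_spring and mu = q_drude * d = (q_drude**2 / k_spring) * E.
    Units are left to the caller and must be mutually consistent.
    """
    field = np.asarray(field, dtype=float)
    displacement = q_drude * field / k_spring   # Drude particle offset from its core
    mu = q_drude * displacement                 # induced dipole of the core/Drude pair
    alpha = q_drude**2 / k_spring               # isotropic polarizability
    return displacement, mu, alpha

# A negatively charged Drude particle moves against the field,
# yet the induced dipole still points along the field (alpha > 0).
d, mu, alpha = drude_induced_dipole(q_drude=-2.0, k_spring=4.0, field=[0.0, 0.0, 1.0])
print(d, mu, alpha)
```

In a real simulation, E also contains the fields of all other induced dipoles. The displacements therefore have to be solved self-consistently, or propagated with an extended-Lagrangian scheme. That coupling is one source of the computational overhead discussed next.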

While polarizable force fields theoretically provide more accurate physical representation, they come with substantial computational overhead—typically 3-10 times more expensive than additive force fields—limiting their application to large biomolecular systems on practical timescales. Parameterization also remains challenging, requiring extensive quantum mechanical calculations and experimental validation.

Biomolecular Complexity: Sampling Challenges and Timescale Limitations

The Timescale Dilemma in Binding and Dissociation

Biomolecular recognition processes central to drug design often occur on timescales that challenge even the most advanced classical MD implementations. Advances in computing hardware have greatly extended accessible simulation times: specialized machines such as Anton 3 reach hundreds of microseconds per day for systems of ~1 million atoms [10]. Even so, this remains insufficient for many pharmaceutically relevant processes.

Table 2: Observed Simulation Timescales for Biomolecular Binding Events

| System Type | Binding Observed | Dissociation Observed | Simulation Time Required | Key Studies |

|---|---|---|---|---|

| Small-molecule fragments (weak binders) | Yes | Yes | Tens of microseconds | Pan et al. (2017): FKBP fragments [10] |

| Typical drug-like small molecules | Sometimes | Rarely | Hundreds of microseconds to milliseconds | Shan et al. (2011): Dasatinib to Src kinase [10] |

| Protein-peptide interactions | Yes (binding) | Rarely | Hundreds of microseconds | Zwier et al. (2016): p53-MDM2 with weighted ensemble (WE) sampling [10] |

| Protein-protein interactions | Yes (binding) | Very rarely | Hundreds of microseconds to milliseconds | Pan et al. (2019): barnase-barstar [10] |

The table illustrates a critical limitation. Binding events can sometimes be captured within feasible simulation timescales, but dissociation events remain largely inaccessible to conventional MD [10], even though their rates (and hence residence times) often correlate better with drug efficacy. This asymmetry leaves significant gaps in our ability to predict complete binding kinetics and residence times for drug candidates.
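A back-of-the-envelope calculation shows why dissociation is so hard to observe. This sketch assumes simple first-order unbinding, with residence time τ = 1/k_off. The k_off value and the 100 µs trajectory length are hypothetical illustrative numbers, not data from [10].

```python
import math

def residence_time(k_off):
    """Residence time tau = 1 / k_off (k_off in s^-1, tau in s)."""
    return 1.0 / k_off

def prob_unbinding_within(k_off, t_sim):
    """Probability that one bound complex dissociates within t_sim seconds,
    assuming first-order (exponential) unbinding: 1 - exp(-k_off * t_sim)."""
    return -math.expm1(-k_off * t_sim)  # expm1 keeps precision for tiny k_off * t_sim

k_off = 1e-3       # hypothetical slow-dissociating ligand, s^-1
t_sim = 100e-6     # hypothetical 100 microsecond unbiased trajectory, s
tau = residence_time(k_off)            # 1000 s, i.e. about 17 minutes
p = prob_unbinding_within(k_off, t_sim)
print(tau, p)
```

For this case, the chance of seeing even one unbinding event in the trajectory is about one in ten million. This gap is what motivates the enhanced sampling methods described next.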

Enhanced Sampling Methodologies

To address these timescale limitations, researchers have developed enhanced sampling methods that can be broadly categorized into collective variable (CV)-based and CV-free approaches:

CV-based methods such as steered MD, umbrella sampling, metadynamics, and adaptive biasing force (ABF) apply a potential or force bias along predefined collective variables to facilitate barrier crossing [10]. These methods require a priori knowledge of the system, which may not be available for complex biomolecular transitions.

CV-free methods, including replica exchange MD, tempered binding, and accelerated MD (aMD), do not require predefined reaction coordinates. This makes them more applicable to poorly understood systems, but potentially less efficient for targeting specific transitions [10].
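To make the CV-based idea concrete, here is a toy, metadynamics-style sketch. It runs overdamped Langevin dynamics on a 1D double well, using x itself as the collective variable, and periodically deposits repulsive Gaussians that gradually fill the starting well. Everything here is an illustrative assumption: the potential, Gaussian height and width, deposition stride, temperature, and the names `GaussianBias1D` and `run_brownian`. It is not the PLUMED or Colvars implementation. Production metadynamics typically also uses well-tempered height scaling and multidimensional CVs.

```python
import numpy as np

def double_well_force(x):
    """Force for U(x) = (x**2 - 1)**2: minima at x = -1 and +1, barrier of height 1 at x = 0."""
    return -4.0 * x * (x**2 - 1.0)

class GaussianBias1D:
    """History-dependent bias on the CV s = x:
    V(s) = sum_i h * exp(-(s - s_i)**2 / (2 * w**2)) over deposited centers s_i."""

    def __init__(self, height, width):
        self.height = height
        self.width = width
        self.centers = []

    def _gaussians(self, s):
        c = np.asarray(self.centers, dtype=float)
        return self.height * np.exp(-((s - c) ** 2) / (2.0 * self.width**2)), c

    def value(self, s):
        if not self.centers:
            return 0.0
        g, _ = self._gaussians(s)
        return float(g.sum())

    def force(self, s):
        # F_bias = -dV/ds = sum_i g_i(s) * (s - s_i) / w**2
        if not self.centers:
            return 0.0
        g, c = self._gaussians(s)
        return float(np.sum(g * (s - c)) / self.width**2)

def run_brownian(n_steps, bias=None, stride=100, dt=1e-3, kT=0.15, gamma=1.0, seed=0):
    """Overdamped Langevin dynamics started in the left well (x = -1).
    With a bias, a Gaussian is deposited at the current x every `stride` steps."""
    rng = np.random.default_rng(seed)
    x = -1.0
    traj = np.empty(n_steps)
    noise_amp = np.sqrt(2.0 * kT * dt / gamma)
    for i in range(n_steps):
        if bias is not None and i % stride == 0:
            bias.centers.append(x)
        f = double_well_force(x) + (bias.force(x) if bias is not None else 0.0)
        x = x + dt * f / gamma + noise_amp * rng.standard_normal()
        traj[i] = x
    return traj

plain = run_brownian(20000)
bias = GaussianBias1D(height=0.05, width=0.1)
biased = run_brownian(20000, bias=bias)
print("fraction of time at x > 0, unbiased:", np.mean(plain > 0))
print("fraction of time at x > 0, biased:  ", np.mean(biased > 0))
```

The barrier here is several kT, so the unbiased walker tends to stay in its starting well. Comparing the two printed fractions is a quick way to see how the accumulated bias changes barrier crossing. The final bias, with its sign reversed, is what metadynamics uses as a free-energy estimate along the CV.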

Integration Algorithms: Numerical Stability and Efficiency Trade-offs

Langevin Dynamics Integrators for Biomolecular Systems

Langevin and Brownian dynamics simulations play a prominent role in biomolecular research, with integration algorithms providing trajectories with different stability ranges and statistical accuracy [14]. These approaches incorporate frictional and random forces to represent implicit solvent environments, significantly reducing computational cost compared to explicit solvent simulations.

Recent comparative studies have evaluated numerous Langevin integrators, including the Grønbech-Jensen and Farago (GJF) method, focusing on their stability, accuracy in reproducing statistical averages, and practical usability with large timesteps [14]. The propagator formalism provides a unified framework for understanding these integrators, where the time evolution of the system is described by:

$$\mathcal{P}(t_1, t_2)\,(\mathbf{p}, \mathbf{q})\big|_{t=t_1} = (\mathbf{p}, \mathbf{q})\big|_{t=t_2}$$

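Here the propagator $\mathcal{P}$ advances the momenta $\mathbf{p}$ and positions $\mathbf{q}$ through successive timesteps. For the deterministic part of the dynamics, the propagator is the exponential of the Liouville operator, $e^{iL\Delta t}$. Practical integrators approximate it by splitting $L$ into simpler pieces that can be applied exactly [14].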
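As one concrete instance, the GJF method mentioned above can be sketched in NumPy. The update below follows the published GJF form as we understand it and should be checked against [14] or the original paper before use. Here `alpha` is the friction coefficient in F = -αv, and β is the noise integrated over one step, with variance 2αk_BTΔt. The function name and the harmonic test system are illustrative assumptions, not ESPResSo code.

```python
import numpy as np

def gjf_langevin(force, x0, v0, mass, alpha, kT, dt, n_steps, rng):
    """Sketch of the Gronbech-Jensen and Farago (GJF) Langevin integrator.

    alpha: friction coefficient in F_friction = -alpha * v (mass / time units).
    Returns positions after every step, shape (n_steps, *x0.shape), and final velocities.
    """
    x = np.array(x0, dtype=float)
    v = np.array(v0, dtype=float)
    f = force(x)
    c = alpha * dt / (2.0 * mass)
    b = 1.0 / (1.0 + c)
    a = (1.0 - c) / (1.0 + c)
    sigma = np.sqrt(2.0 * alpha * kT * dt)  # std. dev. of the integrated noise beta^{n+1}
    traj = np.empty((n_steps,) + x.shape)
    for n in range(n_steps):
        beta = sigma * rng.standard_normal(x.shape)
        # Position update uses current velocity, current force, and the new noise
        x_new = x + b * dt * v + (b * dt**2 / (2.0 * mass)) * f + (b * dt / (2.0 * mass)) * beta
        f_new = force(x_new)
        # Velocity update averages old (damped) and new forces, plus the same noise term
        v = a * v + (dt / (2.0 * mass)) * (a * f + f_new) + (b / mass) * beta
        x, f = x_new, f_new
        traj[n] = x
    return traj, v

# 2000 independent 1D harmonic oscillators, U = x**2 / 2 (k = m = kT = 1).
rng = np.random.default_rng(42)
n_particles = 2000
traj, v_final = gjf_langevin(lambda x: -x,
                             rng.standard_normal(n_particles), rng.standard_normal(n_particles),
                             mass=1.0, alpha=1.0, kT=1.0, dt=0.2, n_steps=1500, rng=rng)
mean_x2 = float(np.mean(traj[500:] ** 2))  # equipartition predicts <x^2> = kT / k = 1
print(mean_x2)
```

Checking a configurational average such as ⟨x²⟩ against its exact value, at several timesteps, is the kind of statistical-accuracy test described in the benchmarking protocols below.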

Table 3: Performance Comparison of MD Software and Algorithms

| Software | GPU Support | Key Strengths | Specialized Integrators | Performance Characteristics |

|---|---|---|---|---|

| GROMACS | Yes [6] [13] | High performance MD; comprehensive analysis [13] | Leap-frog (default) and velocity Verlet integrators; LINCS/SETTLE constraints [6] | Optimized for CPU and GPU; efficient parallelization [6] |

| AMBER | Yes [6] [13] | Biomolecular specialization; PMEMD [6] | Hydrogen mass repartitioning (4fs timesteps) [6] | Efficient GPU implementation; multiple GPU support mainly for replica exchange [6] |

| NAMD | Yes [13] | Fast parallel MD; CUDA acceleration [13] | Multiple timestepping; Langevin dynamics [13] | Optimized for large systems; strong scaling capabilities [13] |

| OpenMM | Yes [13] | High flexibility; Python scriptable [13] | Custom integrators; extensive Langevin options [14] [13] | Exceptional GPU performance; highly customizable [13] |

| CHARMM | Yes [13] | Comprehensive force field coverage [12] [13] | Drude polarizable model support [12] | Broad biomolecular applicability; polarizable simulations [12] |

Practical Considerations for Integration Timesteps

A critical practical consideration for classical MD is the maximum stable integration timestep, which directly limits the accessible simulation timescales. A common way to extend the timestep is hydrogen mass repartitioning (HMR). Hydrogen masses are increased, and the masses of the heavy atoms they are bonded to are reduced by the same amount, so the total mass is unchanged. Slowing the fastest hydrogen motions in this way, usually together with constraints on bonds to hydrogen, enables 4 femtosecond timesteps instead of the conventional 2 femtoseconds [6]. The technique can be applied with tools such as ParmEd (distributed with AmberTools). It roughly doubles simulation throughput without significant accuracy loss for many biological systems [6].
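The bookkeeping behind HMR is simple enough to sketch in plain Python. This is an illustrative sketch, not ParmEd's code. ParmEd exposes this operation as its `HMassRepartition` action, and its options should be checked in the ParmEd documentation. The target hydrogen mass of 3.024 Da and the methanol example are assumptions chosen for illustration.

```python
def repartition_hydrogen_mass(masses, bonds, is_hydrogen, new_h_mass=3.024):
    """Hydrogen mass repartitioning (sketch).

    masses: per-atom masses in Da; bonds: (i, j) index pairs; is_hydrogen: per-atom flags.
    Each hydrogen bonded to a heavy atom is set to new_h_mass, and the added mass is
    subtracted from that heavy atom, so the total mass of the molecule is unchanged.
    """
    new = list(masses)
    done = set()  # guard so a hydrogen is never repartitioned twice
    for i, j in bonds:
        for h, heavy in ((i, j), (j, i)):
            if is_hydrogen[h] and not is_hydrogen[heavy] and h not in done:
                delta = new_h_mass - masses[h]
                new[h] = new_h_mass
                new[heavy] -= delta
                done.add(h)
    return new

# Methanol, CH3OH. Atom order: C, O, H1, H2, H3 (on C), H4 (on O).
masses = [12.011, 15.999, 1.008, 1.008, 1.008, 1.008]
is_h = [False, False, True, True, True, True]
bonds = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 5)]
new_masses = repartition_hydrogen_mass(masses, bonds, is_h)
print([round(m, 3) for m in new_masses])  # [5.963, 13.983, 3.024, 3.024, 3.024, 3.024]
```

Because only the mass distribution changes, the potential energy surface and total mass are untouched. Kinetic properties such as diffusion rates, however, can shift slightly. This is why HMR is usually reserved for sampling rather than for precise dynamical observables.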

Experimental Protocols and Benchmarking Methodologies

Standardized Benchmarking Approaches

Robust comparison of MD algorithms requires standardized benchmarking protocols. Best practices include:

Performance Evaluation: Assessing parallel efficiency by comparing the measured speedup on N CPUs with the ideal, 100% efficient speedup (the speed on 1 CPU × N) [6]. This reveals whether additional computational resources actually improve performance or merely introduce overhead.

Statistical Accuracy Assessment: Evaluating how well integrators reproduce statistical averages, velocity and position autocorrelation functions, and thermodynamic properties across different timesteps [14].

Open-Source Validation Framework: Implementing integrators within maintained open-source packages like ESPResSo, with automated Python tests scripted by independent researchers to ensure objectivity, reusability, and maintenance of implementations [14].
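Two of the quantities named in these protocols, parallel efficiency and normalized autocorrelation functions, can be computed with small NumPy helpers. This is a minimal sketch with hypothetical function names. The timing numbers in the first usage line are made up for illustration. The AR(1) series is synthetic, chosen because its exact autocorrelation, φ^k, is known.

```python
import numpy as np

def parallel_efficiency(perf_1, perf_n, n):
    """Measured throughput on n CPUs / (n * throughput on 1 CPU); 1.0 = perfect scaling.
    Throughput can be any rate, e.g. ns/day."""
    return perf_n / (n * perf_1)

def autocorrelation(series, max_lag):
    """Normalized autocorrelation C(k) / C(0) for lags k = 0..max_lag (mean removed).
    Applied to a velocity-component time series, this is the normalized VACF."""
    x = np.asarray(series, dtype=float)
    x = x - x.mean()
    n = len(x)
    c = np.array([np.dot(x[: n - k], x[k:]) / (n - k) for k in range(max_lag + 1)])
    return c / c[0]

# Hypothetical timings: 10 ns/day on 1 CPU, 64 ns/day on 8 CPUs.
eff = parallel_efficiency(perf_1=10.0, perf_n=64.0, n=8)
print(eff)  # 0.8

# Synthetic AR(1) series x_t = phi * x_{t-1} + noise, exact autocorrelation phi**k.
rng = np.random.default_rng(1)
phi, n_samples = 0.9, 100_000
series = np.empty(n_samples)
series[0] = 0.0
for t in range(1, n_samples):
    series[t] = phi * series[t - 1] + rng.standard_normal()
acf = autocorrelation(series, max_lag=5)
print(acf[:3])
```

When comparing integrators, one would compute the same autocorrelation from trajectories run at several timesteps and check how far each drifts from a small-timestep reference.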

Research Reagent Solutions: Essential Computational Tools

Table 4: Essential Research Tools for MD Method Development

| Tool Category | Specific Solutions | Function | Application Context |

|---|---|---|---|

| MD Simulation Engines | GROMACS, AMBER, NAMD, OpenMM, CHARMM [13] | Core simulation execution; algorithm implementation | Biomolecular dynamics; method development; production simulations [6] [13] |

| Force Fields | CHARMM36, Amber ff19SB, Drude Polarizable, AMOEBA [12] | Define potential energy functions and parameters | System-specific accuracy; polarizable vs. additive simulations [12] |

| Enhanced Sampling Plugins | PLUMED, Colvars | Collective variable analysis and bias implementation | Free energy calculations; rare event sampling [10] |

| Analysis Packages | MDTraj, MDAnalysis, VMD, CPPTRAJ | Trajectory analysis; visualization; property calculation | Result interpretation; publication-quality figures [13] |

| Benchmarking Suites | ESPResSo tests [14] | Integrator validation; performance profiling | Method comparison; stability assessment [14] |

Classical MD simulations face fundamental constraints in force field accuracy and biomolecular complexity that directly impact their predictive power for drug discovery applications. Additive force fields, while computationally efficient, lack explicit polarization effects critical for accurate electrostatic modeling in binding interactions. Polarizable force fields address this limitation but introduce substantial computational overhead. Meanwhile, the timescales of biomolecular recognition processes often exceed what conventional MD can reliably access, necessitating enhanced sampling methods that introduce their own approximations and potential biases.

The comparative analysis of integration algorithms reveals ongoing trade-offs between numerical stability, statistical accuracy, and computational efficiency. Langevin dynamics integrators represent solvent implicitly, but they vary significantly in how faithfully they reproduce thermodynamic properties and in their stability at larger timesteps. For researchers and drug development professionals, these limitations call for careful methodological choices based on the specific scientific question: force field selection, sampling algorithms, and integration methods should be tailored to the particular biomolecular system and properties of interest. As methodological developments continue, particularly in machine learning-assisted approaches and increasingly accurate polarizable force fields, the field moves toward overcoming these persistent challenges in classical MD simulation.

Evolution from Single-Target to Multi-Target Therapeutic Strategies

For decades, the "one drug, one target" paradigm dominated drug discovery, fueled by the belief that highly selective medicines would offer optimal efficacy and safety profiles. This approach revolutionized treatment for numerous diseases with single etiological causes, such as targeting specific pathogens in infectious diseases. However, the limitations of single-target therapies became increasingly apparent when applied to complex, multifactorial diseases like cancer, neurological disorders, and autoimmune conditions [15]. The therapeutic landscape is now undergoing a fundamental transformation toward multi-target strategies that acknowledge and address the complex network biology underlying most chronic diseases [16].

This evolution stems from recognizing that disease systems characterized by dysregulated biological pathways often prove resilient to single-target interventions. Biological systems frequently utilize redundant mechanisms or activate compensatory pathways that bypass a single inhibited target, leading to limited efficacy and emergent drug resistance [16]. Multi-target therapeutics represent a paradigm shift designed to overcome these limitations by attacking disease systems on multiple fronts simultaneously, resulting in enhanced efficacy and reduced vulnerability to adaptive resistance [17] [16].

The comparative analysis presented in this guide examines the scientific foundation, experimental evidence, and practical implementation of both therapeutic strategies, providing researchers and drug development professionals with a framework for selecting appropriate targeting approaches based on disease complexity and therapeutic objectives.

Theoretical Foundations: From Single-Target Precision to Multi-Target Network Pharmacology

The Single-Target Strategy

The single-target approach aims to combat disease by selectively attacking specific genes, proteins, or pathways responsible for pathological processes. This strategy operates on the principle that high selectivity for individual molecular targets minimizes off-target effects and reduces harm to healthy cells, thereby maximizing therapeutic safety [17]. This approach has produced remarkable successes, particularly for diseases with well-defined, singular pathological drivers, such as trastuzumab targeting HER2 in breast cancer and infliximab targeting TNF-α in autoimmune disorders [18].

However, the single-target strategy demonstrates significant limitations when applied to complex diseases with multifaceted etiologies. In Alzheimer's disease (AD), for instance, multiple hypotheses—including amyloid cascade, tau pathology, neuroinflammation, mitochondrial dysfunction, and cholinergic deficit—have been proposed, each supported by substantial evidence yet insufficient individually to explain the full disease spectrum [15]. Similar complexity exists in oncology, where intratumor heterogeneity, Darwinian selection, and compensatory pathway activation frequently render single-target therapies ineffective against advanced cancers [17].

The Multi-Target Strategy

Multi-target strategies encompass two primary modalities: combination therapies employing two or more drugs with different mechanisms of action, and multi-target-directed ligands (MTDLs) consisting of single chemical entities designed to modulate multiple targets simultaneously [17]. The theoretical foundation for both approaches rests on network pharmacology principles, which recognize that most diseases arise from dysregulated biological networks rather than isolated molecular defects [16].

Multi-target therapeutics offer several theoretical advantages over single-target approaches. By simultaneously modulating multiple pathways, they can: (1) produce synergistic effects unattainable with single agents; (2) overcome clonal heterogeneity in complex diseases; (3) reduce the probability of drug resistance developing; (4) enable lower doses of individual components, potentially reducing side effects; and (5) in the case of MTDLs, provide more predictable pharmacokinetic profiles than drug combinations [17] [16].

The rationale for multi-targeting is particularly compelling for diseases like cancer, where "the ability of cancer cells to develop resistance against traditional treatments, and the growing number of drug-resistant cancers highlights the need for more research and the development of new treatments" [17]. Similarly, in Alzheimer's disease, the multifactorial hypothesis proposes that different causes and mechanisms underlie different patient populations, with multiple distinct pathological processes contributing to individual cases [15].

Comparative Analysis: Efficacy Across Disease Models

Experimental Models and Their Translational Value

Preclinical evaluation of therapeutic strategies employs diverse disease models that recapitulate specific aspects of human pathology. The table below summarizes key experimental models used in neurology and oncology research, with their respective translational applications:

Table 1: Preclinical Models for Evaluating Therapeutic Strategies

| Disease Area | Experimental Model | Key Applications | Translational Value |

|---|---|---|---|

| Epilepsy | Maximal electroshock seizure (MES) test | Identify efficacy against generalized tonic-clonic seizures | Predicts efficacy against generalized seizure types [19] |

| | Subcutaneous pentylenetetrazole (PTZ) test | Identify efficacy against nonconvulsive seizures | Screening for absence and myoclonic seizure protection [19] |

| | 6-Hz psychomotor seizure test | Identify efficacy against difficult-to-treat focal seizures | Model of therapy-resistant epilepsy [19] |

| | Intrahippocampal kainate model | Study spontaneous recurrent seizures in chronic epilepsy | Models mesial temporal lobe epilepsy with hippocampal sclerosis [19] |

| | Kindling model | Investigate epileptogenesis and chronic seizure susceptibility | Models progressive epilepsy development [19] |

| Cancer | Cell-based phenotypic assays | Screen for multi-target effects in disease-relevant context | Preserves pathway interactions for combination discovery [16] |

| | Xenograft models | Evaluate antitumor efficacy in vivo | Assesses tumor growth inhibition in physiological environment [17] |

Quantitative Comparison of Therapeutic Efficacy

Direct comparison of single-target versus multi-target compounds in standardized experimental models reveals distinct efficacy profiles, particularly in challenging disease models. The following table summarizes quantitative efficacy data (ED50 values) for representative antiseizure medications across multiple seizure models:

Table 2: Efficacy Profiles of Single-Target vs. Multi-Target Antiseizure Medications [19]

| Compound | Primary Targets | MES Test ED50 (mg/kg) | s.c. PTZ Test ED50 (mg/kg) | 6-Hz Test ED50 (mg/kg, 44 mA) | Amygdala Kindled Seizures ED50 (mg/kg) |

|---|---|---|---|---|---|

| Multi-Target ASMs | | | | | |

| Cenobamate | GABAA receptors, persistent Na+ currents | 9.8 | 28.5 | 16.4 | 16.5 |

| Valproate | GABA synthesis, NMDA receptors, ion channels | 271 | 149 | 310 | ~330 |

| Topiramate | GABAA & NMDA receptors, ion channels | 33 | NE | 13.3 | - |

| Single-Target ASMs | | | | | |

| Phenytoin | Voltage-activated Na+ channels | 9.5 | NE | NE | 30 |

| Carbamazepine | Voltage-activated Na+ channels | 8.8 | NE | NE | 8 |

| Lacosamide | Voltage-activated Na+ channels | 4.5 | NE | 13.5 | - |

| Ethosuximide | T-type Ca2+ channels | NE | 130 | NE | NE |

ED50 = Median effective dose; NE = No efficacy at doses below the toxicity threshold; - = not reported

The data reveal that multi-target antiseizure medications (ASMs) generally show broader efficacy across diverse seizure models than single-target ASMs. Cenobamate stands out. Its dual mechanism enhances GABAergic inhibition and blocks persistent sodium currents, and it shows robust efficacy across multiple models. This includes the therapy-resistant 6-Hz seizure test (44 mA), where many single-target ASMs fail [19]. This pattern supports the therapeutic advantage of multi-targeting for complex neurological conditions such as treatment-resistant epilepsy.
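ED50 values like those in Table 2 come from quantal data: the fraction of animals protected at each dose. As an illustration of what the statistic means, the sketch below fits a logistic model in log-dose by maximum likelihood and reads off the dose giving 50% protection. This is an assumption for illustration only. Classical antiseizure screening often used probit-based procedures instead, and the source does not state how the tabulated values were derived. The input data are synthetic and noise-free, generated from a known ED50 of 10 mg/kg.

```python
import numpy as np

def fit_ed50(doses, n_tested, n_protected, iters=50):
    """Estimate ED50 from quantal dose-response data (sketch).

    Model: P(protected | dose) = 1 / (1 + exp(-(b0 + b1 * log10(dose)))),
    fitted by maximum likelihood with Newton-Raphson (equivalently IRLS).
    ED50 is the dose where P = 0.5, i.e. log10(ED50) = -b0 / b1.
    """
    x = np.log10(np.asarray(doses, dtype=float))
    n = np.asarray(n_tested, dtype=float)
    k = np.asarray(n_protected, dtype=float)
    X = np.column_stack([np.ones_like(x), x])
    beta = np.zeros(2)
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        score = X.T @ (k - n * p)                         # gradient of the log-likelihood
        info = X.T @ (X * (n * p * (1.0 - p))[:, None])   # Fisher information matrix
        beta = beta + np.linalg.solve(info, score)
    b0, b1 = beta
    return 10.0 ** (-b0 / b1), beta

# Synthetic, noise-free data from a known ED50 of 10 mg/kg and slope 3 per log10 unit.
doses = np.array([2.5, 5.0, 10.0, 20.0, 40.0])
true_p = 1.0 / (1.0 + np.exp(-3.0 * (np.log10(doses) - 1.0)))
n_tested = np.full(doses.shape, 1000.0)
ed50, beta = fit_ed50(doses, n_tested, n_tested * true_p)
print(round(float(ed50), 3))  # 10.0
```

Real datasets have small group sizes, so a confidence interval on the ED50 (for example from the inverse Fisher information) matters as much as the point estimate when comparing compounds.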

In oncology, similar advantages emerge for multi-target approaches. Combination therapies have demonstrated the ability to improve treatment outcomes, produce synergistic anticancer effects, overcome clonal heterogeneity, and reduce the probability of drug resistance development [17]. The efficacy advantage is particularly evident for multi-targeted kinase inhibitors like sunitinib and sorafenib, which simultaneously inhibit multiple pathways driving tumor growth and angiogenesis [16].

Experimental Approaches for Multi-Target Drug Discovery

Methodologies for Multi-Target Therapeutic Development

The discovery and development of multi-target therapeutics employs distinct methodological approaches compared to traditional single-target drug discovery:

Multi-Target Drug Discovery Workflow

Key Methodological Protocols

Cell-Based Phenotypic Screening for Combination Effects

Purpose: To identify synergistic drug combinations in disease-relevant cellular models that preserve pathway interactions [16].

Workflow:

- Cell Model Selection: Choose disease-relevant cell lines (e.g., cancer cell lines with specific driver mutations, neuronal cultures with disease-relevant pathology)

- Compound Library Preparation: Assemble a diverse collection of compounds targeting different pathways relevant to the disease

- Matrix Combination Screening: Test compounds in pairwise combinations across multiple concentration ratios (e.g., 3×3 or 5×5 matrices)

- Viability/Response Assessment: Measure treatment effects using appropriate endpoints (cell viability, apoptosis, functional readouts)

- Synergy Analysis: Calculate combination indices using established methods (Chou-Talalay, Bliss independence, Loewe additivity)

- Hit Validation: Confirm synergistic combinations in secondary assays and additional models

Critical Considerations:

- Include appropriate single-agent controls for all tested concentrations

- Use multiple effect levels (e.g., IC50, IC75, IC90) for robust synergy assessment

- Employ cell models that maintain disease-relevant pathway interactions

- Consider temporal aspects of combination effects (simultaneous vs. sequential dosing)
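The synergy-analysis step above can be sketched for two of the named reference models. Bliss independence predicts a combined fraction affected of E_A + E_B - E_A·E_B. The Chou-Talalay combination index is CI = d_A/D_A + d_B/D_B, where D_x is the single-agent dose giving the same effect, obtained from the median-effect equation D = D_m·(fa/(1 - fa))^(1/m). The function names and all example effect values are hypothetical. Dedicated synergy software adds curve fitting and statistics that this sketch omits.

```python
import numpy as np

def bliss_expected(fa_a, fa_b):
    """Bliss independence: expected fraction affected if A and B act independently."""
    return fa_a + fa_b - fa_a * fa_b

def bliss_excess(fa_matrix, fa_a, fa_b):
    """Observed minus Bliss-expected effect over a dose matrix.
    fa_matrix[i][j]: observed fraction affected at dose i of A and dose j of B.
    fa_a[i], fa_b[j]: single-agent fractions affected at the same doses.
    Positive values suggest synergy, negative values antagonism."""
    expected = bliss_expected(np.asarray(fa_a)[:, None], np.asarray(fa_b)[None, :])
    return np.asarray(fa_matrix) - expected

def median_effect_dose(fa, dm, m):
    """Median-effect equation solved for dose: D = Dm * (fa / (1 - fa))**(1 / m)."""
    return dm * (fa / (1.0 - fa)) ** (1.0 / m)

def combination_index(d_a, d_b, fa, dm_a, m_a, dm_b, m_b):
    """Chou-Talalay CI = d_A / D_A(fa) + d_B / D_B(fa); < 1 synergy, = 1 additive, > 1 antagonism."""
    return d_a / median_effect_dose(fa, dm_a, m_a) + d_b / median_effect_dose(fa, dm_b, m_b)

# Hypothetical 2 x 2 matrix screen.
fa_a = [0.2, 0.5]
fa_b = [0.3, 0.6]
observed = [[0.50, 0.75], [0.70, 0.90]]
excess = bliss_excess(observed, fa_a, fa_b)
print(np.round(excess, 2))

# Hypothetical CI: half of each drug's single-agent ED50 together reaches 50% effect.
ci = combination_index(0.5, 0.5, fa=0.5, dm_a=1.0, m_a=1.0, dm_b=1.0, m_b=1.0)
print(ci)  # 1.0, i.e. additive
```

Computing the excess across every cell of the matrix, rather than at a single dose pair, is what lets the screen separate dose-ratio-dependent synergy from a single noisy well.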

Framework Combination Approach for MTDL Design

Purpose: To rationally design single chemical entities with multi-target activity by combining structural elements from known active compounds [17].

Workflow:

- Target Selection: Identify therapeutically relevant target combinations based on disease biology

- Pharmacophore Identification: Determine key structural features required for activity at each target

- Molecular Design:

- Fusing: Combining distinct pharmacophoric moieties with zero-length linker or minimal spacer

- Merging: Integrating pharmacophores into a single molecular scaffold with retained activity

- Linking: Connecting pharmacophores via cleavable or non-cleavable linkers

- Synthesis & Characterization: Chemical synthesis and in vitro profiling against intended targets

- ADMET Optimization: Refine structures to achieve favorable pharmacokinetics and safety profiles

Critical Considerations:

- Balance molecular complexity with drug-like properties

- Consider potential for target-driven polypharmacology versus designed multi-targeting

- Evaluate potential for off-target effects through comprehensive selectivity profiling

- Optimize for balanced potency across multiple targets when therapeutically desirable

The Scientist's Toolkit: Essential Research Reagents

Table 3: Key Research Reagents for Multi-Target Therapeutic Development

| Reagent Category | Specific Examples | Research Applications | Function in Experimental Design |

|---|---|---|---|

| Cell-Based Assay Systems | Primary neuronal cultures, Patient-derived cancer cells, Recombinant cell lines | Disease modeling, Combination screening, Mechanism studies | Provide physiologically relevant context for evaluating multi-target effects [16] |

| Pathway-Specific Modulators | Kinase inhibitors, Receptor antagonists, Enzyme activators/inhibitors | Target validation, Combination discovery, Pathway analysis | Probe specific biological pathways to identify productive target combinations [16] |

| Phenotypic Readout Reagents | Viability dyes (MTT, Resazurin), Apoptosis markers (Annexin V), High-content imaging reagents | Efficacy assessment, Mechanism elucidation, Toxicity evaluation | Quantify therapeutic effects in complex biological systems [16] |

| Compound Libraries | Known bioactive collections, Targeted kinase inhibitor sets, Natural product extracts | Combination screening, Polypharmacology profiling, Hit identification | Source of chemical tools for systematic combination searches [16] |

| Analytical Tools for Synergy | Combination index calculators, Bliss independence analysis software, Response surface methodology | Data analysis, Synergy quantification, Hit prioritization | Differentiate additive, synergistic, and antagonistic drug interactions [16] |


Clinical Translation: From Bench to Bedside

Clinical Evidence Supporting Multi-Target Strategies

Clinical studies across therapeutic areas provide compelling evidence for the advantages of multi-target approaches in complex diseases. In epilepsy treatment, cenobamate—a recently approved multi-target ASM—has demonstrated superior efficacy in randomized controlled trials with treatment-resistant focal epilepsy patients, far surpassing the efficacy of other newer ASMs [19]. This clinical success contrasts with the failure of padsevonil, an intentionally designed dual-target ASM (targeting SV2A and GABAA receptors) that failed to separate from placebo in phase IIb trials despite promising preclinical results [19].

In oncology, the advantages of multi-target strategies are well-established. Combination therapies are now standard of care for most cancers, with regimens combining cytotoxics, targeted agents, and immunotherapies demonstrating improved outcomes compared to single-agent approaches [17]. The development of multi-target kinase inhibitors (sunitinib, sorafenib, pazopanib) and antibody-drug conjugates (trastuzumab emtansine) represents successful translation of multi-target principles into clinical practice [17] [16].

For Alzheimer's disease, despite the continued dominance of single-target approaches in clinical development, the repeated failures of amyloid-focused therapies have strengthened the argument for multi-target strategies. The recognition that "each AD case may have a different combination of etiological factors/insults that cause the onset of AD in this individual" supports patient stratification and combination approaches tailored to individual patient pathology [15].

Advantages and Challenges in Clinical Development

Multi-target therapeutic strategies present unique considerations in clinical development:

Advantages:

- Overcoming Resistance: Simultaneous targeting of multiple pathways reduces the probability of resistance development, particularly relevant in oncology and antimicrobial therapy [17]

- Enhanced Efficacy: Synergistic interactions can produce therapeutic effects unattainable with single agents [16]

- Improved Safety Profiles: Lower doses of individual components may reduce specific side effects while maintaining efficacy [17]

- Broader Applicability: Address disease heterogeneity by targeting multiple drivers simultaneously [15]

Challenges:

- Development Complexity: Identifying optimal target combinations and dose ratios requires extensive preclinical investigation [16]

- Regulatory Hurdles: Combination therapies may require demonstration of each component's contribution, while MTDLs face characterization challenges [17]

- Clinical Trial Design: Traditional trial designs may be suboptimal for evaluating multi-target approaches, necessitating adaptive designs and biomarker-stratified populations [15]

- Pharmacokinetic Optimization: Achieving balanced exposure at multiple targets with different affinity requirements presents formulation challenges [17]

Future Perspectives and Emerging Trends

The evolution from single-target to multi-target therapeutic strategies continues to advance, with several emerging trends shaping future development:

Artificial Intelligence in Multi-Target Drug Discovery: AI and machine learning are increasingly applied to identify productive target combinations, predict polypharmacological profiles, and design optimized MTDLs. These approaches can analyze vast biological datasets to uncover non-obvious target relationships and predict synergistic interactions [20].

Patient Stratification for Multi-Target Therapies: Recognition that different patient subpopulations may benefit from distinct target combinations is driving precision medicine approaches in multi-target therapy development. Biomarker-driven patient selection will likely enhance the success rates of both combination therapies and MTDLs [15].

Advanced Therapeutic Modalities: New modalities beyond small molecules and antibodies are expanding the multi-target toolkit. Bispecific antibodies, antibody-drug conjugates, proteolysis-targeting chimeras (PROTACs), and cell therapies with engineered signaling logic all represent technological advances enabling sophisticated multi-target interventions [18] [17].

Regulatory Science Evolution: Regulatory agencies are developing frameworks to accommodate the unique characteristics of multi-target therapies, including combination products and complex MTDLs. This evolution is critical for efficient translation of multi-target approaches to clinical practice [21].

The continued integration of network pharmacology, systems biology, and computational modeling into drug discovery pipelines promises to accelerate the development of optimized multi-target therapeutics for complex diseases. As these approaches mature, multi-target strategies are positioned to become the dominant paradigm for treating cancer, neurological disorders, and other complex conditions where single-target interventions have demonstrated limited success.

The field of molecular simulation is undergoing a fundamental transformation, moving from purely classical Newtonian mechanics toward hybrid and fully quantum mechanical approaches. This shift is largely driven by the limitations of classical molecular dynamics (MD) in addressing complex quantum phenomena and the simultaneous emergence of quantum computing as a viable computational platform. Classical MD simulations have established themselves as a powerful tool in biomedical research, offering critical insights into intricate biomolecular processes, structural flexibility, and molecular interactions, playing a pivotal role in therapeutic development [22]. These simulations leverage rigorously tested force fields in software packages such as GROMACS, DESMOND, and AMBER, which have demonstrated consistent performance across diverse biological applications [22].

However, traditional MD faces significant challenges in accurately simulating quantum effects, dealing with the computational complexity of large systems, and achieving sufficient sampling of conformational spaces, particularly for complex biomolecules like intrinsically disordered proteins (IDPs) [1]. The integration of machine learning and deep learning technologies has begun to address some limitations of classical MD, but quantum computing promises a more fundamental solution by leveraging quantum mechanical principles directly in computation [22] [23]. This comparative analysis examines the foundational differences, current capabilities, and future potential of classical Newtonian versus quantum mechanical approaches to molecular simulation, with particular emphasis on their application in drug development and biomolecular research.

Foundational Principles: Classical vs. Quantum Computational Frameworks

Classical Newtonian Dynamics in Molecular Simulation

Classical molecular dynamics operates on well-established Newtonian physical principles, where atomic motions are determined by numerical integration of Newton's equations of motion. The core of classical MD lies in its force fields—mathematical representations of potential energy surfaces that describe how atoms interact. These force fields typically include terms for bond stretching, angle bending, torsional rotations, and non-bonded interactions (van der Waals and electrostatic forces) [22]. The CHARMM36 and GAFF2 force fields represent widely adopted parameter sets for biomolecular and ligand systems respectively [24].

The mathematical foundation relies on Hamilton's equations or the Lagrangian formulation of mechanics, with time evolution governed by integration algorithms such as Verlet, Leap-frog, or Velocity Verlet. These algorithms preserve the symplectic structure of Hamiltonian mechanics, enabling stable long-time integration. A critical aspect involves maintaining energy conservation and controlling numerical errors through time step selection, typically 1-2 femtoseconds for biological systems, constrained by the highest frequency vibrations (C-H bond stretches) [24].
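The link between the fastest vibrations and the timestep can be checked with a short calculation. A C-H stretch near 3000 cm⁻¹ (an assumed representative value) has a period of about 11 fs. For a harmonic oscillator, velocity Verlet is stable only when ωΔt < 2, which for this mode means Δt below roughly 3.5 fs. Accuracy, not just stability, pushes practical timesteps lower still. The sketch runs velocity Verlet on a unit-mass harmonic oscillator with that frequency. The helper names are hypothetical.

```python
import math

C_CM_PER_S = 2.99792458e10  # speed of light in cm/s

def vibrational_period_fs(wavenumber_cm):
    """Period (fs) of a vibration with the given wavenumber (cm^-1)."""
    return 1.0 / (C_CM_PER_S * wavenumber_cm) * 1e15

def velocity_verlet_harmonic(omega, dt, n_steps, x0=1.0, v0=0.0):
    """Velocity Verlet for a unit-mass harmonic oscillator (acceleration = -omega**2 * x).
    Returns the maximum relative deviation of the total energy over the run."""
    x, v = x0, v0
    a = -omega**2 * x
    e0 = 0.5 * v**2 + 0.5 * omega**2 * x**2
    max_dev = 0.0
    for _ in range(n_steps):
        v_half = v + 0.5 * dt * a      # half kick with the current force
        x = x + dt * v_half            # full drift
        a = -omega**2 * x              # force at the new position
        v = v_half + 0.5 * dt * a      # second half kick
        e = 0.5 * v**2 + 0.5 * omega**2 * x**2
        max_dev = max(max_dev, abs(e - e0) / e0)
    return max_dev

period = vibrational_period_fs(3000.0)   # about 11.1 fs
omega = 2.0 * math.pi / period           # angular frequency, rad/fs
dt_max = 2.0 / omega                     # stability limit omega * dt < 2, about 3.5 fs
dev_1fs = velocity_verlet_harmonic(omega, dt=1.0, n_steps=10_000)
dev_4fs = velocity_verlet_harmonic(omega, dt=4.0, n_steps=100)
print(round(period, 2), round(dt_max, 2), dev_1fs, dev_4fs)
```

At 1 fs, the energy error oscillates but stays bounded, at roughly (ωΔt)²/4 (about 8% for this stiff mode). This bounded behavior is characteristic of symplectic integrators. Beyond the stability limit, the energy grows exponentially, which is why constraining bonds to hydrogen (or repartitioning their mass) is what allows 2 fs and longer steps in practice.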

Quantum Mechanical Approaches and Quantum Computing Foundations

Quantum approaches to molecular simulation operate on fundamentally different principles, representing systems through wavefunctions rather than precise atomic positions and velocities. Where classical MD approximates electrons through parameterized force fields, quantum methods explicitly treat electronic degrees of freedom, enabling accurate modeling of bond formation/breaking, charge transfer, and quantum tunneling effects.

Quantum computing adds further concepts, namely qubit superposition, entanglement, and quantum interference, that may offer exponential speedups for specific computational tasks relevant to molecular simulation. Quantum algorithms for chemistry, such as the variational quantum eigensolver (VQE) and quantum phase estimation (QPE), aim to solve the electronic Schrödinger equation more efficiently than classical computers. These approaches map molecular Hamiltonians to qubit representations and use quantum circuits to prepare and measure molecular wavefunctions.
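The variational loop at the heart of VQE can be illustrated with a purely classical statevector simulation of one qubit. The sketch prepares the trial state R_y(θ)|0⟩ and evaluates the energy ⟨ψ(θ)|H|ψ(θ)⟩. It then updates θ using the parameter-shift gradient [E(θ + π/2) - E(θ - π/2)]/2, which is exact for this rotation gate. The Hamiltonian coefficients are hypothetical, and no quantum hardware or Qiskit API is involved. Real VQE runs use many qubits, Hamiltonians mapped from molecular integrals, and noisy estimates of the energy from repeated measurements.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])   # Pauli X
Z = np.array([[1.0, 0.0], [0.0, -1.0]])  # Pauli Z

def ansatz(theta):
    """|psi(theta)> = Ry(theta)|0> = [cos(theta/2), sin(theta/2)] (real amplitudes)."""
    return np.array([np.cos(theta / 2.0), np.sin(theta / 2.0)])

def energy(theta, H):
    """Expectation value <psi(theta)| H |psi(theta)> from the exact statevector."""
    psi = ansatz(theta)
    return float(psi @ H @ psi)

def vqe_1qubit(H, theta0=0.1, lr=0.2, steps=500):
    """Gradient descent on the variational energy using the parameter-shift rule."""
    theta = theta0
    for _ in range(steps):
        grad = 0.5 * (energy(theta + np.pi / 2, H) - energy(theta - np.pi / 2, H))
        theta -= lr * grad
    return theta, energy(theta, H)

H = -1.05 * Z + 0.39 * X                     # hypothetical one-qubit Hamiltonian
theta_opt, e_vqe = vqe_1qubit(H)
e_exact = float(np.linalg.eigvalsh(H)[0])    # exact ground-state energy for comparison
print(e_vqe, e_exact)
```

By the variational principle, the optimized energy can never fall below the exact ground-state energy. Here the single-parameter ansatz is expressive enough to reach it. For molecules, choosing an ansatz that is both expressive and shallow enough for current hardware is the central difficulty.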

The table below summarizes the core differences between these computational frameworks:

Table 1: Foundational Principles of Classical vs. Quantum Computational Approaches

| Aspect | Classical Newtonian MD | Quantum Mechanical Approaches |
|---|---|---|
| Theoretical Foundation | Newton's equations of motion; Empirical force fields | Schrödinger equation; Electronic structure theory |
| System Representation | Atomic coordinates & velocities | Wavefunctions & density matrices |
| Key Approximation | Born-Oppenheimer approximation; Point charges | Basis set truncation; Active space selection |
| Computational Scaling | O(N) with short-range cutoffs; O(N log N) with particle-mesh Ewald; O(N²) for naive all-pairs electrostatics | O(N³) to O(N⁴) for mean-field methods (Hartree-Fock, DFT), up to exponential for exact (full CI) methods on classical computers |
| Time Evolution | Numerical integration (Verlet algorithms) | Time-dependent Schrödinger equation |
| Treatment of Electrons | Implicit via force field parameters | Explicit quantum mechanical particles |
| Dominant Software | GROMACS, AMBER, DESMOND [22] | Q-Chem, PySCF, Qiskit Nature |

Comparative Performance Analysis: Integration Algorithms and Sampling Efficiency

Classical MD Integration Algorithms and Performance Metrics

Classical MD integration algorithms balance numerical accuracy, energy conservation, and computational efficiency. The most widely used algorithms employ a symmetric decomposition of the classical time-evolution operator, preserving the symplectic structure of Hamiltonian mechanics. The following table compares popular integration schemes used in production MD simulations:

Table 2: Performance Comparison of Classical MD Integration Algorithms

| Algorithm | Order of Accuracy | Typical Time Step (fs) | Energy Conservation | Memory Requirements | Key Applications |
|---|---|---|---|---|---|
| Verlet | 2nd order | 1-2 | Excellent | Low (stores r(t-Δt), r(t)) | General biomolecular MD [24] |
| Leap-frog | 2nd order | 1-2 | Very good | Low (stores v(t-Δt/2), r(t)) | Large-scale production MD |
| Velocity Verlet | 2nd order | 1-2 | Excellent | Medium (stores r(t), v(t), a(t)) | Path-integral MD; Thermostatted systems |
| Beeman | 3rd order | 2-3 | Good | High (multiple previous steps) | Systems with velocity-dependent forces |
| Langevin | Scheme-dependent (1st-2nd order) | 2-4 | Not conserved by design (samples the canonical ensemble) | Low | Implicit solvent; Enhanced sampling |
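The Langevin row differs in kind from the others: friction and noise are added deliberately, so total energy is not conserved and the trajectory instead samples the canonical (NVT) ensemble. The sketch below shows this with the BAOAB splitting, one of several Langevin discretizations (named plainly here; MD packages may implement different schemes), applied to a harmonic well in reduced units. The check is statistical: the time-averaged ⟨x²⟩ should approach kT/k. Parameter values are illustrative.

```python
import numpy as np


def baoab_langevin(x0, v0, force, mass, gamma, kT, dt, n_steps, seed=0):
    """Langevin dynamics with the BAOAB splitting; returns the sampled positions.

    B: half kick, A: half drift, O: exact Ornstein-Uhlenbeck velocity update
    (friction plus noise), A: half drift, B: half kick.
    """
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(n_steps)
    c1 = np.exp(-gamma * dt)  # fraction of velocity retained per step
    c2 = np.sqrt((1.0 - c1**2) * kT / mass)  # matching noise amplitude
    x, v = float(x0), float(v0)
    f = force(x)
    xs = np.empty(n_steps)
    for i in range(n_steps):
        v += 0.5 * dt * f / mass  # B
        x += 0.5 * dt * v  # A
        v = c1 * v + c2 * noise[i]  # O
        x += 0.5 * dt * v  # A
        f = force(x)
        v += 0.5 * dt * f / mass  # B
        xs[i] = x
    return xs


# Harmonic well in reduced units: canonical sampling should give <x^2> = kT / k
k_spring, kT = 1.0, 1.0
xs = baoab_langevin(0.0, 0.0, lambda x: -k_spring * x, 1.0, 1.0, kT, 0.1, 200_000)
mean_sq = np.mean(xs[1_000:] ** 2)  # discard a short burn-in
```

Monitoring total energy in such a run is not meaningful; the relevant diagnostics are ensemble averages such as ⟨x²⟩ or the kinetic temperature.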

In practical applications, these algorithms enable simulations of large biomolecular systems (>100,000 atoms) for timescales reaching microseconds to milliseconds, though adequate sampling remains challenging for complex biomolecules like intrinsically disordered proteins (IDPs) [1]. Classical MD has demonstrated particular value in studying structural flexibility, molecular interactions, and their roles in drug development [22].

Enhanced Sampling and Machine Learning Integration

To address sampling limitations in conventional MD, specialized techniques have been developed that often combine classical dynamics with statistical mechanical principles. Gaussian accelerated MD (GaMD) has proven effective for enhancing conformational sampling of biomolecules while maintaining reasonable computational cost [1]. In studies of ArkA, a proline-rich IDP, GaMD successfully captured proline isomerization events, revealing that all five prolines significantly sampled the cis conformation, leading to a more compact ensemble with reduced polyproline II helix content that better aligned with experimental circular dichroism data [1].
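The GaMD boost has a simple closed form. In the published method, a harmonic boost ΔV = ½k(E − V)² is added whenever the potential V falls below a threshold E; with the "lower bound" choice E = V_max, the force constant is k = k0/(V_max − V_min) with 0 < k0 ≤ 1. The sketch below implements only that formula. The energies are hypothetical, and in practice k0 is derived from a user-chosen limit on the standard deviation of ΔV together with statistics of V collected in a preparatory run, which is omitted here.

```python
import numpy as np


def gamd_lower_bound_parameters(v_min, v_max, k0=1.0):
    """Threshold E and force constant k for the GaMD lower-bound choice E = V_max.

    k = k0 / (V_max - V_min) with 0 < k0 <= 1 keeps the energy ordering of
    the boosted surface intact.
    """
    if not 0.0 < k0 <= 1.0:
        raise ValueError("k0 must lie in (0, 1]")
    return v_max, k0 / (v_max - v_min)


def gamd_boost(potential, threshold, k):
    """Harmonic boost dV = 0.5 * k * (E - V)^2 applied only where V < E."""
    v = np.asarray(potential, dtype=float)
    return np.where(v < threshold, 0.5 * k * (threshold - v) ** 2, 0.0)


# Hypothetical potential energies (kJ/mol) from a short conventional MD run
energies = np.array([-10.0, -5.0, 0.0])
E, k = gamd_lower_bound_parameters(energies.min(), energies.max())
boosted = energies + gamd_boost(energies, E, k)
```

Here the spread between the lowest and highest energies shrinks from 10 to 5 kJ/mol while their ordering is preserved, which is how the boost flattens barriers without reshaping the landscape.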

Machine learning force fields (MLFFs) represent another significant advancement. They offer accuracy close to that of their quantum-chemical reference data at a cost far below ab initio MD (though generally above that of classical force fields), enabling large-scale simulations of complex aqueous and interfacial systems [23]. These ML-enhanced approaches make previously prohibitive simulations feasible and provide new physical insights into aqueous solutions and interfaces. For instance, MLFFs allow nanosecond-scale simulations of thousands of atoms at near-quantum-chemical accuracy, and ML-enhanced sampling helps cross large reaction barriers while exploring extensive configuration spaces [23].
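The core idea of an MLFF can be shown in one dimension: learn an energy model from reference energies and obtain forces as its exact negative gradient, so the learned force field is conservative. The sketch below is a toy under loud assumptions. A Morse curve stands in for quantum-chemical reference data, and Gaussian kernel ridge regression on a single interatomic distance stands in for the many-body descriptors and neural-network or kernel models used by production MLFFs. All names and hyperparameters are hypothetical and not taken from [23].

```python
import numpy as np


def morse_energy(r, d_e=1.0, a=1.5, r_e=1.0):
    # Stand-in for ab initio reference energies (hypothetical Morse dimer, reduced units)
    return d_e * (1.0 - np.exp(-a * (r - r_e))) ** 2


def morse_force(r, d_e=1.0, a=1.5, r_e=1.0):
    # Analytic reference force F = -dE/dr
    e = np.exp(-a * (r - r_e))
    return -2.0 * d_e * a * (1.0 - e) * e


class ToyKernelForceField:
    """1D Gaussian kernel ridge regression on the interatomic distance (illustrative)."""

    def __init__(self, length_scale=0.3, ridge=1e-8):
        self.length_scale = length_scale
        self.ridge = ridge

    def _kernel(self, r):
        diff = np.atleast_1d(np.asarray(r, dtype=float))[:, None] - self.r_train[None, :]
        return np.exp(-0.5 * (diff / self.length_scale) ** 2), diff

    def fit(self, r_train, e_train):
        self.r_train = np.asarray(r_train, dtype=float)
        k, _ = self._kernel(self.r_train)
        reg = self.ridge * np.eye(len(self.r_train))
        self.alpha = np.linalg.solve(k + reg, e_train)
        return self

    def energy(self, r):
        k, _ = self._kernel(r)
        return k @ self.alpha

    def force(self, r):
        # Exact negative gradient of the model energy: conservative by construction
        k, diff = self._kernel(r)
        dk_dr = -diff / self.length_scale**2 * k
        return -(dk_dr @ self.alpha)


r_train = np.linspace(0.7, 2.5, 30)
model = ToyKernelForceField().fit(r_train, morse_energy(r_train))
r_test = np.linspace(0.8, 2.4, 50)
energy_error = np.max(np.abs(model.energy(r_test) - morse_energy(r_test)))
force_error = np.max(np.abs(model.force(r_test) - morse_force(r_test)))
```

Only energies are used for training here; many practical MLFFs also fit reference forces, which constrains the gradient directly.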

Quantum Algorithm Scaling and Early Performance Indicators

Quantum computing approaches to molecular simulation present a fundamentally different scaling behavior compared to classical methods. While full-scale quantum advantage for chemical applications remains theoretical, early experiments and complexity analyses suggest promising directions:

Table 3: Quantum Algorithm Performance for Molecular Simulation

| Quantum Algorithm | Theoretical Scaling | Qubit Requirements | Circuit Depth | Current Limitations |
|---|---|---|---|---|
| Variational Quantum Eigensolver (VQE) | Polynomial (depends on ansatz) | 50-100 for small molecules | Moderate | Barren plateaus; Ansatz design |
| Quantum Phase Estimation (QPE) | O(1/ε) for precision ε | 100+ for meaningful systems | Very deep | Coherence time limitations |
| Quantum Monte Carlo (QMC) | Polynomial speedup | 50-150 for relevant systems | Variable | Signal-to-noise issues |
| Trotter-Based Dynamics | O(t²/ε) Trotter steps for first-order formulas (fewer with higher-order formulas), for time t and precision ε | 50-100 for small systems | Depth grows with time | Error accumulation |
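The Trotter row can be illustrated numerically. The sketch below compares product-formula approximations of exp(−iHt) with the exact propagator for a small, hypothetical two-qubit Hamiltonian H = A + B whose parts do not commute, using dense NumPy matrices in place of quantum circuits. At fixed t, the error of the first-order (Lie-Trotter) formula falls roughly as 1/r in the number of steps r, and that of the symmetric second-order formula roughly as 1/r². The example illustrates error scaling only and says nothing about qubit counts or hardware noise.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Hypothetical two-qubit Hamiltonian H = A + B whose parts do not commute
A = np.kron(Z, Z)
B = 0.7 * (np.kron(X, I2) + np.kron(I2, X))
H = A + B


def evolve(h, t):
    """Exact propagator exp(-i h t) for a Hermitian matrix h via eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * w * t)) @ v.conj().T


def trotter_error(t, n_steps, order=1):
    """Spectral-norm error of a product-formula approximation to exp(-i H t)."""
    dt = t / n_steps
    if order == 1:
        step = evolve(A, dt) @ evolve(B, dt)  # first-order Lie-Trotter step
    else:
        # Symmetric (Strang) second-order step
        step = evolve(A, dt / 2) @ evolve(B, dt) @ evolve(A, dt / 2)
    approx = np.linalg.matrix_power(step, n_steps)
    return np.linalg.norm(approx - evolve(H, t), ord=2)


first_order = [trotter_error(1.0, r) for r in (100, 200)]
second_order = [trotter_error(1.0, r, order=2) for r in (100, 200)]
```

On hardware each factor exp(−iA dt) or exp(−iB dt) would be compiled into gates, so circuit depth grows linearly with r, which is the trade-off behind the "Depth grows with time" entry.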

The integration of machine learning with quantum computing (Quantum Machine Learning) shows particular promise for optimizing variational quantum algorithms and analyzing quantum simulation outputs. ML-driven data analytics, especially graph-based approaches for featurizing molecular systems, can yield reliable low-dimensional reaction coordinates that improve interpretation of high-dimensional simulation data [23].
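Graph-based featurizations are beyond a short example, but the basic goal of compressing high-dimensional simulation data into a few coordinates can be sketched with principal component analysis (PCA), a simpler linear technique swapped in here for illustration. The "trajectory" below is synthetic and hypothetical: 30 features driven by one hidden slow coordinate plus noise. PCA via SVD of the mean-centered data should recover that coordinate as the first component.

```python
import numpy as np


def principal_components(frames, n_components=2):
    """PCA of an (n_frames, n_features) array via SVD of the mean-centered data."""
    centered = frames - frames.mean(axis=0)
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variance = s**2 / (len(frames) - 1)
    ratio = variance / variance.sum()  # explained-variance ratio of every component
    projections = centered @ vt[:n_components].T
    return projections, vt[:n_components], ratio[:n_components]


# Synthetic trajectory (hypothetical): one hidden coordinate embedded in 30 features
rng = np.random.default_rng(0)
n_frames, n_features = 2000, 30
hidden = 3.0 * np.sin(np.linspace(0.0, 20.0 * np.pi, n_frames))
direction = rng.standard_normal(n_features)
direction /= np.linalg.norm(direction)
frames = hidden[:, None] * direction[None, :] + 0.1 * rng.standard_normal((n_frames, n_features))

proj, comps, ratio = principal_components(frames)
```

Linear PCA works here because the hidden coordinate enters linearly; nonlinear or graph-based methods are typically needed when collective motions are curved or depend on contact topology.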

Experimental Protocols and Methodologies

Standard Classical MD Simulation Protocol

The following detailed methodology represents a typical workflow for classical MD simulations of biomolecular systems, as implemented in widely used packages like GROMACS [24]:

System Preparation: Obtain initial protein coordinates from experimental structures (Protein Data Bank) or homology modeling. For drug design applications, include inhibitor/ligand molecules positioned in binding sites based on docking studies [22].

Force Field Parameterization: Assign appropriate parameters from established force fields (CHARMM36, AMBER, OPLS-AA). For small molecules, generate parameters with CGenFF (for CHARMM-compatible topologies) or GAFF2 (for AMBER-compatible topologies) [24].

Solvation and Ion Addition: Place the biomolecule in a simulation box with explicit solvent molecules (typically TIP3P, SPC, or TIP4P water models). Add ions to neutralize system charge and achieve physiological concentration (e.g., 150 mM NaCl).

Energy Minimization: Perform steepest descent or conjugate gradient minimization (50,000 steps or until maximum force <1000 kJ/mol/nm) to remove bad contacts and prepare for dynamics [24].

Equilibration:

- NVT ensemble: 100 ps at 310 K using the V-rescale thermostat (τ = 0.1 ps) with position restraints on protein and ligand heavy atoms [24].

- NPT ensemble: 100 ps at 310 K and 1 bar using the Parrinello-Rahman barostat (τ = 2.0 ps) with similar position restraints.

Production Simulation: Run unrestrained dynamics for 50-100 ns (or longer for complex processes) at constant temperature (310 K) and pressure (1 bar) using a 2 fs time step with LINCS constraints on all bonds involving hydrogen atoms [24].

Analysis: Trajectories are saved every 10-100 ps for subsequent analysis of structural properties, dynamics, and interactions using built-in tools and custom scripts.
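Some of the numbers in this protocol follow from simple arithmetic. The sketch below estimates ion counts for a target salt concentration plus neutralizing counterions, and the number of MD steps and saved frames for a production run. It uses the full box volume, which is a simplification; tools differ in whether they base ion counts on the box volume or on the solvent content, so counts can differ slightly. All function names are hypothetical.

```python
AVOGADRO = 6.02214076e23  # mol^-1


def ion_pairs_for_concentration(box_volume_nm3, conc_molar):
    """Salt pairs for a target molar concentration, using the full box volume."""
    volume_litres = box_volume_nm3 * 1e-24  # 1 nm^3 = 1e-24 L
    return round(conc_molar * volume_litres * AVOGADRO)


def ion_counts(box_volume_nm3, conc_molar, net_charge):
    """(n_Na, n_Cl) for NaCl at conc_molar plus counterions that neutralize net_charge."""
    pairs = ion_pairs_for_concentration(box_volume_nm3, conc_molar)
    n_na = pairs + max(0, -net_charge)  # extra cations for a negatively charged solute
    n_cl = pairs + max(0, net_charge)  # extra anions for a positively charged solute
    return n_na, n_cl


def run_bookkeeping(length_ns, dt_fs, save_every_ps):
    """Total MD steps, steps between saved frames, and number of saved frames."""
    n_steps = round(length_ns * 1e6 / dt_fs)  # 1 ns = 1e6 fs
    steps_per_frame = round(save_every_ps * 1e3 / dt_fs)  # 1 ps = 1e3 fs
    return n_steps, steps_per_frame, n_steps // steps_per_frame


# Hypothetical 10 nm cubic box around a protein with net charge -4
na, cl = ion_counts(1000.0, 0.150, net_charge=-4)
steps, stride, frames = run_bookkeeping(100.0, 2.0, 10.0)
```

For a 100 ns run at 2 fs, saving every 10 ps means 50 million steps and 10,000 frames; saving every 100 ps instead reduces storage tenfold at the cost of time resolution.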

This protocol has been successfully applied in diverse contexts, from studying protein-inhibitor interactions for drug development to investigating the molecular networks of dioxin-associated liposarcoma [22] [24].

Enhanced Sampling Protocol for IDPs and Complex Systems

For challenging systems like intrinsically disordered proteins (IDPs) where conventional MD struggles with adequate sampling, specialized protocols are implemented:

Accelerated MD (aMD): Add a non-negative boost potential to the potential energy surface whenever the energy falls below a threshold, lowering effective energy barriers; a common dual-boost variant separately boosts the dihedral and total potential energy terms.

Gaussian Accelerated MD (GaMD): Apply a harmonic boost potential whose distribution is approximately Gaussian, which permits accurate reweighting by cumulant expansion and enhances sampling without predefined collective variables, as demonstrated in studies of the ArkA IDP [1].

Replica Exchange MD (REMD): Run multiple replicas at different temperatures (or with different Hamiltonians) and periodically attempt exchanges between neighboring replicas according to the Metropolis criterion, helping the system escape kinetic traps.

Metadynamics: Periodically deposit repulsive Gaussian bias potentials along selected collective variables (CVs) to discourage revisiting already sampled regions, promoting exploration of configuration space and allowing reconstruction of free energy surfaces.

These advanced sampling techniques have proven particularly valuable for IDPs, which challenge traditional structure-function paradigms by existing as dynamic ensembles rather than stable tertiary structures [1].
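The exchange step in temperature REMD reduces to a one-line acceptance test: swapping the configurations of replicas i and j is accepted with probability min(1, exp[(β_i − β_j)(E_i − E_j)]), where β = 1/(k_B T). The sketch below implements that rule together with a geometric temperature ladder, a common heuristic rather than a requirement. The temperatures and energies are hypothetical, and the MD between exchange attempts is omitted.

```python
import math
import random

K_B = 0.0083144626  # Boltzmann constant in kJ mol^-1 K^-1


def geometric_ladder(t_min, t_max, n_replicas):
    """Temperatures spaced geometrically between t_min and t_max (common heuristic)."""
    ratio = (t_max / t_min) ** (1.0 / (n_replicas - 1))
    return [t_min * ratio**k for k in range(n_replicas)]


def exchange_probability(energy_i, temp_i, energy_j, temp_j):
    """Metropolis acceptance for swapping configurations between two temperature replicas.

    P = min(1, exp[(beta_i - beta_j) * (E_i - E_j)])
    """
    beta_i = 1.0 / (K_B * temp_i)
    beta_j = 1.0 / (K_B * temp_j)
    delta = (beta_i - beta_j) * (energy_i - energy_j)
    return 1.0 if delta >= 0.0 else math.exp(delta)


def attempt_exchange(energy_i, temp_i, energy_j, temp_j, rng):
    # Accept the swap with the Metropolis probability
    return rng.random() < exchange_probability(energy_i, temp_i, energy_j, temp_j)


temps = geometric_ladder(300.0, 400.0, 8)
rng = random.Random(42)
accepted = attempt_exchange(-50_000.0, temps[0], -49_990.0, temps[1], rng)
```

A swap is always accepted when the colder replica holds the higher-energy configuration; otherwise acceptance decays exponentially, which is why neighboring temperatures must be close enough for their energy distributions to overlap.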

Quantum Computing Experimental Workflow for Molecular Simulation

Early quantum computing applications for molecular systems follow a distinct workflow:

Molecular Hamiltonian Generation: Compute the one- and two-electron integrals that define the second-quantized electronic Hamiltonian of the target molecule, using classical methods (e.g., Hartree-Fock, DFT) in a selected basis set.

Qubit Mapping: Transform the fermionic Hamiltonian to qubit representation using Jordan-Wigner, Bravyi-Kitaev, or other fermion-to-qubit transformations.

Ansatz Design: Prepare parameterized wavefunction ansätze appropriate for the quantum hardware, such as unitary coupled cluster (UCC) or hardware-efficient ansätze.

Variational Optimization: Execute the hybrid quantum-classical optimization loop, where the quantum processor prepares and measures expectation values, and a classical optimizer adjusts parameters.

Result Extraction: Measure the energy and other molecular properties from the optimized quantum state, potentially using error mitigation techniques to improve accuracy.

This workflow represents the current state of the art for quantum computational chemistry on noisy intermediate-scale quantum (NISQ) devices.
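The variational loop described above can be emulated classically for a tiny problem. The sketch below assumes a hypothetical two-qubit Hamiltonian written as a weighted sum of Pauli strings (coefficients chosen for illustration, not derived from any molecule), a one-parameter single-excitation ansatz cos θ|01⟩ + sin θ|10⟩ reminiscent of a UCC singles term, exact state-vector expectation values in place of hardware measurements, and plain gradient descent with a shift rule as the classical optimizer. The result is compared against exact diagonalization.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULI = {"I": I2, "X": X, "Y": Y, "Z": Z}

# Hypothetical qubit Hamiltonian: coefficients are illustrative, not from a real molecule
TERMS = {("Z", "I"): 0.4, ("I", "Z"): -0.2, ("Z", "Z"): 0.5, ("X", "X"): 0.3, ("Y", "Y"): 0.3}


def hamiltonian(terms):
    """Dense 4x4 matrix of a weighted sum of two-qubit Pauli strings."""
    return sum(c * np.kron(PAULI[p0], PAULI[p1]) for (p0, p1), c in terms.items())


def ansatz_state(theta):
    """Single-excitation (Givens-rotation) ansatz: cos(theta)|01> + sin(theta)|10>."""
    psi = np.zeros(4, dtype=complex)
    psi[1], psi[2] = np.cos(theta), np.sin(theta)
    return psi


def energy(theta, h):
    # On hardware this expectation value would be estimated from repeated measurements
    psi = ansatz_state(theta)
    return float(np.real(psi.conj() @ h @ psi))


def vqe(h, theta=0.0, lr=0.2, n_iter=200):
    """Classical optimizer loop: gradient descent on the ansatz parameter."""
    for _ in range(n_iter):
        # E depends on 2*theta here, so dE/dtheta = E(theta + pi/4) - E(theta - pi/4)
        grad = energy(theta + np.pi / 4, h) - energy(theta - np.pi / 4, h)
        theta -= lr * grad
    return theta, energy(theta, h)


H = hamiltonian(TERMS)
theta_opt, e_opt = vqe(H)
exact_ground = np.linalg.eigvalsh(H)[0]
```

The ansatz only explores the |01⟩/|10⟩ subspace, so it reaches the exact ground state only because this Hamiltonian's ground state lies there; choosing an ansatz that contains the target state is the "ansatz design" limitation listed for VQE above.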

Visualization of Computational Workflows

Classical Molecular Dynamics Simulation Workflow

Diagram 1: Classical MD Workflow

Enhanced Sampling with Machine Learning Integration

Diagram 2: ML-Enhanced Sampling

Quantum-Classical Hybrid Simulation Approach

Diagram 3: Quantum-Classical Hybrid

Research Reagent Solutions: Computational Tools for Molecular Simulation

The following table details essential software tools, force fields, and computational resources that form the foundational "research reagents" for molecular simulation across classical and quantum computational paradigms:

Table 4: Essential Research Reagent Solutions for Molecular Simulation

| Tool Category | Specific Solutions | Primary Function | Application Context |
|---|---|---|---|
| Classical MD Software | GROMACS [24], DESMOND [22], AMBER [22] | Biomolecular MD simulation with empirical force fields | Drug design; Protein-ligand interactions; Structural biology |
| Force Fields | CHARMM36 [24], AMBER, GAFF2 [24] | Parameter sets defining molecular interactions | Specific to biomolecules (CHARMM36) or drug-like molecules (GAFF2) |
| Enhanced Sampling | Gaussian accelerated MD (GaMD) [1], Metadynamics, Replica exchange MD (REMD) | Accelerate conformational sampling | IDPs [1]; Rare events; Free energy calculations |
| Machine Learning MD | ML Force Fields (MLFFs) [23], Graph Neural Networks | Near-quantum accuracy at far lower cost than ab initio MD; Dimensionality reduction | Aqueous systems [23]; Reaction coordinate discovery |
| Quantum Chemistry | Q-Chem, PySCF, ORCA | Electronic structure calculations | Reference data; System preparation for quantum computing |
| Quantum Algorithms | VQE, QPE, Trotter-Suzuki | Quantum algorithms for electronic structure and dynamics | Small molecule simulations on quantum hardware |
| Analysis & Visualization | PyMOL [24], VMD, MDAnalysis | Trajectory analysis; Molecular graphics | Structural analysis; Publication figures |
| Specialized Databases | PubChem [24], ChEMBL [24], UniProt [24] | Chemical and biological target information | Drug discovery; Target identification; System preparation |

The comparative analysis of classical Newtonian and quantum mechanical approaches to molecular simulation reveals a rapidly evolving landscape where hybrid strategies currently offer the most practical value. Classical MD simulations continue to provide indispensable insights for drug development, leveraging well-validated force fields and efficient integration algorithms [22]. Meanwhile, machine learning integration is addressing key limitations in conformational sampling and force field accuracy, particularly for challenging systems like intrinsically disordered proteins and complex aqueous interfaces [1] [23].

Quantum computing approaches, while still in early stages of application to molecular simulation, represent a fundamentally different computational paradigm with potential for exponential speedup for specific electronic structure problems. The most productive near-term strategy employs classical MD for sampling configurational space and dynamics, machine learning for enhancing sampling efficiency and extracting insights, and quantum computing for targeted electronic structure calculations where classical methods struggle.